Gesture recognition technology is here to stay and will make life smarter and easier. With the wearable device market booming over the past few years, gesture control of these devices is also becoming increasingly popular. But adding a reliable and efficient gesture recognition system to a project can be costly and time-consuming. What if you had a ready-made board that does this for you? All you have to do is connect the board to your project and define the gestures and controls using a smartphone app, and voilà, your product can be controlled with your hands!

This is now possible, thanks to a crowdfunding campaign I came across recently. A group of five young engineers and entrepreneurs from Boston has come up with a device that makes gesture recognition easy. Gesto is the first open-source board for wearable gesture control that combines muscle signals with motion patterns. A MassChallenge Boston finalist, this small form factor board can be worn on the user’s body (wrist, forearm, arm, torso, or leg). It combines information from the spatial sensors with the user’s muscle activity and analyses both in real time to recognise new gestures and motion patterns. Because the body itself acts as the controller, there is no need for cameras or tedious calibration. Other devices recognise the board in the same way they recognise a keyboard or mouse, so the user can send commands directly to a device and control it. Let us take a look at the features of this board.

Biosignal analysis

Gesto boards don’t have a ground electrode; they use a virtual ground. This eliminates the need for extra cables and electrodes when measuring muscle activity in any part of the body. The biosignals received from the user’s muscles are analysed in real time. All the tools a developer needs to perform muscle analysis – software filters, machine learning algorithms, feature extraction, data compression, integration and so on – are available for free in languages such as C, MATLAB, Python and Java. These tools also let a user get raw data from the board and use the patterns for other kinds of analysis.
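To give a feel for what software filtering and feature extraction on a raw muscle signal can look like, here is a minimal Python sketch. It is not taken from the Gesto libraries: the function names, the window size and the choice of features (envelope, RMS, zero crossings) are assumptions picked because they are common in EMG processing.

```python
import math

def remove_dc(samples):
    """Subtract the mean so the signal is centred on zero."""
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]

def moving_average(samples, window):
    """Trailing moving average; the output has the same length as the input."""
    out = []
    running = 0.0
    for i, s in enumerate(samples):
        running += s
        if i >= window:
            running -= samples[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))
    return out

def emg_envelope(samples, window=8):
    """Centre, rectify and smooth a raw EMG trace to estimate muscle activation."""
    rectified = [abs(s) for s in remove_dc(samples)]
    return moving_average(rectified, window)

def rms(samples):
    """Root mean square, a common measure of contraction strength."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def zero_crossings(samples):
    """Count sign changes, a rough indicator of frequency content."""
    return sum(1 for a, b in zip(samples, samples[1:]) if a * b < 0)
```

In a sketch like this, the envelope rises when the muscle contracts, so a simple threshold on it can mark where a gesture starts and ends before any further analysis.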

DualBurst

The gesture recognition algorithm used in this project is called DualBurst because it combines muscle signals from the body with motion signals from the accelerometer for pattern analysis. It lets you define and recognise different types of hand movements:

- Singular gestures: Pinch, wave, hand fold, finger press and similar gestures that depend on muscle contractions.

- Air drawing: Letters, numbers, figures and other shapes that are drawn with wrist or arm movements.

- Directional gestures: Gestures in three-dimensional space, such as raising and lowering the hand or rotating the fist.
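The idea of combining the two signal sources can be illustrated with a small Python sketch. This is not the DualBurst algorithm, whose details are not described here; it is a simple nearest-template classifier over one muscle feature and one motion feature. The template values and gesture names are hypothetical.

```python
import math

def rms(values):
    """Root mean square of an EMG window (muscle effort)."""
    return math.sqrt(sum(v * v for v in values) / len(values))

def motion_energy(accel_xyz):
    """Mean absolute change in acceleration magnitude between samples."""
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in accel_xyz]
    diffs = [abs(b - a) for a, b in zip(mags, mags[1:])]
    return sum(diffs) / len(diffs)

def fuse(emg_window, accel_window):
    """Build one feature vector from both signal sources."""
    return (rms(emg_window), motion_energy(accel_window))

def nearest_gesture(features, templates):
    """Return the template name closest to the features (Euclidean distance)."""
    return min(templates, key=lambda name: math.dist(features, templates[name]))

# Hypothetical templates: (muscle RMS, motion energy) per gesture.
TEMPLATES = {
    "pinch": (0.8, 0.05),       # strong contraction, little movement
    "raise_hand": (0.2, 0.6),   # light contraction, large movement
    "rest": (0.05, 0.02),
}
```

With these templates, a window with strong muscle activity and a still arm lands on "pinch", while a window with weak muscle activity and large changes in acceleration lands on "raise_hand". A real system would normalise the features and learn the templates from recorded examples.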

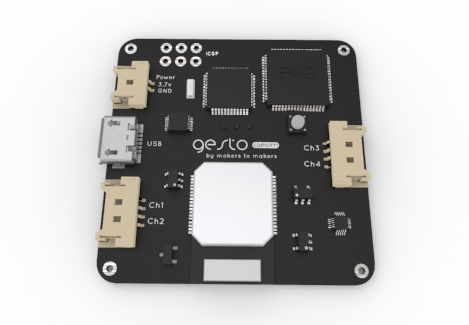

Inside Caelum and Stella

Gesto comes in two board models, Caelum and Stella. The main parts of the board – the microcontroller unit (MCU) and the analogue-to-digital converter (ADC) – are common to both.

MCU: ATmega1284P is an 8-bit, reduced instruction set computing (RISC) based single-chip MCU belonging to Atmel’s AVR family. It combines 128KB of in-system programmable (ISP) flash memory with read-while-write capabilities, 16KB of static random access memory (SRAM), 4KB of electrically erasable programmable read-only memory (EEPROM), 32 general purpose input/output (I/O) lines, 32 general purpose working registers, a real-time counter, a Joint Test Action Group (JTAG) IEEE 1149.1 compliant test interface for on-chip debugging and programming, and six software-selectable power saving modes, among other features.

EMG: The integrated circuit (IC) used here is Texas Instruments’ ADS1294, a multichannel, 24-bit ADC with an integrated front end for biopotential measurements. It serves as the EMG front end, measuring the electrical activity of the muscles.
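Since the ADC delivers 24-bit samples, software has to turn each sample into a voltage before analysing it. The Python sketch below shows one way to do that, assuming the sample arrives as three bytes, most significant byte first, in two's complement. The reference voltage of 2.4V and the gain of 6 are assumed example values; check them against the datasheet and the board's actual configuration.

```python
def adc_code_to_volts(raw_bytes, vref=2.4, gain=6):
    """Convert one 24-bit two's complement sample (MSB first) to volts.

    vref and gain are assumed example values, not confirmed Gesto settings.
    """
    if len(raw_bytes) != 3:
        raise ValueError("expected exactly 3 bytes per sample")
    code = int.from_bytes(raw_bytes, byteorder="big", signed=True)
    # The full-scale input range of 2 * vref / gain is spread over 2**24 codes.
    lsb_volts = (2 * vref / gain) / (1 << 24)
    return code * lsb_volts
```

With these assumed settings, the input range is about ±0.4V and one step is roughly 48 nanovolts, which is why such a converter can resolve the small voltages produced by muscles.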

Both board models also have electromyogram (EMG) electrode cable connectors. Each has additional components depending on its target audience and purpose.

Exclusive to Caelum

Since Caelum is aimed at adding gesture recognition to any project quickly, easily, and without the need for programming, it comes with additional components and a larger form factor.

Accelerometer: MMA8652FC from Freescale, a small form factor, 12-bit, 3-axis digital accelerometer, is used in the Gesto boards. It has flexible user-programmable options and two configurable interrupt pins. Its inertial wake-up interrupt signals save power by monitoring events and letting the device stay in a low-power mode during periods of inactivity.
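A 12-bit accelerometer reading has to be scaled before it means anything in units of g. The Python sketch below assumes the sample is left-justified across two output bytes and that the sensitivity is 1024, 512 or 256 counts per g at the ±2g, ±4g and ±8g ranges. Both points are assumptions to verify against the accelerometer's datasheet.

```python
# Assumed sensitivity in counts per g for each full-scale range.
COUNTS_PER_G = {2: 1024, 4: 512, 8: 256}

def accel_raw_to_g(msb, lsb, full_scale_g=2):
    """Convert one axis reading (two register bytes) to acceleration in g.

    Assumes a 12-bit two's complement sample stored in the upper
    12 bits of the 16-bit word formed by msb and lsb.
    """
    word = (msb << 8) | lsb
    if word & 0x8000:          # sign bit set: interpret as negative
        word -= 0x10000
    counts = word >> 4         # drop the 4 unused low bits, keeping the sign
    return counts / COUNTS_PER_G[full_scale_g]
```

For example, under these assumptions the byte pair 0x40, 0x00 at the ±2g range corresponds to 1024 counts, or 1g, which is what an axis pointing straight up would read at rest.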

Bluetooth chip: RN42-HID by Roving Networks (since acquired by Microchip) is a class 2 Bluetooth radio with enhanced data rate (Bluetooth EDR) and human interface device (HID) support.
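HID support is what allows a gesture to show up on the host as an ordinary key press. As an illustration, the Python sketch below builds the standard 8-byte HID boot keyboard report for a recognised gesture. The gesture names and the gesture-to-key mapping are hypothetical, and any framing the Bluetooth module itself expects around the report is not shown.

```python
# Keyboard usage IDs from the USB HID usage tables.
KEY_USAGE = {"right_arrow": 0x4F, "left_arrow": 0x50, "space": 0x2C}

# Hypothetical mapping from recognised gestures to keys.
GESTURE_TO_KEY = {
    "swipe_right": "right_arrow",
    "swipe_left": "left_arrow",
    "pinch": "space",
}

# All zeros means "no keys pressed"; sent after a key press to release it.
RELEASE_REPORT = bytes(8)

def keyboard_report(gesture, modifiers=0):
    """Build a boot keyboard report: modifiers, reserved byte, six key slots."""
    key = GESTURE_TO_KEY.get(gesture)
    code = KEY_USAGE[key] if key else 0   # unknown gesture: no key pressed
    return bytes([modifiers, 0x00, code, 0, 0, 0, 0, 0])
```

Mapped this way, a swipe to the right could advance a slide deck, because the host cannot tell the report apart from one sent by a physical keyboard.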

In addition to the above, the board has a battery connection, a micro USB connection, and an in-circuit serial programming (ICSP) connection.

Exclusive to Stella

This board works like an external sensor that can be connected to Arduino and Raspberry Pi boards for gesture recognition, signal analysis and conversion into actions. This in turn lets you connect the board to external systems using Bluetooth, Wi-Fi and infrared (IR).

Stella comes with pins for ground (GND), IC power supply (PWR), Master Out Slave In (MOSI), Master In Slave Out (MISO), and the clock (CLK and SC).

The author is a dancer, karaoke aficionado, and a technical correspondent at EFY. Find her on Twitter @AnuBomb.