A new robotics method improves precision in pick-and-place tasks by learning from simulation, allowing robots to handle a variety of jobs more accurately.

Credits: Image: John Freidah/MIT Department of Mechanical Engineering

Pick-and-place machines automate the placement of objects in structured locations and are used in applications such as electronics assembly and inspection. However, many lack “precise generalization,” the ability to perform many tasks without sacrificing accuracy. A recent study in Science Robotics introduces a method called SimPLE (Simulation to Pick Localize and placE), which uses an object’s CAD model to learn how to pick, regrasp, and place that object without prior experience with it, turning unstructured arrangements into organized ones.

The promise of SimPLE is that the same hardware and software can solve a variety of tasks, with simulation used to learn models tailored to each specific task. SimPLE was developed by the Manipulation and Mechanisms Lab at MIT (MCube).

According to the researchers, the work shows that the positional accuracy required for many industrial pick-and-place tasks can be achieved without any additional specialization.

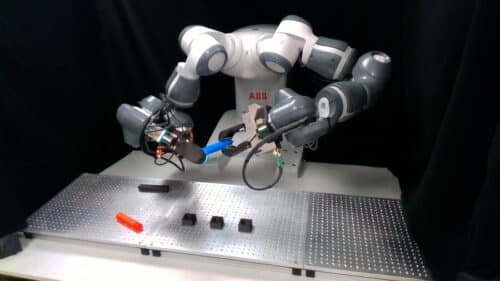

SimPLE uses a dual-arm robot equipped with visuotactile sensors and is built on three key components: task-aware grasping, perception that combines sight and touch (visuotactile perception), and regrasp planning. Real observations are matched against simulated ones through supervised learning to estimate a distribution of likely object poses, which is what makes precise placement possible.
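The matching step described above can be illustrated with a minimal sketch. This is not SimPLE's implementation: it assumes that real and simulated observations have already been mapped to short feature vectors (in a real system, by an encoder trained with supervision in simulation), and it converts distances between those vectors into a probability over candidate poses with a softmax. The names `pose_distribution`, `most_likely_pose`, the planar pose format, and all numeric values are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical planar pose: (x in mm, y in mm, rotation in degrees).
Pose = Tuple[float, float, float]


def pose_distribution(
    real_embedding: Sequence[float],
    simulated_embeddings: Sequence[Sequence[float]],
    temperature: float = 1.0,
) -> List[float]:
    """Return a probability for each simulated observation.

    Scores are negative squared distances to the real observation,
    normalized with a softmax (an assumption made for this sketch).
    """
    scores = [
        -sum((r - s) ** 2 for r, s in zip(real_embedding, sim)) / temperature
        for sim in simulated_embeddings
    ]
    max_score = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(score - max_score) for score in scores]
    total = sum(weights)
    return [weight / total for weight in weights]


def most_likely_pose(poses: Sequence[Pose], probabilities: Sequence[float]) -> Pose:
    """Pick the candidate pose with the highest probability."""
    best_index = max(range(len(poses)), key=lambda i: probabilities[i])
    return poses[best_index]


# Toy data: three candidate poses and the features of their simulated observations.
candidate_poses: List[Pose] = [(0.0, 0.0, 0.0), (1.0, 0.0, 5.0), (0.0, 2.0, -10.0)]
simulated_embeddings = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
real_embedding = [0.9, 0.1]  # features of the (made-up) real observation

probabilities = pose_distribution(real_embedding, simulated_embeddings)
best_pose = most_likely_pose(candidate_poses, probabilities)
print(probabilities, best_pose)
```

Keeping the full distribution, rather than only the best match, lets a downstream planner tell whether the pose estimate is still ambiguous, which is the kind of situation where a regrasp would be useful.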

In testing, SimPLE picked and placed objects with a variety of shapes. It achieved successful placements more than 90 percent of the time for six objects, and more than 80 percent of the time for eleven objects.

There’s an intuitive understanding within the robotics community that both vision and touch are beneficial, yet systematic demonstrations of their usefulness for complex robotics tasks have been rare. The study goes beyond the aim of mimicking human capabilities: it demonstrates, from a strictly functional perspective, the benefits of combining tactile sensing and vision with two-handed manipulation.