Introduction

Evaluating solid-state drives (SSDs) for use in enterprise applications can be tricky business. In addition to selecting the right interface type, endurance level and capacity, decision makers must also look beyond product data sheets to determine the right SSD to accelerate their applications. Specifications in SSD vendor collateral are often based on differing benchmark tests, or on other criteria that may not represent one’s unique environment. This paper examines basic SSD differences and highlights key criteria to consider when choosing the right device for a given workload or application.

Interface Options

The industry typically classifies enterprise-class SSDs by interface type. The main interfaces to evaluate are SATA, SAS and PCIe. From here, it is easy to qualify the devices based on factors such as price, performance, capacity, endurance and form factor. SATA is usually the least expensive of the device types, but also brings up the rear in terms of performance due to the speed limitations of the 6Gb/s SATA bus. SAS SSDs are mostly deployed inside SAN or NAS storage arrays. With dual-port interfaces, they can be configured with multiple paths to multiple array controllers for high availability. SAS drives deliver nearly double the performance of SATA devices, thanks to the 12Gb/s SAS interface. SATA and SAS SSDs are the most widely deployed interface types today, with capacities that can reach more than 4TB.
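Both 6Gb/s SATA and 12Gb/s SAS use 8b/10b line encoding, so the usable payload ceiling is lower than the headline bit rate. The sketch below works through that arithmetic, assuming decimal megabytes (1 MB = 10^6 bytes); the function name `usable_mb_per_s` is illustrative, and real throughput is lower still once protocol overhead is included.

```python
def usable_mb_per_s(line_rate_gbps: float, data_bits: int = 8, line_bits: int = 10) -> float:
    """Payload ceiling of a serial link in MB/s (1 MB = 10**6 bytes).

    With 8b/10b encoding, every 10 bits on the wire carry 8 bits of data.
    """
    # Gb/s -> Mb/s, remove encoding overhead, then bits -> bytes.
    return line_rate_gbps * 1000 * data_bits / line_bits / 8


for name, rate in [("SATA 6Gb/s", 6), ("SAS 12Gb/s", 12)]:
    print(f"{name}: ~{usable_mb_per_s(rate):.0f} MB/s before protocol overhead")
```

The roughly 600 MB/s SATA ceiling explains why SATA SSDs top out near 500MB/s for sequential reads, and why a 12Gb/s SAS link can carry about twice as much.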

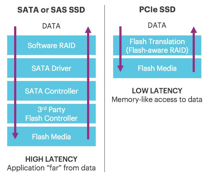

At the high end of the performance spectrum are PCIe SSDs. These devices connect directly to the PCIe bus and can deliver much higher speeds. By implementing specialized controllers that closely resemble memory architectures, PCIe products eliminate traditional storage protocol overhead, reducing latency and access times compared with SATA or SAS. Given the importance of latency in enterprise applications, PCIe is often preferred because it reduces IO wait times, improves CPU utilization and enables more users or threads per SSD. SATA and SAS SSDs have higher latency primarily because of the software stack that must be traversed to read and write data. The diagram in Figure 1 above illustrates the layers of this stack.

More on PCIe

A PCIe connection consists of one or more data transmission lanes, each of which is a serial link. Each lane consists of two pairs of wires, one for receiving and one for transmitting. PCIe slots are most commonly built with one, four, eight or sixteen lanes, denoted x1, x4, x8 or x16. Each lane is an independent connection between the PCIe controller and the SSD, and bandwidth scales linearly with lane count, so an x8 connection has twice the bandwidth of an x4 connection. PCIe transfer rates also depend on the generation of the base specification. Base 2.0 (Gen-2) provides 4Gb/s per lane in each direction, so a Gen-2 x16 connection delivers an aggregate bandwidth of 64Gb/s. Gen-3 provides roughly 8Gb/s per lane, so a Gen-3 x8 connection also provides about 64Gb/s.
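The lane-scaling arithmetic can be written as a one-line multiplication. This is a minimal sketch using the per-lane figures quoted in the text (Gen-3 is closer to 7.88Gb/s after 128b/130b encoding, rounded here to 8); `PER_LANE_GBPS` and `pcie_bandwidth_gbps` are illustrative names.

```python
# Effective per-lane data rate in Gb/s, per direction, after line encoding.
# Gen-2: 5 GT/s with 8b/10b -> 4 Gb/s. Gen-3: 8 GT/s with 128b/130b -> ~7.88 Gb/s,
# rounded to 8 Gb/s as in the text.
PER_LANE_GBPS = {2: 4.0, 3: 8.0}


def pcie_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Aggregate one-direction bandwidth; scales linearly with lane count."""
    if gen not in PER_LANE_GBPS:
        raise ValueError(f"no per-lane figure for Gen-{gen}")
    if lanes < 1:
        raise ValueError("lanes must be >= 1")
    return PER_LANE_GBPS[gen] * lanes


for gen, lanes in [(2, 4), (2, 16), (3, 4), (3, 8)]:
    gbps = pcie_bandwidth_gbps(gen, lanes)
    print(f"Gen-{gen} x{lanes}: {gbps:.0f} Gb/s (~{gbps / 8:.0f} GB/s)")
```

Dividing by 8 converts the link rate to bytes, which makes it easier to compare against the GB/s figures on SSD data sheets.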

When evaluating PCIe devices, it is important to look for the generation and number of lanes (Gen-2 x4, Gen-3 x8 and so on). While PCIe devices tend to be the most expensive per GB, they can deliver more than 10 times the performance of SATA. Table 1 summarizes the distinctions between the three SSD interfaces.
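Because data sheets write link widths in slightly different ways, a small parser helps when comparing several PCIe devices side by side. The sketch below assumes labels of the form "Gen-N xM" and reuses the per-lane figures from the text; the regular expression, function names and drive entries are hypothetical. The result is the link ceiling, not a measured throughput: the drive's own controller usually limits real performance.

```python
import re

# Per-lane, per-direction rates in Gb/s as given in the text (Gen-3 rounded to 8).
PER_LANE_GBPS = {2: 4.0, 3: 8.0}

# Matches labels such as "Gen-3 x8", "Gen -2 x4" or "PCIe Gen3 x16".
LINK_LABEL = re.compile(r"Gen\s*-?\s*(\d+)\s*x(\d+)", re.IGNORECASE)


def parse_link(label: str) -> tuple[int, int]:
    """Return (generation, lanes) from a datasheet-style link label."""
    match = LINK_LABEL.search(label)
    if match is None:
        raise ValueError(f"no 'Gen-N xM' link width found in {label!r}")
    return int(match.group(1)), int(match.group(2))


def link_bandwidth_gbps(label: str) -> float:
    """Link ceiling in Gb/s per direction for a labelled PCIe device."""
    gen, lanes = parse_link(label)
    if gen not in PER_LANE_GBPS:
        raise ValueError(f"no per-lane rate on file for Gen-{gen}")
    return PER_LANE_GBPS[gen] * lanes


# Hypothetical datasheet entries, ranked by link bandwidth.
devices = {"Drive A": "PCIe Gen-2 x4", "Drive B": "Gen-3 x4", "Drive C": "Gen 3 x8"}
ranked = sorted(devices.items(), key=lambda kv: link_bandwidth_gbps(kv[1]), reverse=True)
for name, label in ranked:
    print(f"{name}: {label} -> {link_bandwidth_gbps(label):.0f} Gb/s")
```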

Why NVMe Matters for PCIe

Another recent PCIe development is NVM Express (NVMe), a standardized interface for PCIe storage with a standard device driver. Most operating systems now ship the standard NVMe driver, which eliminates the hassle of deploying proprietary drivers from multiple vendors. Compared with the SATA interface, NVMe is designed to work with pipeline-rich, random-access, memory-based storage. As such, it requires only a single message for 4K transfers (compared with two for SATA) and can process multiple queues instead of only one. In fact, NVMe allows up to 65,536 simultaneous queues. Table 2 compares SATA (which follows the Advanced Host Controller Interface, or AHCI, standard) with NVMe-compliant PCIe in more detail.
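The difference in queueing capacity is easiest to see as a worked product of queue count and queue depth, using the limits listed in Table 2. These are theoretical upper bounds from the two interface definitions; real drivers and devices typically configure far fewer queues.

```python
# Queue limits from the AHCI vs NVMe comparison (Table 2).
AHCI = {"queues": 1, "depth": 32}
NVME = {"queues": 65_536, "depth": 65_536}


def max_outstanding(spec: dict) -> int:
    """Upper bound on commands in flight: number of queues times commands per queue."""
    return spec["queues"] * spec["depth"]


ahci = max_outstanding(AHCI)
nvme = max_outstanding(NVME)
print(f"AHCI: {ahci:,} commands")   # AHCI: 32 commands
print(f"NVMe: {nvme:,} commands")   # NVMe: 4,294,967,296 commands
print(f"ratio: {nvme // ahci:,}x")  # ratio: 134,217,728x
```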

The NVM Express Work Group has not stopped at just a driver. Coming soon are standards for monitoring and managing multi-vendor PCIe installations under a single pane of glass, as well as common methods for creating low-latency networks between NVMe devices for clustering and high availability (NVMe-over-Fabrics).

| Specification | SATA SSD | SAS SSD | PCIe SSD |
|---|---|---|---|
| IOPS (Read/Write) | 75k / 11.5k | 130k / 110k | 740k / 160k |
| Sequential Read/Write | 500MB/s / 450MB/s | 1.1GB/s / 765MB/s | 3GB/s / 1.6GB/s |
| Capacity | 80GB-4TB | 100GB-2TB | 1.6-4.8TB |
| Power (Watts) | 9 | 11 | <25 |
| Price | $1.00/GB | $1.50/GB | $2.25/GB |
| Price/Read IOP | $0.02/IOP | $0.01/IOP | $0.001/IOP |

Table 1. Typical Specifications for SATA, SAS and PCIe SSDs

| Feature | SATA/AHCI | PCIe/NVMe |
|---|---|---|
| Maximum Queue Depth | One command queue; 32 commands per queue | 65,536 queues; 65,536 commands per queue |
| Uncacheable Register Accesses (2000 cycles each) | Six per non-queued command; nine per queued command | Two per command |
| Message Signaled Interrupts (MSI-X) and Interrupt Steering | A single interrupt; no steering | 2048 MSI-X interrupts |
| Parallelism and Multiple Threads | Requires synchronization lock to issue a command | No locking |

Table 2. Features Comparison of SATA/AHCI vs. PCIe/NVMe
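The "Parallelism and Multiple Threads" row can be illustrated with a toy model. This is a hypothetical sketch, not the real AHCI or NVMe data structures: the AHCI-like port has one 32-slot queue that every thread must lock before submitting, while the NVMe-like controller gives each thread its own submission queue, so the submit path needs no shared lock as long as each queue is used by a single thread. Command completion is not modeled, so slots are never freed.

```python
import threading
from collections import deque


class AhciLikePort:
    """Toy AHCI: one 32-slot command queue shared by all threads, guarded by one lock."""
    QUEUE_DEPTH = 32

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._queue: deque = deque()

    def submit(self, cmd) -> bool:
        with self._lock:  # every submitter serializes here
            if len(self._queue) >= self.QUEUE_DEPTH:
                return False  # queue full; a real host would wait for completions
            self._queue.append(cmd)
            return True


class NvmeLikeController:
    """Toy NVMe: one submission queue per thread (up to 65,536, each 65,536 deep)."""
    MAX_QUEUES = 65_536
    QUEUE_DEPTH = 65_536

    def __init__(self, n_queues: int) -> None:
        if not 1 <= n_queues <= self.MAX_QUEUES:
            raise ValueError("n_queues out of range")
        self._queues = [deque() for _ in range(n_queues)]

    def submit(self, queue_id: int, cmd) -> bool:
        q = self._queues[queue_id]  # owned by the calling thread: no shared lock
        if len(q) >= self.QUEUE_DEPTH:
            return False
        q.append(cmd)
        return True


def run(n_threads: int = 4, cmds_per_thread: int = 16) -> dict:
    ahci = AhciLikePort()
    nvme = NvmeLikeController(n_queues=n_threads)
    accepted = {"ahci": 0, "nvme": 0}
    tally_lock = threading.Lock()  # only for the demo's tally, not part of either model

    def worker(tid: int) -> None:
        a = sum(ahci.submit((tid, i)) for i in range(cmds_per_thread))
        n = sum(nvme.submit(tid, (tid, i)) for i in range(cmds_per_thread))
        with tally_lock:
            accepted["ahci"] += a
            accepted["nvme"] += n

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return accepted


print(run())  # {'ahci': 32, 'nvme': 64}
```

Four threads submitting 16 commands each fill the single 32-slot queue halfway through, while the per-thread queues accept all 64 commands without contending for a lock.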

SSD Performance Scaling

SSDs deployed inside storage arrays, whether “All-Flash” or “Hybrid” (where storage controllers use tiering or caching between HDDs and SSDs), provide large-capacity shared storage that can take advantage of SSD performance characteristics, with the array controllers aggregating the devices and managing data protection. These architectures suit many enterprise use cases, but not scale-out databases such as MySQL and NoSQL stores. In those environments, each server node has its own SSDs or HDDs, and the database itself handles scaling and data protection, using techniques such as sharding to spread data across many individual nodes.
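As an illustration of how sharding spreads data across nodes that each own local storage, the sketch below uses simple hash-modulo placement. This is one common scheme chosen for clarity, not the method of any particular database; the key format and node count are hypothetical.

```python
import hashlib
from collections import Counter


def shard_for(key: str, n_nodes: int) -> int:
    """Pick the node that owns `key` using simple hash-modulo sharding.

    A SHA-256 digest is used instead of Python's built-in hash(), which is
    randomized per process for strings, so every client computes the same mapping.
    """
    if n_nodes < 1:
        raise ValueError("n_nodes must be >= 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n_nodes


# Spread 10,000 hypothetical customer keys across 4 nodes, each with its own local SSD.
placement = Counter(shard_for(f"customer:{i}", 4) for i in range(10_000))
print(sorted(placement.items()))
```

Modulo placement remaps most keys whenever the node count changes, which is why production systems often use consistent hashing or range partitioning instead.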

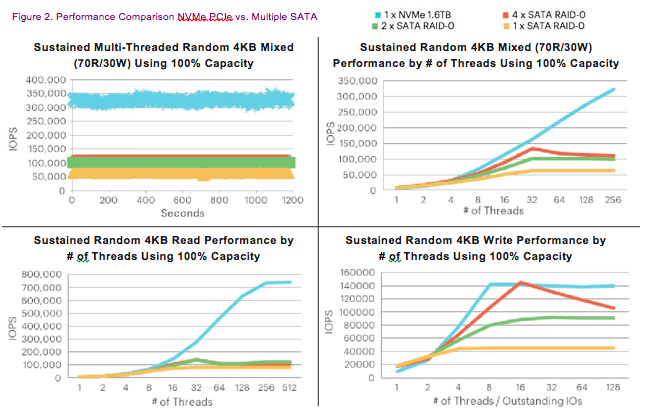

For MySQL and NoSQL environments, optimal SSD performance is usually achieved with PCIe devices because of their low latency and high speed. Depending on the workload requirements, striping data across several SATA or SAS SSDs inside a single server using RAID-0 can add capacity to the node. However, striping numerous SATA or SAS drives does not necessarily deliver performance comparable to PCIe. As workloads or thread counts increase, SATA and SAS latencies are magnified and software overhead tends to throttle the aggregate performance of the devices. As a result, for a given performance target, a single PCIe SSD can often be less expensive than multiple “cheaper” SATA or SAS devices aggregated together. This is why many vendors have started to describe performance SSDs in terms of cost per IOP, rather than the cost per GB familiar from the traditional storage world. Figure 2 shows an example of NVMe-compliant PCIe devices compared with 1, 2 and 4 SATA devices in RAID-0, measured with a tool called SSD Bench.

This data illustrates that RAID overhead on SATA offsets the gains expected from linear scaling, whereas a single PCIe SSD delivers its performance without that overhead.
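The cost-per-GB versus cost-per-IOP trade-off can be sketched with a simple model. This is a hypothetical illustration, not the SSD Bench methodology: price and capacity of a RAID-0 set add up linearly, while read IOPS grow as n raised to an assumed `scaling_exponent` below 1, standing in for RAID and software-stack overhead. Price per GB and read IOPS follow Table 1; the drive capacities and the exponent of 0.8 are assumptions, so the results will not match Table 1's price/IOP row exactly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Drive:
    name: str
    price_usd: float
    capacity_gb: float
    read_iops: float

    @property
    def cost_per_gb(self) -> float:
        return self.price_usd / self.capacity_gb

    @property
    def cost_per_iop(self) -> float:
        return self.price_usd / self.read_iops


def raid0(drive: Drive, n: int, scaling_exponent: float) -> Drive:
    """Model n identical drives striped in RAID-0 (hypothetical scaling model).

    Price and capacity scale linearly; read IOPS scale as n ** scaling_exponent,
    so an exponent below 1 means each added drive contributes less than the last.
    """
    if n < 1 or not 0 < scaling_exponent <= 1:
        raise ValueError("need n >= 1 and 0 < scaling_exponent <= 1")
    return Drive(f"{n}x {drive.name} RAID-0", drive.price_usd * n,
                 drive.capacity_gb * n, drive.read_iops * n ** scaling_exponent)


# Hypothetical drives: $/GB and read IOPS from Table 1; capacities are assumed.
sata = Drive("SATA", price_usd=1000.0, capacity_gb=1000.0, read_iops=75_000)
pcie = Drive("PCIe", price_usd=3600.0, capacity_gb=1600.0, read_iops=740_000)

for d in [raid0(sata, n, scaling_exponent=0.8) for n in (1, 2, 4)] + [pcie]:
    print(f"{d.name:16s} {d.read_iops:>9,.0f} IOPS  "
          f"${d.cost_per_gb:.2f}/GB  ${d.cost_per_iop:.4f}/IOP")
```

Under these assumptions the striped SATA set stays cheaper per GB, while the single PCIe device is several times cheaper per IOP, which is the trade-off the cost-per-IOP framing is meant to expose.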