If AI is to be used in industrial applications, parallel computing with real-time response is often required. This places new demands on embedded computer technology, such as computer-on-modules (COMs).

The artificial intelligence (AI) market is currently experiencing an incredible boom. According to ResearchAndMarkets, the global AI market is expected to grow by 36.6 per cent annually to US$ 190.61 billion by 2025. AI is already all around us today. In our personal lives we experience AI, for example, in product, film and music recommendations as well as service hotlines, where AI bots answer general customer questions completely autonomously.

AI is also behind smartphone features such as face and gesture recognition and personal voice assistants. However, according to Deloitte’s study “Exponential Technologies in Manufacturing,” the production sector is also one of the most important drivers of new AI applications.

Besides less time-critical use cases, such as AI for predictive maintenance or demand forecasting, industrial AI can also be used in applications that require real-time capabilities. These include, for example:

- Industrial image processing, where AI helps capture many different states and/or product features and evaluates them via intelligent pattern recognition to enable more reliable quality control. According to Forbes, AI can increase the error detection rate by up to 90 per cent.

- Cooperative and collaborative robots that share a workspace with humans, where AI enables them to react flexibly to unforeseen events and make decisions based on situational awareness.

- Similar requirements apply to autonomous industrial vehicles.

- The semiconductor industry, where AI applications that use big data for root-cause analysis have, for example, reduced reject rates by 30 per cent. The data involved must be collected with high precision and in real time.

- Lastly, there is the large area of real-time production planning and control in Industry 4.0 factories. Here, AI helps optimise processes at the edge, thereby increasing machine utilisation and, ultimately, the productivity of the entire factory.

The use of AI in industrial environments requires highly advanced integrated logic. This is because in fast processes, such as inspection systems, there is often no further supervisory authority. Medical technology is different: there, the results of AI-based automatic image analysis are always checked by a doctor, and the AI’s job is simply to make recommendations that accelerate data evaluation.

For this reason, AI systems in industrial environments must always ensure that their decision-making processes are traceable and, with regard to machine and occupational safety requirements, 100 per cent correct.

Training the AI is therefore much more complex in an industrial environment, not least because there are hardly any negative examples to learn from. Here, too, medical technology differs: thousands of negative and positive diagnoses are available to train and teach systems.

In industry, on the other hand, errors must be avoided right from the start. This is why digital twins, that is, digital replicas of machines and systems, are often used to simulate negative findings and, for instance, to rule out certain robot movements from the outset. So how do you develop and execute faultless industrial AI solutions for real-time requirements?
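The digital-twin idea of ruling out robot movements before they happen can be illustrated with a minimal Python sketch. It assumes a hypothetical twin that models a 2D workcell with a single rectangular keep-out zone (for example, where an operator stands); each candidate move is simulated as a straight-line path, and any move whose path enters the zone is discarded before it reaches the real robot. `KeepOutZone`, `path_is_safe`, `allowed_moves` and all coordinates are illustrative assumptions, not a real robot or twin API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeepOutZone:
    """Hypothetical axis-aligned rectangle in the twin's workcell (metres)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def path_is_safe(start, end, zone: KeepOutZone, steps: int = 100) -> bool:
    """Simulate a straight-line move in the twin by sampling points along it."""
    (x0, y0), (x1, y1) = start, end
    for i in range(steps + 1):
        t = i / steps
        if zone.contains(x0 + t * (x1 - x0), y0 + t * (y1 - y0)):
            return False  # simulated path enters the keep-out zone
    return True


def allowed_moves(start, targets, zone: KeepOutZone):
    """Keep only the candidate targets whose simulated path avoids the zone."""
    return [target for target in targets if path_is_safe(start, target, zone)]


zone = KeepOutZone(1.0, 1.0, 2.0, 2.0)
candidates = [(3.0, 0.0), (3.0, 3.0), (0.0, 3.0)]
print(allowed_moves((0.0, 0.0), candidates, zone))  # [(3.0, 0.0), (0.0, 3.0)]
```

Sampling the path keeps the sketch short; a real system would use exact geometric intersection tests and full 3D models of the robot arm.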

Bringing AI to the factory

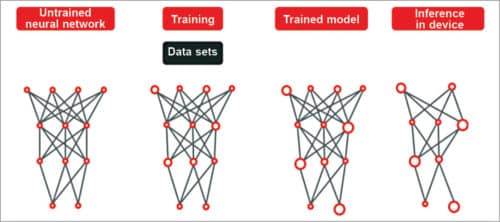

For a better understanding, it helps to look at the basics of modern AI systems. First, it is necessary to distinguish between classic machine learning (where knowledge is continually expanded with new, clearly defined information) and deep learning, a subset of machine learning in which systems train themselves on large amounts of data and independently interpret new information. The procedure for deep learning is almost always the same, even for very different tasks.

A deep neural network (DNN) is typically trained and run on many parallel computing units, usually general-purpose graphics processing units (GPGPUs). The network must first be trained. In image processing, for example, pictures of various trees can be used to train the system. The amount of image data required is immense: real-life research projects speak of 130,000 to 700,000 images. From all this data, the neural network derives the parameters it needs to reliably identify a tree.
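The principle that a network learns its parameters from labelled examples, rather than having them programmed by hand, can be shown at toy scale. The sketch below trains a single artificial neuron with the classic perceptron learning rule. This is a much simpler technique than the gradient-based training of a DNN on GPGPUs, and the two binary features (`has_trunk`, `has_leaves`) and their labels are hypothetical.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Perceptron learning rule: adjust weights w and bias b whenever a sample is misclassified."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):  # y is +1 (tree) or -1 (no tree)
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:  # wrong side of (or on) the decision boundary
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                errors += 1
        if errors == 0:  # every training example classified correctly: stop early
            break
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical binary features: (has_trunk, has_leaves); only both together mean "tree".
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [-1, -1, -1, 1]
w, b = train_perceptron(samples, labels)
print(w, b, [predict(w, b, x) for x in samples])
```

Because this toy data is linearly separable, the perceptron rule is guaranteed to converge. Real image data needs many layers and far more examples, which is where the image counts quoted above come from.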

GPGPU: an important technology for AI

In most systems, the main pattern recognition work is done in the GPGPU-based cloud with its immense parallel computing power. In the manufacturing industry, however, this is ruled out, at least for the time being, because processes are generally too fast to wait for a round trip to the cloud. Here, intelligence must reside at the edge, that is, in the immediate vicinity of the device or even within it.
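A rough calculation shows why the cloud round trip is the problem. The Python sketch below uses purely hypothetical line parameters (a conveyor speed of 2 m/s, an ejector 50 mm downstream of the camera, 5 ms for on-device inference and 100 ms for a cloud round trip) and checks which decision arrives before the part passes the ejector.

```python
def travel_mm(conveyor_speed_m_s: float, latency_ms: float) -> float:
    """Distance in mm that a part moves while the AI decision is pending (m/s x ms = mm)."""
    return conveyor_speed_m_s * latency_ms


def max_latency_ms(distance_to_ejector_mm: float, conveyor_speed_m_s: float) -> float:
    """Latest time (ms) a reject decision may arrive before the part passes the ejector."""
    return distance_to_ejector_mm / conveyor_speed_m_s


# Hypothetical line parameters, chosen only for illustration.
SPEED_M_S = 2.0
EJECTOR_DISTANCE_MM = 50.0
budget = max_latency_ms(EJECTOR_DISTANCE_MM, SPEED_M_S)

for label, latency in [("on-device inference", 5.0), ("cloud round trip", 100.0)]:
    verdict = "in time" if latency <= budget else "too late"
    print(f"{label}: part travels {travel_mm(SPEED_M_S, latency):.0f} mm -> {verdict}")
```

Under these assumptions only the on-device path stays within the 25 ms budget. Latency figures of this kind are why decisions have to be made locally.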

This is why industrial devices, machines and systems are mostly equipped with AI systems that use knowledge-based intelligence for real-time applications and pass data for deep learning on to central clouds, which cannot yet be connected in real time. This makes it possible today to keep training a higher-level system with all new data and to keep local devices up to date with regular software updates, so that such systems already qualify as self-learning systems.

In this case, however, learning does not progress continuously but in cyclical steps. This is also why topics such as digital twins and industrial edge servers are so important: if you can provide both, even deep learning can become increasingly real-time capable.
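The cyclical learning pattern can be sketched in Python as follows. It assumes a hypothetical edge device that runs a frozen, versioned model in real time and buffers every measurement it sees, and a simulated cloud that "retrains" on the buffer at the end of each cycle and ships the result back as a software update. `EdgeDevice`, `retrain_in_cloud` and the single-threshold "model" are illustrative stand-ins, not a real framework or a real deep learning step.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeDevice:
    """Hypothetical edge node: real-time inference with a frozen model plus a data buffer."""
    threshold: float  # the toy "model": flag a part whose measurement exceeds this value
    version: int = 1
    buffer: list = field(default_factory=list)

    def infer(self, measurement: float) -> bool:
        self.buffer.append(measurement)  # keep the data for later training in the cloud
        return measurement > self.threshold  # fast local decision, no network call

    def apply_update(self, new_threshold: float) -> None:
        """End of a cycle: install the retrained model as a software update."""
        self.threshold = new_threshold
        self.version += 1
        self.buffer.clear()


def retrain_in_cloud(data: list, margin: float = 1.0) -> float:
    """Stand-in for cloud-side training: new threshold = mean of collected data + margin."""
    return mean(data) + margin


device = EdgeDevice(threshold=10.0)
for cycle_data in ([9.0, 11.0, 12.0], [14.0, 15.0, 16.0]):
    flags = [device.infer(m) for m in cycle_data]
    print(f"model v{device.version} (threshold {device.threshold:.2f}): {flags}")
    device.apply_update(retrain_in_cloud(device.buffer))
```

The model on the device never changes mid-cycle, which keeps real-time behaviour predictable; it only improves in discrete steps when an update is applied.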

The right embedded processors

Whichever setup OEMs choose for their AI, individual real-time-capable machines or systems require a great deal of parallel processing power, often massive amounts, even for ordinary, purely knowledge-based AI. The latest embedded accelerated processing units (APUs) from companies such as AMD meet this need: alongside the classic x86 processor, they offer a powerful GPU whose general-purpose computing functions also support parallel AI workloads like those run in data centres.

With discrete embedded GPUs from the same manufacturer, this can be scaled further, so that parallel computing performance can be matched precisely to the requirements of the industrial AI application.

AMD Ryzen Embedded V1000

With significantly increased computing and graphics performance, the ultra-low-power and industrially robust AMD Ryzen Embedded V1000 series is a good choice. Combining a multipurpose CPU and a powerful GPGPU for a total of up to 3.6 TFLOPS, it offers flexible computing power that until a few years ago was only achievable with systems consuming several hundred watts.

Today, this class of computing power is available from a TDP of just 15 watts. This also makes the processors suitable for integration in fanless, fully enclosed and therefore highly robust devices for factory use. As real-time processors, they also support error-correcting code (ECC) memory, which is essential for most industrial machines and systems.
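ECC memory stores extra check bits alongside the data so that a bit flipped by, for example, electrical noise can be detected and corrected. Conventional ECC memory typically adds 8 check bits to each 64-bit word for single-error correction and double-error detection (SECDED). The Python sketch below shows the same principle at toy scale with the classic Hamming(7,4) code, which corrects any single-bit error in a 4-bit data word. It illustrates the idea only and is not how any particular memory controller is implemented.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword (positions 1..7)."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(c):
    """Correct up to one flipped bit; return (data bits, 1-based error position or 0)."""
    c = list(c)  # work on a copy
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4  # points directly at the faulty bit position
    if syndrome:
        c[syndrome - 1] ^= 1  # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], syndrome


word = hamming74_encode([1, 0, 1, 1])
word[4] ^= 1  # simulate a bit flip at position 5
print(hamming74_decode(word))  # ([1, 0, 1, 1], 5)
```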

As for the software environment needed to introduce AI and deep learning quickly and effectively, AMD embedded processors offer comprehensive support for tools and frameworks such as TensorFlow, Caffe and Keras. GPUOpen offers a wide range of software tools and programming environments for deep learning and AI applications, including the popular open source ROCm platform for GPGPU applications.

The open source approach is particularly important in this context, as it prevents OEMs from becoming dependent on a proprietary solution. HIPify, for example, is a tool that converts applications written for NVIDIA’s proprietary CUDA into portable HIP C++ code, so that dependence on a single GPU manufacturer can be effectively avoided.
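To illustrate what source-to-source porting means, here is a deliberately tiny Python sketch in the spirit of the regex-based hipify-perl script. It renames a handful of CUDA runtime identifiers to their HIP equivalents. The mapping table covers only a few real API pairs and is an illustrative subset; the actual HIPify tools handle far more identifiers, headers and kernel-launch syntax, so `toy_hipify` is a hypothetical helper, not part of HIPify.

```python
import re

# Illustrative subset of real CUDA-runtime -> HIP-runtime name pairs.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Longest names first so longer identifiers win; \b prevents partial-word matches.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in sorted(CUDA_TO_HIP, key=len, reverse=True)) + r")\b"
)


def toy_hipify(source: str) -> str:
    """Replace the mapped CUDA identifiers in a source string with their HIP names."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], source)


cuda_src = """#include <cuda_runtime.h>
float *d_x;
cudaMalloc(&d_x, n * sizeof(float));
cudaMemcpy(d_x, x, n * sizeof(float), cudaMemcpyHostToDevice);
cudaFree(d_x);"""
print(toy_hipify(cuda_src))
```

The resulting code calls only the HIP runtime, which can target AMD GPUs via ROCm as well as NVIDIA GPUs; that portability is the point of the conversion.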

AI development has also become easier since OpenCL 2.2, which integrated the OpenCL C++ kernel language into the core specification and so makes writing parallel programs much simpler. With such an ecosystem, both knowledge-based AI and deep learning are comparatively easy to implement and are no longer the preserve of billion-dollar IT giants such as Google, Apple, Microsoft and Facebook.
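The data-parallel model behind such kernels can be sketched briefly. In OpenCL, a kernel is written from the point of view of a single work-item, and the runtime launches one work-item per index of an NDRange, grouped into work-groups; with no global offset, a work-item's global ID equals group ID × local size + local ID. The Python sketch below only emulates this sequentially on the CPU for a vector addition. It is not OpenCL code, and `vector_add_kernel` and `run_ndrange` are hypothetical names.

```python
def vector_add_kernel(global_id: int, a: list, b: list, out: list) -> None:
    """Kernel body: each work-item computes exactly one output element."""
    out[global_id] = a[global_id] + b[global_id]


def run_ndrange(kernel, global_size: int, local_size: int, *args) -> None:
    """Sequentially emulate a 1D NDRange split into work-groups of local_size items."""
    # Simplification: this sketch only supports uniform work-groups.
    if global_size % local_size != 0:
        raise ValueError("global_size must be a multiple of local_size")
    for group_id in range(global_size // local_size):
        for local_id in range(local_size):  # a GPU would run these concurrently
            global_id = group_id * local_size + local_id  # like get_global_id(0), zero offset
            kernel(global_id, *args)


a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(a)
run_ndrange(vector_add_kernel, len(a), 2, a, b, out)
print(out)  # [11.0, 22.0, 33.0, 44.0]
```

Because each work-item touches only its own element, the iterations are independent. That independence is what lets a GPU execute thousands of them in parallel.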

Fast design-in with COMs

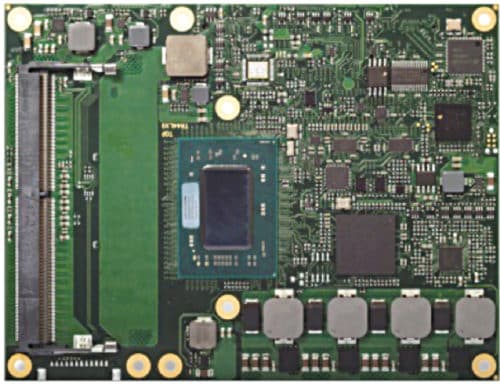

Now the only remaining question is: How can OEMs design these hardware-based AI enablers into their applications as quickly and efficiently as possible? One of the most efficient ways is via standardised computer-on-modules (COMs) that have been equipped with comprehensive support for GPGPU processing.

COMs have a slim footprint and support designs with OEM-specific feature sets. As application-ready super components, they come pre-integrated with everything that developers would otherwise have to assemble laboriously in a full custom design. This makes COMs crucial for a faster time-to-market.

Because they are not only application-ready but also functionally validated by many users, COMs offer high design security. As a result, OEMs can expect to save between 50 and 90 per cent of their non-recurring engineering (NRE) costs when using modules. Thanks to the modular approach, the application can also be scaled as required: with a simple module swap, new performance classes can be integrated into existing carrier board designs without further design effort, making it easy for OEMs to add new features to their designs.
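To put the quoted 50 to 90 per cent into figures, the short Python sketch below applies that range to a purely hypothetical full-custom NRE budget of 400,000 (in any currency). Only the percentage range comes from the text; the budget is an assumption.

```python
def nre_savings_range(custom_nre: float, low: float = 0.50, high: float = 0.90):
    """Return (minimum, maximum) NRE savings for a given full-custom NRE budget."""
    return custom_nre * low, custom_nre * high


budget = 400_000  # hypothetical full-custom NRE budget
min_saving, max_saving = nre_savings_range(budget)
print(
    f"Savings: {min_saving:,.0f} to {max_saving:,.0f}; "
    f"remaining NRE: {budget - max_saving:,.0f} to {budget - min_saving:,.0f}"
)
```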

COM Express, the leading standard for high-end modules

A leading form factor for modules in this performance class is the COM Express standard, which has been developed over many years by PICMG and is supported by all leading embedded computing suppliers. Companies such as congatec, which has long worked closely with AMD and recently even extended the availability of AMD Geode processors, offer AMD Ryzen Embedded V1000 processor-based modules, for example in the COM Express Basic Type 6 form factor, which has sufficient capacity to cover the entire performance range from 15 watts to 54 watts TDP.

Thanks to support for the RTS hypervisor, the real-time-capable conga-TR4 module, congatec’s COM Express Type 6 module based on the AMD Ryzen Embedded V1000, can also support AI platforms in which deep learning systems and digital twins are connected via virtual machines while hard real-time processing is always ensured.

With its standardised APIs, the module is also prepared for data exchange via Internet of Things (IoT) gateways that comply with the SGET UIC (Universal IoT Connector) standard. This allows OEMs to concentrate fully on developing the application; any missing glue logic can be developed specifically and supplied on customer request.

Embedded high-end edge server design options

For OEMs wanting to bring their digital twins and deep learning intelligence to the industrial edge, companies such as congatec offer many attractive options. For example, embedded designs based on AMD EPYC Embedded 3000 processors come with long-term availability of up to 10 years. With up to 16 cores, 10 Gigabit Ethernet and up to 64 PCIe lanes, these embedded server-class processors even enable deep learning applications at the edge of the Industrial IoT (IIoT). Developers therefore have everything they need on the hardware side for deep learning-based AI platforms for real-time industrial use.

Artificial intelligence offers tremendous potential for industry. It creates entirely new opportunities for flexible, efficient production, even for complex and increasingly customised products in small batch runs. As a result, production becomes even more reliable and efficient.

Zeljko Loncaric is a marketing engineer at congatec AG.