Multi-modal solution that enables simultaneous, extremely accurate recognition of multiple voice commands and sounds

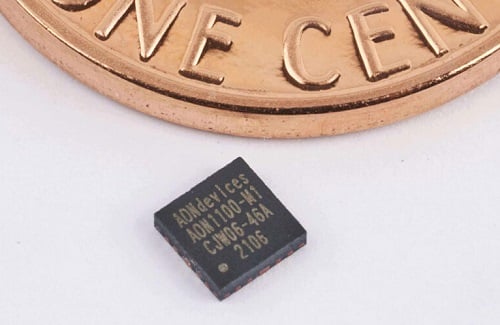

AONDevices, a provider of ultra-low-power, high-performance processors, has launched samples of the new AON1100 Edge AI processor. The chip enables unprecedented sensing capabilities in battery-powered always-on devices that require high-accuracy support for local wake words, voice commands, sound event detection, context detection and sensor fusion, all while maintaining industry-leading low power consumption for extended battery life and a better end-user experience.

The AON1100 is a multi-modal solution that uses a single microphone to enable simultaneous, extremely accurate recognition of multiple voice commands and sounds, such as a baby crying or a car backfiring. At the same time, it detects specific motion patterns, such as walking or falling.

When used in phones, headsets, wearables, game controllers, vehicles or smart home appliances, the AON1100 enables a natural human-machine interface at the device level without sending any data to the cloud, improving the user experience and ensuring privacy.

The AON1100 features two cores, AONVoice and AONSens, both based on AONDevices’ proprietary neural network technology. AONVoice delivers 90% accuracy in 0 dB SNR conditions. Including front-end processing and all required memory, the AONVoice core consumes less than 150 µW in 40 nm ULP silicon. This power figure is measured under 100% constant-speech conditions.
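To put the two figures above in context, the following minimal Python sketch shows what 0 dB SNR means (speech and noise carry equal power) and how much energy a constant 150 µW draw adds up to over a day. The function names are illustrative only and are not part of any AONDevices software; 150 µW is the upper bound quoted above, used here as a worst case.

```python
import math

def snr_db(signal_power: float, noise_power: float) -> float:
    """Signal-to-noise ratio in decibels from two linear power values."""
    return 10.0 * math.log10(signal_power / noise_power)

def energy_per_day_joules(power_watts: float) -> float:
    """Energy drawn over 24 hours at a constant power, in joules."""
    seconds_per_day = 24 * 60 * 60
    return power_watts * seconds_per_day

# 0 dB SNR: the speech signal and the background noise have equal power.
print(snr_db(1.0, 1.0))

# Worst case from the text: the core draws a constant 150 microwatts.
print(round(energy_per_day_joules(150e-6), 2), "J per day")
```

At that rate the core draws roughly 13 joules per day. The battery life that implies depends entirely on the battery and on the rest of the system, neither of which is specified here.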

“The AON1100 is a testament to our unparalleled ability to innovate across algorithms, systems and silicon,” said Mouna Elkhatib, CEO of AONDevices.

“In addition, we enable a wide range of new voice, audio and sensor use cases.”

Engineering samples of the AON1100 chip are available now. The AONVoice and AONSens cores are also available to high-volume customers for licensing as IP cores for integration into an SoC.