Millions of people across the globe suffer from neuro conjugate disorder, or some other form of paralysis, that leaves them unable to move any body part except the head and the eyes. To overcome this challenge, we can build a smart personal computer (PC) that lets such physically challenged persons control a computer with facial movements. It is controlled using face and eye movements and works even in low light or dark conditions.

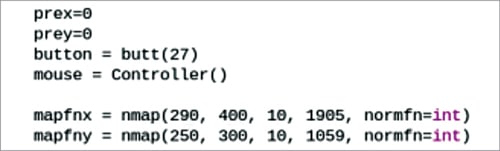

Coordinate movement on the PC screen: from (1080, 720) to (0, 0), where x = 1080 and y = 720; mapx = (x), mapy = (y)

The solution is based on three different functions:

- Blinking detection of the right eye for system operation

- Detection of the eye movement using image processing

- Translation of the eye movement and blink to control the graphical user interface (GUI) of the PC

The challenges

The above three functions come with several challenges, each of which has to be handled for the device to work reliably. These are:

Differentiating between natural and intentional eye blink

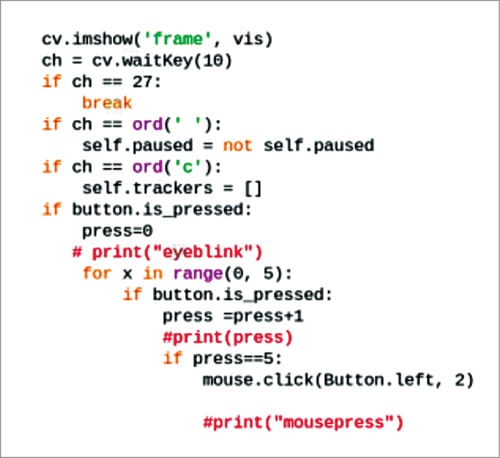

Our eyes blink involuntarily at regular intervals so that they remain moist and free of dust. In this design, a left or right mouse click is performed with an intentional blink. So, there has to be some way to distinguish between involuntary and intentional eye blinks for the device to function properly.

To do that, two sensors (one corresponding to each eye) can be integrated in such a way that when both of them detect an eye blink at the same time, it is considered a natural eye blink. If the blinking happens in only one eye (either left or right), it is considered as an intentional eye blink, resulting in a left or right mouse click.
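The two-sensor rule described above can be sketched as a small decision function. This is only an illustration: the function name `interpret_blink`, the boolean inputs, and the mapping of the left eye to a left click (and the right eye to a right click) are assumptions, not part of the project code.

```python
def interpret_blink(left_closed: bool, right_closed: bool) -> str:
    """Classify one reading from a hypothetical two-sensor setup."""
    if left_closed and right_closed:
        return "natural"       # both eyes shut together: ignore it
    if left_closed:
        return "left_click"    # assumed mapping: left eye -> left click
    if right_closed:
        return "right_click"   # assumed mapping: right eye -> right click
    return "none"              # both eyes open, nothing to do
```

The rule needs no timing at all, which is its appeal, but it depends on both eyes being usable.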

Although this technique is effective, it has a problem. It increases the bill of materials to several hundred rupees. Also, people who have a problem with one eye cannot use this solution.

Therefore, the solution has been modified in code so that a single sensor is enough. Now, if the eye stays shut for less than a second, the blink is considered natural. But if the eye stays shut for more than a second (say, four seconds), the blink is considered intentional.
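A minimal sketch of this duration rule is shown below. The function name `classify_blink` and the constant `NATURAL_MAX_SECONDS` are hypothetical; the one-second limit comes from the text, and in practice it would probably need tuning for each user.

```python
NATURAL_MAX_SECONDS = 1.0  # from the text: blinks within a second are natural


def classify_blink(closed_seconds: float,
                   natural_max: float = NATURAL_MAX_SECONDS) -> str:
    """Label a blink by how long the eye stayed shut."""
    if closed_seconds < 0:
        raise ValueError("closed_seconds cannot be negative")
    # Short closures are treated as involuntary, longer ones as deliberate.
    return "natural" if closed_seconds <= natural_max else "intentional"
```

For example, `classify_blink(0.2)` gives `"natural"`, while `classify_blink(4.0)` gives `"intentional"`.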

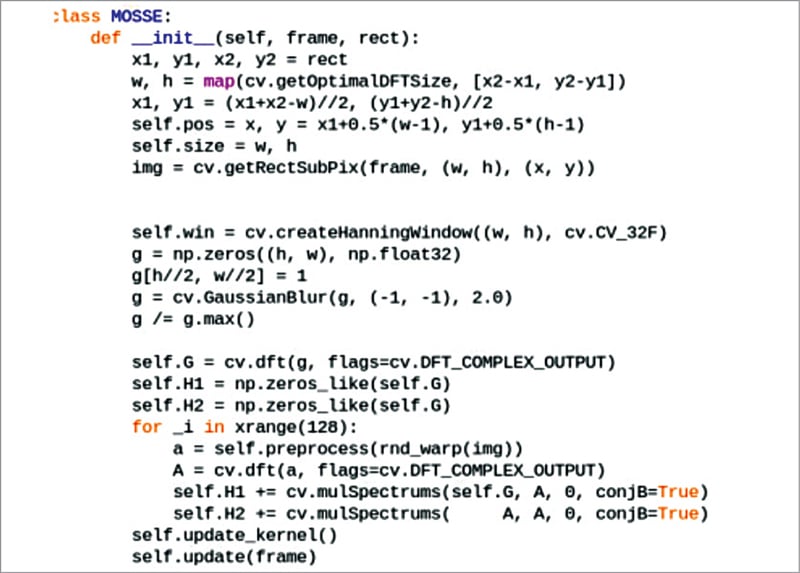

Detecting eye movement using image processing

For the mouse cursor to move, the position of the eye has to be tracked against a reference, and the cursor moved relative to it. Using eye movement, a physically challenged person can then operate a PC without effort. But image processing of the eye alone does not give accurate results, does not work in low light or completely dark conditions, and is quite difficult to implement.

Thus, a light mounted on the sensor can be used to track eye movement whenever the person moves his/her head.
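To show why a light is easier to follow than the eye itself, here is a simple stand-in: it finds the centre of the brightest pixels in a grayscale frame. This is not the tracker used in the project, which builds on the OpenCV sample code; the function name `bright_spot_centroid`, the list-of-rows frame format, and the threshold of 200 are all assumptions made for illustration.

```python
def bright_spot_centroid(gray_rows, threshold=200):
    """Return the (x, y) centre of pixels at or above threshold, or None.

    gray_rows is a list of rows, each a list of 0-255 brightness values.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(gray_rows):
        for x, value in enumerate(row):
            if value >= threshold:   # pixel is bright enough to be the light
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None                  # no light visible in this frame
    return sum_x / count, sum_y / count
```

Because the light is much brighter than its surroundings, a plain threshold like this keeps working in a dark room. Plain Python loops would be slow on full camera frames, so a real implementation would more likely use NumPy or OpenCV operations for the same idea.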

Translating eye movement and blink to control the PC

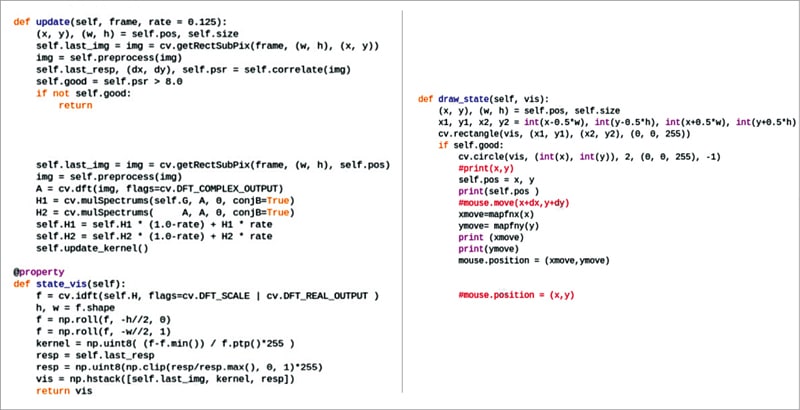

This solution detects eye blinks and follows the light mounted on the eyeglasses. But that is useless unless the movement is translated to the PC’s GUI as accurate movement of the mouse cursor. The cursor needs to cover the entire length and width of the PC monitor, whereas a human head can move only up to a certain degree. To solve this problem, a small head movement needs to be converted into a large pixel movement of the mouse cursor.
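One way to turn a small head movement into a large cursor movement is to measure the light's offset from the centre of the camera frame, multiply it by a gain, and clamp the result to the screen. The sketch below assumes a 640×480 camera frame, the 1080×720 screen used in this article, and a gain of 3; the function name `map_to_screen` and all default values are hypothetical.

```python
def map_to_screen(px, py, frame_size=(640, 480),
                  screen_size=(1080, 720), gain=3.0):
    """Map a tracked point in the camera frame to a cursor position."""
    frame_w, frame_h = frame_size
    screen_w, screen_h = screen_size
    # Offset from the frame centre, scaled to the range -1..1.
    nx = (px - frame_w / 2) / (frame_w / 2)
    ny = (py - frame_h / 2) / (frame_h / 2)
    # Amplify the offset, then clamp so the cursor stays on the screen.
    nx = max(-1.0, min(1.0, nx * gain))
    ny = max(-1.0, min(1.0, ny * gain))
    # Convert -1..1 to pixel coordinates 0..width-1 and 0..height-1.
    x = int((nx + 1) / 2 * (screen_w - 1))
    y = int((ny + 1) / 2 * (screen_h - 1))
    return x, y
```

With a gain of 3, the light only has to travel a third of the way from the centre of the frame to its edge for the cursor to reach the edge of the screen. A higher gain needs less head movement but makes the cursor harder to hold steady.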

Prerequisites

To get started, prepare an SD card with the latest Raspbian OS and check that it has Python IDLE pre-installed. Next, install the following Python libraries and modules for the project:

- OpenCV

- pynput

- numpy

- gpiozero

To do so, open the terminal and use the following commands:

sudo pip3 install opencv-python

sudo pip3 install numpy

sudo pip3 install gpiozero

sudo pip3 install pynput

sudo pip3 install nmap
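A quick way to confirm that the four listed libraries are available is to ask Python whether it can find them. The helper below is a hypothetical convenience script, not part of the project; note that OpenCV is imported under the name `cv2`.

```python
import importlib.util

# Import names of the four listed libraries (OpenCV is imported as cv2).
REQUIRED_MODULES = ["cv2", "numpy", "gpiozero", "pynput"]


def missing_modules(names):
    """Return the module names that Python cannot find."""
    return [name for name in names
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Still missing:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```

Run it with `python3` from the terminal; any name it reports needs to be installed again.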

After installing all the libraries, clone the official OpenCV GitHub repository onto the Raspberry Pi using the following command:

git clone https://github.com/opencv/opencv

We are now ready to code.

Coding

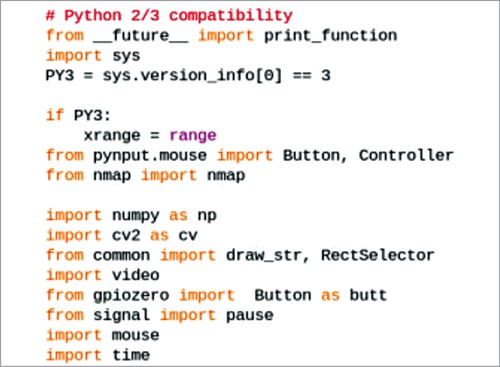

You will need to modify the example code found in the OpenCV library folder and merge your own code into it to prepare the device. For that, open the OpenCV folder→platforms folder→python, and select the mouse.py code. Copy and paste it into a new text file named headcontrolGUI.py and save it.

A mouse click needs the eye blink sequence from the sensor. To get it, import the gpiozero module into the code and use it to read the blink sensor data from the GPIO pins.

Also, import the pynput module to create a virtual mouse input for the GUI of Raspberry Pi OS.

Next, create an if condition to check the eye status. If the eye status is blink, use a four-second duration to determine whether the blink is intentional or natural. If the blink is intentional, issue the pynput command for a virtual left or right mouse click.
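The blink-to-click logic could be wired up roughly as follows. This is a sketch under several assumptions, not the project's actual code: the class `BlinkClicker` and the function `main` are hypothetical, the sensor is assumed to be on GPIO17 and to go active while the eye is shut, and only a left click is issued. The gpiozero and pynput imports sit inside `main()` so that the timing logic can be tried on any computer.

```python
import time

HOLD_SECONDS = 4.0  # from the text: hold the blink for four seconds


class BlinkClicker:
    """Fire on_click when the eye stays shut for at least hold_seconds."""

    def __init__(self, on_click, hold_seconds=HOLD_SECONDS,
                 clock=time.monotonic):
        self._on_click = on_click
        self._hold = hold_seconds
        self._clock = clock
        self._closed_at = None

    def eye_closed(self):
        self._closed_at = self._clock()      # remember when the blink began

    def eye_opened(self):
        if self._closed_at is None:
            return False                     # never saw the eye close
        held = self._clock() - self._closed_at
        self._closed_at = None
        if held >= self._hold:
            self._on_click()                 # intentional blink: click
            return True
        return False                         # natural blink: ignore


def main():
    # Hardware-specific imports; these need a Raspberry Pi with a desktop.
    from signal import pause
    from gpiozero import DigitalInputDevice
    from pynput.mouse import Button, Controller

    mouse = Controller()
    sensor = DigitalInputDevice(17)          # assumed pin number
    clicker = BlinkClicker(lambda: mouse.click(Button.left, 1))
    sensor.when_activated = clicker.eye_closed
    sensor.when_deactivated = clicker.eye_opened
    pause()                                  # wait for sensor events
```

Calling `main()` on the Raspberry Pi starts the listener. If the sensor's output is inverted, swapping the two callbacks should be enough.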

Construction and testing

Mount the eye blink sensor on the right side of the eyeglasses and wire it to a Raspberry Pi pin. Connect a camera to the RPi board and place it more than 15 cm away from the midpoint between the eyes, such that the entire face fits within the camera frame.

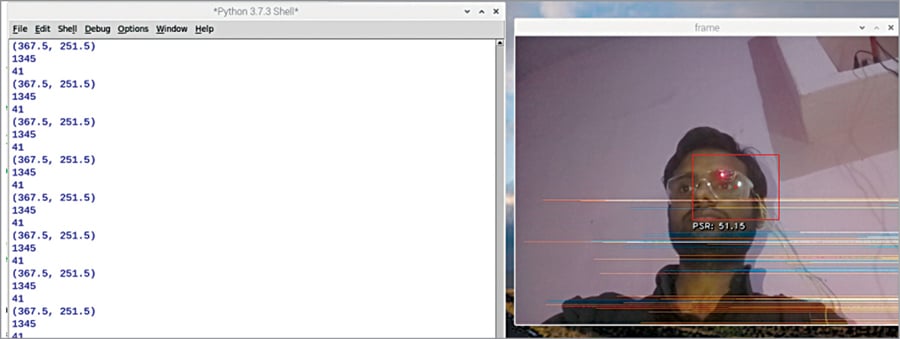

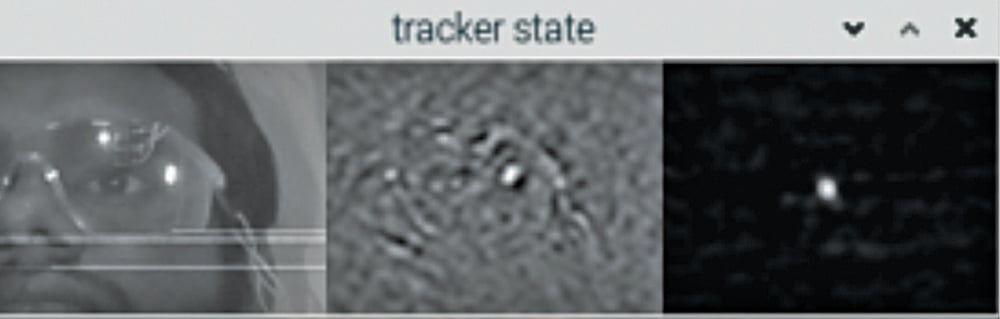

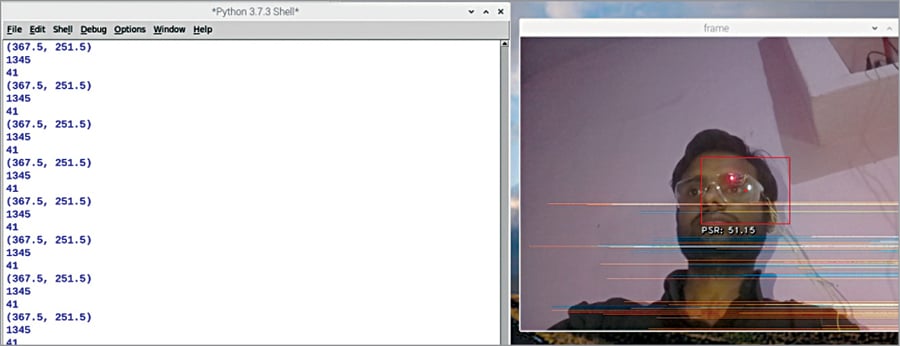

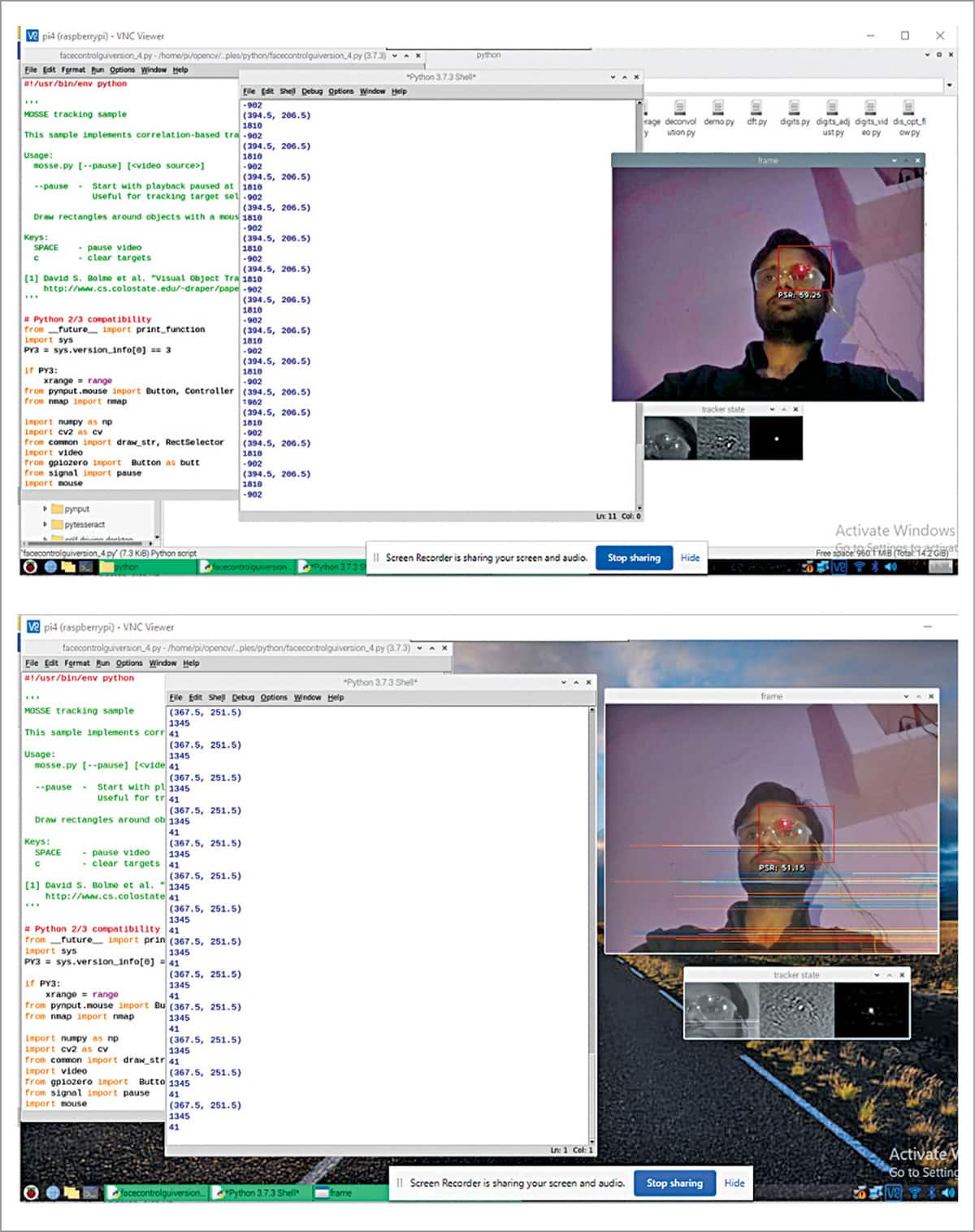

Wear the glasses and run the code. Use the mouse to mark the red light at the eye (as shown in Fig. 3) so that it can be tracked. Once tracking works, a person can operate the PC with eye movement. To move the mouse cursor, move the eye left, right, up, or down. To click the mouse, blink (shut) the eye for about four seconds.

Ashwini Kumar Sinha is a technology enthusiast at EFY

This article was reviewed and transferred from EFY June 2022 Issue and was published online here.