Take a front-row seat as semiconductor pioneers shape the future with AI-specific custom silicon.

The artificial intelligence (AI) market is expanding rapidly, offering significant growth opportunities for the semiconductor industry. This growth is driven mainly by rising demand for AI chips, which are essential for executing AI workloads. In 2023, revenue from semiconductors designed for AI workloads is forecast to reach $53.4 billion, a substantial 20.9% increase over 2022. This growth reflects the industry's increasing reliance on specialised chips to handle complex AI processes.
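As a quick sanity check on these figures, the implied 2022 revenue can be back-calculated, assuming the 20.9% growth rate $g$ is measured against 2022 revenue (amounts in billions of US dollars):

$$
R_{2022} = \frac{R_{2023}}{1 + g} = \frac{53.4}{1.209} \approx 44.2,
\qquad
R_{2023} - R_{2022} \approx 53.4 - 44.2 = 9.2
$$

In other words, under that assumption the year-on-year increase is roughly $9.2 billion.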

This statistic underlines the crucial role of AI in driving growth in the semiconductor industry. As AI technology advances and spreads into more sectors, demand for specialised AI chips will surge, presenting lucrative opportunities for semiconductor companies. Developing and supplying AI-specific semiconductors is not just a response to current market trends but a strategic move toward capturing a significant share of the burgeoning AI market. Moreover, the rise of AI marks a substantial departure from traditional technology paradigms, underscoring the critical role of hardware in enabling advanced AI applications.

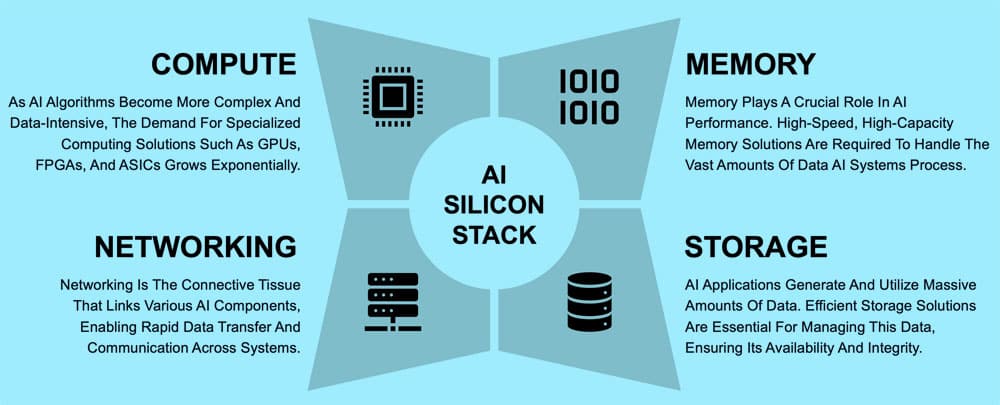

Semiconductor companies, historically focused on mass-producing generic components, now find themselves at the forefront of a technological revolution. By providing differentiated memory, storage, computing, and networking solutions, these companies can gain a competitive edge in a rapidly evolving market.

Compute: The heart of AI operations

Compute power is the driving force behind AI. As AI algorithms become more complex and data-intensive, demand for specialised computing solutions such as GPUs, FPGAs, and ASICs continues to grow rapidly. These specialised processors offer the faster, more efficient processing essential for tasks like deep learning and real-time data analytics.

Companies that develop advanced computing solutions tailored to specific AI applications can address various needs, from edge computing devices to large-scale data centres. Semiconductor companies can establish themselves as indispensable partners in the AI ecosystem by focusing on customisation and efficiency.

Memory: Accelerating AI performance

Memory plays a crucial role in AI performance. High-speed, high-capacity memory solutions are required to handle the vast amounts of data AI systems process. Technologies like high bandwidth memory (HBM) and on-chip memory architectures are gaining prominence, offering the speed and efficiency necessary for complex AI tasks.
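To put "high bandwidth" in numbers: the peak bandwidth of a memory interface is roughly its width in bits multiplied by the per-pin data rate, divided by 8 to convert to bytes. As an illustration, assume HBM3-class parameters of a 1,024-bit interface per stack running at 6.4 Gb/s per pin (treat these as assumed example values, not the figures of any specific product):

$$
B_{\text{peak}} = \frac{W \times r}{8} = \frac{1024 \times 6.4\ \text{Gb/s}}{8\ \text{bits/byte}} = 819.2\ \text{GB/s per stack}
$$

Placing several such stacks beside a processor multiplies this figure, which is why wide, stacked memory is attractive for data-hungry AI accelerators.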

Semiconductor companies that innovate in memory technology can significantly improve AI performance. By developing memory solutions with higher bandwidth and lower latency, these companies can enable more advanced AI applications and open new market opportunities.

Storage: The backbone of AI’s data infrastructure

AI applications generate and utilise massive amounts of data. Efficient storage solutions are essential for managing this data, ensuring its availability and integrity. Innovations in non-volatile memory, solid-state drives, and other storage technologies are crucial for supporting the data-intensive nature of AI.

By developing advanced storage solutions that offer higher capacity, faster access times, and greater reliability, semiconductor companies can cater to the growing needs of AI systems. This opens up new market avenues and positions these companies as leaders in AI infrastructure development.

Networking: Connecting AI’s diverse ecosystem

Networking is the connective tissue that links various AI components, enabling rapid data transfer and communication across systems. As AI applications grow in complexity, advanced networking solutions that support high-speed data transmission and low-latency communication become imperative.

Semiconductor companies that invest in networking technologies, such as high-speed Ethernet and next-generation wireless standards like 6G, can play a pivotal role in the AI landscape. By enabling faster and more efficient data communication, they make AI applications more seamless and integrated.

Why these domains are crucial for AI

The importance of computing, memory, storage, and networking in AI cannot be overstated.

• Compute power determines the speed and efficiency of AI processing

• Memory dictates how quickly and effectively an AI system can access and process data

• Storage is critical to managing the vast amounts of data generated by AI applications

• Networking ensures that all components of an AI system can communicate effectively

Together, these four domains form the backbone of AI infrastructure, affecting everything from the feasibility of AI applications to their performance and scalability. Semiconductor companies that can provide innovative solutions in these areas are not only contributing to the advancement of AI; they are also shaping the future of technology.

Seizing the AI silicon opportunity

In summary, the AI silicon opportunity offers a unique and potent avenue for growth in the semiconductor sector. As AI technologies evolve, demand for specialised memory, storage, computing, and networking solutions will surge, creating new markets and opportunities for innovation.

Semiconductor companies that effectively capitalise on this trend by developing advanced, AI-specific silicon chips will secure a competitive edge and play a pivotal role in shaping the future landscape of AI-driven technologies and applications.

The author, Chetan Arvind Patil, is Senior Product Engineer at NXP USA Inc.