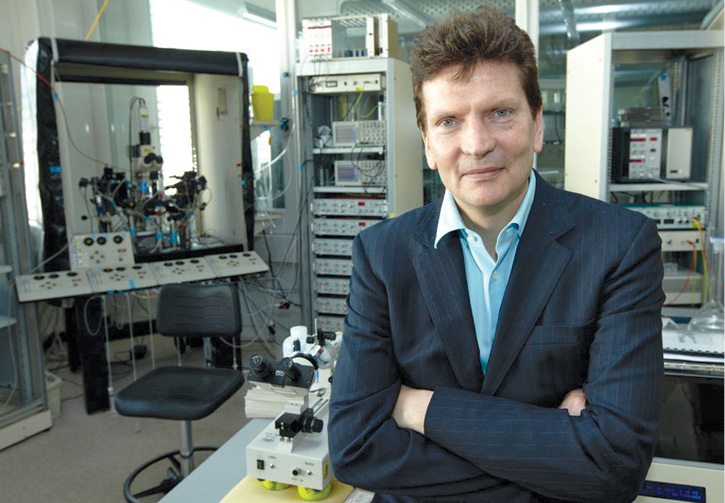

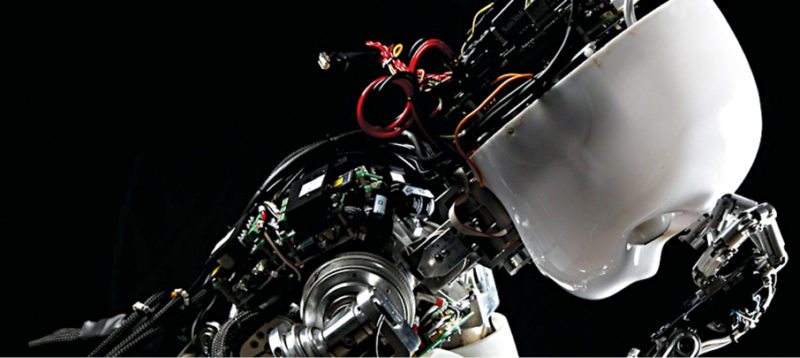

The human brain is the most remarkable creation of God and also the reason behind human intelligence. Blue Brain is the name of the world's first virtual brain, a machine that works along the same lines as the human brain. The Blue Brain System is an endeavour to reverse-engineer the human brain and rebuild it at the molecular level via computer simulation. The project was initiated in May 2005 by Prof. Henry Markram at École Polytechnique Fédérale de Lausanne (EPFL) in Lausanne, Switzerland.

IBM (fondly known as Big Blue) and the Swiss university team, involved in fabricating a custom-made supercomputer based on IBM's Blue Gene design, have been working together on the Blue Brain Project. IBM brings to the table expertise in visualisation, simulations, algorithms, Blue Gene optimisations and the development of innovative computational methods.

The Blue Brain Project expects researchers to develop their own models of various brain areas in different species and at different levels of detail, using Blue Brain Software for simulation on Blue Gene. The objective is to collect these models in a central Internet database from which Blue Brain Software can extract and link models together to form brain regions. This would finally lead to the first whole-brain simulation.

It is hoped that the Blue Brain Project will enable building a virtual brain, which will ultimately unravel the mysteries of the key facets of human cognition, such as perception, memory and, maybe, consciousness too. For the first time, we will be able to witness, in real time, the electrical code our brains use to represent the world. We also expect to gain an understanding of how certain failures of the brain's microcircuits lead to psychiatric disorders such as autism, schizophrenia and depression.

Reconstructing the enigmatic human brain using IT

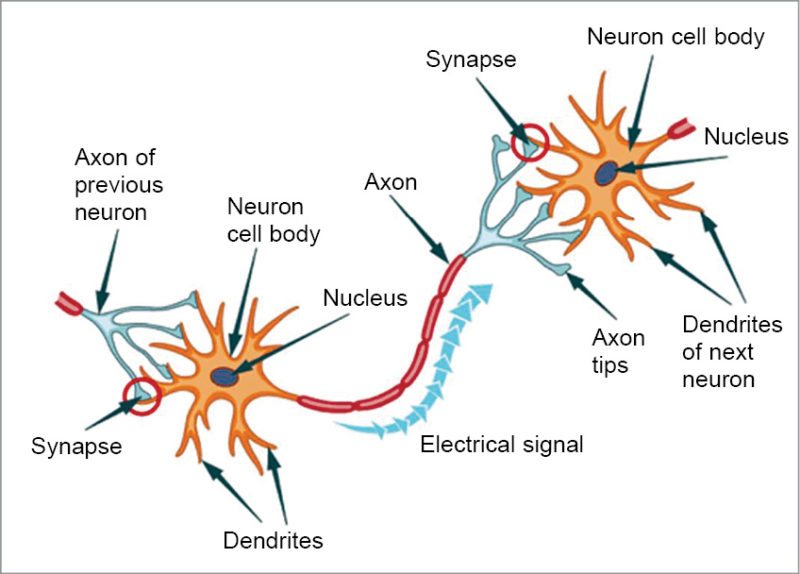

Typically, a human brain has over 100 billion neurons, or brain cells, and about 100 trillion synapses, making it a very complex multilevel system. The inter-neuron connections make up a hierarchy of circuits that range from local microcircuits to macro-circuits that form the entire brain. At the unit level, each neuron and synapse is a complex molecular machine in itself. It is the interactions between these levels that lead to human behaviour, emotion and cognition as we know them.

The Blue Brain Project aspires to develop wide-ranging digital reconstructions, that is, computer models of the brain including its diverse levels of organisation and their interactions. The project's reconstruction approach pinpoints interdependencies in experimental data; for example, dependencies between the size of neurons and neuron densities, between the shapes of neurons and the synapses these form, and between the number of boutons on axons and synapse numbers. It then uses these to constrain the reconstruction procedure. Multiple, intersecting constraints enable the project to develop the most accurate reconstructions possible from the sparse experimental data available, thus doing away with the need to measure everything.

Digital reconstructions of brain tissue capture a snapshot of the anatomy and physiology of the brain at a single moment in time. Blue Brain Project simulations use mathematical models of individual neurons and synapses to calculate the electrical activity of the network as it progresses over time. This demands very high computational power that can only be delivered by large supercomputers. In fact, the larger the volume of tissue to be simulated and the higher the accuracy required, the more computing power is needed.
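To give a feel for what calculating electrical activity over time involves, here is a minimal sketch of a single leaky integrate-and-fire neuron stepped forward with the Euler method. This is a deliberately simplified textbook model, not the project's own; the function name, parameter values and units are illustrative assumptions.

```python
def simulate_lif(input_current, dt=0.1, tau=10.0, v_rest=-65.0,
                 v_threshold=-50.0, v_reset=-65.0, resistance=10.0):
    """Step a leaky integrate-and-fire neuron forward in time.

    All parameter values are illustrative assumptions, not project data.
    input_current: one input current value per time step.
    Returns (voltages in mV, spike times in ms).
    """
    v = v_rest
    voltages = []
    spike_times = []
    for step, current in enumerate(input_current):
        # Membrane equation: dV/dt = (-(V - V_rest) + R * I) / tau
        dv = (-(v - v_rest) + resistance * current) / tau
        v += dv * dt  # forward Euler update
        if v >= v_threshold:
            # Threshold crossed: record a spike and reset the membrane.
            spike_times.append(step * dt)
            v = v_reset
        voltages.append(v)
    return voltages, spike_times


if __name__ == "__main__":
    voltages, spikes = simulate_lif([2.0] * 1000)  # 100 ms of constant input
    print(f"{len(spikes)} spikes in {len(voltages) * 0.1:.0f} ms")
```

Even this toy model needs one update per neuron per time step; the detailed models described in this article replace the single equation with thousands per neuron, which is where the demand for supercomputing comes from.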

The range of experiments that can be enabled grows with the size and accuracy of the digital reconstructions. The Blue Brain Project is now constructing neuro-robotics tools in which brain simulations are coupled to simulated robots and a simulated environment, in a closed loop. These novel tools enable replication of cognitive and behavioural experiments in animals, wherein the sensory organs capture and encode data about their environment, and their brain produces a motor response. The supercomputer-based reconstructions and simulations put together by the project suggest a profoundly new strategy for comprehending the multilevel structure and function of the brain.

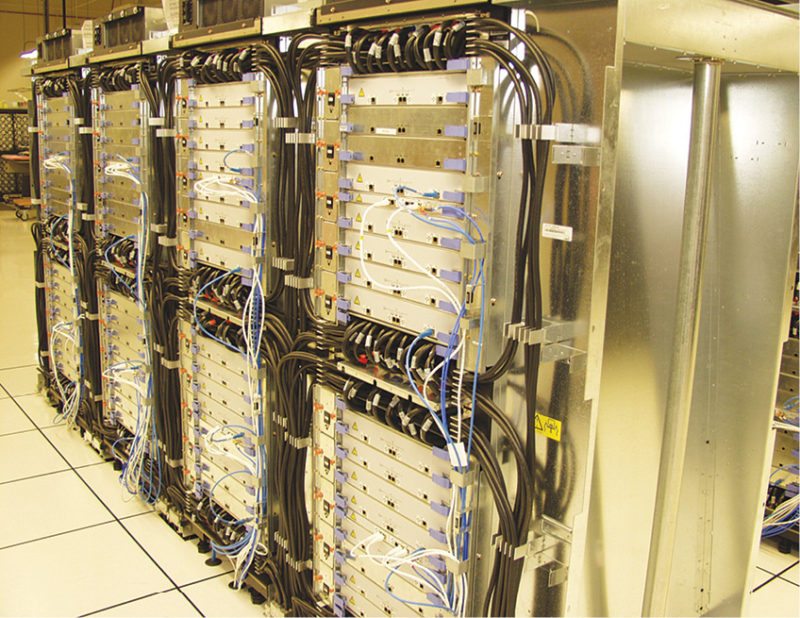

The Blue Gene Supercomputer

The Blue Brain workflow demands large-scale computing and data infrastructure. The hardware typically consists of the following configuration:

1. IBM 65,536-core BlueGene/Q supercomputer for modelling and simulation (hosted at CSCS), providing extended capabilities for data-intensive supercomputing (Blue Gene Active Storage)

2. Forty-node analysis and visualisation cluster

3. OpenStack/Ceph private Cloud running in two regions

4. Different storage systems for data archiving and neuroinformatics

5. Modern continuous-integration and collaborative software-development platform

State-of-the-art technology for acquiring data on the various levels of brain organisation comprises multi-patch clamp set-ups for studying the electrophysiological behaviour of neural circuits, multi-electrode arrays for stimulating and recording from brain slices, facilities for creating and studying cell lines that express specific ion channels, a variety of imaging systems, and systems for the 3D reconstruction of neural morphologies.

This infrastructure has been made available in partnership with EPFL's Laboratory for Neural Micro Circuitry. The success of the Blue Brain Project hinges on very high volumes of standardised, high-quality experimental data that encompass all likely levels of brain organisation. Data is sourced from the literature (via the project's automatic information extraction tools), from the Human Brain Project, from large data-acquisition initiatives outside the project, and from EPFL's Laboratory for Neural Micro Circuitry.

The Blue Brain workflow generates a huge need for computational power that falls in the high-performance computing arena. In Blue Brain cellular-level models, representing the detailed electrophysiology and communication of a single neuron is estimated to require as many as 20,000 differential equations. Even with modern multi-core workstations, it is very challenging to solve such a large number of equations in biological real time.

The Blue Brain Project's simulation of the neocortical column incorporates detailed representations of a minimum of 30,000 neurons. Generally, in order to facilitate valid boundary conditions, N times this number is required.
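The scale of the problem follows from simple arithmetic on the figures quoted above: up to 20,000 equations per neuron and at least 30,000 neurons per column. The short sketch below multiplies them out. The `boundary_factor` argument is a hypothetical stand-in for the unspecified multiplier N, and the value of 3 used in the last line is chosen purely for illustration.

```python
EQUATIONS_PER_NEURON = 20_000  # upper estimate quoted in the text
NEURONS_PER_COLUMN = 30_000    # minimum quoted for one neocortical column


def total_equations(neurons, equations_per_neuron=EQUATIONS_PER_NEURON,
                    boundary_factor=1):
    """Return the number of differential equations to solve per time step.

    boundary_factor is a hypothetical stand-in for the multiplier N that
    the text mentions for valid boundary conditions but does not specify.
    """
    return neurons * equations_per_neuron * boundary_factor


if __name__ == "__main__":
    print(total_equations(NEURONS_PER_COLUMN))                     # 600000000
    print(total_equations(NEURONS_PER_COLUMN, boundary_factor=3))  # 1800000000
```

A single column therefore already implies on the order of 600 million coupled equations, before any boundary multiplier is applied.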

In the early stages, an IBM BlueGene/L supercomputer running on 8192 processors was used. The BlueGene/L system is a new approach to supercomputer design, optimised for bandwidth, scalability and the ability to handle large amounts of data while consuming a fraction of the power and floor space required by some of the leading supercomputing systems.

The system needs the floor space of about four large fridges, and has a peak processing speed of at least 22.8 trillion floating-point operations per second (22.8 teraflops).

This means that the supercomputer can theoretically carry out 22.8 trillion calculations per second. By mapping one or two simulated brain neurons to each processor, the computer becomes a silicon replica of 10,000 neurons communicating back and forth.
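As a rough back-of-the-envelope check, the figures quoted above (22.8 teraflops peak across 8192 processors, with one or two neurons per processor) can be turned into a per-processor budget. The sketch below assumes that peak throughput is divided evenly across processors, which is an idealisation; the function names are illustrative.

```python
PEAK_FLOPS = 22.8e12   # 22.8 teraflops, as quoted in the text
PROCESSORS = 8192      # BlueGene/L processors, as quoted in the text


def flops_per_processor(peak_flops=PEAK_FLOPS, processors=PROCESSORS):
    """Peak operations per second per processor, assuming an even split."""
    return peak_flops / processors


def neuron_capacity(neurons_per_processor, processors=PROCESSORS):
    """Number of simulated neurons for a given mapping density."""
    return neurons_per_processor * processors


if __name__ == "__main__":
    print(f"{flops_per_processor() / 1e9:.2f} gigaflops per processor")
    print(neuron_capacity(1), "to", neuron_capacity(2), "neurons")
```

Under these assumptions each processor contributes a little under 2.8 gigaflops, and mapping one or two neurons per processor gives between 8192 and 16,384 neurons, which is consistent with the figure of roughly 10,000 quoted above.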

The BlueGene/L system was designed to simulate high-speed atomic interactions, and its architecture is also well suited to simulating neural interactions. Simulations optimised for clusters using MPI messaging can easily be ported to run on Blue Gene. The BlueGene/L prototype system allows parallel processing on virtually any number of processors to meet the memory and speed demands of a simulation. It can be scaled up enormously to meet further computational demand, and has provided the foundation for further development on BlueGene/P, the next-generation IBM supercomputer that constitutes a quantum leap in memory capacity, processing speed and whole-brain simulations.

Until recently, the project was powered by a 16,384-core IBM BlueGene/P supercomputer that had about eight times the memory of IBM BlueGene/L. This makes IBM BlueGene/P capable of reaching petaflops speeds, that is, quadrillions of calculations per second.

Presently, an IBM BlueGene/Q supercomputer with 65,536 cores and extended memory capabilities, hosted by the Swiss National Supercomputing Centre in Lugano, is deployed.

The Blue Brain Software

The human neocortex has millions of microcircuits called neocortical columns and, hence, it is important to create a molecular-level model of a neocortical column using sophisticated software. This software version is transformed into a hardware version, a molecular-level neocortical column chip, which can then be duplicated. While there are many software packages for simple/point neurons, there are no optimised programs that can carry out very large-scale simulations, that is, of tens of thousands, of morphologically complex neurons. The software for such simulations is a hybrid of two powerful approaches: one for large-scale neural network simulations, called NeoCortical Simulator (NCS), and a well-established program called NEURON.

Microcircuit databases are critical, as the Laboratory of Neural Micro Circuitry has accumulated a huge amount of data on the composition and connectivity of the neocortical column. Microcircuit data from many other labs all over the globe will also be included. The new database, NEOBASE, is built on the ROOT platform and modelled along the lines of CERN's innovative work, which has enabled thousands of researchers to work as one team on the same project.

The ultimate objectives are to enable full-scale researcher interactions through NEOBASE for further construction of the microcircuit database, collaborative visualisations and planned simulations. Microcircuit visualisation is possible since BlueBuilder has been built to design, upload and connect thousands of model neurons. BlueBuilder uploads Neurolucida files with complete morphological data.

In parallel, the model neurons need to be modelled in NEURON to include their physiological properties. The export from BlueBuilder references NEURON files for BlueColumn simulations, while BlueBuilder pulls neurons directly from NEOBASE. Connections are formed according to established connectivity rules. Phenomenological and biophysical models of synapses are assigned to the connections within BlueBuilder. BlueBuilder generates two types of output files: one for simulations by NCS or Neurodamus on Blue Gene, and one for visualisation in BlueVision.

Visualisation uses diverse graphic formats, ranging from Neurolucida reconstructions, lines, triangles and particles to NURBS. Ultra-high-resolution graphics are produced using interactive walk-through and navigation technologies. 2D, 3D and 3D immersive visualisation systems are also likely to be used.

BlueImage is a software module connected to BlueVision that permits in silico imaging of the activity produced in the neocortical column. All values that emerge as an output of the simulation can be imaged. Microcircuit analysis is needed because Blue Gene simulations can generate terabytes of data within minutes of simulation.

BlueAnalysis is used to graphically display, analyse, discard and archive data as quickly as feasible. Microcircuit simulations are feasible since the neocortical microcircuit is simulated via NeoCortical Simulator, which is capable of large-scale simulations.

NeoCortical Simulator is optimised for parallel simulations with MPI messaging and permits easy expansion of the complexity of the simulation.
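A parallel simulation of this kind has to decide which processor simulates which neurons. The sketch below shows one common and simple scheme, round-robin assignment of neuron ids to MPI-style ranks. It is written in plain Python without an MPI library, and it is an illustrative assumption, not a description of how NeoCortical Simulator actually distributes its work.

```python
def neurons_for_rank(rank, num_ranks, num_neurons):
    """Return the neuron ids assigned to one rank under round-robin.

    Hypothetical helper for illustration: neuron i goes to rank
    i % num_ranks, so the load differs by at most one neuron per rank.
    """
    if not 0 <= rank < num_ranks:
        raise ValueError("rank must be in the range [0, num_ranks)")
    return list(range(rank, num_neurons, num_ranks))


if __name__ == "__main__":
    num_ranks, num_neurons = 4, 10
    for rank in range(num_ranks):
        print(rank, neurons_for_rank(rank, num_ranks, num_neurons))
```

In a real MPI program, each process would call such a function with its own rank, simulate only its share of the neurons, and exchange spike events with the other ranks through messages.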

NeoCortical Simulator is deployed for all large-scale simulations using the simplest neuronal models. As many as ten compartmental models can be used in NeoCortical Simulator.

Detailed neuron simulations are conducted via NEURON, developed and implemented for simulations on Blue Gene. A merged NeoCortical Simulator-NEURON simulator called Neurodamus carries out large-scale NEURON simulations. Neurodamus is likely to evolve further for optimal large-scale Blue Gene simulations of multi-compartmental neuron models.

BlueStim is a software interface to Neurodamus that enables mapping of external input onto any one of the 100 million synapses in the column. Stimulus Generator permits connecting columns with the external world or with other columns and other brain regions.

BlueRead, a software interface to NeoCortical Simulator and Neurodamus, enables us to define values that are to be read out of the simulator for visualisation, display and analysis.

What the future holds

The brain carries out several analogue operations that computers are not capable of performing and, in many cases, it manages to carry out hybrid digital-analogue computing. The biggest differentiating factor between the brain and computers is that the brain is constantly changing with time. If components such as integrated circuits and transistors started changing in the same way, a computer would end up becoming unusable.

You can imagine the brain as a dynamically-morphing computer, considerably different from other organs like the heart or lungs.

Understanding the brain is vital, not just to understand the biological mechanisms that give us our thoughts and emotions and that make us human, but also for practical reasons. Understanding how it processes information can make a fundamental contribution to the development of new computing technologies such as neurorobotics and neuromorphic computing. More important still, understanding the brain is essential to understand, diagnose and treat brain diseases that are imposing a rapidly increasing burden on the world's aging population.

The present configuration of Blue Gene, that is, BlueGene/Q, is capable of reaching petaflops speeds, that is, quadrillions of calculations per second. This supercomputer has as many as 65,536 cores and extended memory capabilities. The next generation of Blue Gene supercomputers is expected to deliver an even higher level of computing power and, hence, to simulate even more neurons with significant complexity.

However, the sky is the limit, as scientists today are thinking beyond all this and conducting challenging research studies. They are hoping to create an artificial brain that can think, respond, take decisions and store any type of data in its memory. A key objective is to upload a human brain into a machine, which will enable man to think and take decisions effortlessly.

After the death of the human body, the virtual brain will actually be able to act as the deceased. Hence, there will be no loss of knowledge, intelligence, personalities, feelings and memories of humans. In a way, man is on his way to becoming immortal.

Deepak Halan is associate professor at School of Management Sciences, Apeejay Stya University
