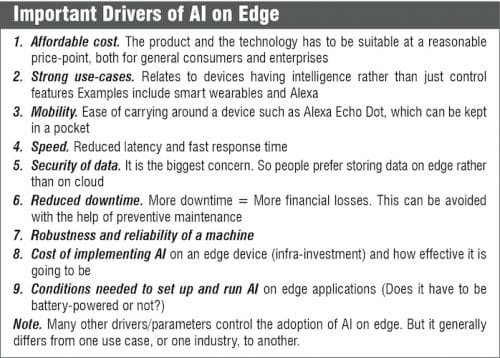

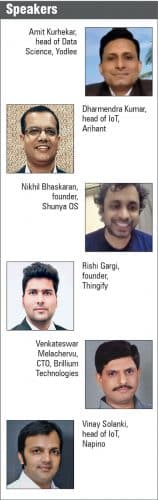

AI on edge is no longer just a buzzword. It holds value for creators and enablers as well as end-users. But what are the top use-cases, and what technology is available to help creators build AI on the edge? A panel of experts discussed these questions in October during India Electronics Week. Their key points are compiled below.

There has been a lot of hype around AI on edge, but IoT and AI have moved beyond buzzwords. Consumers, businesses, and enterprises all want to bring digital transformation into their processes and lives. The key question is what experiences or value can be delivered to the end-user, in both B2B and B2C use cases.

At the same time, it is not enough to know how to build; you also need to know what to build. Many techies build things that do not sell, and in these critical times there are no resources to waste.

The journey till date

Traditionally, AI has been used to identify patterns in collected data, store that data, and make inferences for a business case. It demanded large computing resources and large datasets, which were typically housed in centralised data centres.

AI is not a product of the last ten to fifteen years; it has been developing since the 1950s. But the computing power needed to run heavy workloads only became cost-effective about fifteen to twenty years ago, when the cloud brought inexpensive computing and made it practical to run AI and machine learning (ML) workloads there.

As we all know, IoT technology refers to devices that can talk to the Internet, push data to the cloud, and control or be controlled remotely. AI provides the frameworks that let these devices make their own decisions. Edge computing refers to devices making decisions themselves, or processing data through local servers.

“As computing became cheaper, particularly with supercomputers in the form of smartphones and various IoT devices at our disposal, the need to bring that kind of intelligence onto the edge became relevant,” says Venkateswar Melachervu, CTO, Brillium Technologies. He adds, “Edge is where data resides and is collected.”

AI on edge refers to a system that has AI capabilities on the device itself or at a local node. This allows devices to make their own decisions based on what they have previously learned about different situations and their outcomes.

Devices such as smartphones, smartwatches, and even temperature sensors can be classified as edge devices. Edge computing is needed not just by industry but also by consumers.

“A lot of data is generated by machines or IoT devices, and that data needs to be processed with security and privacy in mind. Therefore, we need a better understanding of the differences between IoT, cloud, and edge computing,” says Dharmendra Kumar, head of IoT, Arihant.

Since smartphones, smartwatches, and connected cars have had on-device intelligence for a long time, Rishi Gargi, founder, Thingify, adds an important point: “A boundary needs to be drawn between what is AI on edge and what other technologies are, and how AI on edge leverages AI to make decisions on its own without any Internet connection.”

Why go for AI on edge

Today’s computing power is far greater than what was available earlier. Latency has dropped and battery life has improved. Together, these improvements have made it possible to run AI on the edge, whether on IoT devices, within gateways, or in the edge network.

Edge computing generally combines a processor and storage with logic to process information. An example is Alexa, which uses natural language processing (NLP) to respond to a person’s queries, with part of the processing (such as wake-word detection) done on the device itself. But AI on edge is more than just computing. “It is not just about processing the data, but also about deriving trends, patterns, or intelligence by applying machine learning algorithms to give the user immediate feedback,” says Vinay Solanki, head of IoT, Napino.

Consider a smart camera installed in a smart city to monitor vandalism. While capturing video or images, it can make decisions locally, without sending anything to the cloud, and thereby become smarter on its own.

Data security is the biggest concern

For customers, the real challenge is data security. Many people do not want their data stored on the cloud. When an algorithm runs in the cloud on stored data, that data must be properly safeguarded, both on the device where it is generated and across the network. The cloud can enforce additional security measures such as firewalls, encryption and decryption, or public-private key handshakes to make it safer.
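To make one piece of this concrete: a common safeguard for data in transit is a message authentication code, which lets the receiver detect whether a reading was altered on the way. The sketch below uses Python’s standard `hmac` module with HMAC-SHA256. It covers integrity only, not the encryption or public-private key handshake mentioned above (in practice those usually come from TLS), and the shared key, function names, and payload fields are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical per-device secret; in practice it would be provisioned securely
# and never hard-coded.
DEVICE_KEY = b"example-device-secret"


def sign_reading(reading: dict, key: bytes = DEVICE_KEY) -> dict:
    """Serialise a reading and attach an HMAC-SHA256 tag (integrity, not secrecy)."""
    payload = json.dumps(reading, sort_keys=True)
    tag = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_reading(message: dict, key: bytes = DEVICE_KEY) -> bool:
    """Recompute the tag on the receiving side and compare in constant time."""
    expected = hmac.new(key, message["payload"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


msg = sign_reading({"device": "sensor-01", "temp_c": 36.8})
print(verify_reading(msg))  # True
msg["payload"] = msg["payload"].replace("36.8", "39.9")  # simulate tampering
print(verify_reading(msg))  # False
```

Note that the tag travels alongside the readable payload, so anyone on the network can still see the data; keeping it private needs encryption on top.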

“Some users might not be willing to share their data on the cloud. This approach can transform such applications by letting them store and process data on the mobile itself. Health-related applications are extremely sensitive; therefore, it is important to process the data on the mobile,” believes Amit Kurhekar, head of Data Science, Yodlee.

“It may sound a little oxymoronic, because when you have distributed computing resources in the edge network, you are exposed to data attacks (for instance, DDoS). But usually, AI on edge is considered more secure for your data,” says Venkateswar Melachervu.

Nikhil Bhaskaran believes the edge is becoming popular because people do not want all their private data to go to the cloud.

The cost concern

Choosing between AI on cloud and AI on edge is an important decision, because on the edge, the device and its designer are responsible for security. If somebody hacks into the device, it should be hard for them to extract the data. But that can raise the cost of the device and its processing-power requirements.

“You have to look at the ROI (return on investment) of the application you are thinking of. One should not apply IoT just anywhere; it should make sense from a use-case perspective. The same goes for AI on edge. If you want to make the edge smarter, consider whether it is an ROI-friendly decision, because if you invest in making every device smarter, you also add the complexity of making the solution secure. One needs to be clear whether the extra investment is justified by the output or the ROI the solution will deliver. It all depends on what kind of real-time decisions you need to make at the edge, and whether sending the feed to the cloud or network would be costly, time-consuming, or insecure,” advises Vinay Solanki.

Amit Kurhekar advises thinking the costs through whenever you develop something: consider the cost of development, the cost of hardware, and how many such devices will be needed. At the end of the day, you will have to maintain all of them.
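Those cost factors can be rolled into a rough total-cost-of-ownership estimate. The sketch below is a minimal model under stated assumptions: a one-off development cost, per-device hardware, and per-device yearly upkeep over the service life. All field names and figures are hypothetical, chosen only to show how a smarter (pricier) edge device can be weighed against a cheaper device that leans on the cloud.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    development: float             # one-off engineering cost
    hardware_per_device: float     # bill of materials per unit
    devices: int                   # number of units deployed
    upkeep_per_device_year: float  # maintenance, connectivity, cloud fees, updates
    years: int                     # expected service life

    def total(self) -> float:
        hardware = self.hardware_per_device * self.devices
        upkeep = self.upkeep_per_device_year * self.devices * self.years
        return self.development + hardware + upkeep


# Hypothetical figures, in any currency unit.
smart_edge = CostModel(development=80_000, hardware_per_device=45, devices=1_000,
                       upkeep_per_device_year=3, years=5)
cloud_heavy = CostModel(development=50_000, hardware_per_device=20, devices=1_000,
                        upkeep_per_device_year=12, years=5)
print(f"edge: {smart_edge.total():,.0f}  cloud: {cloud_heavy.total():,.0f}")
# edge: 140,000  cloud: 130,000
```

With these made-up numbers the cloud-leaning design is cheaper, but raising the device count (here, beyond 1,500 units) flips the result, which is exactly the ROI question the panel raises.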

“Always factor the cost of your solution into the design process itself,” says Nikhil Bhaskaran, founder, Shunya OS.

In terms of cost-effectiveness, having an aggregated data model in a manufacturing unit is a business decision. If you have twenty machines, it may be better (depending on the business need) to deploy sensors at each machine. The sensors can send data via machine-to-machine communication to a central node on the edge that can run ML algorithms or predictive analytics in conjunction with the backend cloud.
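Here is a minimal sketch of such a central node, assuming each machine’s sensor sends one numeric reading at a time (for example, vibration or temperature). The gateway keeps a short rolling history per machine and flags readings that sit far from that machine’s recent average. This simple z-score test stands in for the predictive analytics mentioned above. The node also produces a compact summary that could be forwarded to the backend cloud. Class and method names, window size, and threshold are all hypothetical.

```python
from collections import defaultdict, deque
from statistics import mean, pstdev


class EdgeGateway:
    """Hypothetical central node receiving readings from many machines."""

    def __init__(self, window: int = 20, z_limit: float = 3.0):
        # One bounded history per machine; old readings drop off automatically.
        self.history = defaultdict(lambda: deque(maxlen=window))
        self.z_limit = z_limit

    def ingest(self, machine_id: str, value: float) -> bool:
        """Store a reading; return True if it looks anomalous for this machine."""
        past = self.history[machine_id]
        anomalous = False
        if len(past) >= 5:  # need a little history before judging
            mu, sigma = mean(past), pstdev(past)
            if sigma > 0 and abs(value - mu) / sigma > self.z_limit:
                anomalous = True
        past.append(value)  # a real system might keep flagged values out of the baseline
        return anomalous

    def summary(self) -> dict:
        """Compact per-machine summary to forward to the cloud instead of raw data."""
        return {m: {"mean": mean(v), "max": max(v), "n": len(v)}
                for m, v in self.history.items() if v}


gateway = EdgeGateway(window=10)
for reading in [50.0, 51.0, 49.0, 50.0, 50.5, 49.5, 80.0]:
    if gateway.ingest("machine-07", reading):
        print("alert: machine-07 reading", reading)
print(gateway.summary())
```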

There is a cost involved, but it is a tradeoff against the outcomes and ROI you expect. With the right balance of security, cost, and form factor, the edge can be very secure.

According to Venkateswar Melachervu, edge hardware is not drastically different from what is used in the cloud; the two are pretty much the same. But edge devices, particularly mobile devices, have to run computations iteratively under a heavy computational load, which drains the battery quickly and forces frequent recharging. Battery life is therefore a real constraint.

Purpose-built AI on edge

The edge stack can be divided into several layers: hardware, including AI-capable microcontrollers; AI cores (CNNs, BNNs, accelerators, and other neural network binaries), whether open-source or from vendors such as Google; software tools that cross-compile high-level C++ or Java code onto these computing cores; and reference designs, architectures, and custom services. Google and Azure are also coming out with many combined architectures, both edge and hybrid.

Today, data storage is not a serious problem, as gigabytes or even terabytes of space are available on almost every device. Battery life is also gradually improving. The biggest challenge is to have optimised, purpose-built algorithms for edge devices, IoT devices, gateways, and so on.

This is where innovation is happening. At the hardware level, for example, Google’s edge AI stack includes the Edge TPU (sold under the Coral brand) and an IoT edge software pack that brings lightweight versions of TensorFlow’s algorithmic pieces to mobile devices.

The iPhone 12’s A14 Bionic chip is purpose-built for AI and ML, and powers machine-learning features such as Deep Fusion photo processing. It builds on its predecessor, the A13, which introduced an 8-core Neural Engine and CPU machine learning accelerators offering up to 6x faster matrix multiplication and one trillion operations per second, along with power-efficiency gains, making compute cycles and instruction execution more efficient. NVIDIA is not far behind, and Facebook has PyTorch Mobile.

Each of these is optimised differently for battery, storage, and other device needs.

Current mobile GPUs are essentially vector processing units built into the SoC itself. Integrating the GPU alongside the CPU in this way gives a combined edge solution that can perform these calculations very quickly.

Solving the latency issue

For data produced every millisecond or even every second, you need some analytics on the edge so that you can prioritise, make immediate decisions, and store only aggregated data.
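One way to picture this prioritise-and-aggregate step is the sketch below. It assumes readings arrive as hypothetical `(timestamp_ms, value)` pairs. Any value at or above an alert threshold is set aside for immediate action. Every sample is also rolled into fixed-size windows, and each window’s mean, minimum, and maximum replace the raw data for storage or upload. Thresholds and window sizes here are illustrative, not recommendations.

```python
from statistics import mean


def _summarise(end_ts, bucket):
    """Compact record that stands in for a window of raw samples."""
    return {"end_ts": end_ts, "mean": mean(bucket), "min": min(bucket), "max": max(bucket)}


def triage(readings, alert_threshold, window):
    """Split a high-rate stream into urgent alerts and per-window aggregates.

    readings: iterable of (timestamp_ms, value) pairs (hypothetical format).
    Returns (alerts, aggregates).
    """
    alerts, aggregates, bucket = [], [], []
    last_ts = None
    for ts, value in readings:
        if value >= alert_threshold:
            alerts.append((ts, value))  # handle immediately on the edge
        bucket.append(value)
        last_ts = ts
        if len(bucket) == window:
            aggregates.append(_summarise(ts, bucket))
            bucket = []
    if bucket:  # flush a final, partially filled window
        aggregates.append(_summarise(last_ts, bucket))
    return alerts, aggregates


alerts, aggregates = triage([(0, 1.0), (1, 2.0), (2, 9.0), (3, 3.0), (4, 4.0)],
                            alert_threshold=8.0, window=2)
print(alerts)           # [(2, 9.0)]
print(len(aggregates))  # 3
```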

There are two dimensions to latency. The first is propagation delay: the round-trip time for data, which depends on your IP routes and cabling. The second is the time taken to run compute-intensive AI and ML algorithms such as CNNs and other neural networks. If you run on resources in a high-performance data centre, compute time is relatively short compared with a normal phone, which has limited MIPS capability.
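The two components simply add up, so a back-of-the-envelope comparison is easy. In the sketch below, compute time is estimated as the model’s operation count divided by the hardware’s effective throughput. The model size, throughputs, and 80 ms round trip are hypothetical numbers. The estimate also ignores queuing, serialisation, and other overheads.

```python
def end_to_end_latency_ms(rtt_ms: float, model_ops: float, ops_per_second: float) -> float:
    """Total latency = network round trip + time to execute the model.

    rtt_ms is 0 when inference runs on the device itself.
    """
    compute_ms = model_ops / ops_per_second * 1000.0
    return rtt_ms + compute_ms


MODEL_OPS = 4e9  # hypothetical CNN cost: 4 billion operations per inference

on_device = end_to_end_latency_ms(rtt_ms=0.0, model_ops=MODEL_OPS, ops_per_second=2e11)
in_cloud = end_to_end_latency_ms(rtt_ms=80.0, model_ops=MODEL_OPS, ops_per_second=1e13)
print(f"on device: {on_device:.1f} ms, cloud: {in_cloud:.1f} ms")
# on device: 20.0 ms, cloud: 80.4 ms
```

With these assumed figures, the slower phone still wins because it avoids the round trip. A ten times heavier model, or a faster network, can reverse that.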

Companies like NVIDIA, Google, and even Apple have purpose-built AI chips for running heavy AI and ML computational loads. Google’s TPUs, for example, run these workloads far faster than general-purpose processors, on par with big machines. These capabilities are now coming to the edge because there is need and value for businesses and people. It is essential to have hardware with good network and compute speed, along with a software stack such as TensorFlow.

Whenever you design or develop an AI or ML system, consider the required response speed along with the user’s expectations. Depending on these, you will have to tweak your algorithms and data flow.

The applications aka use-cases

On the consumer side, there are many applications of AI or ML on edge, from voice assistants and face recognition to wearables. From a B2B perspective, the main one is Industrial IoT (IIoT).

“A French company that manufactures aircraft components uses a real-time location system (RTLS) based on RFID or BLE to track parts. Since new parts keep coming in as old parts are consumed, the company could not work out how frequently a particular component was used on a manufacturing line. So they built gateways, deployed them in the warehouse, and started running ML on them. The gateways used power over Ethernet (PoE) and had good processing capability.

“Through this, they were able to process the information locally, and the operator on the floor was quickly informed of the outcome. They ran multiple ML algorithms on a year’s worth of data. The decisions were not made in real time but were based on the algorithms’ predictions, which turned out to be correct ninety per cent of the time. Doing it in real time would have taken much longer to reach a decision and inform the operator,” says Vinay Solanki.

He adds that his company, Napino, leverages AI on edge, using robots for evaluation purposes. An in-house solution is also being built for social distancing and employee attendance, using IoT applications with beacons. This is not yet an AI implementation, but the application will collect a lot of data on which ML can be applied to find analytical insights. Another project was a smart shower that monitored the amount of water consumed during a bath, using a flow meter plus an IoT gateway to upload the data.
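The smart-shower measurement comes down to turning flow-meter readings into a volume. The sketch below assumes the gateway receives periodic flow-rate samples as hypothetical `(time_s, litres_per_minute)` pairs. Many flow meters instead emit pulses that must first be converted with the sensor’s calibration factor. The water used is the area under the flow curve, which the trapezoidal rule approximates:

```python
def litres_used(samples):
    """Integrate (time_s, litres_per_minute) samples with the trapezoidal rule."""
    total = 0.0
    for (t0, f0), (t1, f1) in zip(samples, samples[1:]):
        minutes = (t1 - t0) / 60.0
        total += (f0 + f1) / 2.0 * minutes  # area of one trapezoid
    return total


# A five-minute shower: ramp up, steady 9 L/min, ramp down (illustrative data).
shower = [(0, 0.0), (30, 9.0), (270, 9.0), (300, 0.0)]
print(litres_used(shower))  # 40.5
```

The gateway could then upload just this total, or per-shower totals, rather than every raw sample.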

According to Dharmendra Kumar, a major application of AI on edge is industrial asset monitoring. Machines need to be controlled and monitored for optimal data consumption and processing. If this happens on the edge, data acquisition is fast and stays within the edge, rather than data going to the cloud and back before an output is produced. On the consumer side, home automation seems to be the big application.

Venkateswar Melachervu describes a unique use-case. A year or two ago, he worked on a telemedicine platform for pregnant women, covering everything from conception to postpartum. Since many doctor consultations and tests take place over nine months, the idea was to offer these services conveniently through an app. This was a noble step towards the timely detection of critical conditions that can manifest during pregnancy, such as gestational diabetes mellitus, in which blood sugar levels rise during pregnancy, affecting the health of both mother and baby.

Because of the computational intensity and the data involved, the team chose to run the model in the cloud, send the data there, and return the results. But with AI on edge, the model can now run on the edge itself, built from the data of 10,000 to 20,000 pregnant women. With sufficient data, it is possible to derive a linear prediction model (such as logistic regression, which maps a linear score to a probability) that outputs a risk score between 0 and 1. Using ML, this can help predict gestational diabetes early on, and the model can be updated continuously as new results come in.

He also describes another use-case. Electricity meter reading is still largely a manual process, which at times leads to inaccurate billing. With a little built-in edge intelligence on a handheld device, a meter reader can visit homes and generate bills right then and there. This would make it possible to measure consumption accurately and bill on the same day without errors, cutting down many problems for consumers as well as the electricity board.
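Once the units consumed are known, generating the bill on the spot is straightforward arithmetic. Capturing the reading itself (for example, through the handheld device’s camera or an optical port) is not shown here. The sketch assumes a hypothetical slab tariff in which each block of units is charged at its own rate. Real tariffs, fixed charges, and meter rollover handling vary by electricity board.

```python
# Hypothetical slab tariff: (units in this slab, price per unit); None = no upper limit.
TARIFF = [(100, 3.0), (200, 4.5), (None, 6.0)]


def units_consumed(previous: int, current: int) -> int:
    """Units used since the last reading (meter rollover is not handled here)."""
    if current < previous:
        raise ValueError("current reading is lower than the previous reading")
    return current - previous


def bill_amount(units: int, tariff=TARIFF) -> float:
    """Charge each block of units at its slab's rate."""
    amount, remaining = 0.0, units
    for slab_size, rate in tariff:
        in_slab = remaining if slab_size is None else min(remaining, slab_size)
        amount += in_slab * rate
        remaining -= in_slab
        if remaining == 0:
            break
    return amount


units = units_consumed(previous=4520, current=4870)
print(units, bill_amount(units))  # 350 1500.0
```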

Amit Kurhekar also shares an earlier experience of identifying problems in a sachet manufacturing process. Every machine in this process runs at high speed. The sachets we see in strips are first formed as a mat, which is later cut. During this, the operator has to stop the machine and check whether any sachet is leaking because of bad thermal sealing; the seal stays intact only if nothing obstructs it. If even a single leaking sachet is found, the whole batch is put on hold and everything has to be tested manually. To detect sealing issues and stop the machine predictively, thermal cameras were installed. With the help of ML, thousands of images were generated and analysed to identify the relevant ones.
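The panel describes an ML-based approach. As a simpler stand-in that shows the edge-side idea, the sketch below applies a fixed rule to a thermal image of the seal strip. If too many pixels fall below a minimum sealing temperature, the seal is treated as suspect and the caller can stop the machine. The temperature-grid format, threshold, and tolerance are hypothetical. A production line would calibrate them, or train a classifier on labelled images as the team did.

```python
def seal_is_suspect(seal_temps_c, min_seal_c, max_cold_fraction=0.0):
    """Return True if too much of the seal strip is below sealing temperature.

    seal_temps_c: 2D list of temperatures (deg C) covering one sachet's seal,
    e.g. cropped from a thermal camera frame (hypothetical preprocessing).
    """
    cells = [t for row in seal_temps_c for t in row]
    if not cells:
        raise ValueError("empty thermal image")
    cold = sum(1 for t in cells if t < min_seal_c)  # under-heated spots
    return cold / len(cells) > max_cold_fraction


good = [[150.0, 151.0], [149.5, 152.0]]
cold_spot = [[150.0, 120.0], [149.5, 152.0]]
for name, frame in [("good", good), ("cold_spot", cold_spot)]:
    if seal_is_suspect(frame, min_seal_c=140.0):
        print(name, "-> stop machine and inspect")
    else:
        print(name, "-> ok")
```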

Further opportunities that AI on edge can provide

In the current Covid-19 scenario, startups can focus on augmented reality glasses with thermal cameras, low latency, and fast video processing. Even when people are moving, their temperature can be scanned and an alert issued if needed.

When people return to offices, face recognition could verify the identity of people coming in, removing the need to swipe a card.

So, is it worth putting AI on edge? “Yes!” says Dharmendra Kumar. “AI is capable of making decisions depending on the type of scenario and application. For connected cars, for example, AI can take a decision, but the driver should also have a say. AI can be implemented in industrial, heavy-vehicle, railway, and ATC applications. On the manufacturing side, manufacturers can build intelligence into their infrastructure on the edge.”

Agreeing, Rishi Gargi says, “It is not necessary to always allow devices to make decisions. Sometimes, it could be a hybrid model that suggests to the user the type of action to take. The device can decide if it has well-defined confidence, or prompt the user to take a certain decision. It’s a reinforcement kind of situation.”
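The hybrid behaviour Rishi Gargi describes can be expressed as a confidence gate. The device acts on its own only when the model’s top prediction clears a threshold; otherwise it returns the same prediction as a suggestion for the user to confirm. Here is a minimal sketch, with a hypothetical threshold and action names:

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    confidence: float
    automatic: bool  # True: device acts itself; False: suggest to the user


def decide(probabilities: dict[str, float], auto_threshold: float = 0.9) -> Decision:
    """Act autonomously only when the top prediction is confident enough."""
    action, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    return Decision(action, confidence, automatic=confidence >= auto_threshold)


for probs in ({"switch_off_lights": 0.96, "no_change": 0.04},
              {"switch_off_lights": 0.62, "no_change": 0.38}):
    d = decide(probs)
    mode = "doing it" if d.automatic else "asking the user about"
    print(f"{mode} {d.action} (confidence {d.confidence:.2f})")
```

Where to set the threshold is a product decision: raising it hands more decisions back to the user.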

Venkateswar Melachervu points out that everything has a tradeoff. Nonetheless, to succeed on the edge, it is critical to be driven by the utility users can derive. For that, you could look at AI-driven CPUs and microcontrollers. Mobile devices today are largely ready in terms of computing resources and storage, and TensorFlow Lite offers ready-made stacks that run across platforms such as Android and iOS. What you need to decide is whether to run the optimisation on the device itself, aggregate it in a gateway device within the edge, or combine these with the cloud at the backend.
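Deciding between on-device, gateway, and cloud execution can be framed as picking a tier that satisfies both a latency budget and any rule that raw data must stay on premises. The sketch below assumes you have measured or estimated each tier’s round-trip and inference times. The `Tier` fields, the example figures, and the “fastest feasible tier wins” rule are hypothetical simplifications; a real choice would also weigh battery, cost, and maintenance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tier:
    name: str
    rtt_ms: float            # network round trip to reach this tier (0 on the device)
    compute_ms: float        # measured or estimated inference time on this tier
    keeps_data_local: bool   # True if raw data never leaves the premises

    @property
    def latency_ms(self) -> float:
        return self.rtt_ms + self.compute_ms


def choose_tier(tiers, latency_budget_ms: float, data_must_stay_local: bool) -> Optional[Tier]:
    """Return the fastest tier meeting the latency and privacy constraints, or None."""
    feasible = [t for t in tiers
                if t.latency_ms <= latency_budget_ms
                and (t.keeps_data_local or not data_must_stay_local)]
    return min(feasible, key=lambda t: t.latency_ms, default=None)


# Illustrative figures only.
tiers = [Tier("device", 0, 60, True), Tier("gateway", 5, 15, True), Tier("cloud", 80, 2, False)]
best = choose_tier(tiers, latency_budget_ms=50, data_must_stay_local=True)
print(best.name if best else "nothing fits; revisit the model or hardware")  # gateway
```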

When designing the solution, always consider how you would migrate from one technology or architecture to another, so that you have enough flexibility and robustness.

Going the AI on edge way?

Overall, both designers and industry leaders can leverage the huge potential of AI on edge. While the technology brings the faster computing and intelligent data processing that modern demands require, it is also essential to keep in mind the cost of developing it and the security of user data.

“Fall in love with the problem and not the solution. If you fall in love with the problem, you can always find a solution, whether it is on edge or cloud,” advises Amit Kurhekar.

“There is a strong case for AI on edge. MPUs and bionic chips are making this possible. At the very base level, hardware is important for doing AI at the chip level, and since these chips are cheap, computers are also becoming cheap. At the same time, you have to decide carefully whether to put everything at the cloud level or aggregate it at the edge level. On top of the hardware sits an OS built to run AI, and above that a framework layer that lets you run optimally on the edge. The programmer gets a choice of framework for building an AI application. Further up, you can use Python or C++,” says Nikhil Bhaskaran.

BrainChip Inc’s Akida 1000, a neuromorphic processor on a chip, is designed to address the cost, latency, security, and power constraints discussed in this article.