Internet enhances capabilities

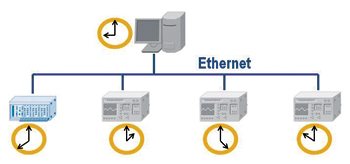

Internet connectivity has enhanced instrumentation in several areas. “LXI devices make remote data acquisition applications relatively simple, synchronisation between tests can be accomplished over the Ethernet using features like IEEE 1588, and remote diagnosis of problems helps minimise downtime,” lists Stasonis.

Dr Agrawal explains further that LXI is a recent technological standard that allows the user to plug-and-play test devices from different manufacturers and route the data on a local-area Ethernet network without worrying about the individual system unit’s bus structure, operating system or firmware. LXI-compliant equipment also allows a user to place test instrumentation close to the unit under test, thereby saving time and expense by not needing to lay out long wires to connect distant measurement instruments.
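
To give a feel for how simple remote access to a networked instrument can be, here is a minimal Python sketch that sends one SCPI query over a plain TCP socket. It rests on assumptions not taken from the article: the instrument accepts SCPI commands on a raw socket, it listens on port 5025 (a common convention, not a guarantee), and it ends each reply with a newline. The function names and the address in the usage note are hypothetical.

```python
import socket


def scpi_query(sock: socket.socket, command: str) -> str:
    """Send one SCPI command on a connected socket and return the reply line."""
    sock.sendall(command.encode("ascii") + b"\n")
    reply = bytearray()
    # Read until the newline that is assumed to terminate the reply.
    while not reply.endswith(b"\n"):
        chunk = sock.recv(4096)
        if not chunk:  # peer closed the connection
            break
        reply.extend(chunk)
    return reply.decode("ascii").strip()


def query_instrument(host: str, command: str,
                     port: int = 5025, timeout: float = 2.0) -> str:
    """Open a TCP connection to the instrument, run one query, then close.

    Port 5025 is an assumed default; check the instrument's documentation.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        return scpi_query(sock, command)
```

A call such as `query_instrument("192.168.1.50", "*IDN?")` would ask the instrument at that (made-up) address to identify itself. Because the instrument is reached by network address rather than through a dedicated bus, the same call works whether the device sits on the bench or next to a distant unit under test.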

In short, a networking technology like LXI makes it possible to logically string together physically separated T&M instruments for remote data acquisition, processing and so on. But for remote test equipment to work together, time synchronisation is very important, because in critical areas like T&M, even a microsecond’s error in recording or reacting to a measurement can prove costly. This is where the Precision Time Protocol (PTP), defined by IEEE 1588, comes in. PTP makes it possible, for the first time, to synchronise distributed clocks over Ethernet networks to within one microsecond. This in turn makes it possible to apply LXI even to critical automation and testing tasks.
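
The arithmetic at the heart of PTP synchronisation can be sketched in a few lines of Python. A master and a slave clock exchange timestamped messages, and the slave estimates its clock offset and the path delay from four timestamps. This is only an illustration of the basic delay request-response calculation, and it assumes the network delay is the same in both directions. The class and function names are hypothetical, and real implementations add hardware timestamping, filtering and clock servo logic on top.

```python
from dataclasses import dataclass


@dataclass
class PtpExchange:
    """Four timestamps from one Sync / Delay_Req exchange, in nanoseconds."""
    t1: int  # master sends Sync            (master clock)
    t2: int  # slave receives Sync          (slave clock)
    t3: int  # slave sends Delay_Req        (slave clock)
    t4: int  # master receives Delay_Req    (master clock)


def offset_and_delay(x: PtpExchange) -> tuple[float, float]:
    """Return (slave offset from master, one-way path delay) in nanoseconds.

    Assumes a symmetric path: master-to-slave delay equals slave-to-master.
    """
    master_to_slave = x.t2 - x.t1  # delay + offset
    slave_to_master = x.t4 - x.t3  # delay - offset
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay


if __name__ == "__main__":
    # Made-up numbers: slave clock runs 1500 ns ahead, path delay is 400 ns.
    exchange = PtpExchange(t1=1_000_000, t2=1_001_900,
                           t3=1_010_000, t4=1_008_900)
    offset, delay = offset_and_delay(exchange)
    print(f"offset = {offset} ns, delay = {delay} ns")
```

The slave then corrects its clock by the computed offset. Any asymmetry between the two directions shows up directly as an offset error, which is one reason sub-microsecond accuracy depends on well-behaved networks and timestamps taken close to the hardware.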

[stextbox id=”info”]Almost all of the testing industry has accepted the concept of virtual instrumentation, which equals or exceeds traditional instruments in terms of data rate, flexibility and scalability, with reduced system cost[/stextbox]

Rise of heterogeneous computing in test

Typically, an automated test system uses many types of instruments for measuring different parameters, because every instrument has unique capabilities best suited for specific measurements. The complex computations involved in the T&M process also require various specialised systems. For example, applications like RF spectrum monitoring require inline, custom signal processing and analysis that are not practical on a general-purpose central processing unit (CPU) alone. Similarly, other processes might require specialised computing resources like graphics processing units (GPUs), FPGAs or even cloud computing to handle the heavy computations. This has resulted in the use of heterogeneous computing, that is, multiple computing architectures in a single test system.

This year’s Automated Test Outlook report by National Instruments identifies this as a key trend: “The advent of modular test standards such as PXImc and AXIe that support multi-processing and the increased use of FPGAs as processing elements in modular test demonstrate the extent to which heterogeneous computing has penetrated test-system design.”

The heterogeneous computing trend has injected more power as well as more complexity into test systems, because using varied computing units means dealing with varied paradigms, platforms, architectures, programming languages, etc. However, greater interoperability and standardisation, as well as abstraction and high-level programming options, are making heterogeneous computing easier.

To an extent, the heterogeneous computing trend is being adopted on the software side too. Test engineers are beginning to mix and match modules from different software environments like LabVIEW, MATLAB and Visual Studio to form tool-chains that meet their specific requirements.

Testing on the cloud

Another trend, in some ways linked to heterogeneity, is the rise of cloud-based testing. In cases where testing requires heavy computations, testing teams are beginning to rent and use processing power and tools on the ‘cloud.’ However, this option is available only for test processes that do not require a real-time response, because reliably and securely sending data back and forth across a network introduces some latency.

Use of design IPs in both devices and testers

For quite some time now, intellectual property (IP) modules have been used in the design and development of devices and software in order to speed up development and ensure reuse of component designs. Now there is a similar trend in test software and I/O development as well. In many cases, IP components used in the design of devices are beginning to be included in the corresponding test equipment too.

Consider, for example, a wireless communications device that uses various IP units for data encoding and decoding, signal modulation and demodulation, data encryption and decryption, etc. A tester for the device would also need the same functions in order to validate the device. Instead of reinventing the wheel, the test team can reuse the same design IP components used in the device, reducing the time and cost involved in stages like design verification and validation, production test, fault detection and so on. The sharing of IP components also facilitates concurrent testing during design, production, etc to further reduce time-to-market.

According to industry reports, the availability of FPGAs on test modules is also facilitating this trend. Modules such as the Geotest GX3500, for example, have an FPGA for implementing custom logic and can support a mezzanine card that holds a custom interface, providing the flexibility needed to implement the design IP. Such modules, along with the use of common high-level design software, greatly speed up the implementation of design IP in test.

This whole concept of using design IP in test has the ability to integrate testing throughout the lifecycle, right from design to production. However, experts feel that in areas like semiconductor fabrication that rely largely on contract manufacturing, many challenges remain before validation and production test can be merged, because a lot of manufacturers still consider testing as an ‘evil’ process and do not cooperate or invest resources in advanced techniques.

That said, it is obvious that this is a ‘happening’ time in the world of T&M. The explosion of wireless standards and the meteoric developments in other technological arenas are putting a lot of pressure on the T&M industry to deliver its best. There is a lot of lateral thinking, experimentation, research and development leading to interesting products and techniques.

The author is a technically-qualified freelance writer, editor and hands-on mom based in Chennai