The era of data and information explosion is upon us. A 10.2cm (4-inch) iPhone today has 128GB of storage, far more than the average desktop computer had 15 years ago.

SanDisk, Samsung, Toshiba, Micron and SK Hynix manufacture almost all of the world's NAND flash memory in its many varieties, and there are a lot of varieties. Storage requirements vary with each project and its design constraints, so there is no one-size-fits-all solution.

So what elements does a modern engineer have to consider while selecting memory? Let us find out.

Flash memory is designed to be used up

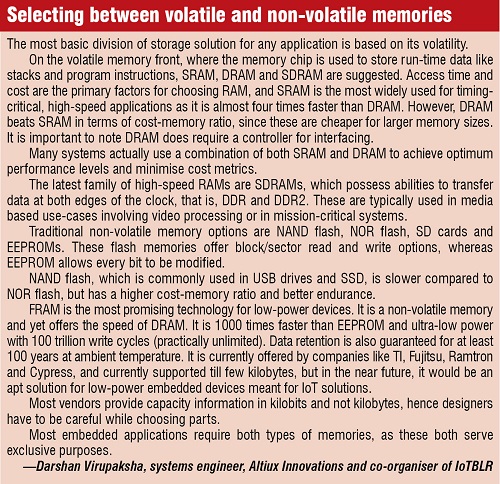

Flash memory can be written only a limited number of times: each cell tolerates a finite number of program/erase (P/E) cycles. This limit is called endurance, and it is an important parameter in memory selection.

What level of endurance will be suitable for your project? You will need to figure out the life of your product in consumers’ hands in order to answer this question. “In the smartphone segment, for instance, typical usage is for three to four years. In such cases, triple-level cell (TLC) provides the most cost-effective kind of memory,” explains Vivek Tyagi, director, business development, OEM and enterprise, SanDisk India.

Single-level cell (SLC) technology has the highest endurance: each cell can be written up to 50,000 times. But because it stores only one bit of data per cell, it costs more per gigabyte than other technologies. Multi-level cell (MLC) flash stores two bits per cell, so it packs more data into the same cell area at a lower cost per gigabyte, with the drawback of reduced endurance. Triple-level cell (TLC) flash is the latest and holds three bits per cell.
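To put numbers on the density trade-off, here is a minimal Python sketch. It relies on the general rule that a cell storing n bits must distinguish 2^n charge levels, so for the same number of cells MLC holds twice and TLC three times the raw data of SLC, while squeezing more levels into the same voltage window. The 64-billion-cell array is a hypothetical figure, not any real part.

```python
# Compare NAND cell types by bits stored per cell.
# The cell count used below is hypothetical, not a real part's geometry.

CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3}


def voltage_levels(bits_per_cell: int) -> int:
    """Number of distinct charge states a cell must resolve to hold this many bits."""
    return 2 ** bits_per_cell


def capacity_gbit(num_cells: int, bits_per_cell: int) -> float:
    """Raw capacity in gigabits (10**9 bits) for a given number of cells."""
    return num_cells * bits_per_cell / 1e9


if __name__ == "__main__":
    cells = 64 * 10**9  # hypothetical: 64 billion cells in the array
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {voltage_levels(bits)} levels, "
              f"{capacity_gbit(cells, bits):.0f} Gbit raw")
```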

If, however, you are building an industrial device that is expected to work for eight years or so, you must check whether the memory's endurance meets the requirements set by the customer or end user of that design. In most cases an industrial project does not handle multimedia files and so does not need huge capacity. That freedom can be traded for endurance by opting for SLC.
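A first-order way to check endurance against a target service life is to divide the total data the flash can absorb (capacity × rated P/E cycles) by the data actually written to the NAND each day. The Python sketch below does that under stated assumptions: ideal wear levelling and a constant write amplification factor (the ratio of NAND writes to host writes). The 4GB capacity, 10GB/day workload, write amplification of 2 and the TLC rating of 1,000 cycles are illustrative assumptions; only the 50,000-cycle SLC figure comes from the text above.

```python
# First-order flash endurance estimate.
# Assumptions (hypothetical, for illustration): ideal wear levelling spreads
# writes evenly over every block, and write amplification is constant.

def lifetime_years(capacity_gb: float, pe_cycles: int,
                   host_writes_gb_per_day: float,
                   write_amplification: float = 1.0) -> float:
    """Years until the rated program/erase cycles are consumed."""
    total_writable_gb = capacity_gb * pe_cycles  # data the NAND can absorb
    nand_writes_per_day = host_writes_gb_per_day * write_amplification
    return total_writable_gb / (nand_writes_per_day * 365)


def meets_requirement(required_years: float, **part) -> bool:
    """True if the estimated lifetime covers the required service life."""
    return lifetime_years(**part) >= required_years


if __name__ == "__main__":
    design = dict(capacity_gb=4, host_writes_gb_per_day=10, write_amplification=2)
    for name, cycles in (("SLC", 50_000), ("TLC (assumed rating)", 1_000)):
        years = lifetime_years(pe_cycles=cycles, **design)
        ok = meets_requirement(8, pe_cycles=cycles, **design)
        print(f"{name}: about {years:.1f} years, meets 8-year target: {ok}")
```

Under these assumptions the SLC part lasts well beyond eight years while the TLC part does not. Real vendor endurance ratings and measured write amplification should replace the placeholder numbers.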

Typically, when you buy eMMC flash, your vendor knows about endurance and will offer you several choices based on your requirements. If you are looking at TLC, Samsung and SanDisk are the only vendors offering it as of now. Other vendors are expected to follow suit later this year.

Getting a hold on performance

Depending on your product design, you may have a fixed requirement for how long your device can take to read from or write to flash. These are the read-access and write-access times.

Whether you are using apps like WhatsApp, taking photos or recording videos, all of these activities run on your smartphone's flash memory. Because they run simultaneously, excellent read and write times are critical. In other applications, such as an Internet of Things (IoT) gateway or a set-top box, speed might not be as critical.

Playing a single movie might not be very demanding on the memory, but running several tasks at once, such as imaging, music and other applications, makes speed critical. Companies like SanDisk differentiate their product lines by performance and endurance: their iNAND products range from standard to ultra, and finally extreme for the highest-performing chips.

Understanding what capacity your project really needs

Industrial devices do not usually deal with memory-heavy multimedia files or videos, and hence can make do with cheaper, lower-capacity TLC parts. Where an industrial device requires higher performance, it can use SLC NAND instead of TLC.

Smartphones and consumer electronics are a different story: storage expectations have grown to 128GB for smartphones launched this year. In these cases, TLC is the better choice over SLC because the designer gets higher capacity within the same budget. Moreover, end users weigh on-device storage heavily when making a purchase decision.

Memory capacity requirements are also based on the kind of operating system (OS) that you intend to run on the device. Microkernels are designed to work using minimal system resources and can be used with limited memory capacity. On the other hand, a full-featured OS requires more memory and plentiful system resources.

For every embedded design, software considerations are very important. “However, the term embedded design is itself very broad, and so careful consideration has to be taken by the architect or system designer. The designer has to think on the amount of writing to be done. So, in field-deployed systems, we need to know what the system needs to do (application) versus what is demanded by the OS. Hence, there is a need to know what is written in storage,” explains Sambit Sengupta, demand creation manager, Avnet Electronics Marketing. RTOSes are highly application-optimized, and embedded designs that use them generally use solid-state drives (SSDs). With a custom OS, however, bad-block management needs careful attention, so such designs mostly use eMMC-based memory.
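The idea behind bad-block management can be sketched in a few lines of Python. This is a hypothetical, simplified scheme, not any vendor's or OS's algorithm: logical blocks are mapped onto known-good physical blocks, a few good blocks are held back as spares, and a block that fails in the field is remapped to a spare. A real implementation would also copy the data, handle error correction and persist its tables in flash; eMMC parts do this work in their built-in controller.

```python
# Simplified bad-block management for raw NAND (hypothetical scheme for
# illustration; real controllers also move data, run ECC and persist tables).
from __future__ import annotations


class BadBlockManager:
    def __init__(self, total_blocks: int, factory_bad: set[int], spare_count: int):
        good = [b for b in range(total_blocks) if b not in factory_bad]
        if len(good) <= spare_count:
            raise ValueError("not enough good blocks for the requested spares")
        split = len(good) - spare_count
        self.mapping = good[:split]   # logical index -> physical block
        self.spares = good[split:]    # held back to replace blocks that fail later
        self.bad = set(factory_bad)   # bad-block table

    @property
    def logical_blocks(self) -> int:
        return len(self.mapping)

    def physical(self, logical: int) -> int:
        """Translate a logical block number to the physical block behind it."""
        return self.mapping[logical]

    def mark_bad(self, logical: int) -> int:
        """Retire a failing block and remap its logical number to a spare."""
        if not self.spares:
            raise RuntimeError("spare pool exhausted")
        self.bad.add(self.mapping[logical])
        self.mapping[logical] = self.spares.pop(0)
        return self.mapping[logical]


if __name__ == "__main__":
    bbm = BadBlockManager(total_blocks=10, factory_bad={2, 7}, spare_count=2)
    print(bbm.logical_blocks, bbm.physical(2))
    print(bbm.mark_bad(2), sorted(bbm.bad))
```

For example, with ten blocks of which 2 and 7 are factory-marked bad and two spares reserved, logical block 2 first maps to physical block 3 and, after `mark_bad(2)`, to spare block 8.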

Compatibility with platforms

Not all storage devices are compatible with all chipsets, field programmable gate arrays (FPGAs) or microcontroller families.

For example, the MT29F family of NAND flash by Micron is compatible with Freescale’s Kinetis K70 microcontroller units (MCUs), TI’s Sitara ARM9 processors and Xilinx’s Zynq-7000 all-programmable system-on-chips (SoCs), but not suited for Freescale’s Kinetis K50 MCUs and Xilinx’s Virtex FPGAs. Usually, storage solution vendors provide ready reckoners to check the compatibility of their product with different available platforms.

A wider data bus gives a faster data-transfer rate, provided the host supports the extra data lines. A memory with a 32-bit data width fetches more data per cycle than one with a 16-bit data width, which in turn fetches more than a memory with an 8-bit data width.
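The scaling is easy to see with a back-of-the-envelope calculation. The Python sketch below assumes one transfer per clock cycle and ignores command and protocol overhead, and the 50MHz clock is a hypothetical figure. Under those assumptions, peak throughput is simply the bus width in bytes multiplied by the clock rate, so each doubling of width doubles it.

```python
# Peak interface throughput versus data-bus width.
# Assumes one transfer per clock cycle and no protocol overhead; the 50 MHz
# clock is a hypothetical figure, not any particular part's rating.

def peak_mb_per_s(bus_width_bits: int, clock_mhz: float) -> float:
    """Peak throughput in MB/s (10**6 bytes per second)."""
    bytes_per_cycle = bus_width_bits / 8
    return bytes_per_cycle * clock_mhz  # bytes/cycle x 10**6 cycles/s


if __name__ == "__main__":
    for width in (8, 16, 32):
        print(f"{width:2d}-bit bus @ 50 MHz: {peak_mb_per_s(width, 50):.0f} MB/s")
```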

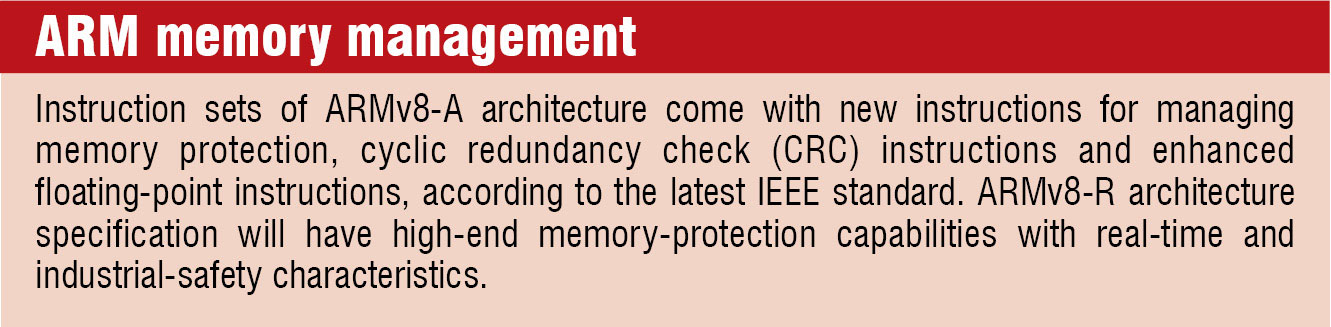

According to Guru Ganesan, managing director, ARM, “There is a lot of developer as well as application migration from 8- and 16-bit MCUs to 32-bit ones today.” “The chief reason being that typical modern-day or IoT type of applications require more memory (determined by the address bus size), as well as larger data buses for quicker and more efficient operation from a layperson’s standpoint,” he explains in an interview with EFY.

Evolving circumstances and the importance of supply

The first generation of engineers using NAND memory had to not only select the memory but also figure out how to manage it. “The NAND was manufactured on a thin small outline package (TSOP), and embedded design engineers had to write the code on how to manage read, write on this memory, as well as figure out error correction,” explains Vivek Tyagi.


Moreover, NAND characteristics varied from one supplier to the next, so if a technical or cost requirement forced a change of supplier, the engineer had to study the new supplier's data sheets and rewrite the memory-management software. Not many engineers looked forward to this.

Embedded multimedia card (eMMC) technology combines the controller and NAND flash in one package. Since the supplier now owns the entire hardware, it also writes the software that manages the memory, making it easier for the engineer to switch suppliers.

Supply problems are not rare either. For instance, memory market research company DRAMeXchange predicted last year that NAND flash pricing would suffer from a temporary shortage, caused by manufacturers switching to newer NAND production methods, as well as by the release of next-generation iPhones and demand for already-constrained LPDDR3 mobile DRAM.

Vivek adds, “Today most embedded NAND flash is made according to eMMC standard, whether it is for the memory being used for automotive solutions, point-of-sale devices or other embedded systems. This shift made it possible for vendors to make better decisions on selecting memory without worrying about rewriting the entire software.”

In an interview at an industry event in Bengaluru, Bill O’Connell, key account manager, Swissbit, explained that memory in medical and casino-gaming electronics must be highly reliable, while memory in strategic and industrial electronics must additionally operate over a wider temperature range, from -40°C to 85°C. Memory meeting these specifications can be harder to source than standard parts, so you might need specialised vendors to source it for you.

Costs can escalate quickly

Ultimately, the per-unit cost of the memory device is almost always a major constraint for system architects, as it is for any system component.

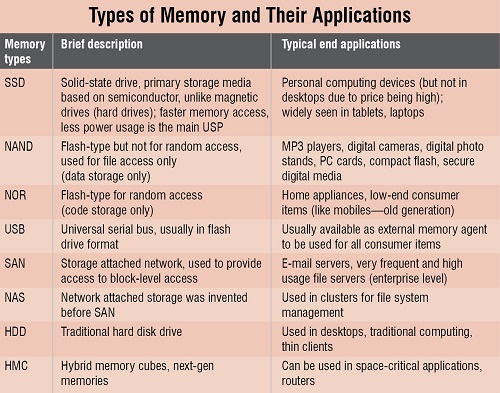

Some devices, like SSDs and SRAM, offer large storage capacity, high speed or low power consumption, but their cost is very high. The designer may have to compromise a little on these factors and opt for HDDs, EEPROM or DRAM instead. USB drives and flash devices are typically cost-effective. However, flash devices that come with added features, like wear levelling and bad-block management, are priced higher. The cost of remote storage depends on the number of devices sharing the memory.
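Wear levelling, one of the features that raises the price of a flash device, is conceptually simple. The Python sketch below shows one basic form, dynamic wear levelling: every write goes to the free block with the lowest erase count, tracked in a min-heap from the standard `heapq` module. It is a hypothetical illustration, not the algorithm of any specific controller; production firmware also relocates rarely changed (static) data so that its blocks share the wear.

```python
# Minimal dynamic wear-levelling sketch (hypothetical, not a specific
# controller's algorithm): each write goes to the least-erased free block,
# so wear spreads across the device instead of piling up on a few blocks.
import heapq


class WearLeveler:
    def __init__(self, num_blocks: int):
        # Min-heap of (erase_count, block_id) for blocks available for writing.
        self.free = [(0, b) for b in range(num_blocks)]
        heapq.heapify(self.free)
        self.erase_counts = [0] * num_blocks

    def allocate(self) -> int:
        """Pick the least-worn free block for the next write."""
        _, block = heapq.heappop(self.free)
        return block

    def release(self, block: int) -> None:
        """Erase a block holding stale data and return it to the free pool."""
        self.erase_counts[block] += 1
        heapq.heappush(self.free, (self.erase_counts[block], block))


if __name__ == "__main__":
    wl = WearLeveler(num_blocks=4)
    for _ in range(100):
        block = wl.allocate()  # write new data here...
        wl.release(block)      # ...later the data goes stale and the block is erased
    print(wl.erase_counts)
```

Cycling 100 writes through four blocks this way leaves each block with 25 erases; rewriting the same block every time would instead put all 100 erases on it.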

“Even though people keep saying that their memory is unlimited, most systems are still designed for optimal resources (with respect to the hardware and software), and for optimal size and form factor. They are interested in finishing it with the bare minimum, as they have to pay for each bit of extra memory,” explains Shinto Joseph, operations and sales director, LDRA Technology Pvt Ltd, in an interview with EFY. This extra cost might be negligible for one or two chips, but it adds up quickly across the volumes involved in mass-producing and shipping a consumer product.
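The arithmetic behind that point is simple multiplication, but it is worth seeing at scale. In the Python sketch below, the extra 40 cents of memory per unit and the production volumes are hypothetical numbers chosen only for illustration; amounts are kept in integer cents to avoid floating-point rounding.

```python
# How a small per-unit memory cost scales with production volume.
# The per-unit cost and the volumes are hypothetical figures.

EXTRA_CENTS_PER_UNIT = 40


def added_cost_cents(extra_cents_per_unit: int, units: int) -> int:
    """Total extra cost, in cents, of adding memory to every unit built."""
    return extra_cents_per_unit * units


if __name__ == "__main__":
    for units in (2, 10_000, 1_000_000):
        total = added_cost_cents(EXTRA_CENTS_PER_UNIT, units)
        print(f"{units:>9,} units: ${total / 100:,.2f}")
```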

Infrastructure systems, too, require high-end, reliable memory of the kind used in critical systems, and every gigabyte (GB) of it costs a lot. This also pushes designers to cap the amount of memory used.