NVIDIA and UC San Diego researchers have created AnyTeleop, a computer vision-powered teleoperation system for diverse applications.

Recent advances in robotics and artificial intelligence (AI) have expanded what teleoperation can do, letting people carry out tasks remotely, such as giving museum tours or performing maintenance in hard-to-reach areas. However, most teleoperation systems are designed for a specific setting and robot, which limits how widely they can be adopted.

Researchers at NVIDIA and UC San Diego have developed AnyTeleop, a computer vision-based teleoperation system designed to work across a wider range of scenarios. Much prior research has focused on learning from demonstrations in which a human teleoperates, or directly guides, the robot. This approach faces two challenges. First, training a high-performing model requires many demonstrations. Second, the setups often rely on expensive equipment or sensing hardware built for a single robot or deployment environment.

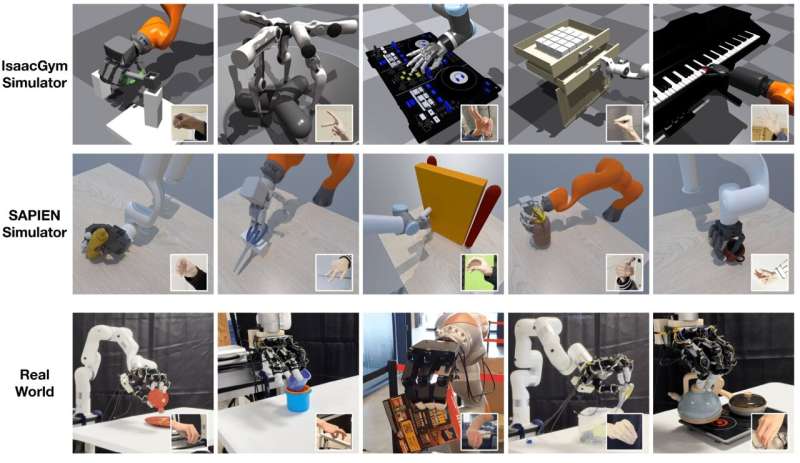

The team aimed to build a teleoperation system that is affordable, simple, and versatile across tasks, environments, and robots. To refine it, they used the system to control both virtual robots in simulation and real robots in physical settings; working in simulation reduces the need to buy and build many physical robots. Using one or more cameras, the system tracks the pose of the operator's hand and retargets it to drive a multi-fingered robot hand. The position of the operator's wrist, meanwhile, drives the robot arm's movement through a CUDA-accelerated motion planner.
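The retargeting step can be illustrated with a toy example. Hand-motion retargeting is commonly posed as an optimization over the robot's joint angles; the sketch below is not AnyTeleop's code. It assumes a hypothetical planar finger with two joints and link lengths `l1` and `l2`, a human fingertip vector already measured relative to the finger base, and a factor `scale` that accounts for the size difference between human and robot hands. It minimizes the squared fingertip error plus a small `smooth` term that keeps each frame's solution close to the previous frame's, using damped Gauss-Newton steps with a backtracking line search (an illustrative solver choice, not necessarily the one the authors use).

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def fingertip(q: Vec2, l1: float, l2: float) -> Vec2:
    """Forward kinematics of a hypothetical planar two-link robot finger."""
    q1, q2 = q
    return (l1 * math.cos(q1) + l2 * math.cos(q1 + q2),
            l1 * math.sin(q1) + l2 * math.sin(q1 + q2))


def retarget(human_vec: Vec2, q_prev: Vec2, l1: float = 0.05, l2: float = 0.04,
             scale: float = 1.0, smooth: float = 1e-6,
             limits: Tuple[float, float] = (0.0, math.pi / 2),
             iters: int = 100) -> Vec2:
    """Find joint angles whose fingertip matches scale * human_vec.

    Minimizes ||f(q) - scale * human_vec||^2 + smooth * ||q - q_prev||^2
    with damped Gauss-Newton steps, backtracking, and joint-limit clipping.
    """
    lo, hi = limits
    tx, ty = scale * human_vec[0], scale * human_vec[1]

    def clip(q: Vec2) -> Vec2:
        return (min(max(q[0], lo), hi), min(max(q[1], lo), hi))

    def cost(q: Vec2) -> float:
        fx, fy = fingertip(q, l1, l2)
        reg = (q[0] - q_prev[0]) ** 2 + (q[1] - q_prev[1]) ** 2
        return (fx - tx) ** 2 + (fy - ty) ** 2 + smooth * reg

    q = clip(q_prev)  # warm-start from the previous frame's solution
    for _ in range(iters):
        q1, q2 = q
        fx, fy = fingertip(q, l1, l2)
        ex, ey = tx - fx, ty - fy  # fingertip position error
        s1, c1 = math.sin(q1), math.cos(q1)
        s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
        # Jacobian of the fingertip position with respect to (q1, q2).
        j11, j12 = -l1 * s1 - l2 * s12, -l2 * s12
        j21, j22 = l1 * c1 + l2 * c12, l2 * c12
        # J^T e - smooth * (q - q_prev): half the negative gradient of cost.
        g1 = j11 * ex + j21 * ey - smooth * (q1 - q_prev[0])
        g2 = j12 * ex + j22 * ey - smooth * (q2 - q_prev[1])
        # Normal matrix J^T J + smooth * I, positive definite when smooth > 0.
        h11 = j11 * j11 + j21 * j21 + smooth
        h12 = j11 * j12 + j21 * j22
        h22 = j12 * j12 + j22 * j22 + smooth
        det = h11 * h22 - h12 * h12
        d1 = (h22 * g1 - h12 * g2) / det
        d2 = (h11 * g2 - h12 * g1) / det
        # Backtracking: halve the step until the clipped update lowers the cost.
        current, step = cost(q), 1.0
        while step > 1e-6:
            candidate = clip((q1 + step * d1, q2 + step * d2))
            if cost(candidate) < current:
                q = candidate
                break
            step *= 0.5
        else:
            break  # no improving step found: treat as converged
    return q
```

Warm-starting from the previous frame and penalizing large jumps is one common way to keep a robot hand from jittering when the hand tracker is noisy. For a real multi-fingered hand, the same idea would sum the error over several keypoint vectors and many more joints.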

Unlike many earlier teleoperation systems, AnyTeleop is compatible with a range of robot arms, robot hands, and camera configurations, and it works in both simulated and real-world environments. It supports operators working either close to the robot or far away from it. The platform can also be used to collect human demonstration data that captures how people move while performing specific tasks; such data can then help train robots to perform those tasks autonomously. In preliminary tests, AnyTeleop outperformed a teleoperation system built for a specific robot, even on the robot that system was designed for, suggesting it could broaden how teleoperation is used.
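To make "demonstration data" more concrete, here is a minimal sketch of how one teleoperated episode might be stored. The class names, field names, and JSON format are hypothetical, chosen for illustration, and are not AnyTeleop's actual data format. Each step stores a timestamp, the robot's measured joint positions (what a learned policy would observe), the joint targets produced by teleoperation (the action to imitate), and the operator's raw hand keypoints for reference.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Tuple


@dataclass
class DemoStep:
    """One time step of a teleoperated episode (hypothetical schema)."""
    t: float                           # seconds since the episode started
    robot_qpos: List[float]            # measured robot joint positions (observation)
    action: List[float]                # joint targets sent by teleoperation (action)
    hand_keypoints: List[List[float]]  # operator's detected 3-D hand keypoints


@dataclass
class Demonstration:
    task: str
    steps: List[DemoStep] = field(default_factory=list)

    def record(self, t: float, robot_qpos: List[float], action: List[float],
               hand_keypoints: List[List[float]]) -> None:
        """Append one step, rejecting out-of-order timestamps."""
        if self.steps and t <= self.steps[-1].t:
            raise ValueError("timestamps must strictly increase")
        self.steps.append(DemoStep(t, list(robot_qpos), list(action),
                                   [list(p) for p in hand_keypoints]))

    def to_json(self) -> str:
        # asdict() recursively converts the nested DemoStep objects to dicts.
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, text: str) -> "Demonstration":
        data = json.loads(text)
        return cls(data["task"], [DemoStep(**s) for s in data["steps"]])

    def training_pairs(self) -> List[Tuple[List[float], List[float]]]:
        """(observation, action) pairs of the kind used for imitation learning."""
        return [(s.robot_qpos, s.action) for s in self.steps]
```

Using the robot's own state, rather than the operator's hand, as the observation reflects the end goal: a policy trained on such pairs must later act with no human in the loop.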

NVIDIA plans to release an open-source version of AnyTeleop so that research teams around the world can experiment with it and adapt it to their own robots. The platform could help expand the deployment of teleoperation systems and the collection of training data for robotic manipulators.

Reference: Yuzhe Qin et al., AnyTeleop: A General Vision-Based Dexterous Robot Arm-Hand Teleoperation System, arXiv (2023). DOI: 10.48550/arXiv.2307.04577