Researchers at Seoul National University have introduced a new deep learning framework designed specifically to improve the skills of a sketching robot.

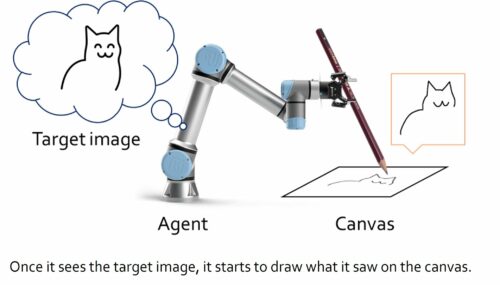

The new framework enables a sketching robot to learn both motor control and stroke-based rendering simultaneously. Rather than developing a generative model, the team represents drawing as a sequential decision process. This process resembles the way a person draws a sketch one line at a time with a pen or pencil.
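To make the idea of drawing as a sequential decision process concrete, here is a minimal sketch in Python. It is not the team's implementation: the `SketchEnv` class, the `draw_line` helper, and the pixel-difference reward are all assumptions chosen for illustration. Each action is one straight stroke from the current pen position, and the reward is how much that stroke reduces the difference between the canvas and the target image.

```python
import numpy as np

def draw_line(canvas, p0, p1):
    """Rasterize a straight stroke from p0 to p1 (row, col) onto a copy of canvas."""
    out = canvas.copy()
    n = int(max(abs(p1[0] - p0[0]), abs(p1[1] - p0[1]))) + 1
    rows = np.linspace(p0[0], p1[0], n).round().astype(int)
    cols = np.linspace(p0[1], p1[1], n).round().astype(int)
    out[rows, cols] = 1.0
    return out

class SketchEnv:
    """Hypothetical toy environment: one action is one stroke from the pen position."""

    def __init__(self, target):
        self.target = target
        self.reset()

    def reset(self):
        self.canvas = np.zeros_like(self.target)
        self.pen = (0, 0)
        return self.canvas, self.pen

    def step(self, endpoint):
        # Assumed reward: reduction in pixel-wise difference to the target.
        before = np.abs(self.target - self.canvas).sum()
        self.canvas = draw_line(self.canvas, self.pen, endpoint)
        self.pen = endpoint
        after = np.abs(self.target - self.canvas).sum()
        return (self.canvas, self.pen), before - after

# Usage: the target is a diagonal line on an 8x8 canvas.
target = draw_line(np.zeros((8, 8)), (0, 0), (7, 7))
env = SketchEnv(target)
_, good = env.step((7, 7))  # stroke that matches the target: positive reward
_, bad = env.step((7, 0))   # stroke that adds wrong pixels: negative reward
print(good, bad)
```

An agent trained by trial and error would try to pick the sequence of stroke endpoints that maximizes the total reward, which is the sense in which sketching becomes a decision process rather than a one-shot image generation.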

Other teams have developed deep learning algorithms for ‘robot artists’. However, these models required immense training datasets containing sketches. They also required an inverse kinematics approach to teach the robot to manipulate a pen and sketch on paper.

In contrast, the framework developed by Ganghun Lee and his colleagues was not trained on real-world drawing examples. Instead, it can develop its own drawing strategies over time through trial and error.

The model created by the team has two ‘virtual agents’: an upper-class agent and a lower-class agent. The upper-class agent learns new drawing tricks, while the lower-class agent learns movement strategies.

The two virtual agents were trained individually using reinforcement learning techniques and were coupled only after training was complete. The team tested their combined performance using a 6-DoF robotic arm with a 2D gripper. The initial test results were encouraging: the algorithm enabled the robotic agent to produce good sketches of specific images.
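The coupling of two separately prepared agents can be illustrated with a short Python sketch. Everything here is an assumption for illustration: `upper_agent` and `lower_agent` are hand-written stand-ins rather than trained reinforcement learning policies, and `MAX_STEP` is a made-up limit on pen movement per control tick. The point is only the interface: the upper agent decides where to draw next, and the lower agent decides how to move the pen there.

```python
import numpy as np

MAX_STEP = 0.5  # assumed limit on pen displacement per control tick

def upper_agent(pen, remaining):
    """Stand-in for a trained upper agent: pick the nearest undrawn point."""
    dists = np.linalg.norm(remaining - pen, axis=1)
    return int(np.argmin(dists))

def lower_agent(pen, goal):
    """Stand-in for a trained lower agent: one bounded move toward the goal."""
    delta = goal - pen
    dist = np.linalg.norm(delta)
    if dist <= MAX_STEP:
        return goal.copy()
    return pen + delta / dist * MAX_STEP

def run_coupled(points, start):
    """Couple the two agents: the upper sets goals, the lower executes moves."""
    pen = np.asarray(start, dtype=float)
    remaining = np.asarray(points, dtype=float)
    visited, ticks = [], 0
    while len(remaining) > 0:
        idx = upper_agent(pen, remaining)
        goal = remaining[idx]
        while not np.allclose(pen, goal):
            pen = lower_agent(pen, goal)
            ticks += 1
        visited.append(tuple(goal))
        remaining = np.delete(remaining, idx, axis=0)
    return visited, ticks

# Usage: three points on a line, visited in nearest-first order.
visited, ticks = run_coupled([(2, 0), (1, 0), (3, 0)], start=(0, 0))
print(visited, ticks)
```

Because the two functions only communicate through a goal position, either one could be replaced or retrained without touching the other, which is one plausible reason for training them separately and coupling them afterward.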

The framework could be used to improve the performance of robotic sketching agents in the future. The team is also working on similar creative, learning-based models.

“Recent deep learning techniques have shown astonishing results in the artistic area, but most of them are about generative models which yield whole pixel outcomes at once,” said Ganghun Lee, the first author of the paper.