MIT researchers have used diffusion models, a type of generative AI, to create a unified policy that allows robots to adapt to diverse tasks across various settings.

To train a robot to use tools such as hammers and wrenches, you need a vast amount of diverse data, from colour images to tactile imprints, drawn from varied domains such as simulations or human demonstrations. Efficiently merging such diverse data into a single machine-learning model is challenging, however. Consequently, robots are often trained on limited, task-specific data and struggle to adapt to new tasks in unfamiliar environments.

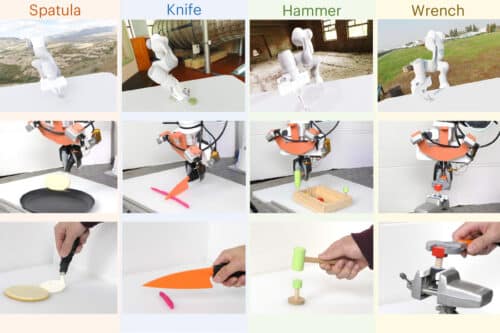

MIT researchers have developed a method that uses diffusion models, a type of generative AI, to merge data from various domains and tasks. They train a separate model on each task-specific dataset to learn a distinct policy, then combine these policies into a general policy that allows a robot to perform and adapt to multiple tasks across different settings. In simulations and real-world experiments, the combined policy improved task performance and handled new challenges effectively.

Combining disparate datasets

The researchers have refined a technique based on diffusion models to integrate smaller datasets gathered from various sources, such as robotic warehouses. Rather than generating images, these models are trained to produce robot trajectories: noise is added to the trajectories in a training dataset, and the model learns to remove it step by step, refining a noisy input into a usable trajectory. The method, called Policy Composition (PoCo), trains a separate diffusion model on each dataset, such as human video demonstrations or teleoperated robot manoeuvres. The individual policies are then combined through a weighted process, allowing the robot to generalise across multiple tasks.
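To make the idea of a weighted combination concrete, here is a minimal toy sketch in Python. It is not the researchers' implementation: the function names (`make_toy_policy`, `compose_noise`, `denoise`), the fixed step size and the hand-written "policies" are all assumptions made for illustration. A real system would use trained neural networks and a proper noise schedule. The sketch only shows one plausible way that noise estimates from several policies could be blended at each refinement step.

```python
from typing import Callable, List, Sequence

Trajectory = List[float]
# Hypothetical stand-in for a trained diffusion policy: given a noisy
# trajectory and a step index, it returns an estimate of the noise.
NoisePredictor = Callable[[Trajectory, int], Trajectory]


def make_toy_policy(target: Sequence[float]) -> NoisePredictor:
    """Toy predictor: treats any deviation from `target` as noise."""
    def predict(traj: Trajectory, step: int) -> Trajectory:
        return [x - t for x, t in zip(traj, target)]
    return predict


def compose_noise(
    policies: Sequence[NoisePredictor],
    weights: Sequence[float],
    traj: Trajectory,
    step: int,
) -> Trajectory:
    """Blend the policies' noise estimates using normalised weights."""
    total = sum(weights)
    combined = [0.0] * len(traj)
    for policy, weight in zip(policies, weights):
        estimate = policy(traj, step)
        for i, value in enumerate(estimate):
            combined[i] += (weight / total) * value
    return combined


def denoise(
    policies: Sequence[NoisePredictor],
    weights: Sequence[float],
    start: Trajectory,
    steps: int = 50,
    step_size: float = 0.2,
) -> Trajectory:
    """Refine a noisy trajectory by repeatedly removing the blended noise."""
    traj = list(start)
    for step in reversed(range(steps)):
        noise = compose_noise(policies, weights, traj, step)
        traj = [x - step_size * n for x, n in zip(traj, noise)]
    return traj
```

In this toy setting, calling `denoise` with two policies and weights of 3 and 1 pulls the trajectory towards a point three-quarters of the way to the first policy's preferred trajectory and one-quarter of the way to the second's. Changing the weights shifts that balance without retraining either policy, which is the property the composition approach relies on.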

Greater than the sum of its parts

The researchers found that because the diffusion policies are trained separately, they could mix and match them to optimise performance on a given task. Adding a new type of data is also simpler: they train an additional model on it and fold that policy into the mix, rather than restarting the whole process from scratch. They tested PoCo on robotic arms, both in simulation and on real-world tool-use tasks such as using a hammer and a spatula, where it improved task performance by 20 per cent compared with traditional methods.

In the future, the researchers aim to apply this technique to more complex tasks in which a robot switches between tools, and to incorporate larger robotics datasets to enhance performance.