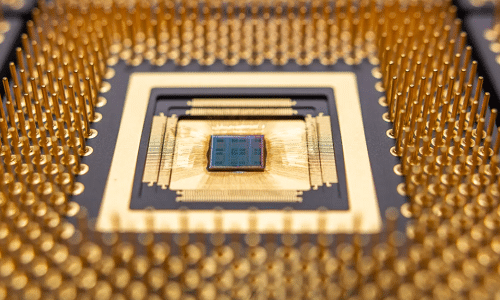

EnCharge's computing platform chip can offer 20x higher performance-per-watt than existing AI accelerators.

The EnCharge chipset will thus drastically reduce cost, time and energy consumption in AI computations.

EnCharge’s new computing platform can achieve 150 TOPS/W (tera-operations per second per watt). This lets the processor run larger AI models with higher accuracy and better resolution, and process data from multiple camera channels simultaneously. The test chip achieves about 20x higher performance-per-watt and 14x higher performance per dollar relative to comparable AI accelerators, offering revolutionary gains without time-consuming, cost-prohibitive workarounds. The platform uses a technique called in-memory computing: the design stores data and runs computation in the same place on the chip.
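To make the efficiency figure more concrete, the short sketch below converts a TOPS/W rating into energy per operation (1 TOPS/W equals 10^12 operations per joule). The 150 TOPS/W value comes from the article. The 7.5 TOPS/W "baseline" is a hypothetical number, obtained by assuming the "about 20x" advantage applies to the 150 TOPS/W figure; it does not describe any specific competing product.

```python
def energy_per_op_fj(tops_per_watt: float) -> float:
    """Convert a TOPS/W efficiency figure into energy per operation, in femtojoules."""
    # 1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule
    ops_per_joule = tops_per_watt * 1e12
    joules_per_op = 1.0 / ops_per_joule
    return joules_per_op * 1e15  # 1 J = 1e15 fJ


encharge_fj = energy_per_op_fj(150.0)  # figure quoted in the article

# Assumption (hypothetical): treat the "about 20x" advantage as applying to the
# 150 TOPS/W figure, implying a baseline accelerator at 150 / 20 = 7.5 TOPS/W.
baseline_fj = energy_per_op_fj(150.0 / 20)

print(f"EnCharge (150 TOPS/W): {encharge_fj:.2f} fJ/op")
print(f"Hypothetical baseline (7.5 TOPS/W): {baseline_fj:.2f} fJ/op")
```

Under these assumptions, 150 TOPS/W works out to roughly 6.67 fJ per operation, versus about 133.33 fJ for the hypothetical baseline. At equal throughput, a 20x efficiency gain means 20x less energy per operation.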

“EnCharge AI is unlocking the incredible capability that artificial intelligence can deliver by providing a platform that can match its computing needs, and performing AI computations close to where the data in the real world is being generated,” said company founder Naveen Verma, professor of electrical and computer engineering and director of the Keller Center for Innovation in Engineering Education at Princeton University.

According to the company, the EnCharge AI team designed a new kind of computer chip that lets AI run locally, directly on the device. To achieve this, the company built its chip from highly precise circuits that can be considerably smaller and more energy-efficient than today’s digital computing engines, which allows the circuits to be integrated within the chip’s memory. This in-memory approach provides the high efficiency that modern AI-driven applications require.
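The payoff of computing inside memory comes largely from avoiding data movement. The toy model below is a simplified illustration, not EnCharge's actual design. It computes the same matrix-vector product, the core operation in neural-network layers, two ways and counts hypothetical "transfers" between memory and compute. In the conventional model, every weight is moved out of memory to a separate compute engine. In the in-memory model, the weights stay in the array, and only the inputs and results cross the boundary. The function names and the transfer-counting scheme are illustrative assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def conventional_mvm(weights: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Matrix-vector product where each weight is fetched from memory to a separate compute unit."""
    transfers = 0
    y: List[float] = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            transfers += 1  # weight moved from memory to the compute engine
            acc += w * xi
        y.append(acc)
    transfers += len(x) + len(y)  # inputs sent in, results read out
    return y, transfers


def in_memory_mvm(weights: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Same product, modeled as computed inside the memory array: weights never leave it."""
    y = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    transfers = len(x) + len(y)  # only inputs broadcast in and results read out
    return y, transfers


W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
x = [1.0, 0.0, -1.0]
print(conventional_mvm(W, x))  # ([-2.0, -2.0], 11)
print(in_memory_mvm(W, x))     # ([-2.0, -2.0], 5)
```

Both functions return the same result. In this model, the conventional transfer count grows with the number of weights (rows × columns), while the in-memory count grows only with rows + columns, and that gap widens as models get larger.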

The chip could cut costs and improve performance in applications such as robots in large-scale warehouses, retail automation (for example, self-checkout), safety and security operations, and drones for delivery and industrial purposes. The chips are programmable, so they can work with different types of AI algorithms, and scalable, so applications can grow in complexity.