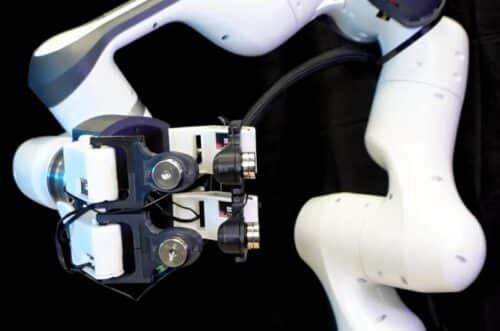

Researchers from Duke University have built a system with a robotic hand that carries a microphone in each of its four fingertips, allowing robots to sense the objects they interact with.

Imagine being in a movie theater, curious about how much soda is left in your cup. Without opening it, you shake the cup to hear the ice rattle, gauging if a refill is needed. As you put the drink down, you tap the armrest to check if it’s wood; the sound hints at plastic. We often use such vibrations to interpret our surroundings, a skill researchers are now adapting to robots, expanding their sensory abilities.

Researchers from Duke University have developed a system called SonicSense that lets robots perceive objects through the vibrations they produce. SonicSense includes a robotic hand with four fingers, each with a contact microphone at the fingertip. These microphones capture the vibrations generated when the robot taps, grasps, or shakes an object. Because they pick up vibrations through contact with the object, the system can isolate these sounds from surrounding noise.

SonicSense extracts frequency features from the signals detected during these interactions and combines them with its prior knowledge and recent advances in AI to determine an object's material and shape. An unfamiliar object may take up to 20 interactions to identify, while a known object can be identified with as few as four.
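To make the idea of "frequency features" concrete, here is a minimal Python sketch. It is not the SonicSense pipeline, which relies on learned models. It is a toy illustration under stated assumptions: the tap recordings are synthetic decaying sinusoids, the sample rate, frequencies, and material labels are invented for the example, and classification is a simple nearest-reference match. The helper names `synth_tap`, `band_energies`, and `classify` are hypothetical.

```python
import numpy as np

SAMPLE_RATE = 16_000  # Hz; assumed for this sketch, not taken from the paper


def synth_tap(freq_hz, decay, seed, duration=0.25):
    """Synthetic stand-in for a tap recording: a decaying sinusoid plus light noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(SAMPLE_RATE * duration)) / SAMPLE_RATE
    ring = np.exp(-decay * t) * np.sin(2 * np.pi * freq_hz * t)
    return ring + 0.01 * rng.standard_normal(t.size)


def band_energies(signal, n_bands=16):
    """Fraction of spectral energy in each of n_bands equal-width frequency bands."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    energy = np.array([band.sum() for band in np.array_split(power, n_bands)])
    return energy / energy.sum()


def classify(signal, references):
    """Return the label whose reference feature vector is closest to the signal's."""
    feats = band_energies(signal)
    return min(references, key=lambda name: np.linalg.norm(feats - references[name]))


# Hypothetical reference taps: a dull, fast-decaying one and a bright, ringing one.
references = {
    "plastic": band_energies(synth_tap(600, 60, seed=0)),
    "metal": band_energies(synth_tap(3200, 8, seed=1)),
}

label = classify(synth_tap(3100, 10, seed=2), references)
print(label)
```

In this toy setup the query tap concentrates its energy in the same frequency band as the "metal" reference, so it is matched to that label. A real system would work from recorded contact-microphone audio and a trained model rather than two hand-picked references.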

The researchers have demonstrated several of SonicSense’s capabilities. By shaking a box of dice, the system can determine how many dice are inside and what shape they are. By shaking a water bottle, it can estimate how much liquid the bottle holds. And by tapping an object’s exterior, it can reconstruct the object’s shape and identify its material.

SonicSense is not the first system to use such methods, but it outperforms earlier technologies by combining a four-fingered hand, microphones that filter out ambient noise, and advanced AI. This setup enables it to accurately identify objects with complex shapes, objects made of multiple materials, and objects that are challenging for vision systems.

SonicSense offers a foundation for training robots to recognize objects in unpredictable environments. It is also affordable, since it is built from commercial microphones, 3D-printed parts, and other readily available components.

The team is improving the system’s ability to manage multiple objects simultaneously by integrating object-tracking algorithms, helping robots navigate cluttered environments more effectively. They are also refining the robotic hand’s design.

Reference: Jiaxun Liu et al., SonicSense: Object Perception from In-Hand Acoustic Vibration, arXiv (2024). DOI: 10.48550/arxiv.2406.17932