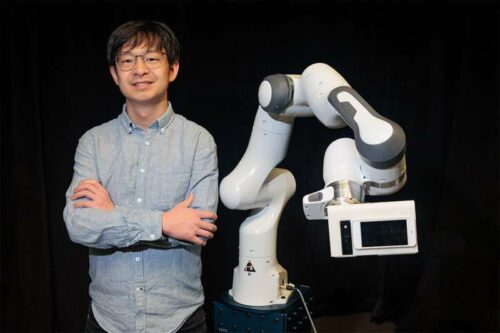

Researchers at the University of Toronto have developed Stargazer, an interactive camera robot that helps instructors and content creators film engaging tutorial videos.

Robotic filming equipment has significant consumer-market potential, which makes it all the more important for robots to understand humans well enough to collaborate with them effectively. The robot’s role is to assist humans, not to replace them.

Stargazer is designed to help university instructors and content creators produce engaging tutorial videos that demonstrate physical skills. It lets people who do not have a camera operator capture dynamic instructional footage, overcoming the limitations of a static camera.

Stargazer consists of a camera mounted on a robot arm with seven motors. It autonomously tracks regions of interest by moving with the subject, and its camera behavior adapts to instructor cues such as body movements, gestures, and speech, which are detected by sensors. The instructor’s voice is recorded with a wireless microphone and sent to Microsoft Azure Speech-to-Text for speech recognition. The transcribed text, together with a custom prompt, is then passed to GPT-3, a large language model, which determines the intended camera angle and framing. According to the researchers, these cues let instructors direct the audience’s attention without interrupting their teaching. For instance, when the instructor points at a tool, Stargazer pans toward it; when the instructor tells viewers to watch an action from above, the robot moves to a high-angle framing for better visibility.
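To make the cue-to-shot idea concrete, here is a minimal sketch of how a transcribed sentence and a pointing gesture might be combined into the next camera shot. It is not Stargazer’s implementation: the real system sends the transcript and a custom prompt to GPT-3, whereas this sketch swaps in simple keyword rules, assumes the speech has already been transcribed, and uses hypothetical names (`Shot`, `SPEECH_RULES`, `choose_shot`) and hypothetical trigger phrases chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Shot:
    """Hypothetical camera intent: how tightly to frame, from which angle, and what to follow."""
    framing: str                  # e.g. "wide", "medium", "close-up"
    angle: str                    # e.g. "eye-level", "high"
    target: Optional[str] = None  # region of interest the camera keeps in frame


# Hypothetical keyword rules standing in for the GPT-3 prompt (an assumption, not the paper's method).
# Each rule: (phrase to look for in the transcript, new framing, new angle).
SPEECH_RULES = [
    ("from above", "medium", "high"),
    ("closer look", "close-up", "eye-level"),
    ("whole thing", "wide", "eye-level"),
]


def choose_shot(transcript: str, pointed_object: Optional[str], current: Shot) -> Shot:
    """Combine a speech cue and an optional pointing gesture into the next shot."""
    text = transcript.lower()
    framing, angle = current.framing, current.angle
    # The first matching speech cue changes framing and angle; no match keeps the current shot.
    for phrase, new_framing, new_angle in SPEECH_RULES:
        if phrase in text:
            framing, angle = new_framing, new_angle
            break
    # A pointing gesture retargets the camera (a pan) without changing framing or angle.
    target = pointed_object if pointed_object is not None else current.target
    return Shot(framing, angle, target)


start = Shot("medium", "eye-level", "hands")
shot = choose_shot("Now you need to see this from above", None, start)
print(shot)  # Shot(framing='medium', angle='high', target='hands')
shot = choose_shot("Grab this wrench", "wrench", shot)
print(shot)  # Shot(framing='medium', angle='high', target='wrench')
```

Keeping speech cues (framing and angle) separate from pointing cues (what to track) mirrors the two examples in the article: a spoken request changes how the scene is shot, while a gesture changes where the camera looks.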

The team looked for subtle cues to form the robot’s interaction vocabulary, so that instructors would not need to communicate with the robot separately. The goal is for the robot to understand the desired camera shots seamlessly, through cues that blend naturally into the tutorial.

Stargazer’s capabilities were tested in a study in which six instructors taught various physical skills to create dynamic tutorial videos. Using the robot’s subject tracking and combinations of camera framings and angles, participants produced videos on tasks such as skateboard maintenance, interactive sculpture-making, and setting up a virtual-reality headset. Participants practiced and then completed their tutorials in two takes. The researchers found that all participants successfully created their videos using only the robotic camera controls and were satisfied with the video quality.

Some participants also tried to use objects to trigger shots, a cue Stargazer does not yet recognize. To cover larger environments and a wider range of angles, the team plans to explore camera drones and wheeled robots.

Future research will aim to detect subtler intentions by combining signals from the instructor’s gaze, posture, and speech, a step toward the team’s long-term goal. Stargazer is presented as an alternative for people without a professional film crew, but it currently depends on an expensive robot arm and a set of external sensors. Even so, the researchers believe the Stargazer concept is not inherently tied to costly technology.

Reference: Jiannan Li et al., "Stargazer: An Interactive Camera Robot for Capturing How-To Videos Based on Subtle Instructor Cues," Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (2023). DOI: 10.1145/3544548.3580896