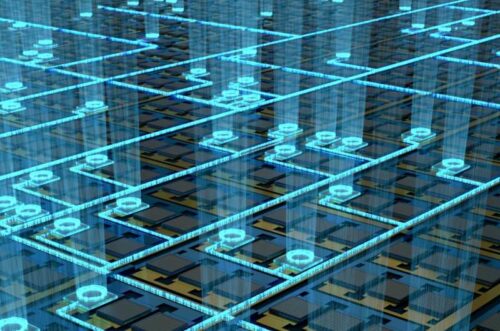

In-sensor image processors with multiple voltage-tunable photodiodes can filter images as they are captured.

Accidents happen in the blink of an eye. As vehicles advance from manual to autonomous driving, safety becomes an even higher priority. For the camera system in an autonomous vehicle, processing time is critical: the time the system takes to capture an image and process it could mean the difference between avoiding an obstacle and getting into a major accident.

In-sensor image processing, which extracts important features from the raw data within the image sensor itself rather than in a separate microprocessor, can speed up visual processing. Researchers at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed the first in-sensor processor that could be integrated into commercial silicon imaging sensor chips, known as CMOS image sensors.

“Our work can harness the mainstream semiconductor electronics industry to rapidly bring in-sensor computing to a wide variety of real-world applications,” said Donhee Ham, the Gordon McKay Professor of Electrical Engineering and Applied Physics at SEAS and senior author of the paper.

Researchers developed an electrostatically doped photodiode array. This means that the sensitivity of individual photodiodes, or pixels, to incoming light can be tuned by voltages. An array that connects multiple voltage-tunable photodiodes together can perform an analog version of multiplication and addition operations central to many image processing pipelines, extracting the relevant visual information as soon as the image is captured.
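To make the multiply-and-add idea concrete, here is a minimal software sketch, not the researchers' implementation. It assumes each photodiode contributes a current equal to its voltage-set responsivity times the light falling on it, and that the currents of a connected group simply add. The function names, the 3×3 group size, the signed weights, and the edge-detecting pattern are all hypothetical choices made for illustration.

```python
def photocurrent(light_patch, responsivities):
    """Summed output of one group of photodiodes.

    Each pixel contributes responsivity * light intensity (the multiply);
    wiring the pixels together sums those contributions (the add).
    """
    return sum(
        r * p
        for r_row, p_row in zip(responsivities, light_patch)
        for r, p in zip(r_row, p_row)
    )


def in_sensor_filter(image, responsivities):
    """Slide the responsivity pattern over the image, one output per position."""
    k = len(responsivities)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    out = []
    for i in range(rows):
        out_row = []
        for j in range(cols):
            patch = [row[j:j + k] for row in image[i:i + k]]
            out_row.append(photocurrent(patch, responsivities))
        out.append(out_row)
    return out


# Hypothetical responsivity pattern that responds to dark-to-bright vertical edges.
# Signed weights are an assumption of this sketch.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# Toy scene: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

edges = in_sensor_filter(image, VERTICAL_EDGE)
print(edges)  # [[0, 3, 3]]
```

The output is zero over the uniform dark region and nonzero where the pattern overlaps the edge. Swapping in a different responsivity pattern yields a different filter without changing the rest of the code, which is the sense in which such an array is programmable.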

“These dynamic photodiodes can concurrently filter images as they are captured, allowing for the first stage of vision processing to be moved from the microprocessor to the sensor itself,” said Houk Jang, a postdoctoral fellow at SEAS. The array can be programmed into different image filters to remove unnecessary details or noise, depending on the application.

“By replacing the standard non-programmable pixels in commercial silicon image sensors with the programmable ones developed here, imaging devices can intelligently trim out unneeded data, thus could be made more efficient in both energy and bandwidth to address the demands of the next generation of sensory applications,” said Jang.