- Researchers aim to make robot-assisted feeding more comfortable with new robotic algorithms that handle different types of food.

- Three algorithms were developed for skewering with visual and haptic feedback, scooping with a push, and transferring food to the mouth.

Eating requires precise movements: picking up food with a utensil, bringing it to the mouth, and chewing. We repeat these movements many times at every meal, three meals a day, usually without dropping or breaking the food. Many people with motor impairments cannot do this unaided and depend on help from others. That dependence limits their independence and can lead to caregiver fatigue.

A research team in Dorsa Sadigh’s ILIAD lab aims to improve robot-assisted feeding. The team created new robotic algorithms for feeding different types of food. One algorithm uses vision and haptics to insert a fork into food correctly. Another uses a second robotic arm to push food onto a spoon. The third delivers the food comfortably into the person’s mouth.

Visual and haptic skewering

Using a camera and a force sensor, researchers trained a robot arm to pick up foods of varying textures and fragility. Building on prior research, the robot approaches the food at an optimal angle and probes it with the force sensor. It combines that reading with visual feedback to determine how fragile the item is, then selects between two skewering strategies based on that estimate. The researchers describe theirs as the first study to use both vision and haptics to skewer a wide variety of foods, and their method outperformed approaches that do not use haptics. However, delicate items such as snow peas and salad leaves remained challenging.
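The strategy-selection step can be illustrated with a short Python sketch. It is not the researchers' method. The study learns its decision from visual and haptic data, while this sketch substitutes a fixed weighted average of two inputs: a vision-based fragility score and the peak force measured during the probe. The score is then compared against a threshold. The field names, weights, threshold, and both strategy labels are hypothetical, because the article does not describe what the two strategies are.

```python
from dataclasses import dataclass
from enum import Enum


class SkewerStrategy(Enum):
    # Placeholder labels: the article says there are two strategies but does not name them.
    FIRM_ITEM = "strategy for firm items"
    FRAGILE_ITEM = "strategy for fragile items"


@dataclass
class ProbeReading:
    visual_fragility: float  # assumed vision-model output: 0.0 (robust) to 1.0 (fragile)
    peak_force_n: float      # assumed peak force in newtons while lightly probing the item


def fragility_score(reading: ProbeReading, max_force_n: float = 5.0,
                    visual_weight: float = 0.5) -> float:
    """Fuse visual and haptic cues into one fragility estimate in [0, 1].

    Little resistance to the probe is read as a sign of fragility, so the
    haptic term is 1 minus the normalized (and clipped) force. This fixed
    weighted average is an illustrative stand-in, not the study's learned model.
    """
    haptic_fragility = 1.0 - min(reading.peak_force_n / max_force_n, 1.0)
    return visual_weight * reading.visual_fragility + (1.0 - visual_weight) * haptic_fragility


def choose_strategy(reading: ProbeReading, threshold: float = 0.5) -> SkewerStrategy:
    """Pick one of the two strategies by thresholding the fused fragility score."""
    if fragility_score(reading) >= threshold:
        return SkewerStrategy.FRAGILE_ITEM
    return SkewerStrategy.FIRM_ITEM
```

For instance, an item that looks fragile (0.9) and barely resists the probe (0.5 N) scores 0.9 and is routed to the fragile-item strategy. A firm-looking item that pushes back with 4.5 N scores about 0.1 and is routed to the firm-item strategy.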

Scooping with a push

Researchers used a bimanual robot, holding a spoon in one hand and a curved pusher in the other, to scoop up various foods. This mirrors how a person might use a second utensil to push peas onto a spoon. As the two utensils close in on a food item, a computer vision system estimates how fragile it is. If the item is close to breaking, the utensils stop moving toward each other and begin to scoop upward. The pusher follows and rotates toward the spoon to keep the food from shifting.
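The stop-then-scoop logic can be sketched as a simple loop. In this sketch, a hypothetical `breakage_prob` function stands in for the computer vision system. It maps the gap between the utensils to a probability that the food is about to break. The gap sizes, step size, and 0.7 stop threshold are illustrative assumptions, not values from the study, and the scooping motion itself is not modeled.

```python
from enum import Enum, auto
from typing import Callable, List, Tuple


class ScoopPhase(Enum):
    CLOSING = auto()   # spoon and pusher move toward each other around the food
    SCOOPING = auto()  # spoon lifts; pusher follows and tilts toward the spoon


def scoop_episode(
    breakage_prob: Callable[[float], float],
    start_gap_cm: float = 10.0,
    min_gap_cm: float = 1.0,
    step_cm: float = 0.5,
    stop_threshold: float = 0.7,
) -> Tuple[float, List[ScoopPhase]]:
    """Close the gap until the food looks close to breaking, then scoop.

    `breakage_prob` is a hypothetical stand-in for a vision model; here the
    "view" is reduced to the current gap between the utensils. Returns the
    gap at which closing stopped and the sequence of phases taken.
    """
    gap = start_gap_cm
    history: List[ScoopPhase] = []
    while gap > min_gap_cm and breakage_prob(gap) < stop_threshold:
        history.append(ScoopPhase.CLOSING)
        gap = max(gap - step_cm, min_gap_cm)
    # Stop squeezing and hand over to the scooping phase (not modeled here).
    history.append(ScoopPhase.SCOOPING)
    return gap, history
```

With a toy predictor such as `lambda gap: 1.0 if gap <= 4.0 else 0.0`, the utensils close from 10 cm to 4 cm and then switch to scooping. A predictor that never signals breakage lets them close all the way to the 1 cm minimum.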

Bite transfer

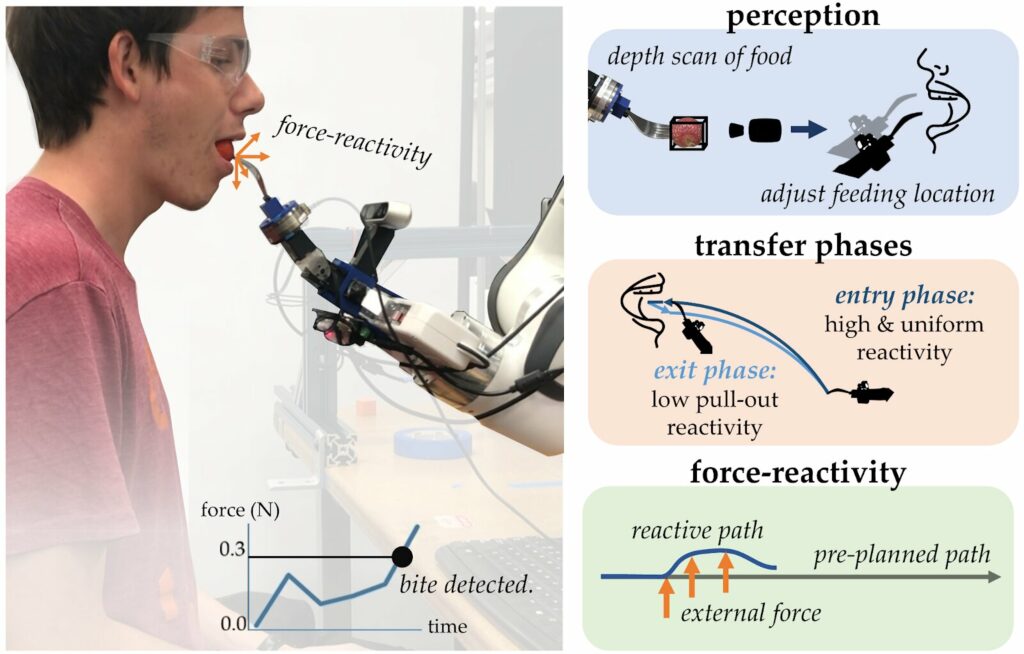

Researchers also developed a robotic feeding system that brings food to a person’s mouth and detects when the person takes a bite. It uses hardware resembling a wrist joint to move more like a human, computer vision to detect and identify food, and a force sensor to keep the interaction comfortable. The system can switch between controllers and levels of reactivity at each step of the transfer.
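The per-step switching can be pictured as a small state machine. This sketch is an assumption, not the published controller. Each phase of the transfer gets a hypothetical reactivity level, and a bite is flagged when the measured force stays above a threshold for a few consecutive samples, which filters out single noisy readings. The phase names, reactivity values, force threshold, and sample count are all illustrative.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Iterable, List


class TransferPhase(Enum):
    APPROACH = auto()  # move the utensil toward the mouth
    IN_MOUTH = auto()  # hold the utensil in place and wait for the bite
    EXIT = auto()      # withdraw the utensil after the bite


# Hypothetical reactivity per phase: 1.0 = fully compliant to contact forces,
# 0.0 = stiff position control. Values are illustrative only.
REACTIVITY = {TransferPhase.APPROACH: 1.0, TransferPhase.IN_MOUTH: 1.0, TransferPhase.EXIT: 0.3}


@dataclass
class BiteDetector:
    """Flag a bite when force stays above a threshold for several samples."""
    threshold_n: float = 1.5  # assumed force threshold in newtons
    min_samples: int = 3      # consecutive samples required, to reject noise
    _count: int = field(default=0, init=False, repr=False)

    def update(self, force_n: float) -> bool:
        # Extend the run of high readings, or reset it on a low reading.
        self._count = self._count + 1 if force_n >= self.threshold_n else 0
        return self._count >= self.min_samples


def run_transfer(in_mouth_forces: Iterable[float]) -> List[TransferPhase]:
    """Step through the phases, leaving IN_MOUTH once a bite is detected."""
    phases = [TransferPhase.APPROACH, TransferPhase.IN_MOUTH]  # approach assumed to succeed
    detector = BiteDetector()
    for force in in_mouth_forces:
        if detector.update(force):
            phases.append(TransferPhase.EXIT)
            break
    return phases
```

For example, `run_transfer([0.2, 2.0, 0.1, 2.0, 2.1, 2.2])` ends in `EXIT` because the last three readings exceed the 1.5 N threshold, whereas the isolated 2.0 N spike earlier does not. Looking up `REACTIVITY` for each returned phase gives the controller settings the robot would switch between.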

AI-assistive feeding

More work is needed to perfect assistive-feeding robots, including better handling of fragile or thin foods, cutting large items into bite-sized pieces, and handling finger foods. Another open question is how the robot and the user should communicate about food preferences. The user could request food verbally, or the robot could learn their preferences over time.

References:

- Learning Visuo-Haptic Skewering Strategies for Robot-Assisted Feeding. openreview.net/forum?id=lLq09gVoaTE

- Jennifer Grannen et al., Learning Bimanual Scooping Policies for Food Acquisition. arXiv (2022). DOI: 10.48550/arxiv.2211.14652

- Lorenzo Shaikewitz et al., In-Mouth Robotic Bite Transfer with Visual and Haptic Sensing. arXiv (2022). DOI: 10.48550/arxiv.2211.12705