Researchers from Hong Kong Polytechnic University, Huazhong University of Science and Technology, and Hong Kong University of Science and Technology have created a new type of neuromorphic event-based image sensor designed to capture and process dynamic motion in a scene efficiently. Traditional sensors capture the entire scene and then transfer the data for motion recognition, which can introduce time delays and high power consumption. The new sensors instead respond only to changes in the scene.

These computational event-driven vision sensors are engineered to directly convert dynamic motion into sparsely generated, programmable, and informative spiking signals. This functionality allows them to be integrated into a spiking neural network for motion recognition.

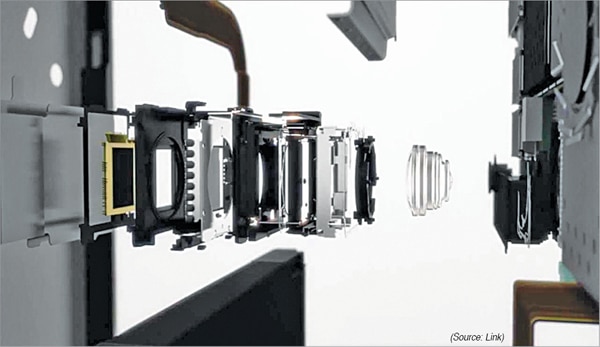

Each sensor in the network comprises two parallel photodiodes with opposite polarities and offers a temporal resolution of 5 microseconds. When the sensors detect a change in light intensity, they produce spiking signals whose amplitude and polarity are set by electrically programming each sensor's photoresponsivity.
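
To make the idea concrete, here is a minimal Python sketch of an event-driven pixel. It is a toy model, not the researchers' device physics: the function name `pixel_spikes`, the `threshold` parameter, and the intensity values are all assumptions made for illustration. The pixel outputs nothing while the light is steady and emits a signed spike, scaled by its programmed responsivity, when the intensity changes.

```python
def pixel_spikes(intensity, responsivity, threshold=0.0):
    """Toy event pixel (hypothetical model, not the published device).

    intensity:    light intensity samples over time, arbitrary units
    responsivity: signed, programmable weight of this pixel (assumed)
    threshold:    smallest change that triggers a spike (assumed)
    """
    spikes = []
    previous = intensity[0]
    for current in intensity:
        change = current - previous
        # A steady scene gives no output; a change gives a spike whose
        # amplitude and sign depend on the programmed responsivity.
        spikes.append(responsivity * change if abs(change) > threshold else 0.0)
        previous = current
    return spikes


static = pixel_spikes([1.0, 1.0, 1.0], responsivity=0.5)
moving = pixel_spikes([1.0, 1.0, 3.0, 3.0, 2.0], responsivity=0.5)
negative = pixel_spikes([1.0, 3.0], responsivity=-0.5)

print(static)    # [0.0, 0.0, 0.0]
print(moving)    # [0.0, 0.0, 1.0, 0.0, -0.5]
print(negative)  # [0.0, -1.0]
```

In this sketch the unchanging scene produces only zeros, which is the sense in which event-based sensing avoids redundant data.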

A key feature of these sensors is their non-volatile and multilevel photoresponsivity, which can mimic synaptic weights in a neural network. This capability enables the creation of an in-sensor spiking neural network, effectively integrating sensing and computational functionalities.
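
As a rough illustration of how programmable responsivities could act as synaptic weights, the Python sketch below sums the weighted change signals of three pixels at each time step. The function name `in_sensor_output`, the weight values, and the three-pixel scene are assumptions chosen for the example, and the spiking neuron dynamics of a real SNN are left out.

```python
def in_sensor_output(frames, weights):
    """Sum weighted change events across pixels for each time step.

    frames:  list of frames, each a list of per-pixel intensities
    weights: one signed responsivity per pixel (hypothetical values)
    """
    outputs = []
    previous = frames[0]
    for frame in frames:
        # Each pixel contributes (weight * intensity change); the array
        # output is the sum, like one weighted sum in a neural network.
        total = sum(w * (cur - prev)
                    for w, cur, prev in zip(weights, frame, previous))
        outputs.append(total)
        previous = frame
    return outputs


# A bright spot moving across three pixels, left to right.
frames = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weights = [-1.0, 0.0, 1.0]

rightward = in_sensor_output(frames, weights)
leftward = in_sensor_output(frames[::-1], weights)

print(rightward)  # [0.0, 1.0, 1.0]
print(leftward)   # [0.0, -1.0, -1.0]
```

With these assumed weights, the sign of the summed output separates rightward from leftward motion without any frame being sent to a separate processor.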

This approach eliminates redundant data at the sensing stage and removes the need to transfer data between the sensors and separate computational units. That could lead to faster processing and lower power consumption, making it a promising development in event-based vision sensing and neuromorphic computing.

“The combination of event-based sensors and spiking neural network (SNN) for motion analysis can effectively reduce the redundant data and efficiently recognize the motion,” Yang Chai, co-author of the study, said. “Thus, we propose the hardware architecture with two-photodiode pixels with the functions of both event-based sensors and synapses that can achieve in-sensor SNN.”