Researchers have developed an Artificial Intelligence system that learns from images and video to identify objects and tasks.

Visual learning is considered a highly efficient way to understand a concept. We develop our conscience from what we see. And Artificial Intelligence(AI) is desired to work similarly. A system that has the ability to make decisions based on real time circumstances is what one expects from a smart system.

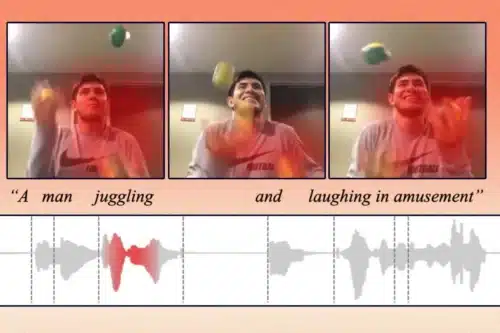

Researchers at the Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed an artificial intelligence (AI) technique that allows machines to learn concepts shared between different modalities such as videos, audio clips, and images. This technique performs better than other machine-learning methods at cross-modal retrieval tasks, where data in one format (e.g. video) must be matched with a query in another format (e.g. spoken language). It also allows users to see the reasoning behind the machine’s decision-making.

Researchers have developed an artificial intelligence technique that learns to represent data in a way that captures concepts which are shared between visual and audio modalities. For instance, their method can learn that the action of a baby crying in a video is related to the spoken word “crying” in an audio clip. The system was observed to perform better than other machine-learning methods at cross-modal retrieval tasks, which involve finding a piece of data, like a video, that matches a user’s query given in another form, like spoken language. Their model also makes it easier for users to see why the machine thinks the video it retrieved matches their query.

The researchers focus their work on representation learning, which is a form of machine learning that seeks to transform input data to make it easier to perform a task like classification or prediction. The model is constrained to only use 1,000 words to label vectors. It can decide which actions or concepts it wants to encode into a single vector, but it can only use 1,000 vectors. The model chooses the words it thinks best represent the data.

The model still has some limitations they hope to address in future work. For one, their research focused on data from two modalities at a time, but in the real world humans encounter many data modalities simultaneously.

Reference: “Cross-Modal Discrete Representation Learning” by Alexander H. Liu, SouYoung Jin, Cheng-I Jeff Lai, Andrew Rouditchenko, Aude Oliva and James Glass, 10 June 2021, Computer Science > Computer Vision and Pattern Recognition.

arXiv:2106.05438