Researchers have developed silicon-based memristors, energy-efficient devices that can both store and process information.

Researchers around the world are working on hardware that can handle heavy computational loads. A team at Technion–Israel Institute of Technology and the Peng Cheng Laboratory recently created a neuromorphic computing system that supports deep belief neural networks (DBNs), a generative, graphical class of deep learning models. The system is built on silicon-based memristors, energy-efficient devices that can both store and process information.

A distinctive feature of a memristor is that its state depends on the electrical charge that has passed through it, so it effectively remembers that charge. In capability and structure, memristors resemble synapses in the human brain more closely than conventional memory and processing units do, which makes them well suited to running AI models.
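The charge-memory behavior described above can be illustrated with a toy model. This is a minimal sketch and not the device model used by the researchers: the class name `ToyMemristor`, the linear mapping from charge to resistance, and all numerical constants are assumptions chosen only for illustration.

```python
class ToyMemristor:
    """Toy charge-controlled memristor; every constant here is illustrative."""

    def __init__(self, r_on=100.0, r_off=16000.0, q_max=1e-4):
        self.r_on = r_on      # resistance when fully "on" (ohms), assumed value
        self.r_off = r_off    # resistance when fully "off" (ohms), assumed value
        self.q_max = q_max    # charge needed to switch from off to on (coulombs), assumed
        self.q = 0.0          # net charge that has passed through the device so far

    def apply_current(self, current, dt):
        """Pass `current` amperes for `dt` seconds; the state integrates the charge."""
        self.q = min(max(self.q + current * dt, 0.0), self.q_max)

    @property
    def resistance(self):
        w = self.q / self.q_max  # normalized internal state in [0, 1]
        return self.r_on * w + self.r_off * (1.0 - w)


m = ToyMemristor()
r_initial = m.resistance
m.apply_current(1e-3, 0.05)   # 1 mA for 50 ms moves about half of q_max
r_after_write = m.resistance
m.apply_current(0.0, 10.0)    # no current flows, so the stored state is retained
r_after_idle = m.resistance
```

In this sketch the resistance drops as charge accumulates and stays put when no current flows, which is the sense in which the device both stores a value and takes part in computation.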

Memristors are usually used to perform analog computations. The neuromorphic field faces two main limitations. First, memristive technology is still not widely available. Second, the converters needed to translate between analog computation and digital data are costly.

The researchers set out to overcome both problems. Because memristors are not widely available, they instead used a commercially available Flash technology developed by Tower Semiconductor and engineered it to behave like a memristor. They also tested their system with a newly designed DBN, because this model requires no data conversion: its input and output data are binary and inherently digital.

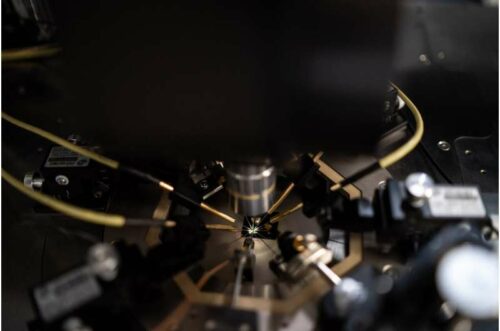

The researchers fabricated artificial synapses using commercial complementary metal-oxide-semiconductor (CMOS) processes. These memristive, silicon-based synapses have numerous advantageous features, including analog tunability, high endurance, long retention time, predictable cycling degradation, and moderate variability across different devices.

They tested the devices by training a restricted Boltzmann machine, the building block of a DBN, on a pattern recognition task. To train this model (a 19 x 8 memristive restricted Boltzmann machine), they used two 12 x 8 arrays of the memristors they engineered. The results showed that even though DBNs are simple to implement (owing to their binary nature), they can reach high accuracy (>97% accurate recognition of handwritten digits) when using Y-Flash-based memristors.
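To make the binary nature of such a model concrete, here is a minimal software sketch of a restricted Boltzmann machine trained with one-step contrastive divergence (CD-1), a standard training rule for these networks. It is not the researchers' hardware training procedure. The class name `BinaryRBM`, the learning rate, the toy patterns, and the reading of "19 x 8" as 19 visible by 8 hidden units are all assumptions made for illustration.

```python
import math
import random


def sigmoid(x):
    x = max(-60.0, min(60.0, x))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-x))


class BinaryRBM:
    """Hypothetical binary RBM: units are 0 or 1, weights play the role of synapses."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.n_visible, self.n_hidden = n_visible, n_hidden
        self.w = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible
        self.b_h = [0.0] * n_hidden

    def hidden_probs(self, v):
        return [sigmoid(self.b_h[j] + sum(v[i] * self.w[i][j]
                                          for i in range(self.n_visible)))
                for j in range(self.n_hidden)]

    def visible_probs(self, h):
        return [sigmoid(self.b_v[i] + sum(h[j] * self.w[i][j]
                                          for j in range(self.n_hidden)))
                for i in range(self.n_visible)]

    def sample(self, probs):
        # Stochastic binary units: output is always 0 or 1, so no analog readout is needed.
        return [1 if self.rng.random() < p else 0 for p in probs]

    def cd1_step(self, v0, lr=0.1):
        """One CD-1 update: positive phase on the data, negative phase on a reconstruction."""
        ph0 = self.hidden_probs(v0)
        h0 = self.sample(ph0)
        v1 = self.sample(self.visible_probs(h0))
        ph1 = self.hidden_probs(v1)
        for i in range(self.n_visible):
            for j in range(self.n_hidden):
                self.w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.b_v[i] += lr * (v0[i] - v1[i])
        for j in range(self.n_hidden):
            self.b_h[j] += lr * (ph0[j] - ph1[j])


# Two toy 19-bit patterns (illustrative data, not from the study).
patterns = [[1] * 10 + [0] * 9, [0] * 9 + [1] * 10]

rbm = BinaryRBM(n_visible=19, n_hidden=8)
for _ in range(200):
    for p in patterns:
        rbm.cd1_step(p)

hidden = rbm.sample(rbm.hidden_probs(patterns[0]))
reconstruction = rbm.sample(rbm.visible_probs(hidden))
```

Every value exchanged between layers here is a 0 or a 1, which is why a network of this kind can avoid the analog-to-digital conversion step. In a memristive implementation, the weight matrix `w` would be held in the device states rather than in a Python list.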

Reference: Wei Wang et al., A memristive deep belief neural network based on silicon synapses, Nature Electronics (2022). DOI: 10.1038/s41928-022-00878-9