MIT researchers unveil a simulation engine capable of constructing realistic environments for deployable training and testing of autonomous vehicles.

Hyper-realistic virtual worlds have been heralded as the best driving schools for autonomous vehicles (AVs), since they have proven to be productive test beds for safely trying out dangerous driving scenarios. Several AV companies rely on such worlds to test their products.

Scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have created VISTA 2.0, a data-driven simulation engine in which vehicles can learn to drive in the real world and recover from near-crash scenarios. The team also plans to release all of the code to the public as open source.

“Today, only companies have software like the type of simulation environments and capabilities of VISTA 2.0, and this software is proprietary. With this release, the research community will have access to a powerful new tool for accelerating the research and development of adaptive robust control for autonomous driving,” says the senior author of a paper about the research, MIT Professor and CSAIL Director Daniela Rus.

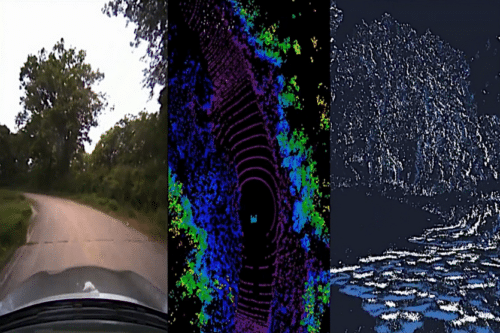

The simulator is built from photorealistic renderings of real-world data, which enables direct transfer to reality. While the initial iteration of VISTA supported only single-car lane-following with one camera sensor, VISTA 2.0 is a data-driven system that can simulate complex sensor types as well as massively interactive scenarios and intersections at scale.

“VISTA 2.0 demonstrates the ability to simulate sensor data far beyond 2D RGB cameras, but also extremely high dimensional 3D lidars with millions of points, irregularly timed event-based cameras, and even interactive and dynamic scenarios with other vehicles as well,” said Alexander Amini, a CSAIL PhD student.

The researchers were able to scale up the complexity of driving tasks such as overtaking, following, and negotiating, including multi-agent scenarios, in highly photorealistic environments. They believe the technology can be developed to the point where a vehicle's AI can be customised and trained for different terrains and conditions.