Neuroscientists and robotics engineers have developed a robot model that uses deep learning to mimic the brain

The human brain is a fascinating organ that allows us to perceive and navigate the surrounding world. Information from all of our senses is effortlessly combined in the brain, an aspect of cognition that even the most advanced AI systems struggle to replicate.

To understand the neural mechanisms behind this, a team of cognitive neuroscientists, computational modellers and roboticists from the EBRAINS Research Infrastructure have developed a robot that mimics the brain. The Human Brain Project (HBP), one of the largest research projects in the world, has drawn on several interdisciplinary contributions to create complex neural network architectures.

“We believe that robots can be improved through the use of knowledge about the brain. But at the same time, this can also help us better understand the brain,” says Cyriel Pennartz, a Professor of Cognition and Systems Neurosciences at the University of Amsterdam.

A model named ‘MultiPrednet’ was created based on real-life perception data acquired from rats and consists of modules for visual and tactile input.

“What we were able to replicate for the first time is that the brain makes predictions across different senses,” Pennartz explains. “So you can predict how something will feel from looking at it, and vice versa.”

Robot Based On Rodent Behaviour

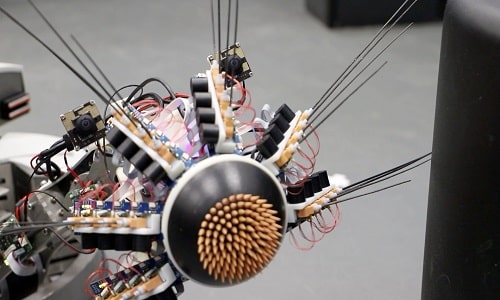

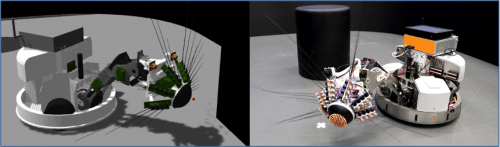

To test the performance of MultiPrednet, the model was integrated into Whiskeye, a rodent-like robot that autonomously explores its environment with the help of head-mounted cameras that serve as eyes and 24 artificial whiskers that gather tactile information.

“The control architecture of Whiskeye contains models of the brain stem, cerebellum, basal ganglia and superior colliculus, which combine to direct the attention and movement of the whiskers and the robot towards salient points of interest in the environment,” said Martin Pearson, Senior Research Fellow at Bristol Robotics Laboratory, University of the West of England.

Using Simultaneous Localisation And Mapping (SLAM), the robot maps an object or environment through touch, so that its own movement relative to that object or environment is accounted for in its internal representation. Placing Whiskeye on the Neurorobotics Platform makes it possible to run long-duration or even parallel experiments under controlled conditions. In these experiments, the networks are trained by constantly generating predictions about the world, comparing them with the actual sensory input and then adapting the network to reduce future error signals.
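The training loop described here (predict, compare with the actual input, adapt) can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not MultiPrednet itself: the linear predictor, the hypothetical `predict` and `train` functions, the learning rate and the made-up world in which the sensory reading is a fixed linear function of a 2-D robot state are all chosen purely for demonstration.

```python
# Toy world (hypothetical): the sensory reading is a fixed linear function of
# a 2-D robot state, here [x1 + 2*x2, 0.5 - x1].
STATES = [(x1, x2) for x1 in (0.0, 0.5, 1.0) for x2 in (0.0, 0.5, 1.0)]
SAMPLES = [([x1, x2], [x1 + 2 * x2, 0.5 - x1]) for x1, x2 in STATES]


def predict(weights, bias, state):
    """Generate a prediction of the sensory input from the current state."""
    return [sum(w * s for w, s in zip(row, state)) + b
            for row, b in zip(weights, bias)]


def train(samples, n_in, n_out, epochs=200, lr=0.1):
    """Predict, compare with the actual input, adapt; repeat.

    Returns the learned weights and biases plus the mean squared
    prediction error recorded during each epoch.
    """
    weights = [[0.0] * n_in for _ in range(n_out)]
    bias = [0.0] * n_out
    history = []
    for _ in range(epochs):
        total = 0.0
        for state, actual in samples:
            predicted = predict(weights, bias, state)
            # Error signal: mismatch between prediction and actual input.
            error = [a - p for a, p in zip(actual, predicted)]
            # Delta rule: move each parameter in the direction that
            # shrinks this error the next time a similar state is seen.
            for i in range(n_out):
                for j in range(n_in):
                    weights[i][j] += lr * error[i] * state[j]
                bias[i] += lr * error[i]
            total += sum(e * e for e in error)
        history.append(total / len(samples))
    return weights, bias, history


weights, bias, history = train(SAMPLES, n_in=2, n_out=2)
print(f"error: first epoch {history[0]:.3f}, last epoch {history[-1]:.2e}")
```

The error in the last epoch is far smaller than in the first, and the weights approach the rule that generated the data. A plain delta rule is used only because it is the simplest error-driven learner; the model described in this article is a much larger deep network trained on camera and whisker data.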

The researchers observed that the brain-based model has an edge over traditional deep learning systems, especially when it comes to navigation and recognition of familiar scenes.

The team is now taking the work further. “We also plan to use the High Performance and Neuromorphic Computing Platforms for much more detailed models of control and perception in the future,” said Martin Pearson.

Neurological Model

Building on this technology, the robot learns to recognise objects and places in its environment using separate tactile and vision modules. Their outputs are then merged in a multisensory module, so that visual input can be transformed into a tactile representation or the other way around. In the human brain, this merging occurs in the perirhinal cortex.
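The idea of translating one sense into another through a shared multisensory representation can be illustrated with a small Python sketch. It assumes, purely for illustration, that the vision and touch modules have already learned fixed linear decoders from a common latent code; the names `VISUAL`, `TACTILE`, `infer_latent` and `cross_modal` are hypothetical, and the real modules are deep networks rather than two small matrices. Given input from one sense, the sketch infers the shared code by reducing prediction error, then decodes that code into the other sense.

```python
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def infer_latent(decoder, observed, steps=200, lr=0.1):
    """Find a shared code z whose prediction decoder @ z explains `observed`.

    Gradient descent on 0.5 * ||observed - decoder @ z||^2: the update
    z += lr * decoder^T @ error is driven purely by prediction error.
    """
    n_latent = len(decoder[0])
    z = [0.0] * n_latent
    for _ in range(steps):
        error = [o - p for o, p in zip(observed, matvec(decoder, z))]
        for j in range(n_latent):
            z[j] += lr * sum(decoder[i][j] * error[i] for i in range(len(error)))
    return z


def cross_modal(source_decoder, target_decoder, observed):
    """Infer the shared code from one sense, then decode it into the other."""
    return matvec(target_decoder, infer_latent(source_decoder, observed))


# Hypothetical decoders from a shared 2-D "multisensory" code to each sense.
VISUAL = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 visual features
TACTILE = [[0.5, 1.0], [2.0, 0.0]]             # 2 whisker features

seen = [1.0, -0.5, 0.5]                              # what the camera reports
felt = cross_modal(VISUAL, TACTILE, seen)            # approx. [0.0, 2.0]
imagined = cross_modal(TACTILE, VISUAL, [0.0, 2.0])  # approx. [1.0, -0.5, 0.5]
```

The same inference routine works in both directions simply by swapping which decoder explains the observation and which one produces the prediction, which is the symmetry that lets vision predict touch and touch predict vision.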

“This is like imagining an apple that you can touch. If you close your eyes, then you can predict what it will look like. Or, the other way around, you can look at the apple without touching it, and your vision can guide your hands towards grabbing it,” explained Cyriel Pennartz.

On the prospects of collaboration between robotics and neuroscience, Katrin Amunts, Scientific Research Director of the HBP, says, “To understand cognition, we will need to explore how the brain acts as part of the body in an environment. Cognitive neuroscience and robotics have much to gain from each other in this respect.”

Pawel Swieboda, CEO of EBRAINS and Director General of the HBP, comments, “The robots of the future will benefit from innovations that connect insights from brain science to AI and robotics.”

All code and analysis tools from the work are openly available on EBRAINS, so that other researchers can run their own experiments.