Harnessing the power of neural networks and the finesse of time-delayed signals, an “acoustic swarm” of shape-shifting speakers emerges as a game-changer in audio technology. With precision once deemed science fiction, these robots distinguish individual voices amidst noise, all without visual cues. They’re not just speakers; they’re autonomous agents of clarity, capable of muting irrelevant noise or segregating simultaneous conversations—a plus for anyone who’s struggled to follow a discussion in a noisy room.

Picture this: You’re in a bustling café, attempting to record a crucial meeting. You place a sleek platform on the center of the table. Suddenly, like a scene from a high-tech ballet, seven tiny robot speakers scatter in an array, their movements guided by algorithms and sound itself. Each robot is a guardian of sound, positioned to capture the spoken word with exceptional accuracy.

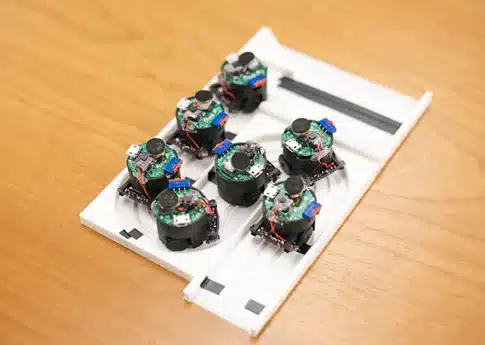

At the University of Washington (UW), a team of visionaries has turned chaos into order. They’ve engineered a fleet of microphones that, unassisted by cameras or GPS, swim through the airwaves of a room, creating “speech zones” that isolate voices participating in a particular conversation. Each robot is a self-sufficient navigator, charting its course on tabletops and beyond, ensuring your voice isn’t just heard; it’s understood (Figure 1).

Here’s the magic behind the curtain: the robots embrace space, spreading to maximize their auditory reach. Moreover, they’re resilient, unphased by collisions, and they self-localize with an exactness that rivals GPS precision. As their energy levels decrease, their self-sustaining design automatically returns them to the base for recharging.

These aren’t your average bots; they’re miniature go-getters, each the size of a grape and equipped like a secret agent vehicle from the future. They glide away from their docks on a mission to deliver crystal-clear audio, their paths illuminated by sonic landmarks invisible to the human ear. In their wake, each voice leaves a trail of soundscapes against the muted backdrop of the world’s hustle and bustle.

Previous efforts into this territory stumbled over the challenges of scale and privacy. External setups like cameras and drones, once deemed necessary for spatial understanding, introduced new problems rather than solutions. These methods fell short in accurately pinpointing each robot’s location in a group, where this acoustic swarm works in concert to detect positions for precise localization. Furthermore, this new type of robotic speaker maintains privacy by using high-frequency sounds to guide its movement, eliminating the need to visually record other people within its surroundings.

How They Work

These acoustic robots are Bluetooth enabled with built-in sensors for navigation, including gyroscopes and accelerometers. They move on tiny wheels propelled by micro motors, and their sound capabilities come from a pair of microphones and a speaker with a digital amplifier. They’re designed to prevent falls using proximity sensors for edge detection.

For power, the robots use a unique charging system with aluminum balls that connect to a circuit inside each robot, which aligns with conductive rails on a base station. The station has an entry ramp, an exit ramp, and grooved tracks with conductive tape enabling simultaneous charging for all robots docked on the platform.

Data processing is managed wirelessly, with the robots streaming audio to a host computer that processes the recordings for speech separation. Despite the bandwidth limits of Bluetooth, the system effectively compresses the audio data for live streaming, though not fast enough for real-time video conferencing.

Here is a prototype overview of these acoustic swarms:

- Consists of seven compact, roving robots.

- Each robot is equipped with speakers and microphones.

- Size: 3.0cm x 2.6cm x 3.0cm, small enough for easy deployment.

- Navigation: Emit high-frequency sounds for obstacle avoidance and optimal placement.

- Purpose: Autonomously adjust positions for crystal clear sound capture in noisy settings.

During tests in various settings, the robots distinguished different voices close to each other with a 90 percent success rate.

Manipulating Acoustics

The acoustic swarm uniquely achieves cooperative navigation with centimeter precision solely through sound, bypassing the need for cameras and external setups. Forming a self-spreading microphone array and utilizing an attention-focused neural network can distinguish and locate simultaneous speakers in a 2D environment, which constitutes the speech zone. This allows for mute zones, active zones, and the ability to separate multiple conversations while recognizing their locations.

Is the Technology Scalable?

When lead author of the UW research project Malek Itani and co-author Tuochao Chen were asked what it would take to scale this technology for use in concert halls, sports arenas, or even smart cities, they explained, “There would be an initial setup or calibration stage that would be cumbersome. You would need to physically place microphones in different locations and hand-measure distances or design a system that does that automatically. In our small system, self-localizing to centimeter-level is a bit easier. The sound the robots emit during localization would be almost undetectable if the robots were hundreds of feet away from each other, making localizing a challenge.”

According to the duo, if localization were solved, you would need a way to send and process data—a wireless infrastructure that supports sending the data over far distances. While Bluetooth LE reaches up to hundreds of meters, you would need to add redundancy to reduce packet losses. The number of microphones would also need to increase for a larger area, making it challenging to send the data quickly enough through the network.

As with any burgeoning technology, its promise brings both excitement and obstacles that typically take a while to overcome. In the meantime, you may not only see this acoustic swarm technology in a noisy restaurant, as the researchers also expect it to make its way into smart home applications.

Sources

- Itani, Malek, Tuochao Chen, Takuya Yoshioka, and Shyamnath Gollakota. “Creating speech zones with self-distributing acoustic swarms.” Nature Communications 14, September 21, 2023.

- Malek Itani and co-author Tuochao Chen, interview by author, October 2023.

- Milne, Stefan. “UW team’s shape-changing smart speaker lets users mute different areas of a room.” UW News, September 21, 2023.