Offers a solution to data congestion in AI and big data by adding computational functions to semiconductor memory

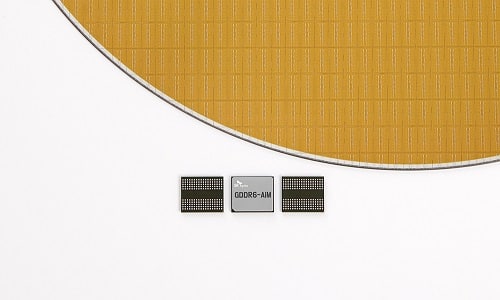

SK hynix's Processing-In-Memory (PIM) is a next-generation memory chip with built-in computing capabilities. By performing computation inside semiconductor memory, it addresses the data congestion that arises in AI and big data workloads.

The product, GDDR6-AiM (Accelerator in Memory), adds computational functions to GDDR6 memory chips, which process data at 16 Gbps. Pairing GDDR6-AiM with a CPU or GPU in place of conventional DRAM makes certain computations 16 times faster. GDDR6-AiM is widely expected to be adopted in machine learning, high-performance computing, and big data computation and storage.

GDDR6-AiM operates at 1.25 V, lower than the 1.35 V operating voltage of the existing product. In addition, because PIM reduces data movement to the CPU and GPU, it cuts power consumption by 80%. This, in turn, helps reduce the carbon emissions of devices that adopt the product.

The company expects continued innovation in this technology to bring memory-centric computing, in which semiconductor memory plays a central role, a step closer to reality in devices such as smartphones.

“SK hynix will build a new memory solution ecosystem using GDDR6-AiM, which has its own computing function,” said Ahn Hyun, Head of Solution Development at SK hynix. He added that the company will continue to evolve its business model and its direction for technology development.

SK hynix also plans to introduce a technology that combines GDDR6-AiM with AI chips in collaboration with SAPEON Inc., an AI chip company recently spun off from SK Telecom.

“The use of artificial neural network data has increased rapidly recently, requiring computing technology optimised for computational characteristics,” said Ryu Soo-jung, CEO of SAPEON Inc. “We aim to maximise efficiency in data calculation, costs, and energy use by combining technologies from the two companies.”