The sharp spike in interest and research in artificial intelligence (AI) is predominantly positive, but it has some alarming side effects, such as financial scams and plagiarism. Interestingly, AI also offers the means to prevent such frauds.

Addressing a joint session of the US Congress in June this year, India’s Prime Minister Narendra Modi said, “In the past few years, there have been many advances in AI—Artificial Intelligence. At the same time, there have been even more momentous developments in another AI—America and India.” The statement went down so well with the audience that US President Joe Biden gifted Modi a T-shirt bearing the text “The Future is AI—America and India”. During his visit to the US, the Prime Minister also met the heads of leading tech companies at the Hi-Tech Handshake mega event, including Google’s Sundar Pichai, Apple’s Tim Cook, OpenAI’s Sam Altman, and Microsoft’s Satya Nadella. They discussed topics ranging from semiconductor manufacturing and space exploration to AI, and some of these tech giants committed to various degrees of investment in India.

Satya Nadella shared some valuable insights about the power of AI to transform the lives of people in India. In May, Microsoft launched Jugalbandi, a generative AI-driven mobile chatbot for government assistance, in India. Users can speak or type questions in their local language. Jugalbandi will retrieve information on relevant programmes, usually available in English or Hindi, and present it to the users in their local language. Jugalbandi uses language models from AI4Bharat, a government-backed initiative, and reasoning models from Microsoft Azure OpenAI Service. AI4Bharat is an open source language AI centre based at the Indian Institute of Technology Madras (IIT-M). “We saw Jugalbandi as a kind of chatbot plus plus because it is like a personalised agent. It understands your exact problem in your language and then tries to deliver the right information reliably and cheaply, even if that exists in some other language in a database somewhere,” remarked Abhigyan Raman, a project officer at AI4Bharat, in a press release from Microsoft.

During the trials, Microsoft found rural Indians using the chatbot for various purposes like discovering scholarships, applying for pensions, figuring out the status of someone’s government assistance payments, and so on.

| Generative AI and the future of education |

| A recent UNESCO research paper titled Generative AI and the future of education is a must-read for anyone in the field of education. While it recognises the potential of AI chatbots to surmount language barriers, it clearly warns that generative AI is quite different from the AI technology that powers search engines. A chatbot gives an independent response, learnt from disparate sources but not attributable to any single person, and this is a cause for concern. Moreover, when students start getting answers (right or wrong) from a chatbot, how will it affect human relationships? Will it undermine the role of teachers in the education ecosystem? How can institutions and nations enforce proper regulations to prevent malpractice? These and other important issues related to the use of generative AI in education are discussed in the report. “The speed at which generative AI technologies are being integrated into education systems in the absence of checks, rules or regulations, is astonishing… In fact, AI utilities often required no validation at all. They have been ‘dropped’ into the public sphere without discussion or review. I can think of few other technologies that are rolled out to children and young people around the world just weeks after their development… The Internet and mobile phones were not immediately welcomed into schools and for use with children upon their invention. We discovered productive ways to integrate them, but it was not an overnight process. Education, given its function to protect as well as facilitate development and learning, has a special obligation to be finely attuned to the risks of AI—both the known risks and those only just coming into view. But too often we are ignoring the risks,” writes Stefania Giannini, Assistant Director-General for Education, UNESCO, in her July 2023 paper. |

Call from your business partner? Are you sure?

While such developments are heartening, the number of scams carried out with the help of inexpensive AI tools is alarming!

A few months ago, an elderly couple in the US got a call from their grandson, who seemed grief-stricken. He said he was in jail and needed a huge sum of money for bail. The couple frantically set about withdrawing money from their bank accounts. Seeing this, a bank manager took them aside and asked what the problem was. Smelling something fishy, he asked them to call their grandson back on his personal number. When they did, they found that he was safe and sound! The earlier call had come from an imposter, and naturally from an unknown number, since he claimed to be in jail and calling from the police station. What was more, the imposter sounded exactly like the grandson they had been speaking to for decades, and the couple hardly found anything amiss. Who was his partner in crime? A cheap AI tool which, when fed a few samples of a person’s recorded voice, can replicate their voice, accent, tone and even word choices quite precisely! You type in what you want it to say, and it says it exactly like the person you wish to mimic.

These kinds of scams using deepfake voices are not new. Way back in 2020, a bank manager in Hong Kong was duped into authorising a transfer of HK$35 million by a phone call from someone who sounded exactly like a company director. An energy company in the UK lost a huge sum when an employee, following phone instructions from his boss (actually a voice clone!), transferred money to an account purportedly belonging to a Hungarian supplier.

At that time, creating a deepfake took considerable time and resources, but today, voice-synthesising AI tools such as the one from ElevenLabs need just a 30-second audio clip to replicate a voice. The scammer only needs to grab a short clip of your voice, which could come from a video posted on social media, a recorded lecture, or a call with a customer care service (recorded, apparently, for training purposes!). And voilà, he is all set to fake your voice!

Such scams are on the rise worldwide. In banking circles, scams in which fraudsters impersonate a known person or entity and convince people to transfer money to them are known as authorised push payment (APP) frauds. According to reports, APP fraud now accounts for 40% of UK bank fraud losses and could cost US$4.6 billion in the US and the UK alone by 2026. According to data from the Federal Trade Commission (FTC), there were over 36,000 reports in the US last year of people being swindled by imposters. Of these, 5,100 happened over the phone and accounted for over US$11 million in losses. In Singapore, too, the number of reported scam cases rose by 32.6% in 2022 compared to 2021, with losses totalling SG$660 million. According to the Australian Competition and Consumer Commission (ACCC), people in Australia lost more than AU$3.1 billion to scammers in the past year, an increase of more than 80% over the year before. While not all these scams can be directly attributed to AI, officials are worried by the noticeable correlation between the rise of generative AI and such scams.

Fighting AI scams using AI (and a bit of the real stuff as well)

The Singapore Police Force, Europol, and the FTC have all voiced concerns about the potential misuse of generative AI to deceive the public. Tracking down voice scammers can be particularly difficult because they could be calling from any part of the world. And getting the money back is nearly impossible, because such cases are hard to prove even to your insurer. So, financial institutions as well as consumers must stay alert and try to prevent or avert fraud as far as possible. For instance, if a loved one or a business partner calls you out of the blue and asks you to transfer money, put the call on hold and call the person’s direct line to confirm the request before you rush to their rescue.

Banks are also doing their bit to prevent scams, account takeovers and the like. Data analytics company FICO found that financial institutions detected 50% more scam transactions by using targeted profiling of customer behaviour. That is, banks use models of each customer’s typical behaviour to flag anything unusual, such as adding a suspicious account as a new payee or preparing to send a new payee an unusually large amount of money. A bank employee can then intervene and ask the customer to reconfirm the flagged action.
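To make the idea of behavioural profiling concrete, here is a minimal Python sketch, assuming a customer’s history is nothing more than a list of past payment amounts and a set of known payees. The names `CustomerProfile` and `flag_payment` and the three-standard-deviation threshold are hypothetical illustrations, not FICO’s or any bank’s actual model.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class CustomerProfile:
    """Hypothetical per-customer history: past payment amounts and known payees."""
    past_amounts: list[float]
    known_payees: set[str] = field(default_factory=set)


def flag_payment(profile: CustomerProfile, payee: str, amount: float,
                 z_threshold: float = 3.0) -> list[str]:
    """Return reasons a payment looks unusual for this customer (empty = not flagged).

    Assumption for illustration: "unusual" means a payee not seen before, or an
    amount more than `z_threshold` standard deviations above the customer's mean.
    """
    reasons = []
    if payee not in profile.known_payees:
        reasons.append("new payee")
    if len(profile.past_amounts) >= 2:
        mu = mean(profile.past_amounts)
        # Fall back to 1.0 if every past payment was identical (zero spread)
        sigma = pstdev(profile.past_amounts) or 1.0
        z = (amount - mu) / sigma
        if z > z_threshold:
            reasons.append(f"amount {z:.1f} std devs above usual")
    return reasons


if __name__ == "__main__":
    profile = CustomerProfile([40.0, 55.0, 60.0, 45.0, 50.0], {"grocer", "landlord"})
    print(flag_payment(profile, "landlord", 52.0))        # routine payment
    print(flag_payment(profile, "unknown-acct", 5000.0))  # new payee, large amount
```

A real system would learn far richer patterns; the point of the sketch is only that each payment is judged against the customer’s own history rather than one fixed rule for everyone, and that a non-empty list of reasons is what would prompt a bank employee to step in.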

Just as the antivenom for a snake bite is made from the snake’s own venom, AI itself is essential to detecting and preventing AI frauds! This year, Mastercard rolled out a new AI-powered tool to help banks predict and prevent scams in real time, before money leaves the victim’s account. Mastercard’s Consumer Fraud Risk tool applies AI to the company’s unique network view of account-to-account payments and large-scale payments data.

The press release explains that organised criminals move ‘scammed’ funds through a series of ‘mule’ accounts to disguise them. For the past five years, Mastercard has helped banks counter this by tracing the flow of funds through these accounts so that they can be closed down. Now, the new AI-based Consumer Fraud Risk tool overlays insights from this tracing activity with specific factors such as account names, payment values, payer and payee history, and the payee’s links to accounts associated with scams. This gives banks the intelligence they need to intervene in real time and stop a payment before the funds are lost. UK banks such as TSB, which were early adopters of the tool, say the benefits are already visible. Mastercard will soon offer the service in other geographies, including India.

In response to rising criticism and concern, makers of generative AI tools like chatbots and voice synthesisers have also taken to Twitter to announce that they are putting checks in place to prevent misuse. ChatGPT claims to have restrictions against generating malicious content, but officials feel that a smart scammer can easily bypass these. After all, a scam call does not involve foul language or overtly malicious content. It is meant to sound like as normal a conversation as can be! So, how effective would these checks be?

In a very interesting post titled Chatbots, deepfakes, and voice clones: AI deception for sale, Michael Atleson, Attorney, FTC Division of Advertising Practices, asks companies to think twice before they create any kind of AI-based synthesising tool. “If you develop or offer a synthetic media or generative AI product, consider at the design stage and thereafter the reasonably foreseeable—and often obvious—ways it could be misused for fraud or cause other harm. Then ask yourself whether such risks are high enough that you should not offer the product at all. It has become a meme, but here we will paraphrase Dr Ian Malcolm, the Jeff Goldblum character in Jurassic Park, who admonished executives for being so preoccupied with whether they could build something that they did not stop to think if they should.”

Who wrote that assignment?

AI is turning out to be a headache for educational institutions too! In a recent survey of 1,000 university students conducted by Intelligent.com, 30% admitted to using ChatGPT to complete their written assignments! If this goes on, we will end up with professionals who do not know their jobs. No wonder universities across the world are exploring ways to curtail the use of ChatGPT for assignments.

Companies like Winston AI, Content at Scale, and Turnitin offer subscription-based AI tools that help teachers detect AI involvement in work submitted by students. Teachers can quickly run their students’ work through a web-based tool and receive a score indicating the probability that AI was involved in the assignment. Experts believe there are always a few clues that give away AI-generated content, such as overuse of the article ‘the’, a complete absence of typos and spelling errors, and a clean, predictable style quite unlike how humans write. Language models can themselves be used to detect AI-generated content: they are trained on both human-written and machine-generated text and taught to tell the two apart. Once more, a case of AI helping counter an evil of AI!
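The idea of teaching a model to tell human and machine text apart can be illustrated with a deliberately tiny Python sketch. It swaps in a multinomial naive Bayes classifier over word counts instead of a large language model, and the class name and training sentences are hypothetical; the source does not say how commercial detectors work, and a toy like this would be far from reliable.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesDetector:
    """Toy multinomial naive Bayes classifier with add-one (Laplace) smoothing.

    Hypothetical illustration only: needs at least one training example per label.
    """

    LABELS = ("human", "ai")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        """Add one labelled example ("human" or "ai")."""
        self.word_counts[label].update(tokenize(text))
        self.doc_counts[label] += 1

    def prob_ai(self, text: str) -> float:
        """Return the model's probability that `text` is machine-generated."""
        vocab = set(self.word_counts["human"]) | set(self.word_counts["ai"])
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label in self.LABELS:
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(vocab)
            # Log prior plus the log likelihood of every token under this label
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denom)
            log_scores[label] = score
        # Turn the two log scores into a probability (subtract the max for stability)
        top = max(log_scores.values())
        weights = {label: math.exp(s - top) for label, s in log_scores.items()}
        return weights["ai"] / sum(weights.values())
```

After a few calls to `train`, `prob_ai` returns a score between 0 and 1, loosely mirroring the probability score teachers receive. With only a handful of training sentences, though, it simply learns whichever words happen to be more frequent in each group, which is why real detectors need far more data and still make mistakes.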

The problem does not end with students making AI do assignments. It is about morality. In the real world, it leads to problems like misinformation and copyright infringement. And when such issues arise, people do not even know whom to sue!

Take the case of image generation with AI. It is now possible to create lifelike images using AI platforms like Midjourney. While this creates a slew of opportunities for industries such as advertising and video game production, it also raises a range of issues, including piracy and privacy violations. Usually, the copyright in an image rests with the human artist who created it. When an AI platform creates the image, with whom does the copyright rest? And if the image infringes on someone else’s copyright, whom should the wronged artist sue? In one of his articles in the mainstream media, Anshul Rustaggi, Founder, Totality Corp., warns that with AI generating hyper-realistic images and deepfakes, businesses must address the risks of misuse, personal privacy infringements, and the spread of disinformation. He adds that distinguishing between real and AI-generated content could become increasingly challenging, creating potential avenues for misinformation and manipulation.

In April this year, members of the European Parliament agreed to push the draft of the AI Act to the trilogue stage, wherein EU lawmakers and member states will work out the final details of the bill. According to the early EU agreement, AI tools will be classified according to their perceived risk level, taking into account risks like biometric surveillance, spreading misinformation, or discriminatory language. Those using high-risk tools will need to be highly transparent in their operations. Companies deploying generative AI tools, such as ChatGPT or Midjourney, will also have to disclose any copyrighted material used to develop their systems. According to a Reuters report, some committee members initially proposed banning copyrighted material being used to train generative AI models altogether, but this was abandoned in favour of a transparency requirement.

Speaking of misinformation reminds us of two recent episodes that left the world wondering who should be sued when ChatGPT generates wrong information! In April this year, Australian mayor Brian Hood threatened to sue OpenAI after ChatGPT falsely claimed that he had served time in jail for his involvement in a bribery scandal.

Elsewhere, a man sued the airline Avianca after he collided with a serving cart and hurt his knee on a flight to New York. In his brief, the man’s lawyer cited ten case precedents suggested by ChatGPT, but when the airline’s lawyers and the judge checked them, it turned out that not one of the cases existed! The lawyer who prepared the brief pleaded for mercy, stating in his affidavit that he had done his legal research using AI, “a source that has revealed itself to be unreliable.”

India and AI: Innovative uses and ambitious plans

Well, there is no denying that this is turning out to be the year of AI. It is spreading its wings like never before—at a speed that many are uncomfortable with. True, there are some fearsome misuses that we must contend with. But hopefully, as countries take a more balanced view and put regulations in place, things will improve. So, we will end this month’s AI update on a more positive note, because that is the dominant tone in India as of now. The country is upbeat about the opportunities that AI opens, and raring to face the challenges that come with it.

We keep reading about AI tech being born in India’s startups, about the smart use of AI by enterprises, government initiatives to promote AI, and much more. Recently, our Union Ministry of Communications conducted an AI-powered study of 878.5 million mobile connections across India, and found that 4.087 million numbers were obtained using fake documents. Many of these were in sensitive geographies like Kashmir, and were promptly deactivated by the respective service providers. If that is not impactful, then what is!

Soon after the Prime Minister’s awe-inspiring talks in the US, the Uttar Pradesh government announced plans to develop five major cities, namely Lucknow, Kanpur, Gautam Buddha Nagar (Noida), Varanasi, and Prayagraj (Allahabad), as future hubs of AI, information technology (IT), and IT-enabled services. Lucknow is likely to emerge as India’s first ‘AI City.’

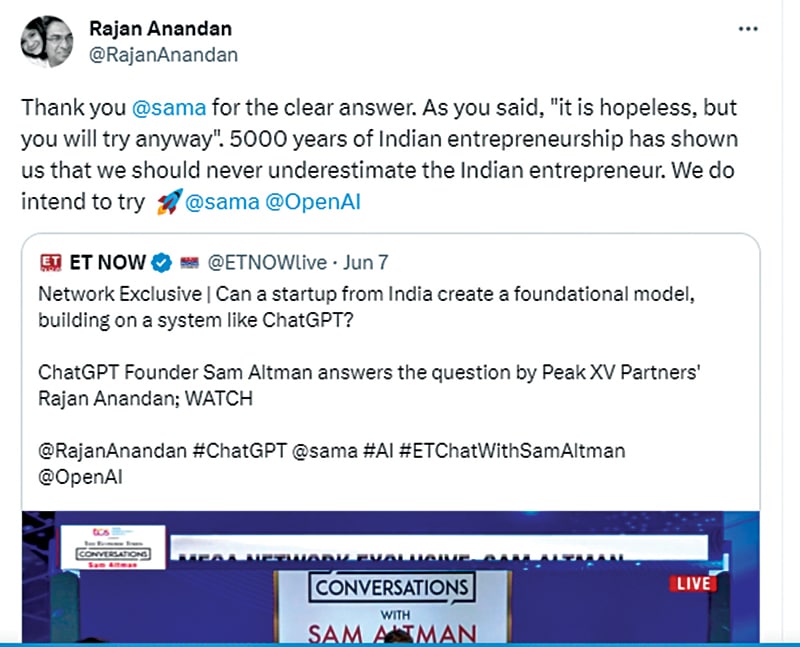

On another note, Sam Altman of OpenAI created quite a stir on social media with his ill-considered comments at an event in India during his visit in June. Venture capitalist Rajan Anandan, a former head of Google India, asked Altman for guidance for Indian startups on building foundational AI models: how should they think about it, and where should a team from India start in order to build something truly substantial? Altman replied, “The way this works is, we’re going to tell you, it’s totally hopeless to compete with us on training foundation models, you shouldn’t try, and it’s your job to like try anyway. And I believe both of those things. I think it is pretty hopeless.” He did try to clean up the mess by saying his statements had been taken out of context and that the question was wrong, but what ensued on social media was nothing short of a riot! His remarks drew quite a few interesting replies, one of the best being from CP Gurnani of Tech Mahindra: “OpenAI founder Sam Altman said it’s pretty hopeless for Indian companies to try and compete with them. Dear @sama, from one CEO to another… CHALLENGE ACCEPTED.”

According to NASSCOM research published in February this year, India has the second-largest pool of highly qualified AI, machine learning, and big data expertise, behind the United States. It produces 16% of the world’s AI talent pool, placing it among the top three talent marketplaces alongside the United States and China. No doubt, Indian minds are contributing to some of the major AI breakthroughs all over the world. Put to good use, perhaps this talent can even prove Sam Altman of OpenAI wrong!

Janani G. Vikram is a freelance writer based in Chennai, who loves to write on emerging technologies and Indian culture. She believes in relishing every moment of life, as happy memories are the best savings for the future.