COM-HPC server addresses the entire range of edge servers: the new server-on-modules have a maximum power consumption of 300 watts and can currently support up to one terabyte of RAM on up to eight SO-DIMM sockets.

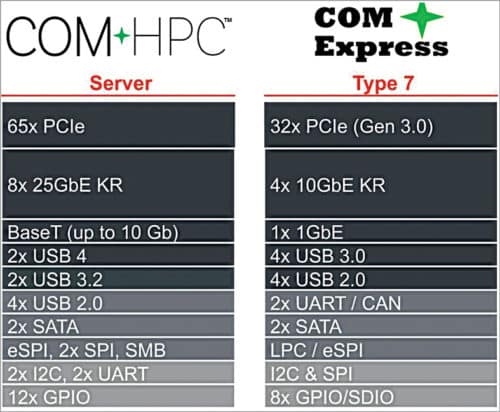

The PCI Industrial Computer Manufacturers Group (PICMG) will soon officially adopt a computer-on-module for high-performance computing (COM-HPC) standard. Part of the new standard is the COM-HPC server specification, developed for high-end edge server computing, which eagerly awaits new high-speed interfaces such as PCI Express 4.0 and 5.0 as well as 25 Gigabit Ethernet. With its 800 signal lines, the new specification offers almost twice the interface bandwidth of established COM Express Type 7-based server-on-modules and therefore provides a significantly greater range of functions in addition to the performance boost.

The COM-HPC server specification addresses the entire range of edge servers: the new server-on-modules have a maximum power consumption of 300 watts and can therefore use powerful Intel Xeon and AMD EPYC server processors. However, the first modules are not expected to become available until the launch of the next generation of Intel embedded server processors.

COM-HPC modules can currently support up to one terabyte of RAM on up to eight SO-DIMM sockets. The memory hosted on a module cannot be made directly available to other processors via Intel Ultra Path Interconnect (UPI) or AMD Infinity Fabric Inter-Socket links. Instead, it is available exclusively to the individual COM-HPC module. Dual-die, single-socket Cascade Lake processor technology with up to twelve RAM sockets is also not feasible with COM-HPC.
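As a quick arithmetic check of what the memory ceiling implies, the sketch below divides the stated one-terabyte maximum across the eight SO-DIMM sockets. It assumes binary units (1 TB = 1,024 GB) and that every socket holds a module of identical capacity; the function name is purely illustrative.

```python
def per_socket_capacity_gb(total_gb: int, sockets: int) -> int:
    """Capacity each socket must hold to reach total_gb, assuming identical modules."""
    if sockets <= 0:
        raise ValueError("sockets must be positive")
    if total_gb % sockets:
        raise ValueError("total capacity does not divide evenly across sockets")
    return total_gb // sockets


# One terabyte (binary units) spread across up to eight SO-DIMM sockets.
TOTAL_GB = 1024
SOCKETS = 8

print(per_socket_capacity_gb(TOTAL_GB, SOCKETS))
```

In other words, reaching the ceiling requires 128 GB per SO-DIMM; with fewer or smaller modules, the usable capacity scales down accordingly.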

Master/slave function for artificial intelligence

The roadmap nevertheless includes multi-module installations on carrier boards, since COM-HPC allows master/slave configurations. However, this feature is not primarily designed for dual-processor carrier boards, where two or more COM-HPC server modules would be operated in master/slave mode. Rather, it aims to enable communication between COM-HPC server modules and COM-HPC FPGA and/or COM-HPC GPGPU modules, the third application area of the COM-HPC standard besides COM-HPC server and COM-HPC client modules.

The master/slave function, available for the first time with computer-on-modules, was primarily implemented to make flat GPGPU or FPGA modules available in a form factor that is significantly more powerful than MXM. The COM-HPC standard therefore also stands for the integration of functions such as artificial intelligence, deep learning, and digital signal processing (DSP), which is important for telecommunications. With COM-HPC, all these functions can be made available in a robust, space-saving, and scalable manner. Since COM-HPC server-on-modules are generally not used on dual-processor boards, the standard positions itself in the mid-performance segment of server technology, which is required in many diverse configurations at the edge.

5G infrastructure servers and connected edge systems

Much of the demand for robust server-class performance comes from edge applications that reside directly in and at the network and communication infrastructures. Examples are outdoor small cells on 5G radio masts for the tactile broadband Internet, or carrier-grade edge server designs with a 50.8cm (20-inch) installation depth, which are currently found in 3G/4G edge server rooms where racks are just 600mm deep. In both cases, the systems are often enhanced with GPU or FPGA boards for future 5G designs. COM-HPC server modules are, of course, also attractive for the related 5G measurement technology.

OCCERA server with full NFV and SDN support

Another application area for COM-HPC server modules is robust edge server designs that are based on the Open Compute Carrier-grade Edge Reference Architecture (OCCERA) and built into racks with a depth of 1000mm or 1200mm. Due to increasing virtualisation and support for network functions virtualisation (NFV) and software-defined networking (SDN), dedicated infrastructure equipment is being implemented on such open standard platforms in growing numbers. This includes firewalls, intrusion detection systems, traffic shaping, content filters, deep packet inspection, unified threat management, and antivirus applications. Here too, users benefit from on-demand scalability and cost-effective performance upgrades for the next generation of servers.

From Industry 4.0 to workload consolidation

Ultimately, edge equipment also includes all the fog computers close to the applications, that is, redundant clouds at the edge. In harsh industrial environments, these are required for Industry 4.0 communication and/or to implement new business models and comprehensive predictive maintenance using Big Data analytics at the edge. Another emerging application area is workload consolidation: concentrating the computing performance on a single edge server instead of distributed computers, for instance, to control a manufacturing cell with several robots.

Energy grids, autonomous vehicles, and rail transport

Similar edge servers are also needed in smart energy networks to balance energy generation and consumption in microgrids and virtual energy networks in real time. In such distributed applications, real-time 5G plays a major role, since the high bandwidths required along the infrastructure paths can be provisioned easily.

Autonomous vehicles will benefit from 5G, too. They also need COM-HPC server performance in the cockpit, as they have to process high data bandwidths onboard to analyse input from hundreds of sensors in fractions of a second. Further applications can be found in transportation, namely in the rail sector, to provide a full range of services, from security technology to streaming services for infotainment. Mobile broadcasting systems and streaming servers, as well as equipment for non-public mobile terrestrial radio networks used by security authorities and organisations, are further application areas for COM-HPC server modules.

Smart vision systems

A considerable number of COM-HPC server applications can also be found in medical backend systems, industrial inspection systems, and video surveillance solutions at the edge for increased public safety, where, as with autonomous vehicles, massive video and imaging data have to be processed in parallel. However, a significant share of these applications will rely not on COM-HPC GPGPU modules but on even more powerful FPGA extensions or PCI Express Graphics (PEG) cards.

Modular motherboard designs

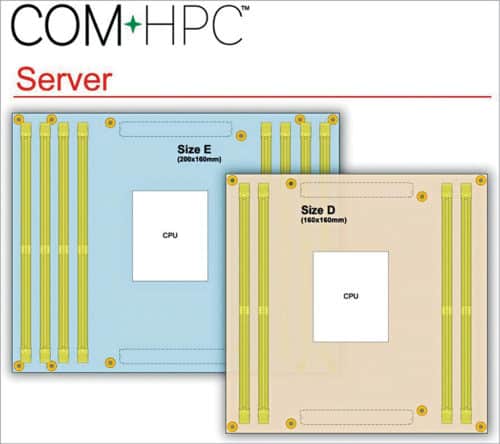

In addition to the often dedicated system designs for the application areas described so far, COM-HPC modules will also be deployed on robust standard motherboards, which are available long-term and ideal for the design of smaller servers. Used mostly in rackmount, workstation, or tower designs, these boards range from the µATX format (244mm×244mm) to the ATX form factor (305mm×244mm). EEB mainboards (305mm×330mm) will also be able to use COM-HPC.

As some EEB boards feature two processors, COM-HPC will only be able to conquer part of this form factor market. However, it will bring previously unimaginable scalability to this market, coupled with the option to customise the I/O interfaces extremely cost-effectively and efficiently. This is an enormous advantage for edge applications, as the industrial communication landscape becomes extremely heterogeneous towards the process and field levels. In summary, COM-HPC server modules are suitable for an extremely wide range of edge and fog server applications in harsh environments, with the focus clearly on processing performance.

Why use COM-HPC server modules?

The COM-HPC server specification offers edge servers the full advantages of standardised server-on-modules familiar from the computer-on-module market: performance can be tailored to the specific needs of the application. In a rack with identical server slots, it is therefore possible to adapt the physical performance through the choice of each individual module, thereby optimising the price/performance ratio for the application. This is a particular advantage for real-time applications, where simply scaling via load balancing regardless of the real physical resources is not an option. Rather, it must be possible to allocate real resources in a dedicated way.

When performance upgrades are implemented on the basis of an existing system design, the cost of moving to the next generation can be reduced to about fifty per cent of the initial investment because, in the majority of cases, it is enough to simply replace the processor module. This significantly reduces the total cost of ownership (TCO) and accelerates return on investment (ROI). Manufacturer-independent standardisation also ensures second-source strategies, competitive prices, and high long-term availability. Finally, server-on-modules are also more sustainable: because fewer components have to be replaced, they contribute to a lower CO2 footprint of server technology in production, which significantly lowers the environmental impact, even though considerable energy is still consumed in continuous operation.
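To illustrate how the roughly fifty per cent upgrade cost compounds over a system's life, the sketch below compares replacing the complete system at each generation with swapping only the processor module. The initial investment and the number of upgrades are hypothetical, normalised figures, and the function name is illustrative; real savings depend on how much of the carrier design can actually be reused.

```python
def lifetime_cost(initial: float, upgrade_fraction: float, generations: int) -> float:
    """Total spend for the first system plus `generations` performance upgrades.

    upgrade_fraction is the cost of each upgrade relative to the initial investment
    (1.0 = buy a complete new system, 0.5 = replace only the processor module).
    """
    if generations < 0:
        raise ValueError("generations must be non-negative")
    return initial + generations * upgrade_fraction * initial


INITIAL = 100.0  # hypothetical, normalised initial investment
GENERATIONS = 2  # hypothetical number of performance upgrades

full_replacement = lifetime_cost(INITIAL, 1.0, GENERATIONS)
module_swap = lifetime_cost(INITIAL, 0.5, GENERATIONS)

print(f"full replacement: {full_replacement:.0f}")  # 300
print(f"module swap:      {module_swap:.0f}")       # 200
print(f"saving:           {1 - module_swap / full_replacement:.0%}")  # 33%
```

Under these assumptions, two module-based upgrades cut lifetime spend by a third compared with full replacement; the relative saving grows with each additional generation.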

Advanced server management interfaces

Another advantage of the new specification is a dedicated remote management interface, currently being defined by the PICMG Remote Management Subcommittee. The aim is to make a subset of the functions specified in the Intelligent Platform Management Interface (IPMI) available for managing edge server modules remotely. Similar to the master/slave function, COM-HPC will also provide extended remote management functions to communicate with the modules. This feature is intended to give OEMs and users the same reliability, availability, maintainability, and security (RAMS) that is common in servers. For individual needs, this function can be expanded via a baseboard management controller (BMC) implemented on the carrier board. This provides OEMs with a uniform basis for remote management that can be adapted to specific requirements.

Unbeatable custom interface design and performance upgrades

All in all, COM-HPC server offers many advantages. Whenever edge servers need a very specific mix of interfaces, server-on-modules are the best option. In such cases, a carrier board design can be implemented much faster and more cost-effectively than a full-custom design, saving up to eighty per cent of the non-recurring engineering (NRE) costs, sometimes even more.
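To put the NRE claim in concrete terms, the sketch below computes the engineering budget left for a carrier-board design at a given fractional saving versus a full-custom design. The full-custom budget is a hypothetical figure, and the eighty per cent value is the upper bound quoted for COM-HPC, not a guaranteed result.

```python
def carrier_board_nre(full_custom_nre: float, saving_fraction: float) -> float:
    """NRE remaining for a carrier-board design, given a fractional saving vs full custom."""
    if not 0.0 <= saving_fraction <= 1.0:
        raise ValueError("saving_fraction must be between 0 and 1")
    return full_custom_nre * (1.0 - saving_fraction)


FULL_CUSTOM_NRE = 250_000.0  # hypothetical full-custom NRE budget

# Compare a moderate saving with the quoted upper bound.
for saving in (0.5, 0.8):
    nre = carrier_board_nre(FULL_CUSTOM_NRE, saving)
    print(f"{saving:.0%} saving -> carrier-board NRE of about {nre:,.0f}")
```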

COM-HPC server modules are also very likely to find widespread use in standard industrial servers, as the cost advantages of performance scaling for the next generation are unmatched. The use of modules will be further strengthened by the fact that server performance will increasingly be provided as a service, so that the investment is borne by the service provider instead of the user.

COM-HPC: One standard, three application fields

The PICMG COM-HPC standard specifies three variants in total. The first is the COM-HPC server module, positioned above the headless COM Express Type 7 specification; the second is the COM-HPC client module, regarded as the successor to the COM Express Type 6 specification.

A third application field for the specification is FPGA/GPGPU modules, for which suitable master/slave functions have been reserved in the standard. While COM-HPC server and FPGA/GPGPU modules are mainly perceived as innovations, COM-HPC client modules raise concerns about a disruptive effect on the popular COM Express Type 6.

Users of these modules are therefore a little more skeptical about the COM-HPC standard. They want to protect existing COM Express investments and may ask themselves: How long will COM Express be offered, and do I have to switch to COM-HPC now? What are the advantages for my customers? They primarily want to know what the COM-HPC client modules have to offer and how they differ from COM Express.

When referring to computer-on-modules instead of client modules, users quickly grasp the new concept. They are familiar with the advantages of these products since the ETX computer-on-module standard was published at the beginning of the millennium.

COM Express computer-on-modules will not disappear any time soon, as thousands of OEMs use them in their industrial applications, relying on the long-term availability promises of embedded processor and embedded form factor standards. So, you can safely stick with your COM Express designs. There is definitely no need for change as long as the given interface specification suits your requirements.

On the other hand, moving from COM Express to COM-HPC designs is definitely recommended for developers who wish to benefit from new features such as PCIe Gen 4, more than 32 PCIe lanes for massive I/O, four video interfaces, USB4, 25 Gigabit Ethernet, and advanced remote management.

But unless you fall into this group, there is no reason for a rushed change. So, relax and continue to rely on the established open standards.

Christian Eder is the marketing director of congatec AG and chairman of the PICMG COM-HPC subcommittee