With options such as CPUs, GPUs, and TPUs, choosing the right computing platform for AI models can be difficult. Each offers a different balance of performance, cost, and efficiency, which must be weighed carefully against the application at hand.

An artificial intelligence (AI) enthusiast or professional often comes across a common question: “What kind of computing platform do I need to train my AI models on?”

Students and researchers are frequently at a crossroads when it comes to choosing the best computing platform for running AI models. Can these models be run on laptop computers? Or do they need to invest in GPUs or rent a cloud computing platform for different AI applications?

With a plethora of computing platforms on the market, including central processing units (CPUs), graphics processing units (GPUs), tensor processing units (TPUs), application-specific integrated circuits (ASICs), field-programmable gate arrays (FPGAs), and hybrid chips, it is relatively common to choose the wrong platform and burn a hole in one’s schedule and wallet.

But how can one choose the hardware platform best suited to a given application? Several major platforms can be used to run AI models, and each has distinct strengths.

The AI Revolution

It would not be an exaggeration to say that we are in the midst of a new industrial revolution. Thanks to AI, we have witnessed firsthand how numerous industries have changed and how technology is advancing by leaps and bounds like never before.

So much so that most large tech companies, such as Microsoft, Google, Meta, and Tesla, now call themselves AI-first companies.

And things are only getting bigger: according to leading estimates, AI is projected to become a trillion-dollar industry by the end of this decade. Meeting the computational needs of AI and machine learning (ML) requires specialised hardware.

Thankfully, the semiconductor industry has grown hand in hand with the AI revolution and has churned out a galaxy of computing platforms that can be used to run AI workloads.

Some of the most common ones include CPUs, GPUs, and TPUs. FPGAs, ASICs, and hybrid chips are also used for AI workloads, while neuromorphic and quantum computers are being explored for them.

The CPUs

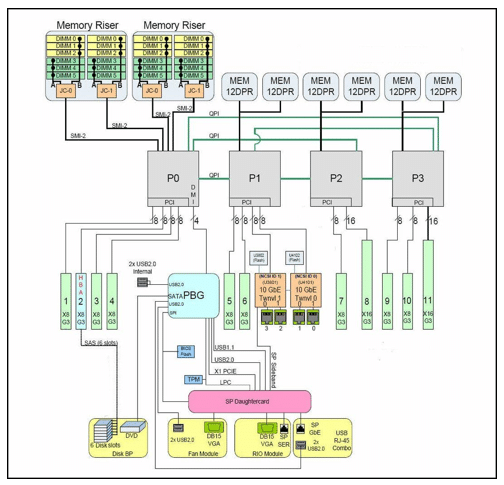

CPUs are the general-purpose workhorses of modern computing, designed to handle a broad spectrum of tasks. The CPU architecture excels at sequential processing, prioritising strong single-threaded performance and low latency, with multi-threaded work spread across a relatively small number of cores.

This versatility makes CPUs integral to various computing systems, from personal computers to large servers.

One of the key strengths of CPUs is their generality: they support a wide range of frameworks and software. CPUs are generally not preferred for core AI applications, but they are useful for tasks that support AI, such as data pre-processing, control logic, and running the host operating system.

When it comes to core AI workloads, CPUs are suitable for developing smaller models and prototypes, where the cost overhead of specialised hardware may not be justified.

However, despite their versatility, CPUs are not well suited to the highly parallel nature of AI algorithms. Tasks like training large-scale artificial neural networks (ANNs) can be inefficient on CPUs, leading to much longer training times than on specialised platforms.

Examples of popular CPUs include the Intel Core i9, i7, Intel Celeron, and AMD Ryzen 9.

The GPUs

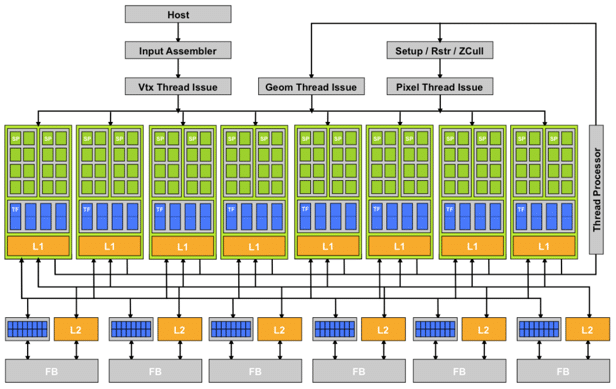

The recent explosion of AI can be largely attributed to the prevalence of GPUs. Originally designed for rendering graphics in gaming and video applications, GPUs have come a long way and are now the de facto platform for running AI workloads, thanks to their ability to perform parallel computations efficiently.

A GPU’s architecture consists of thousands of smaller cores, enabling it to perform many calculations simultaneously, which forms the bedrock for deep learning tasks.

This is one of the biggest factors that differentiates a GPU from a CPU. GPUs excel at parallel processing, making them ideal for matrix operations and extensive computations required in almost all AI workloads.
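
To see why matrix operations suit this design, note that every element of a matrix product is an independent dot product, so each one can in principle be handed to a different core. The following is a minimal pure-Python sketch of that idea, not a description of how any GPU library is implemented. The names `matmul_cell`, `matmul`, and `ideal_steps` are illustrative, and the step count assumes an idealised machine with no memory or scheduling overhead.

```python
import math


def matmul_cell(a, b, i, j):
    """Compute one output element: the dot product of row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul(a, b):
    """Naive matrix product.

    Every (i, j) cell is computed independently of the others, which is
    what makes the work easy to spread across many cores.
    """
    rows, cols = len(a), len(b[0])
    return [[matmul_cell(a, b, i, j) for j in range(cols)] for i in range(rows)]


def ideal_steps(independent_tasks, cores):
    """Rounds needed if each core handles one task per round (zero overhead assumed)."""
    return math.ceil(independent_tasks / cores)


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product = matmul(a, b)  # [[19, 22], [43, 50]]

# A 1024 x 1024 output matrix has 1,048,576 independent cells.
cells = 1024 * 1024
steps_8_cores = ideal_steps(cells, 8)        # 131072 rounds
steps_4096_cores = ideal_steps(cells, 4096)  # 256 rounds
```

Real hardware never reaches this ideal, but the gap between the two round counts shows why a chip with thousands of simple cores can outpace one with a handful of fast cores on this kind of work.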

Popular AI frameworks like TensorFlow and PyTorch are even optimised to leverage the capabilities of GPUs, enhancing their performance in AI tasks.
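
In user code, this framework support usually shows up as a device choice. The sketch below assumes PyTorch may or may not be installed; `pick_device` is a hypothetical helper name, while `torch.cuda.is_available()` is the PyTorch call that reports whether a GPU is usable through its CUDA interface.

```python
def pick_device():
    """Return "cuda" when PyTorch reports a usable GPU, otherwise "cpu".

    Falls back to "cpu" when PyTorch is not installed at all.
    """
    try:
        import torch  # optional dependency; assumed here, not required
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(f"Running on: {device}")
```

The returned string can then be passed to PyTorch wherever a device is expected, so the same script runs on a laptop CPU or a GPU server without changes.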

While GPUs offer substantial performance benefits, they are power-hungry and typically more expensive than CPUs. These factors must be considered in large-scale deployments, where cost and energy efficiency can be critical.

GPUs are the preferred choice for training deep learning models, image and video processing, and high-performance inference tasks. Their ability to handle large-scale, parallelisable computations makes them indispensable in these areas.

Examples of leading GPUs include the NVIDIA A100, the Blackwell-based NVIDIA B100, and the AMD Instinct MI100.

The TPUs

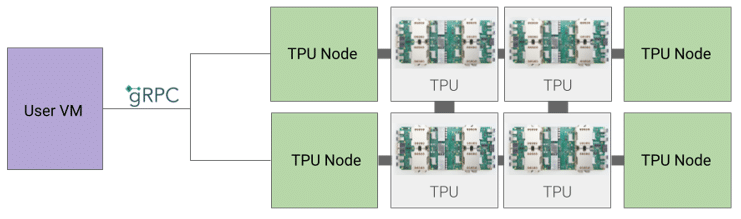

TPUs are custom-built ASICs developed by Google specifically to accelerate AI workloads. These processors are optimised for tensor operations, which are fundamental to many AI algorithms, providing high throughput for matrix multiplications.
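
As a rough illustration of how much arithmetic sits behind these tensor operations, multiplying an m × k matrix by a k × n matrix takes about 2·m·k·n floating-point operations (one multiply and one add per term). The sketch below applies that count to a single dense layer. The layer sizes are made-up figures, and `matmul_flops` is an illustrative helper, not part of any TPU API.

```python
def matmul_flops(m, k, n):
    """Approximate floating-point operations for an (m x k) @ (k x n) product.

    Each of the m * n outputs needs k multiplies and about k adds.
    """
    return 2 * m * k * n


# Hypothetical dense layer: a batch of 256 inputs, 4096 features in, 4096 out.
batch, features_in, features_out = 256, 4096, 4096
layer_flops = matmul_flops(batch, features_in, features_out)

print(f"{layer_flops:,} FLOPs for one forward pass of this layer")
```

A single layer at this assumed size already needs billions of operations per batch, which is why hardware built around matrix-multiplication throughput pays off.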

TPUs offer superior performance and efficiency for both training and inference of large-scale neural networks. They are highly optimised for Google’s TensorFlow framework, making them a powerful tool for AI tasks that require significant computational resources.

Their efficiency also makes them suitable for deployment in data centres and cloud environments.

However, the primary limitation of TPUs is their specialisation. They are mainly optimised for TensorFlow, which can limit their compatibility with other AI frameworks. Additionally, direct access to TPU hardware is often available only through Google Cloud, which can be a barrier for some users.

TPUs can be used for AI workloads built with TensorFlow, Keras, or PyTorch (the last through the PyTorch/XLA integration).

Performance and Efficiency

CPUs are versatile but less efficient for large-scale parallel computations.

GPUs provide high performance for deep learning and parallelisable tasks, balancing flexibility and power consumption.

TPUs offer the highest performance and efficiency for TensorFlow-optimised workloads, but are less flexible with other AI frameworks.

Cost and Power Consumption

CPUs are less expensive and consume less power for general tasks, but they are not practical for large-scale AI workloads.

While more expensive and power-intensive, GPUs provide good value for performance in AI training and inference.

TPUs, despite their high initial costs, can be cost-effective for large-scale deployments in cloud environments due to their efficiency.
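
The trade-off can be made concrete with simple arithmetic: the cost of a job is its run time multiplied by the hourly price, and its energy use is average power multiplied by run time. The figures below are invented purely for illustration and are neither measurements nor real prices; `job_cost` and `job_energy_kwh` are hypothetical helpers.

```python
def job_cost(hours, price_per_hour):
    """Total cost of a job: run time multiplied by hourly price."""
    return hours * price_per_hour


def job_energy_kwh(hours, avg_power_watts):
    """Energy used by a job in kilowatt-hours."""
    return hours * avg_power_watts / 1000


# Invented figures for the same training job on two platforms.
cpu = {"hours": 100.0, "price_per_hour": 0.5, "avg_power_watts": 150.0}
gpu = {"hours": 5.0, "price_per_hour": 3.0, "avg_power_watts": 400.0}

cpu_cost = job_cost(cpu["hours"], cpu["price_per_hour"])           # 50.0
gpu_cost = job_cost(gpu["hours"], gpu["price_per_hour"])           # 15.0
cpu_energy = job_energy_kwh(cpu["hours"], cpu["avg_power_watts"])  # 15.0 kWh
gpu_energy = job_energy_kwh(gpu["hours"], gpu["avg_power_watts"])  # 2.0 kWh
```

With these made-up numbers, the accelerator is pricier per hour yet cheaper per job because it finishes sooner. For a small model whose run times barely differ, the comparison would flip in favour of the CPU.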

Use Cases and Applications

CPUs are ideal for prototyping, small-scale models, data preprocessing, and control logic in AI pipelines.

GPUs are best for training deep learning models, image and video processing, and high-performance inference tasks.

TPUs are optimal for large-scale deep learning, NLP, and recommendation systems, particularly in cloud-based environments.
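
The guidance in this section can be summarised as a small rule-of-thumb function. This is only a sketch of the recommendations above, not a definitive selection procedure; the function name, parameters, and task labels are all assumptions made for illustration.

```python
CPU_TASKS = {"prototyping", "small_model", "preprocessing", "control_logic"}
GPU_TASKS = {"training", "image_video", "inference"}
TPU_TASKS = {"training", "nlp", "recommendation"}


def suggest_platform(task, large_scale=False, cloud=False):
    """Map a workload description to the platform this article suggests.

    task is one of the labels in CPU_TASKS, GPU_TASKS, or TPU_TASKS.
    TPUs are suggested only for large-scale, cloud-based workloads.
    """
    if task not in CPU_TASKS | GPU_TASKS | TPU_TASKS:
        raise ValueError(f"unknown task label: {task!r}")
    if task in CPU_TASKS:
        return "CPU"
    if task in TPU_TASKS and large_scale and cloud:
        return "TPU"
    return "GPU"


print(suggest_platform("prototyping"))                         # CPU
print(suggest_platform("training"))                            # GPU
print(suggest_platform("nlp", large_scale=True, cloud=True))   # TPU
```

A real decision would also weigh budget, framework support, and hardware availability, which this sketch deliberately leaves out.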

Thus, choosing the right processor for AI workloads involves balancing performance, cost, and efficiency based on the specific requirements of the task. Understanding their differences is equally crucial for optimising AI applications.