‘More for less’ seems to be the trend for data acquisition (DAQ) and logging systems. “In recent years, we have witnessed a migration to more affordable and compact products for data-logging applications,” says Shayan Ushani, business developer, Analog Arts. “The new products offer more functions and longer recording lengths, and are most often supported by powerful application software,” he adds.

The enabling factors

Progress in analogue signal processing and signal detection is a significant reason for the development of more powerful data-logging products, feels Ushani. It is no wonder, then, that mixed-signal DAQ and data-processing capabilities are now integrated into these devices. “In the instrumentation area, the most important aspect is how you convert physical signals into electrical signals,” says Vinod Mathews, founder and CEO, Captronics Systems Pvt Ltd.

Ushani adds, “Data-logging applications usually centre on monitoring the output of a sensor. The sensor can be an ordinary thermocouple or a sophisticated earthquake detector.” Powerful application software, easy-to-adapt equipment and automation seem to be the dominant features of the latest DAQ and data-logging products.

Mathews feels that the change is not in the sensor, its accuracy or calibration, but in the treatment of signals. He says, “Today, you are digitising at source and sending the digital signal; so you are not worried about interference by motors and pumps, or other electromagnetic interference.”

Smartly exploiting the digital signal

“The idea is how to get more data into a smaller frequency range,” continues Mathews. He explains that the allotted spectrum is fixed, and simply cramming more data into it with conventional schemes raises density at the cost of fidelity. Traditional modulation methods include amplitude-shift keying (ASK), phase-shift keying (PSK), combined amplitude- and phase-shift keying (APSK) and frequency-shift keying (FSK), as well as analogue techniques such as amplitude modulation (AM) and frequency modulation (FM). “Today, we are moving towards orthogonal frequency-division multiplexing (OFDM),” he adds.

Mathews feels that the main advantage of OFDM is its ability to encode digital data on multiple carrier frequencies. “This is already being used in 4G, wireless and regular radio frequency applications, during acquisition. Basically, OFDM has multiple carriers spread over a wide band, each with a low symbol rate, to get a higher data rate than a single carrier can carry. In other words, we get a wider band,” he elaborates.
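
To make the multi-carrier idea more concrete, here is a minimal Python sketch of a single OFDM symbol. It assumes QPSK mapping, 8 subcarriers and a 2-sample cyclic prefix; these values and all function names are hypothetical choices for illustration, not parameters of 4G or of any product mentioned here. The inverse DFT places one low-rate symbol on each orthogonal subcarrier, and a forward DFT at the receiver separates them again. A naive DFT is used instead of an FFT only to keep the example dependency-free.

```python
import cmath
import math

# Hypothetical parameters chosen for illustration only.
N_SUBCARRIERS = 8  # number of orthogonal subcarriers
CP_LEN = 2         # cyclic-prefix length in samples

# Gray-coded QPSK: each pair of bits becomes one unit-energy complex symbol.
QPSK = {
    (0, 0): complex(1, 1) / math.sqrt(2),
    (0, 1): complex(-1, 1) / math.sqrt(2),
    (1, 1): complex(-1, -1) / math.sqrt(2),
    (1, 0): complex(1, -1) / math.sqrt(2),
}


def idft(freq):
    """Inverse DFT: one complex value per subcarrier -> time-domain samples."""
    n = len(freq)
    return [sum(freq[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def dft(time):
    """Forward DFT: time-domain samples -> one complex value per subcarrier."""
    n = len(time)
    return [sum(time[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def ofdm_modulate(bits):
    """Turn 2 * N_SUBCARRIERS bits into one OFDM symbol with a cyclic prefix."""
    if len(bits) != 2 * N_SUBCARRIERS:
        raise ValueError("need exactly 2 bits per subcarrier")
    # One low-rate QPSK symbol per subcarrier.
    symbols = [QPSK[(bits[2 * i], bits[2 * i + 1])] for i in range(N_SUBCARRIERS)]
    samples = idft(symbols)
    # Cyclic prefix: copy the tail of the symbol to its front.
    return samples[-CP_LEN:] + samples


def ofdm_demodulate(samples):
    """Strip the cyclic prefix, return to the frequency domain and demap QPSK."""
    symbols = dft(samples[CP_LEN:])
    bits = []
    for s in symbols:
        bits.append(1 if s.imag < 0 else 0)  # first bit follows the sign of Q
        bits.append(1 if s.real < 0 else 0)  # second bit follows the sign of I
    return bits


bits = [1, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 0, 0]
tx = ofdm_modulate(bits)
print(len(tx), ofdm_demodulate(tx) == bits)  # 10 True
```

In a real link the channel adds noise and multipath echoes; the cyclic prefix is there so that echoes shorter than the prefix do not smear one OFDM symbol into the next.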

Make way for better processing…

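How best to convert the available analogue data to a digital signal? Sigma-delta analogue-to-digital converters (ADCs) now deliver up to 24-bit resolution by oversampling: the input is sampled at many times the rate its bandwidth would otherwise require. Digital filters on the ADC or its card then remove the out-of-band quantisation noise and reduce the data to the final sample rate, producing a clean digital signal that is suitable for long-distance transmission.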

This method is slowly making its mark in the industry, owing to its high measurement accuracy. The same principle also works in reverse: a sigma-delta digital-to-analogue converter turns a high-bit-count, low-rate digital signal into a low-bit-count, high-rate one before producing the analogue output. “New signal-processing components offer high resolution at extremely low power consumption,” Ushani says.
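
The oversample-then-filter principle behind sigma-delta ADCs can be illustrated with a toy Python model. This is a first-order modulator with a simple block-averaging (boxcar) decimation filter and an assumed oversampling ratio of 64; commercial 24-bit converters use higher-order modulators and multi-stage digital filters, so treat this as a sketch of the idea rather than a converter design. The modulator outputs only +1 or -1, yet averaging each block of 64 bits recovers the input level.

```python
import math


def sigma_delta_modulate(samples):
    """First-order sigma-delta modulator producing a 1-bit stream of +1.0/-1.0.

    Input samples are assumed to lie in the range [-1, 1].
    """
    integrator = 0.0
    feedback = 0.0
    bits = []
    for x in samples:
        integrator += x - feedback              # integrate input minus previous output
        out = 1.0 if integrator >= 0 else -1.0  # 1-bit quantiser
        bits.append(out)
        feedback = out                          # fed back on the next sample
    return bits


def decimate(bits, osr):
    """Boxcar decimation: average each block of `osr` bits into one output sample."""
    return [sum(bits[i:i + osr]) / osr for i in range(0, len(bits) - osr + 1, osr)]


OSR = 64    # hypothetical oversampling ratio
N_OUT = 50  # number of output samples after decimation

# A slow sine wave, sampled OSR times faster than the output rate.
signal = [0.5 * math.sin(2 * math.pi * i / (OSR * N_OUT)) for i in range(OSR * N_OUT)]
bitstream = sigma_delta_modulate(signal)
recovered = decimate(bitstream, OSR)
print(len(bitstream), len(recovered))  # 3200 50
```

Raising the oversampling ratio shrinks the error of each averaged output sample, which is the trade that lets sigma-delta parts reach high resolution from a very coarse quantiser.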

… Analyse better and store better

The better the processing, the better the analysis. Naturally, companies are vying with one another to introduce advanced processing techniques. “Today, most DAQ companies offer front-end analysis as part of the data tools they sell. National Instruments has DIAdem, software you can use to pull up data, look at graphs, or overlay measurements to see the effect of one on top of the other. These are in an easy-to-use format and do not demand prior knowledge of programming. You can just open up the sample in the software. It pulls in the data and you can throw it around,” comments Mathews.

Besides extracting the most information from logged data, newer algorithms also allow better data compression and storage. In Mathews’ view, building these algorithms into DAQ systems means that more data can be stored in less space.
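
As one simple example of the kind of compression that suits logged sensor data, the Python sketch below uses delta encoding followed by zigzag variable-length integers. It is a common lossless technique for slowly changing readings, shown here as an assumption; the article does not say which algorithms DAQ vendors actually use. The function names and the readings (hypothetical temperatures in hundredths of a degree) are illustrative only.

```python
def zigzag(n):
    """Map signed to unsigned so small magnitudes stay small: 0, -1, 1, -2 -> 0, 1, 2, 3."""
    return n << 1 if n >= 0 else ((-n) << 1) - 1


def unzigzag(z):
    """Inverse of zigzag()."""
    return z >> 1 if z % 2 == 0 else -((z + 1) >> 1)


def encode(samples):
    """Delta-encode integer samples, then write each delta as a zigzag varint."""
    out = bytearray()
    prev = 0
    for s in samples:
        z = zigzag(s - prev)  # store the change from the previous reading
        prev = s
        while True:
            byte = z & 0x7F   # low 7 bits
            z >>= 7
            if z:
                out.append(byte | 0x80)  # high bit set: more bytes follow
            else:
                out.append(byte)
                break
    return bytes(out)


def decode(data):
    """Reverse encode(): read varints, undo zigzag, and accumulate the deltas."""
    samples, prev, z, shift = [], 0, 0, 0
    for byte in data:
        z |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7
            continue
        prev += unzigzag(z)
        samples.append(prev)
        z, shift = 0, 0
    return samples


# Hypothetical temperature readings in hundredths of a degree.
readings = [2315, 2316, 2316, 2318, 2317, 2315, 2314, 2316]
packed = encode(readings)
print(len(packed), decode(packed) == readings)  # 9 True
```

Eight readings that would occupy 32 bytes as 32-bit integers fit into 9 bytes here, because after the first value each change fits in a single byte. Noisy or fast-changing signals compress far less well with this scheme.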

Why worry about space when you have the cloud

Although cloud processing has not caught on much in DAQ, storing data in the cloud is already practised, as it avoids local storage and memory constraints. Mathews opines, “Data is digitised and we can have a buffer of a few GB onboard, which makes it easy, and I can then transfer whatever I want to send to a server. Basically, the cloud is used for the back-end. The person who is using the data need not be sitting on the factory floor. Today, he or she can walk through the factory with a tablet and get all the information that is required, because they are all connected through Wi-Fi.”
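
The onboard buffer Mathews describes can be sketched as a fixed-capacity ring buffer that the logger fills continuously and drains in batches whenever a connection to the server is available. The class and method names are hypothetical, the capacity is tiny for readability, and the actual upload step is left out. Overwriting the oldest samples when the buffer is full is only one design choice; a system that must never lose data would instead block or raise an alarm.

```python
from collections import deque


class SampleBuffer:
    """Fixed-capacity onboard buffer; when full, the newest sample overwrites the oldest."""

    def __init__(self, capacity):
        self._buf = deque(maxlen=capacity)
        self.dropped = 0  # how many old samples were overwritten before upload

    def push(self, sample):
        if len(self._buf) == self._buf.maxlen:
            self.dropped += 1  # deque(maxlen=...) discards the oldest item on append
        self._buf.append(sample)

    def drain(self, max_items):
        """Remove and return up to max_items of the oldest samples, e.g. for one upload batch."""
        batch = []
        while self._buf and len(batch) < max_items:
            batch.append(self._buf.popleft())
        return batch

    def __len__(self):
        return len(self._buf)


buf = SampleBuffer(capacity=5)
for reading in range(8):  # stand-in for readings arriving while the link is down
    buf.push(reading)
print(buf.dropped, buf.drain(2), len(buf))  # 3 [3, 4] 3
```

Counting dropped samples lets the server-side software flag gaps in the record instead of silently presenting incomplete data.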

However, the more connected a system is, the greater the lurking danger. “Data privacy and security are becoming extremely important factors in the new generation of DAQ products,” says Ushani.

“The reason for this new-found importance is data sharing through the Internet. In addition, remote control of these devices in some applications demands a high degree of security,” he adds. Strictly following established security standards and protocols, for example for encrypted and authenticated communication, seems to be the best way to keep the data safe.
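
One standard building block for keeping logged data trustworthy in transit is a message authentication code. The Python sketch below tags each record with an HMAC-SHA256 using the standard `hmac` and `hashlib` modules, so that a server can detect tampering. The key, record fields and function names are hypothetical. HMAC provides integrity and authenticity, not confidentiality; in practice it would sit alongside an encrypted channel such as TLS and proper key management.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a real device would load a provisioned key, never hard-code it.
SHARED_KEY = b"example-key-do-not-use"


def _canonical(record):
    """Serialise a record deterministically so sender and receiver hash identical bytes."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_record(record, key=SHARED_KEY):
    """Attach an HMAC-SHA256 tag to a data record."""
    tag = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return {"payload": record, "hmac": tag}


def verify_record(message, key=SHARED_KEY):
    """Recompute the tag and compare in constant time; False means tampering or a wrong key."""
    expected = hmac.new(key, _canonical(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["hmac"])


msg = sign_record({"channel": 3, "timestamp": 1700000000, "value": 23.15})
tampered = {"payload": dict(msg["payload"], value=99.9), "hmac": msg["hmac"]}
print(verify_record(msg), verify_record(tampered))  # True False
```

Canonical serialisation matters here: if sender and receiver encoded the same record with different key order or spacing, a genuine message would fail verification.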

Interesting developments

Amid all these developments, configurable equipment gives user applications much-needed flexibility. Varun Manwani, director, Sahasra Electronics, whose company is the exclusive distributor of LabJack products, says, “LabJack devices are plug-and-play and do not need a whole lot of time to understand, install and use. These work with open source software and are compatible with Visual Basic, C++ and others. These also come with Wi-Fi, universal serial bus (USB), RS-232 and Ethernet connectivity so that consumers can use any protocol to connect their devices to the applicable environment.”

Captronics is looking at introducing global positioning system (GPS), Wi-Fi and other wireless capabilities in its latest range of equipment. “Captronics now has radio frequency signal recording and playback,” says Mathews. But the task does not end with acquisition alone. It is equally important to make sure that the signal is demodulated and the information it carries is captured correctly.

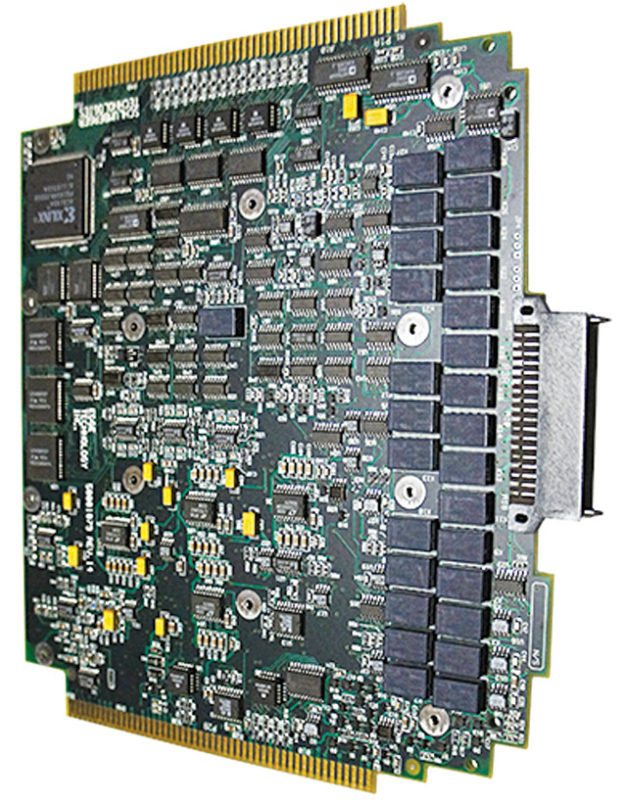

FPGA: From luxury to necessity

Another noteworthy change is the acceptance of field-programmable gate arrays (FPGAs) and software code as integral parts of DAQ systems. Many functions that were previously implemented in fixed hardware are now moving to software and reprogrammable logic. FPGAs bring improved functionality to DAQ systems, and the trend has caught on so much that these components are now taken for granted.

Ushani feels that the appeal of FPGAs lies in their low cost, low power consumption, high density and mixed-signal capability, which in turn make the data-logging system more efficient and affordable.

The footprint is getting smaller, processing faster and the amount of data bigger, according to Mathews.

A new look, new expectations

With all this in mind, there is a shift in the way DAQ systems are being built. Traditional factors such as accuracy, stability, resolution, speed and memory size remain most important, but they no longer distinguish one product from another, feels Ushani.

Manwani feels that, when designing a system, it is most important to use microcontrollers and other key components that are both technologically advanced and still in production.

Ushani explains that a product’s flexibility, ease of use, application support and data security are what make a DAQ system popular.

And do not leave out affordability, which often decides which equipment wins out.


Priya Ravindran was working as a technical journalist at EFY until recently

Shanosh Kumar is working as a media consultant at EFY