Read Part 1

Helping everyday Tom Cruises

John Underkoffler is the now-famous man who invented g-speak Spatial Operating Environment, the hands-free interface that Tom Cruise used in the iconic movie Minority Report, which always comes to mind when one thinks of gesture based interfaces.

Underkoffler then founded Oblong Industries to make real-world products using g-speak. Guess what? He figured that everyday workplace problem solving with Big Data is as challenging as the fantastic problems faced by the actor in the movie.

The result is Mezzanine, an immersive collaboration tool that brings together physical environments, people, resources, much like what you witnessed in Minority Report.

Mezzanine is based on Underkoffler’s belief that a gestural computing system does more than just allow users to point and gesture at the computer to give instructions.

According to the company, a gestural computing environment is a spatial, networked, multi-user, multi-screen, multi-device computing environment. With Mezzanine, all walls turn into screens, pulling in people and workspaces from across the globe. A rack-mountable Mezzanine appliance acts as the hub for the collaborative work session. There is a 3D tracking system that handles in-room gestural-control aspects. This consists of a ceiling-mounted sensor array, a base radio and wireless wand-control devices.

The wand is a spatially-tracked handheld device that provides direct pointing control within Mezzanine. Apart from the many screens and cameras, there is a special whiteboard camera, which, coupled with the wand, enables easy snapshots of a traditional or digital whiteboard surface. Multiple-edge devices in and out of the room can be combined using a Web app. The resulting experience is apparently seamless, easy to configure and use, and quite close to a real-world collaborative experience.

Mezzanine makes use of g-speak, a spatial operating environment in which every graphical and input object has real-world spatial identity and position. This makes it possible for any number of users to move both 2D and 3D data around between any number of screens. g-speak is agnostic of screen size, which means a person working on a laptop in a café can work just as effectively as somebody participating from a conference room. Different components of this environment such as g-speak software development kit and runtime libraries, plasma multi-user networking environment, glove based gestural systems and so on can be licensed by customers to fit into their own solutions.

Garnishing education with gestures

Gestures can make education much more effective, claim researchers across the world. Embodied cognition, or learning with the involvement of the whole body, helps understand and assimilate concepts better. Research shows that it reduces cognitive-processing load on the working memory and provides new relations with objects or environments (a psychological concept known as affordances), which are not possible while a person is using only the brain.

For some years now, education researchers have been proposing the use of mixed reality for teaching children difficult concepts. Mixed reality is the merging of real and virtual environments. For example, to learn about the movement of planets, you could have a virtual environment representing the Sun and orbits, while children move around the orbits like planets. Quite recently, schools have started experimenting with the actual use of embodied cognition and mixed reality, using gesture based interfaces like Kinect, Nintendo Wii and Leap Motion.

When a good tale begins, even the clouds stop and listen. Storytelling is one of the oldest forms of teaching. When an effective storyteller narrates a story, children drop what they are doing and sit around to listen. If just a one-way story narration can be so effective, imagine how much one can learn through interactive storytelling.

A group of researchers from various universities in China recently got together to develop a gesture based puppetry-assisted storytelling system for young children. The system uses depth-motion-sensing technology to implement a gesture based interface, which enables children to manipulate virtual puppets intuitively using hand movements and other gestures.

What is novel about this system is that it enables multiple players to narrate and to interact with other characters and interactive objects in the virtual story environment. A cloud based network connection is used to achieve this multi-player narration in a virtual environment with low latency.

A user study conducted by the team shows that this system has great potential to stimulate the learning abilities of young children, by promoting creativity, collaboration and intimacy among young children.

Underwater helpers

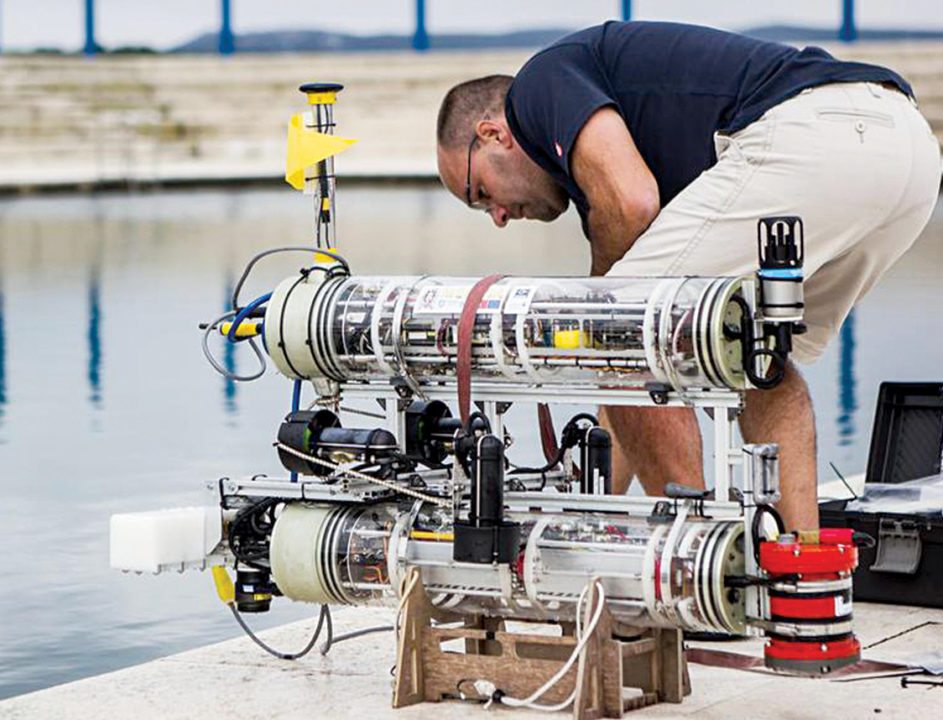

When you are in deep waters, you would love company. Well, divers, swimmers and explorers would like some help, too. If you are exploring a sunken ship, for example, a robotic helper that can move heavy chunks on your way or take pictures as instructed could be very useful. European Union project CADDY, or Cognitive Autonomous Diving buddy, is a heavily-funded attempt to build the perfect underwater companion robot to support and monitor human operations and activities during a dive.

CADDY’s success depends on the reliability of communication between the diver and the robot. However, underwater environment poses constraints that limit the effectiveness of traditional HMIs. This has inspired many research teams to explore gesture based solutions to communicate with CADDY underwater.

Researchers from National Research Council – Institute of Studies on Intelligent Systems for Automation, Genova, Italy, propose to overcome this problem by developing a communication language based on consolidated and standardised diver gestures, commonly employed during professional and recreational dives. This has lead to the definition of a CADDY language called CADDIAN and a communication protocol. They have published a research paper that describes the alphabet, syntax and semantics of the language, and are now working on the practical aspects of gesture recognition. Read all about the proposed solution at www.tinyurl.com/j8td6km

Jacobs Robotics Group of Jacobs University in Bremen, Germany, is another group that is researching within CADDY to develop similar solutions for 3D underwater perception to recognise a diver’s gestures. Their self-contained stereo camera system with its own processing unit to recognise and interpret gestures was recently tested on Artu, a robot developed by Roman CADDY partner CNR.

Artu is a remotely-operated vehicle that used to be steered via a cable from a ship or from the shore. When Jacobs Robotics Group’s gesture-recognition system was mounted on the underwater robot and integrated into its control system, divers could directly control the robot underwater using sign language.

Gestures are vaguer than 0s and 1s

As useful as gestures are, these are not easy to implement in an HMI. In an ideal system that does not require wearables or controlled environments, and also does away with the support of touchscreens and other devices, the possibility of achieving unambiguous inputs is very bleak. Developing a simple system like that could involve immense efforts to not just develop the hardware but also to define an ideal set of gestures suitable to the user group. Because when a dictionary of accepted gestures is not defined, communication could be too vague and only another human would be capable of understanding it.

One example of such efforts comes from a group at IIT Guwahati, Assam, which aimed to standardise seven gestures to represent computational commands like Select, Pause, Resume, Help, Activate Menu, Next and Previous. The gestures were to be used by pregnant women watching a televised maternal health information programme in rural Assam. Participants belonged to the low socio-economic strata and most had poor literacy levels.

A participative study deduced 49 different gestures that participants performed to represent the seven computational functions. According to the team, they selected seven body gestures based on frequency of use, logical suitability to represent functions, decreased possibility that the gestures would be accidently performed (false positives) and ease of detection for the chosen technology (technical limitations).

If such a simple application requires such long research to arrive at the right gestures, you can imagine how much more complex the situation gets when a large and varied user base is expected, special audiences like disabled people or young children are involved, or when difficult environments like mines or oceans are involved.

Indeed, gesture based computing is a difficult dream to achieve, but it could take computing to several people who really need it. In a book totally unrelated to computers, American author Libba Bray wrote an oft-quoted line, “That is how change happens. One gesture. One person. One moment at a time.”

This holds true for intuitive gesture interfaces, too. One gesture could save a man from drowning. One gesture could help a paralysed person live independently. One gesture could help a child learn about planets. One gesture could forge a business deal. One gesture could change the world!

Janani Gopalakrishnan Vikram is a technically-qualified freelance writer, editor and hands-on mom based in Chennai