Ever since tablets gained market share, design firms have been creating different types of tablets to ensure that they do not lose out on this lucrative market. Soon PC manufacturers too joined the race and dumped their own ARM-based tablets into the market. What these firms failed to realise, or perhaps ignored, was that the growing tablet market was also cannibalising their PC sales.

During the first-quarter earnings call in 2013, Apple CFO Peter Oppenheimer said, “The International Data Corporation (IDC) estimates that the global personal computer market contracted by 6 per cent during the last December quarter.” Intel’s shares went down from $29.18 in May 2012 to $19.51 in December 2012. At the time of writing, these were at $20.96.

Intel was not going to sit and watch the show. It started pushing out really robust chips that not only perform well but also consume less power than the ones before. However, does x86 have what it takes to counter ARM on the power consumption front? That would be a game fought on the competitor’s turf.

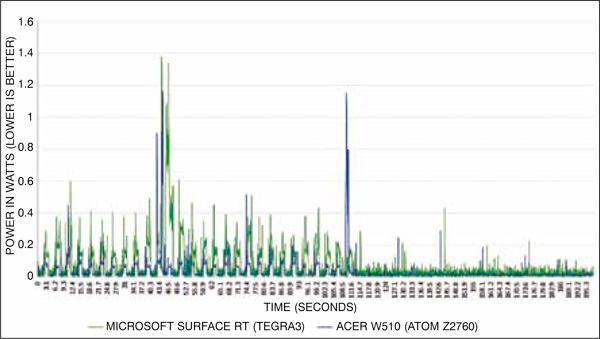

Fig. 1: Power consumption at the CPU and GPU level while the device is idle for a long time

(Courtesy: anandtech.com)

Bring on the competition

Recent advances in ARM architecture with the new 64-bit design, and the growth of applications powered by it, have prompted more vendors to join in and have their own slice. Microsoft has already made its operating system compatible with both x86 and ARM. On the other hand, Advanced Micro Devices (AMD) has tied up with the UK’s ARM and is likely to announce its first ARM-based chip in 2014.

The reason why the 64-bit design is eagerly awaited is that it can help create processors that can address more random-access memory (RAM) and thus perform memory-intensive tasks, like those in data centres, faster. The weakest link in a RISC-based ARM processor is that it requires far more memory than a CISC-based processor; the 64-bit design would help to tackle this issue.
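The link between pointer width and addressable memory is simple arithmetic. The following is a minimal Python sketch, assuming a flat, byte-addressed memory space with no extensions. The helper name `addressable_bytes` is made up for illustration, and real chips often implement fewer physical address bits than the architecture allows.

```python
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(pointer_bits: int) -> int:
    """Bytes reachable with a flat pointer of the given width (illustrative helper)."""
    return 2 ** pointer_bits


# Upper bounds under the flat-address-space assumption stated above.
limit_32_gib = addressable_bytes(32) // GIB
limit_64_gib = addressable_bytes(64) // GIB

print(f"32-bit pointers: {limit_32_gib} GiB")
print(f"64-bit pointers: {limit_64_gib} GiB")
```

The 32-bit ceiling of 4 GiB is the limit that matters for data-centre workloads; the 64-bit figure is a theoretical bound rather than something any current device ships with.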

So how does this rivalry between x86 and ARM architecture based chip vendors help you as a design engineer or a firm? A quote by David Sarnoff, founder of Radio Corporation of America, gives the answer: “Competition brings out the best in products, and the worst in people.”

X86 can get really low on power consumption

Now that Samsung, Apple and Qualcomm have really powerful ARM-based chips that need very little power to do their work, Intel has started unleashing its own low-power processors. It is not that Intel did not have them before, but the latest ones draw little enough power to take on ARM chips on their home ground.

Fig. 1 shows power consumption at the CPU and GPU level while the device is lying idle for a long time. The processors compared are the x86-based Intel Atom Z2760 (based on 32nm lithography) and the last-gen ARM-based Tegra 3 (based on 40nm lithography).

“A look at the CPU chart gives us some more granularity, with Tegra 3 ramping up to higher peak power consumption during all of the periods of activity,” explains Anand Lal Shimpi of AnandTech.

Designers were previously constrained to use ARM-based solutions if they wanted to design a device that consumed really low power while being powerful enough to handle WiFi, imaging and the other simple tasks that we see on a smartphone or a tablet. This limited their software options, as x86-based applications would not run on these systems.

However, now that Intel has risen to the occasion and begun producing chips that offer comparable performance with even better battery life, you are free to base your next product on either an x86-based processor or an ARM-based SoC.

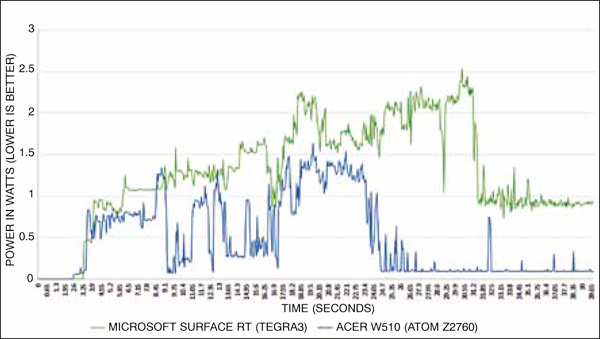

Fig. 2: CPU power consumption during cold boot (Courtesy: anandtech.com)

In Fig. 2, the difference in average CPU power consumption during a cold boot is large enough to show how far x86 has come. Tegra 3 draws around 1.29W on average, compared with 0.48W for Atom.

“Atom also finishes the boot process quicker, which helps it get to sleep quicker and also contributes to improved power consumption,” adds Shimpi in his analysis.
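The cold-boot figures above can be turned into a quick comparison. This is only a rough sketch: the two average power values come from the article, but the boot durations are hypothetical placeholders, since the article does not state them. It illustrates why finishing the boot sooner compounds the advantage, because energy drawn is average power multiplied by time.

```python
# Average CPU power during cold boot, as quoted in the article for Fig. 2.
TEGRA3_AVG_W = 1.29
ATOM_AVG_W = 0.48

# ASSUMPTION: hypothetical boot durations, chosen only for illustration.
TEGRA3_BOOT_S = 25.0
ATOM_BOOT_S = 20.0


def energy_joules(avg_power_w: float, duration_s: float) -> float:
    """Energy in joules = average power in watts x duration in seconds."""
    return avg_power_w * duration_s


power_ratio = TEGRA3_AVG_W / ATOM_AVG_W
tegra3_energy = energy_joules(TEGRA3_AVG_W, TEGRA3_BOOT_S)
atom_energy = energy_joules(ATOM_AVG_W, ATOM_BOOT_S)

print(f"Power ratio (Tegra 3 / Atom): {power_ratio:.2f}")
print(f"Energy ratio with assumed boot times: {tegra3_energy / atom_energy:.2f}")
```

On the quoted averages alone, Tegra 3 draws roughly 2.7 times the CPU power of Atom during boot; any difference in boot time widens or narrows the energy gap accordingly.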

Obviously, Tegra 3 is not the only ARM SoC out there. NVIDIA has already unveiled its latest Tegra 4 processor at CES 2013, which is based on a 28nm process node compared to the 40nm process node of its predecessor. The process node itself should lead to a significant reduction in power consumption on the new SoC. Moreover, it uses an ARM Cortex A15-based 4-PLUS-1 quad-core CPU this time. It also features higher clock speeds, a 72-core GPU and 32-bit dual-channel LPDDR3 memory.

The chart in Fig. 3 shows how ARM-based A6 in iPhone 5 is a seriously competitive chip when it comes to power consumption—it is the lowest power consumer in the group. It is not sluggish in performance either, as you will see later on in this article.

Fig. 3: Device power consumption vs time—Kraken benchmark 2 (Courtesy: anandtech.com)

Who performs better?

The answer is not straightforward because we are talking about two different things: complex instruction set computing (CISC) and reduced instruction set computing (RISC). Basically, CISC aims to complete a function in a single instruction, while a RISC processor uses several simple, uniformly formatted instructions to do the same. At a glance, it might appear that RISC is less efficient. However, keep in mind that the simplicity of a RISC instruction usually allows it to be executed in just one clock cycle.
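The contrast can be illustrated with a toy model in Python. This is a sketch under stated assumptions, not a model of any real x86 or ARM instruction set: the mnemonics (`MULT`, `LOAD`, `MUL`, `STORE`), the register names and both functions are invented for illustration. Both versions multiply two values held in memory and write the result back.

```python
# Toy memory: two operands to be multiplied in place.
memory = {"a": 6, "b": 7}


def run_cisc(mem):
    """CISC style: one complex memory-to-memory instruction, MULT a, b."""
    mem = dict(mem)  # work on a copy so the caller's memory is untouched
    mem["a"] = mem["a"] * mem["b"]
    return mem, 1  # (resulting memory, instruction count)


def run_risc(mem):
    """RISC style: the same work as four simple load/store-style instructions."""
    mem = dict(mem)
    regs = {}
    program = [
        ("LOAD", "r1", "a"),    # r1 <- mem[a]
        ("LOAD", "r2", "b"),    # r2 <- mem[b]
        ("MUL", "r1", "r2"),    # r1 <- r1 * r2
        ("STORE", "a", "r1"),   # mem[a] <- r1
    ]
    for op, dst, src in program:
        if op == "LOAD":
            regs[dst] = mem[src]
        elif op == "MUL":
            regs[dst] = regs[dst] * regs[src]
        elif op == "STORE":
            mem[dst] = regs[src]
    return mem, len(program)


cisc_mem, cisc_count = run_cisc(memory)
risc_mem, risc_count = run_risc(memory)
print(cisc_mem["a"], cisc_count)
print(risc_mem["a"], risc_count)
```

Both paths produce the same result; the RISC version simply needs more instructions, and therefore more program memory, to get there. In exchange, each of its steps is simple enough to be executed quickly.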

A drawback of RISC, with all these extra instructions, is that its programs take up far more RAM than those on CISC-based processors, which could be why CISC-based processors were all the rage until now. With the availability of inexpensive and fast RAM, this disadvantage has largely disappeared over the last half decade. Moreover, as mentioned earlier, the availability of 64-bit ARM solutions will enable the use of even more RAM, closing the gap further.

Even with the availability of competitive x86-based processors, most product vendors are still using ARM-based solutions to power their flagship devices. In performance, though, Windows 8 tablets based on Intel processors are more powerful than ARM-based Microsoft Surface tablets running Windows RT, for example. However, ARM still wins by powering the most popular devices.

Vishal Dhupar, managing director-Asia South, NVIDIA, explains: “Although x86-based SoCs have been recently introduced in the mobile market with the promise of higher performance and lower power, most popular and widely used mobile devices such as iPad, HTC One X+, Microsoft Surface, ASUS Transformer Prime and Samsung Galaxy S3 are using ARM-based SoCs. This indicates that ARM CPU architecture has both the performance and power efficiency to meet the demands of mobile applications.”

System-level optimisation

Since RISC architecture is simpler, developers are able to squeeze out all the performance of a RISC-based processor. Moreover, it uses far fewer transistors, which allows it to remain cool under stressful loads, thus providing consistent performance. This is one of the reasons why Apple’s software combined with their own hardware is able to perform very smoothly. Developers know exactly what they are getting into when they develop for this ecosystem and are thus able to use every iota of power in the device for their application.

Senior engineers agree that system-level optimisation wins—no matter how much money you spend on improving the implementation. Software engineers learn this early in their careers, when they understand that algorithms with a lower order of complexity often perform better than algorithms with a higher order of complexity—especially on more complex or larger problems.

“A poorly implemented algorithm that has a complexity of O(n log n) will outperform the best implemented algorithm with a complexity of O(n²) on large problems. Unfortunately, this simple logic is hard to understand for some of the largest hardware companies. There may still be time for a company as strong as Intel to strike back,” explains an experienced industry veteran from this field.
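The complexity argument in the quote can be checked with a small cost model. The sketch below is illustrative only: the constant factors (a 50x penalty for the "poorly implemented" O(n log n) algorithm and 1x for the "best implemented" O(n²) one) are assumptions chosen for the example, not measurements, and the function names are hypothetical.

```python
import math


def cost_nlogn(n: int, constant: float = 50.0) -> float:
    """Modelled cost of a sloppy O(n log n) algorithm (assumed 50x overhead)."""
    return constant * n * math.log2(n)


def cost_quadratic(n: int, constant: float = 1.0) -> float:
    """Modelled cost of a well-tuned O(n^2) algorithm (assumed 1x overhead)."""
    return constant * n * n


def crossover(limit: int = 10 ** 6):
    """Smallest n >= 2 at which the O(n log n) model becomes cheaper, if any."""
    for n in range(2, limit):
        if cost_nlogn(n) < cost_quadratic(n):
            return n
    return None


n_cross = crossover()
print(f"O(n log n) model wins from n = {n_cross} onwards")
print(f"Speed-up at n = 1,000,000: {cost_quadratic(10**6) / cost_nlogn(10**6):.0f}x")
```

Even with a 50-fold handicap, the lower-complexity model pulls ahead after a few hundred elements and the gap keeps growing with problem size, which is the point the quote makes about system-level choices outweighing implementation polish.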

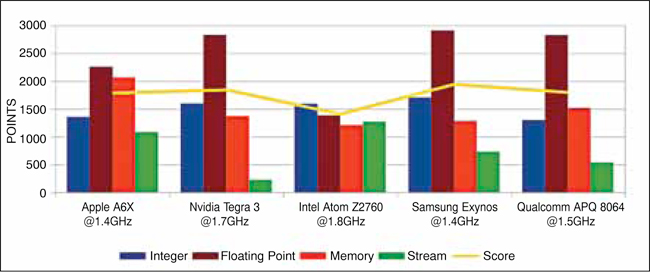

As observed in Fig. 4, when it comes to performance, the Intel Atom seems to be equally balanced among different scores. It is the floating-point performance of Samsung and Qualcomm chips that boosts them to the top of the chart. What is questionable, however, is whether it is the floating-point or memory and stream scores that could point to better real-life performance when these SoCs are used in consumer devices.

“The x86 core is better in some areas like mostly single-threaded tasks. For example, JavaScript execution and website rendering would stand out. However, in applications like audio and video playback, ARM chips have dedicated hardware codecs, so the difference is negligible,” explains T. Anand, MD, Knewron.

Fig. 4: Geekbench score (Courtesy: primatelabs.com/geekbench)

Go for an alternative architecture

We continue to see rapid growth of computational horsepower in mobile silicon enabling more and more advanced use cases.

“Smartphones and tablets are fast becoming our most frequently used computing devices for numerous activities (Web surfing, e-mail, social media, photography, casual gaming, etc), and we will see continued advancements in areas such as user-interface design and responsiveness, complex multitasking, realistic looking games, higher-quality screens and video processing, computational photography (such as HDR photos/videos using Tegra 4), improved context-sensitive speech processing, more intelligent location-aware computing, rich-media pattern recognition to quickly find/isolate video and audio information, and even complex transaction processing,” adds Dhupar.

However, there seems to be another addition to silicon options. ARM-based SoCs are themselves modifiable and can be designed for a specific embedded application, and this is how firms like Samsung and Apple use their ARM licence to design and develop specific SoCs with a few of the firm’s own twists thrown in. Of course, this is not possible with Intel, which is where the trouble started for them. What if there were another technology that went one step further?

“Processor cores offered by ARM are not necessarily easy to modify themselves, even though the chip on which they are used, i.e., system-on-chip, can be architected for a specific embedded application and the overall SoC better optimised than a standard chip from a company like Intel,” adds another industry veteran.

He explains that some of the latest emerging technologies in processor and system design allow one or more processors and the entire SoC, or even an entire system of systems, to be optimised together for a specific application. Even more interesting is the fact that these technologies enable a whole new class of SoC architectures (hardware equivalent of software algorithms), called direct-connect architectures, to be automatically synthesised from high-level architectural models.

One such example can be seen in recent design platforms such as Sankhya Teraptor, which offers a platform for agile system development with models enabling Indian companies like Dreamchip Electronics to create SoCs optimised for specific applications such as tablets. These SoCs have already been announced, and use a proprietary multi-core 32-bit RISC and DSP processor architecture as well as a unique optimised direct-connect architecture.

So who wins?

Intel has already announced its x86-based Bay Trail Atom processor, which is slated for launch in Q3 2013. Compared to Clover Trail, which is built on a 32nm process, Bay Trail is to be built using 22nm lithography. So while Intel might not have the biggest market share right now, its future depends on the performance of its next-generation chips.

ARM, on the other hand, currently holds a high ground, with most devices selling with ARM on the board.

“Intel offers processors, while ARM offers processor cores. That is the fundamental difference in their technologies that enables ARM to offer a product that can be integrated into a full SoC optimised for use in a specific application, a simple and important competitive advantage that Intel has tried but failed to overcome through expensive process-level improvements,” explains a senior engineer from the field of chip design.

With even previously x86-focused firms like AMD planning to bring out ARM chips soon, ARM is surely on top right now.

There is also talk of high-end devices featuring x86, while the rest would rely on ARM. However, the right choice depends on what exactly we mean by high-end.

“If we want high-end devices to perform telemetry and data transmission over the Internet for critical applications, x86 would still be equally qualified (or rather better). On the other hand, if high-end implies only video and audio capabilities, ARM is the straight choice. We have often talked about smartphones being used as mainstream computing devices in the future; if that starts happening in the near future, ARM could gain advantage over x86,” adds Anand.

Or, we could see a new disruptive technology hitting the market if the underdog AMD finally starts producing both x86- and ARM-based processors, in addition to the cool GPUs that the ATI acquisition brought in. Or perhaps MIPS would edge in on the market since it is also a RISC-based architecture like ARM. Future prospects surely look interesting.

So with all this in mind, what chip will your next product be based on?