As augmented reality (AR) continues to develop and achieve new technological advancements, you can expect to see more of it in the communication industry. AR has a strong use-case for communication and is bringing about immersive ways to connect people.

Augmented reality (AR) has brought about a paradigm shift in how people communicate and express themselves. Among other things, AR is proving to be a compelling communication tool that bridges the virtual and real worlds.

AR is the process of layering virtual elements over the real-world environment. In many cases, it is already making communication between individuals much more immersive.

At companies like Scanta, machine learning is being used to build 3D avatars that understand and react to voice commands in real time.

Artificial intelligence (AI), which has machine learning as its backbone, can identify the emotions of a user from spoken words and deliver a 3D avatar that mimics the same emotions, along with matching body movements.
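As a rough illustration of this idea, the sketch below maps a phrase (assumed to be already transcribed from speech to text) to an emotion and then to an avatar animation. The keyword lexicon, emotion labels and animation names are all hypothetical, and keyword matching merely stands in for a trained machine learning model; this is not Scanta's implementation.

```python
# Toy sketch: choose an avatar animation from the emotion found in a phrase.
# The lexicon and animation names are hypothetical placeholders.
import re

EMOTION_KEYWORDS = {
    "happy": {"great", "love", "awesome", "happy", "thanks"},
    "sad": {"sad", "sorry", "miss", "unhappy", "lost"},
    "angry": {"angry", "hate", "annoyed", "furious"},
}

ANIMATION_FOR_EMOTION = {
    "happy": "dance",
    "sad": "slump_shoulders",
    "angry": "stomp_foot",
    "neutral": "idle",
}


def detect_emotion(text: str) -> str:
    """Return the emotion with the most keyword hits, or 'neutral' if none."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = {
        emotion: sum(word in keywords for word in words)
        for emotion, keywords in EMOTION_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def choose_animation(text: str) -> str:
    """Map a transcribed phrase to the animation the avatar should play."""
    return ANIMATION_FOR_EMOTION[detect_emotion(text)]
```

Calling `choose_animation("I love this, it is awesome")` selects the `dance` animation, while a phrase with no emotional keywords falls back to `idle`.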

What 3D animation entails

3D animation is the process of creating moving pictures in a three-dimensional digital environment. By carefully manipulating objects (3D models) within 3D software, animators can export sequences of pictures that give the illusion of movement (animation).

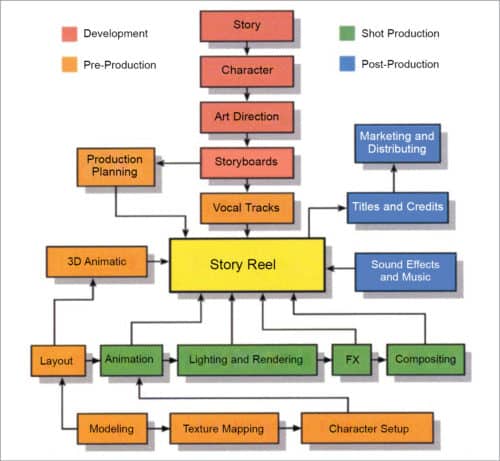

The 3D animation process is a system where tasks are completed at predetermined stages before moving to the next step. It is like an assembly line for animated film production.

The process is broken down into three major phases: pre-production, production and post-production. These phases incorporate tasks that need to be completed before moving on to the next one. Each step builds on previous tasks that were completed.

Phases of 3D animation

Steps involved in creating a 3D animation are explained below.

Pre-production

The first step in creating a 3D animation is conceptualising an idea and translating it into visual form. Pre-production has two dimensions. The first is sequence, which involves deciding what will happen first, next and last.

The second is interaction, which involves deciding how the voiceover will interact with images, how visual transitions and effects will help tie images together and how voiceovers will interact with the musical soundtrack.

3D modelling

3D modelling is a technique in computer graphics for producing a 3D digital representation of any object or surface. A 3D object can be generated automatically or created manually by deforming the mesh or manipulating vertices.

A typical way of creating a 3D model is to take a simple object, called a primitive, and extend or grow it into a shape that can be refined and detailed. A primitive can be anything from a single point (a vertex), a line joining two points (an edge) or a curve (a spline) to a flat surface bounded by edges (a face or polygon).
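To make these terms concrete, here is a minimal sketch of a cube primitive stored as vertices and four-sided faces, with the edges derived from the faces. The function names and the plain list-based mesh layout are assumptions made for illustration; real modelling packages use richer data structures.

```python
# Sketch: a cube primitive as vertices (points) and faces (quads).
from itertools import product


def make_cube(size=1.0):
    """Return (vertices, faces) for a cube centred on the origin."""
    h = size / 2.0
    # Eight corners; vertex index = 4*ix + 2*iy + iz with each i in {0, 1}.
    vertices = [(x, y, z) for x, y, z in product((-h, h), repeat=3)]
    # Each face lists its four corner indices in loop order.
    faces = [
        (0, 1, 3, 2),  # x = -h
        (4, 6, 7, 5),  # x = +h
        (0, 4, 5, 1),  # y = -h
        (2, 3, 7, 6),  # y = +h
        (0, 2, 6, 4),  # z = -h
        (1, 5, 7, 3),  # z = +h
    ]
    return vertices, faces


def edges_of(faces):
    """Collect the unique edges (vertex index pairs) around every face."""
    edges = set()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):
            edges.add((min(a, b), max(a, b)))
    return sorted(edges)


def move_vertices(vertices, indices, offset):
    """Return a copy of the vertex list with the chosen vertices shifted."""
    moved = list(vertices)
    for i in indices:
        x, y, z = moved[i]
        moved[i] = (x + offset[0], y + offset[1], z + offset[2])
    return moved


vertices, faces = make_cube()
edges = edges_of(faces)
# "Grow" the primitive by pulling its top face (y = +h) up by one unit.
taller = move_vertices(vertices, faces[3], (0.0, 1.0, 0.0))
```

The cube has 8 vertices, 12 edges and 6 faces; moving the four vertices of one face is the simplest form of the "extend or grow" step described above.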

Texturing

The next step is shading and texturing. In this phase, textures, materials and colours are added to the 3D model. Every component of the model receives a different shader material to give it a specific look.

Realistic materials

If the object is made of plastic, it is given a reflective, glossy shader. If it is made of glass, the material is partially transparent and refracts light like real-world glass.
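The bending of light in a glass material follows Snell's law. The sketch below computes the refracted direction of a ray crossing a surface; the function name and argument layout are illustrative assumptions, and the refractive index of 1.5 used in the example is only a typical approximate value for glass.

```python
# Sketch: refraction direction from Snell's law (n1 * sin(i) = n2 * sin(t)).
import math


def refract(incident, normal, n1, n2):
    """Return the refracted unit direction, or None on total internal reflection.

    incident: unit vector travelling towards the surface.
    normal:   unit surface normal pointing back towards the incoming ray.
    n1, n2:   refractive indices of the medium being left and entered.
    """
    eta = n1 / n2
    cos_i = -sum(i * n for i, n in zip(incident, normal))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # no refracted ray exists; the light is fully reflected
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(
        eta * i + (eta * cos_i - cos_t) * n for i, n in zip(incident, normal)
    )


# A ray hitting glass from air at 45 degrees bends towards the normal.
s = math.sqrt(0.5)
bent = refract((s, -s, 0.0), (0.0, 1.0, 0.0), 1.0, 1.5)
```

A ray that hits the surface head-on passes straight through, while a ray leaving glass at a steep enough angle produces no refracted ray at all, which is why the function can return `None`.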

Rigging

Rigging is what makes deforming a character possible. It includes creating a skeleton to deform the character and creating animation controls to enable easy posing of the character. Rigging usually involves adding bones, calculating and implementing skin weights, and adding muscles to create natural movements.
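Skin weights decide how strongly each bone pulls on each vertex of the model. One widely used scheme is linear blend skinning, in which a vertex is moved by every bone separately and the results are averaged using the weights. The sketch below shows this in 2D for a two-bone arm; the bone set-up and function names are hypothetical, and the source does not say which skinning method any particular tool uses.

```python
# Sketch: linear blend skinning in 2D for a two-bone arm.
import math


def rotate_about(point, pivot, angle_deg):
    """Rotate a 2D point around a pivot by the given angle in degrees."""
    a = math.radians(angle_deg)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (
        pivot[0] + dx * math.cos(a) - dy * math.sin(a),
        pivot[1] + dx * math.sin(a) + dy * math.cos(a),
    )


def skin_vertex(vertex, bone_transforms, weights):
    """Blend the positions produced by each bone using the skin weights."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("skin weights must sum to 1")
    x = y = 0.0
    for transform, weight in zip(bone_transforms, weights):
        px, py = transform(vertex)
        x += weight * px
        y += weight * py
    return (x, y)


def upper_arm(point):
    """The upper arm bone stays still in this pose."""
    return point


def forearm(point):
    """The forearm bone bends 90 degrees at the elbow, located at (1, 0)."""
    return rotate_about(point, (1.0, 0.0), 90.0)


bones = [upper_arm, forearm]
wrist = skin_vertex((2.0, 0.0), bones, [0.0, 1.0])       # follows the forearm
near_elbow = skin_vertex((1.5, 0.0), bones, [0.5, 0.5])  # shared by both bones
```

The wrist vertex follows the forearm completely, while the vertex near the elbow lands halfway between where each bone would place it, which is what produces a smooth bend instead of a sharp crease.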

Animation

Animation is the process of taking a 3D object and making it move. It is up to the animator to make a 3D object feel like it is alive and breathing.

Rigged for motion

A 3D character is controlled using a virtual skeleton, or rig, which allows the animator to control the model’s arms, legs, facial expressions and postures.

Pose-to-pose

Animation is typically completed pose by pose: the animator first sets the key poses and then fills in the frames between them.
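The in-between frames can be computed by interpolating between neighbouring key poses. Below is a minimal sketch that uses straight linear interpolation of joint angles; the joint names and keyframe layout are hypothetical, and animation software generally offers smoother interpolation curves than this.

```python
# Sketch: filling in frames between key poses by linear interpolation.
from bisect import bisect_right


def interpolate_pose(keyframes, frame):
    """Return the pose at `frame`.

    keyframes: list of (frame_number, {joint_name: angle_in_degrees}),
               sorted by frame number, with the same joints in every pose.
    """
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return dict(keyframes[0][1])
    if frame >= frames[-1]:
        return dict(keyframes[-1][1])
    i = bisect_right(frames, frame)  # index of the first key pose after `frame`
    (f0, pose0), (f1, pose1) = keyframes[i - 1], keyframes[i]
    t = (frame - f0) / (f1 - f0)  # 0.0 at the earlier pose, 1.0 at the later
    return {joint: pose0[joint] + t * (pose1[joint] - pose0[joint]) for joint in pose0}


key_poses = [
    (0, {"elbow": 0.0, "wrist": 10.0}),
    (10, {"elbow": 90.0, "wrist": 10.0}),
    (20, {"elbow": 45.0, "wrist": 30.0}),
]
halfway = interpolate_pose(key_poses, 5)
```

Here frame 5 sits halfway between the first two key poses, so the elbow is at 45 degrees while the wrist, which does not change between those poses, stays at 10 degrees.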

Baking

Before an animation can be used in Unity, it must first be imported into the project. Unity can import native Maya (.mb or .ma) files and generic FBX files, which can be exported from most animation packages.

FBX files include the models, bones and animations made in 3D software. Texture files (JPEG or PNG) are also included when a project is exported from Maya to Unity.

Unity software

Unity is a cross-platform game engine. Steps involved in creating a 3D animation using Unity are given below.

Importing FBX files and textures in Unity

Assets are imported into the Unity Editor and all their textures are extracted. The import brings in two things: materials and textures. Materials describe the kind of surface an object has, such as plastic or glass.

Making prefab of 3D model

Unity allows users to save a configured object as a reusable asset, called a prefab. A prefab stores the object together with all its properties. It acts as a template that designers can use to create new instances of the same object in the scene.
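The template-and-instances idea can be illustrated outside Unity. The following is a plain Python analogy, not Unity's API: the `AvatarTemplate` class, its fields and the `instantiate` helper are all hypothetical, and the sketch does not model the link Unity keeps between a prefab and its instances.

```python
# Analogy only: a template object copied into independent instances.
import copy
from dataclasses import dataclass, field


@dataclass
class AvatarTemplate:
    """Hypothetical stand-in for an object stored as a reusable template."""
    model: str
    material: str
    scale: float = 1.0
    position: tuple = (0.0, 0.0, 0.0)
    animations: list = field(default_factory=list)


def instantiate(template, **overrides):
    """Copy the template and apply per-instance property overrides."""
    instance = copy.deepcopy(template)
    for name, value in overrides.items():
        if not hasattr(instance, name):
            raise AttributeError(f"unknown property: {name}")
        setattr(instance, name, value)
    return instance


template = AvatarTemplate(model="robot.fbx", material="plastic", animations=["idle"])
left = instantiate(template, position=(-1.0, 0.0, 0.0))
right = instantiate(template, position=(1.0, 0.0, 0.0), scale=2.0)
```

Both instances start with every property of the template and differ only in the values that were overridden, which is the convenience a prefab offers when the same object appears many times in a scene.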

Customising prefab according to requirement

This involves:

- Adding events according to frame rate

- Adding audio to animation

- Adding shading and lights to animation
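For the first item in the list above, an event attached to a frame fires at a time that depends on the frame rate: time in seconds equals the frame number divided by frames per second. The sketch below shows that conversion and a simple check for which events fall inside an update interval. The event names and helper functions are hypothetical and written in plain Python for illustration; they are not Unity's API.

```python
# Sketch: turning frame-based events into times using the frame rate.
def frame_to_seconds(frame, fps):
    """Convert a frame number to a time in seconds."""
    return frame / fps


def events_due(events, previous_time, current_time, fps):
    """Return the names of events whose time lies in (previous_time, current_time]."""
    return [
        name
        for frame, name in events
        if previous_time < frame_to_seconds(frame, fps) <= current_time
    ]


# Hypothetical events for a clip authored at 24 frames per second.
clip_events = [(12, "footstep_sound"), (24, "wave_hello")]
fired = events_due(clip_events, 0.4, 0.6, fps=24)
```

At 24 frames per second, frame 12 falls at 0.5 seconds, so an update that advances playback from 0.4 to 0.6 seconds triggers only the footstep event.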

Adding assets to folder and naming it

An asset is a representation of any item a designer can use in a game or project. It may come from a file created outside Unity, such as a 3D model, audio file, image or any other file type that Unity supports.

Many communication devices and platforms now take advantage of this technology and integrate it into services for users to enjoy. Apple’s first attempt at it was Animojis.

The iPhone X features face detection that allows users to unlock the phone with their face instead of their fingerprint. This feature is also used to apply Animojis to users’ faces by detecting facial structures.

A few years ago, Snapchat introduced face filters, which became a popular way for people to chat with their friends. The filters also recognised facial features and followed their movements. The app later developed World Lens, which works in a similar fashion, but instead of adding augmented objects to the face, it adds them to the surrounding environment.

Snapchat expanded its lenses and partnered with Bitmoji to provide users with customisable AR characters. Previously, Bitmoji was a popular 2D personalised character.

Now, the company allows users to place unique characters in their surroundings that can dance, wave and perform a variety of movements that their 2D counterparts could not.

Scanta has created Pikamoji, an app with over a hundred unique AR avatars that users can place in their surroundings and interact with through a mobile camera. These interactions can be saved to the library and shared on preferred social media platforms.

The company is now taking the next step and using machine learning technology to create intelligent 3D characters. These 3D characters will be able to analyse voice commands and break these down through sentiment analysis.

AR can provide an immersive and fun way for consumers to learn or be directed to a product or brand. It has started to drive brand awareness and sales by grabbing the attention of consumers in ways not seen before.

Currently, employees rely on voice-based communication, including video chats. The problem is that it lacks the authenticity and layer of emotion that people get from a live face-to-face interaction. AR has the ability to bring that authenticity to workplace communication.

Instead of video-conferencing, users can use AR glasses to augment and see each other in real time. They can walk around and interact with each other instead of being confined to a computer or phone screen.

As AR continues to develop and achieve new technological advancements, you can expect to see more of it in the communication industry. AR has a strong use-case for communication and is bringing about immersive ways to connect people.

Chaitanya Hiremath is founder of Scanta