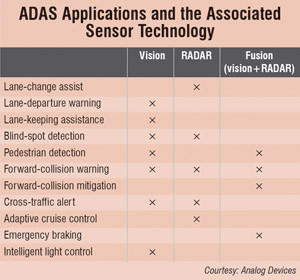

At Analog Devices, ADAS developments for vision and radar systems are a focus. Commenting briefly on the challenges faced while coming up with such a system, Somshubhro (Som) Pal Choudhury, managing director, Analog Devices India (ADI) says, “An interesting challenge that we faced recently came from a dual-core DSP chip, developed for an ADAS—a high-performance pipeline video processor, which receives feeds or multiple frames from the camera, processes and extracts information and intelligently takes decisions.”

Why people should adopt ADAS

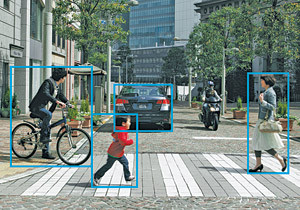

According to a study, 65 million new vehicles are manufactured every year and 1.2 million people are killed in vehicle accidents. Vision-based safety systems aim to reduce accidents by warning drivers when they are travelling too close to the vehicle ahead, when a pedestrian or cyclist is in the vehicle’s path, when the vehicle unintentionally leaves its lane and when drowsiness impairs driving.


Embedded vision technology, when incorporated within an automobile, has the ability to boost efficiency, enhance safety and simplify usability. It upgrades what machines ‘know’ about the physical world and how they interact with it.

Typically, manufacturers first offer innovative features as options on high-end cars. These features eventually trickle down to less-expensive vehicles as costs decline, awareness increases and demand grows.

When asked whether this trend would also be seen in low-priced vehicles, Choudhury says, “Currently, this is for the high-end automobiles but as we have seen, technology moves very quickly from high-end cars to the mid-segment, and then to the low-cost vehicles. Going back a couple of years, the air-bag system in Indian cars was almost non-existent but now even the low-end cars have air-bags.”

The momentum behind embedded vision applications is growing at an astounding rate and industry collaboration is needed to enable the technology’s smooth adoption in new markets. “With Web 3.0 and the car becoming part of the Internet, there are possibilities beyond one’s imagination to combine images around the car, and connect from car to car (C2C) and car to infrastructure (C2I),” says Shenoy.

Internet experts think the extensive use of Web 3.0 will be akin to having a personal assistant who knows practically everything about you and can access all the information on the Internet to answer any question you may pose.

In the near future

Research on autonomous braking is still under way. Many accidents are caused by late braking or braking with insufficient force. For instance, when driving at sunset, visibility is impaired, making it difficult to respond fast enough when the driver ahead brakes unexpectedly. Most people are not used to dealing with such critical situations and do not apply enough braking force to avoid a crash. Several manufacturers have developed technologies that help the driver avoid such accidents or, at least, reduce their severity. The systems they have developed are generally grouped under the name autonomous emergency braking, where each word describes one aspect of their behaviour:

Autonomous. The system acts independently of the driver to avoid or mitigate the accident.

Emergency. The system will intervene only in a critical situation.

Braking. The system tries to avoid the accident by applying the brakes.
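To make the three properties concrete, here is a minimal Python sketch of how such a decision could be structured. It is an illustration only, not any manufacturer’s method: it assumes the decision is based on time to collision (the gap divided by the closing speed), and the `Track` record, the `decide` function and both threshold values are hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"
    BRAKE = "brake"


@dataclass
class Track:
    """One object ahead, as a vision or radar pipeline might report it (hypothetical)."""
    gap_m: float              # distance to the object ahead, in metres
    closing_speed_mps: float  # positive when the gap is shrinking


# Assumed thresholds for illustration; a real system would tune these
# per vehicle, speed and road condition.
WARN_TTC_S = 2.5
BRAKE_TTC_S = 1.0


def time_to_collision(track: Track) -> float:
    """Seconds until contact if nothing changes; infinite if the gap is not closing."""
    if track.closing_speed_mps <= 0:
        return float("inf")
    return track.gap_m / track.closing_speed_mps


def decide(track: Track) -> Action:
    ttc = time_to_collision(track)
    if ttc <= BRAKE_TTC_S:
        # Autonomous + Braking: act without waiting for the driver.
        return Action.BRAKE
    if ttc <= WARN_TTC_S:
        # Not yet critical: alert the driver first.
        return Action.WARN
    # Emergency only: stay out of the way in normal driving.
    return Action.NONE
```

For example, a car 20 m behind another and closing at 10 m/s has two seconds to contact, so this sketch would warn the driver; at 8 m and the same closing speed it would brake.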

Eventually, such technologies could lead to cars with self-driving capabilities; Google is already testing such prototypes. But Google says it still needs to do ‘millions of miles’ of testing before it is ready to offer a self-driving car system for common use. If self-driving vehicles become a reality, the implications would be profoundly disruptive for almost every stakeholder in the automotive ecosystem. According to a Google statement, self-driving cars have the potential to significantly increase driving safety.

“Whilst self-driving or driverless cars are still at an experimental stage, and mass adoption is years into the future, smart vision in cars is here today with ADAS. Collision avoidance, traffic sign recognition, pedestrian detection and other driver assistance applications will significantly make our roads safer not only by timely alerting the driver but also taking several preventive actions,” believes Choudhury.

However, many in the automotive industry believe that the goal of embedded vision in vehicles is not necessarily to eliminate the driving experience but just to make it safer.

The author is a senior technical correspondent at EFY, Bengaluru