Resiliency is the ability of a server, network, storage system, or entire data centre to recover quickly and continue operating after an equipment failure, power outage, or other disruption such as a cyberattack, data theft, disaster, or human error. It is a planned part of storage solutions and is closely tied to disaster planning and recovery.

Every business-critical application requires reliable storage. Today, it is challenging for IT organisations to safeguard their data. There are a variety of solutions with specialised features, capabilities, strengths, and limitations. Choosing the right vendor and solution can be complicated, but it is an important step for an organisation. It calls for in-depth research that goes beyond the product itself and its technical specifications.

Data resilience

“If it were measured as a country, then cybercrime, which is predicted to inflict damages totaling $6 trillion globally in 2021, would be the world’s third-largest economy after the US and China,” says a report by US-based firm Cybersecurity Ventures. This is an important statistic to keep in mind when working on security.

A few considerations may prompt a rethink of the approach to storage and point to innovative solutions that can help protect the business, says Ron Riffe, Program Director, IBM Global Storage. All the quotes below are his.

According to the World Economic Forum, from 2008 to 2016, over 20 million people a year were forced from their homes by extreme weather such as floods, storms, wildfires, and hotter temperatures. These disasters affected businesses as well. In addition, cyberattacks are now among the top ten long-term risks, ranking high for both likelihood and impact.

Bad actors are using AI to engineer more potent cyberattacks, and one in four organisations is forecast to experience a data breach in the next two years. A large majority of the World Economic Forum survey respondents expected the risk of cyberattacks to only increase, leading to theft of money and data and breakdowns in information infrastructure.

Interpol (the International Criminal Police Organization) agrees. It says that in the last year, the target of cybercrime has shifted significantly from individuals and small businesses to major corporations, governments, and critical infrastructure.

The Financial Times reported that double-extortion ransomware attacks, in which hackers steal and encrypt sensitive on-site information and threaten to release it, increased by 200 percent in 2020. While the median victim size by revenue was $40 million, more than a hundred victim organisations had annual revenues in excess of one billion US dollars. Cybercrime, Riffe says, has become big business.

5 important data resilience questions

With cyber attackers on the loose, data often becomes infected, but the malware can take an average of 197 days (about six and a half months) to discover.

To recover from a situation like this, backup copies must be retained for long enough that a clean, uninfected copy is always available. Certainly, the first order of business is to prevent bad actors from gaining access to your data in the first place. It’s equally important to be able to recover when the inevitable happens.
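The retention reasoning above comes down to simple date arithmetic. The following is a minimal sketch, assuming the 197-day average quoted earlier; the function names are hypothetical, and because 197 days is only an average, a real retention policy would need extra margin.

```python
from datetime import date, timedelta

# 197 days is the average discovery time quoted in the article. It is an
# average, so individual incidents can take longer to detect.
AVERAGE_DISCOVERY_DAYS = 197


def assumed_infection_date(discovered_on: date,
                           discovery_days: int = AVERAGE_DISCOVERY_DAYS) -> date:
    """Date the infection is assumed to have started.

    A clean copy must have been taken before this date.
    """
    return discovered_on - timedelta(days=discovery_days)


def retention_covers_discovery(retention_days: int,
                               discovery_days: int = AVERAGE_DISCOVERY_DAYS) -> bool:
    """True if backups are kept longer than the assumed discovery time."""
    return retention_days > discovery_days


if __name__ == "__main__":
    print(assumed_infection_date(date(2021, 12, 31)))  # 2021-06-17
    print(retention_covers_discovery(90))              # False
    print(retention_covers_discovery(365))             # True
```

With a 90-day retention window, every surviving copy would postdate the assumed infection, so no clean copy would remain.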

Commonly, security solutions are designed to stop breaches before they happen. But when the attackers do manage to get through, you want to be prepared to quickly respond. And that gives rise to a number of important questions about data resilience.

1. Is primary storage resilient?

The first point to consider is where the primary data is stored.

In a traditional recovery scenario, the most recent backup copies are often the ideal ones to restore. That makes them a natural place to start in response to a cyberattack. But remember, cyberattacks present a new wrinkle: the attack that has just been discovered may have begun, on average, more than six months earlier. Examining progressively older backup copies to find the most recent clean, uninfected one can therefore take extensive work.

Ideally, an organisation maintains snapshot copies on primary storage for near-instant recovery, with quick access to the snapshot inventory so that a copy can be mounted instantly for inspection. However, organisations rarely keep months of snapshots on their primary storage. “There are also copies maintained on the secondary storage and you want the same kind of inventory and instant mount capability there, making the job of finding the latest clean copy easier.”

2. Is the data copy isolated?

Proper isolation of a backup copy is a major factor in being able to retrieve and protect the data. Attackers often gain access to primary data by infecting applications, so it is better to keep a copy of the data isolated, with an air gap between the application data and its copy. An attacker who has compromised the application should not be able to cross this gap, which keeps the copies uninfected.

3. Is the data copy immutable?

If a sophisticated attacker does manage to gain access to the uninfected copies, a second level of protection is to store those copies in an unchangeable form. Write Once, Read Many (WORM) technologies can help here.
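The write-once, read-many behaviour can be illustrated with a toy example. This is only a sketch of the semantics, assuming a hypothetical in-memory `WriteOnceStore` class; real WORM protection is enforced by the storage system or media, not by application code.

```python
from typing import Dict


class WriteOnceStore:
    """Toy in-memory store: each key can be written once, then only read."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        # Refuse to overwrite an existing object: this is the "write once" rule.
        if key in self._objects:
            raise PermissionError(f"{key!r} is immutable and cannot be overwritten")
        self._objects[key] = bytes(data)

    def get(self, key: str) -> bytes:
        # Reads are always allowed: this is the "read many" rule.
        return self._objects[key]

    def delete(self, key: str) -> None:
        # Deletion is refused as well, so a copy cannot be destroyed.
        raise PermissionError(f"{key!r} is immutable and cannot be deleted")


if __name__ == "__main__":
    store = WriteOnceStore()
    store.put("backup-2021-06-01", b"clean data")
    try:
        store.put("backup-2021-06-01", b"encrypted by attacker")
    except PermissionError as err:
        print("rejected:", err)
    print(store.get("backup-2021-06-01"))
```

The second `put` is rejected, so the original contents remain readable even if an attacker reaches the store.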

4. Is the copy discoverable?

“If you learn that you are a victim of an attack, it is important to react quickly.” To do this, a copy of the data must already exist from before the attacker infected it.

Attackers often penetrate systems and stay. So, when users realise they have been attacked, a lot of detective work goes into finding a clean, uninfected copy. Traditionally, this has meant repeatedly mounting and inspecting progressively older backup copies.

“New systems make the copies searchable, or having e-discovery tools can aid you, so you mount the right recovery copy the first time. Choose tools that make the discovery process easy.”
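For contrast, the traditional search described above, inspecting progressively older copies until a clean one turns up, can be sketched as follows. The `BackupCopy` type and the `is_clean` callback are hypothetical stand-ins for whatever inventory and scanning tools an organisation actually uses.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class BackupCopy:
    taken_on: date
    label: str


def latest_clean_copy(copies: Iterable[BackupCopy],
                      is_clean: Callable[[BackupCopy], bool]) -> Optional[BackupCopy]:
    """Inspect copies from newest to oldest and return the first clean one.

    is_clean stands in for mounting a copy and scanning it, which is the
    expensive step; returns None if every copy is infected.
    """
    for copy in sorted(copies, key=lambda c: c.taken_on, reverse=True):
        if is_clean(copy):
            return copy
    return None


if __name__ == "__main__":
    copies = [
        BackupCopy(date(2021, 3, 1), "march"),
        BackupCopy(date(2021, 6, 1), "june"),
        BackupCopy(date(2021, 9, 1), "september"),
    ]
    infected_since = date(2021, 5, 15)  # assumed for illustration only
    found = latest_clean_copy(copies, lambda c: c.taken_on < infected_since)
    print(found.label if found else "no clean copy")  # march
```

Each call to `is_clean` represents one mount-and-inspect cycle, which is why searchable copies that point to the right one the first time save so much effort.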

5. Can data be restored fast?

Because discovering an attack takes a long time, the initial local snapshots will likely have been migrated to secondary storage by then, such as immutable object storage in the cloud or WORM tape. Hence, the most isolated copy might not be the fastest to restore.

“There is always a balance, and every organisation makes their own trade-off choices with a variety of storage media options that can be configured as isolated and immutable. You will have to consider the best option for your organisation.”

Characteristics of resilient primary storage

“Ensuring your data stays available to your applications is the primary function of storage.” So, what are the characteristics of resilient primary storage that can help?

Remember, in a hybrid cloud reality, organisations are working with multiple locations, potentially multiple hardware vendors on premises and multiple public cloud providers. A resilient storage solution provides flexibility and helps in leveraging the infrastructure vendors and locations to create operational resiliency. This helps in achieving data resilience in the data centre and across virtualised, containerised, and hybrid cloud environments.

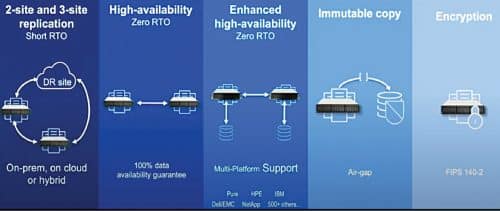

Resilient primary storage should ideally support traditional two-site and three-site replication configurations, including replication to the public cloud, with a choice of synchronous or asynchronous data communication. “This gives you confidence that your data can survive a localised disaster with very little or no data loss.” The maximum amount of data loss an organisation is prepared to tolerate is known as the recovery point objective, or RPO.

Resilient primary storage is also about gaining access to data quickly, in some cases immediately. The target time for restoring access after a disruption is known as the recovery time objective, or RTO.
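The two objectives can be compared against a replication setup with a little arithmetic. The sketch below is a simplification under stated assumptions: synchronous replication is treated as losing no data, and asynchronous replication as losing at most one replication interval. The function names and the example figures are hypothetical.

```python
from datetime import timedelta
from typing import Optional


def worst_case_data_loss(replication_interval: Optional[timedelta]) -> timedelta:
    """Simplified worst-case data loss for a replication setup.

    None means synchronous replication (assumed here to lose nothing);
    otherwise the asynchronous interval is taken as the worst case.
    """
    if replication_interval is None:
        return timedelta(0)
    return replication_interval


def meets_objectives(data_loss: timedelta, recovery_time: timedelta,
                     rpo: timedelta, rto: timedelta) -> bool:
    """True if expected data loss fits the RPO and recovery time fits the RTO."""
    return data_loss <= rpo and recovery_time <= rto


if __name__ == "__main__":
    rpo = timedelta(minutes=5)
    rto = timedelta(hours=1)
    failover_time = timedelta(minutes=30)  # assumed figure for illustration

    async_loss = worst_case_data_loss(timedelta(minutes=15))
    sync_loss = worst_case_data_loss(None)

    print(meets_objectives(async_loss, failover_time, rpo, rto))  # False
    print(meets_objectives(sync_loss, failover_time, rpo, rto))   # True
```

In this example the 15-minute asynchronous interval exceeds the 5-minute RPO, while the synchronous option satisfies both objectives.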

Resilient storage has options for immediate failover access to data at remote locations. “Not only does your data survive a localised disaster, but your applications have immediate access to alternate copies as if nothing ever happened.”

It is important to note that RPO and RTO options should be available regardless of the choice of primary storage hardware vendors or public cloud providers, which means multi-platform support. Resilient primary storage is also concerned with protecting data from bad actors. Encryption guards against prying eyes and outright data theft.

To guard against the primary copy of the data becoming infected, resilient storage should also be able to create copies that are logically air-gapped from the primary data and to make those copies unchangeable, or immutable.

Ensuring data resilience

Many organisations have a mix of different on-premises storage vendors or have acquired storage capacity over time, which means that they have different generations of storage systems. Throw in some cloud storage for a hybrid environment and you may find it quite difficult to deliver a consistent approach to data resilience. “The first step is modernising the storage infrastructure you already have.”

To conclude, resiliency techniques can vary with the importance of an organisation’s workloads and the resources available to run the solution. It is wise for organisations with mission-critical workloads to use more resilient techniques at more levels, as the cost of losing data or suffering a prolonged outage would outweigh the expense of the added protection.

The article is based on a talk by Ron Riffe, Program Director, IBM Global Storage at SODACON 2021. It has been prepared by Abbinaya Kuzhanthaivel, Assistant Editor at EFY.