All the smartness that this world needs cannot be handled by the cloud alone. It needs a team of helpers—at the edge.

Edge computing is not a new concept. It has been around since the 1990s, when a group of researchers from the Massachusetts Institute of Technology (MIT) invented a content delivery network (CDN) that could serve web and video content from edge servers deployed close to users, to save bandwidth. This prototype, developed by the MIT spin-off company Akamai, is considered one of the earliest examples of edge computing.

Since then, there has been a lot of discussion around edge computing and the Internet of Things (IoT). However, the technology has really come of age now, thanks mainly to the rising interest in artificial intelligence (AI) and, of course, a much-needed fillip from fast 5G networks and powerful mobile processors.

Edge computing is basically a distributed computing framework that moves processing and storage to the edge of the network, that is, as close as possible to the users and sources of data, such as IoT devices or local edge servers. So, why would someone want to do that? Because speed matters! Today, everyone wants instant answers and solutions, and the cloud alone cannot handle this crowd. This is true not just for futuristic or high-stakes applications like self-driving cars and military operations but also for common ones like shopping and marketing campaigns.

Delays are costly

From industries to automobiles and traffic signals, every device is racing to become smart. Relying solely on the cloud to accommodate this exponential growth in connected devices and users would result in increased latency, an intolerable outcome. And it can be more serious than a customer getting irritated by a delay in loading a local restaurant’s menu. A millisecond’s delay in decision-making in an autonomous car could cause an accident, and a loss of connectivity in a surveillance drone could mean not spotting an impending missile attack! In today’s scenario, reducing latency is of utmost importance. On-device processing, in tandem with the cloud and on-premises data centres, can handle this situation very well. It also brings other perks, such as personalised customer experiences and improved security.
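To make the latency argument concrete, here is a minimal Python sketch of how a system might decide where to run a task when the device, a nearby edge server, and the cloud are all available. The names `Target`, `TARGETS`, and `choose_target`, and every latency figure, are illustrative assumptions rather than measurements or any vendor's API. The idea is simply to prefer the most capable tier that can still answer within the deadline.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    network_rtt_ms: float  # round trip to reach the target (0 for on-device)
    compute_ms: float      # time the target needs to process one request

    @property
    def total_ms(self) -> float:
        # End-to-end latency seen by the application.
        return self.network_rtt_ms + self.compute_ms


# Hypothetical figures, listed from most capable to least capable tier.
TARGETS = [
    Target("cloud", network_rtt_ms=80.0, compute_ms=5.0),
    Target("edge_server", network_rtt_ms=10.0, compute_ms=15.0),
    Target("device", network_rtt_ms=0.0, compute_ms=8.0),
]


def choose_target(targets, deadline_ms):
    """Pick the first (most capable) target that meets the deadline.

    If no target can meet it, fall back to the fastest one available.
    """
    for target in targets:
        if target.total_ms <= deadline_ms:
            return target
    return min(targets, key=lambda t: t.total_ms)


if __name__ == "__main__":
    for deadline in (100, 30, 10):
        chosen = choose_target(TARGETS, deadline)
        print(f"deadline {deadline} ms: run on {chosen.name} ({chosen.total_ms} ms)")
```

With these assumed numbers, a relaxed 100 ms deadline is served from the cloud, a 30 ms deadline moves to the edge server, and a 10 ms deadline can only be met on the device itself. Real deployments would measure these latencies continuously instead of hard-coding them.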

No wonder there is such a surge in interest in edge computing, and tech giants including Google, Microsoft, and Qualcomm have been upgrading their offerings to empower developers to scale and enable AI at the edge. Proper edge computing requires a seamless link between cloud, core, and edge. With thousands of hardware nodes to be managed, it is believed that DevOps will play an important role in this transition.

“There have been significant advances in edge computing. Many of the leading tech giants have brought in hardware architectural innovations, which provide increased computation, storage, and overall processing at the edge. Some of the hardware development boards are increasingly accessible from a cost perspective. Also, better connectivity solutions enabled by 5G bring faster communications between edge devices and enterprise systems,” says Ajay Chaudhary, Associate Vice President – Engineering, GlobalLogic. “5G provides an average data transfer speed that is ten times faster than 4G, making it a key enabler of edge-based systems. This enhanced connectivity enables edge devices to communicate more quickly with enterprise systems, resulting in faster decision-making based on the data collected at the edge.”

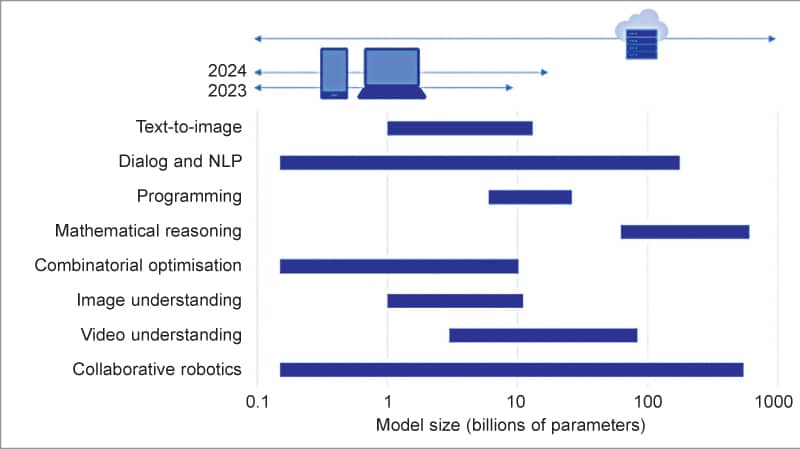

(Source: Qualcomm Whitepaper “The Future of AI is Hybrid”)

Many a change, here and there
