Like the ‘horse of a different colour’ in The Wizard of Oz, field-programmable gate array (FPGA) and customisable system-on-chip (cSoC) integrated circuits are the chameleon workhorses of the electronics world. Available in a variety of sizes and arrangements, they can be programmed to perform almost any logic function, from small to large. The tightly coupled general-purpose microprocessor and associated sub-system of the cSoC can be programmed with firmware to perform tasks best suited to sequential execution. The ever-expanding number of surrounding dedicated logic blocks, memories and interfaces, such as math-blocks, SRAM, non-volatile memory and high-speed serialiser-deserialiser (SERDES) interfaces, completes this almost infinitely versatile device. But, with all this flexibility, can a high standard of security be maintained?

Before answering that question, let us first ask: which security objectives are we most likely to be interested in? Traditionally, FPGA and cSoC security is broken down into two distinct concepts: design security and data security.

Design security

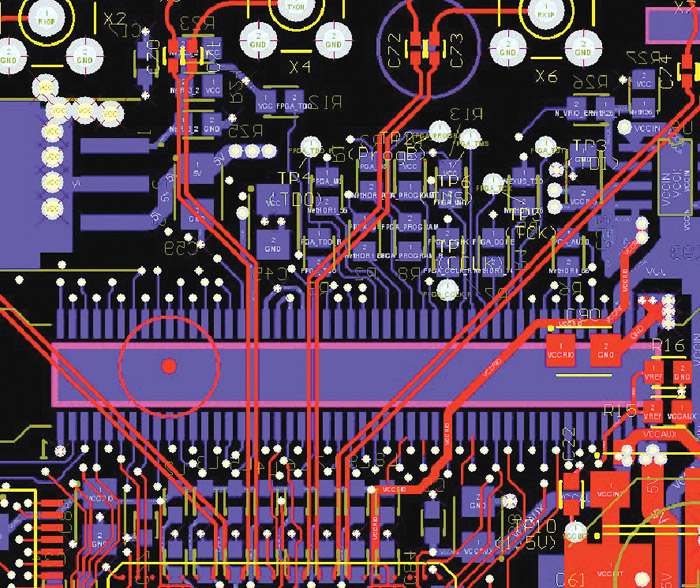

In design security, the objective is to protect the interests of the IP owner—usually the original equipment manufacturer (OEM)—whose engineers designed the soft logic and firmware used to configure the device at the board- or system-level manufacturing step. Because of the investment in creating the FPGA or cSoC soft IP, the OEM wishes to keep its design confidential so that it can’t be copied or cloned.

Possibly worse is the case where the logic or firmware design is reverse-engineered, and the OEM’s technology is stolen and adapted for competing products. When this happens, the value of the company may be slashed to a fraction of its pre-theft value, as happened to one US company when its main customer stopped placing orders and started making the systems itself, allegedly by stealing the design. In this example, the value of the US company’s stock dropped 84 per cent.

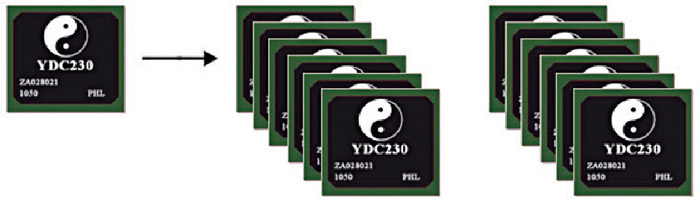

Another design security concern is overbuilding. Overbuilding is where an unscrupulous contract manufacturer or his employees build more systems than the number authorised or received by the OEM, and then sell the excess systems for their own profit. Aside from depressing the market and profits for the legitimate manufacturer, the OEM may also find customers of the often substandard illicit units coming to them for warranty repairs. The nightmare scenario is being sued for defects in a system that you didn’t build but has your name written on it!

Unscrupulous contract manufacturers may find it easy to procure additional quantities of most components in the system, including the FPGAs. However, because of the controls put in place by certain FPGA manufacturers to prevent overbuilding, and the expense of legitimately creating a comparable FPGA design from scratch, FPGAs can actually help reduce the occurrence of overbuilding.

An ever-increasing design security concern is the use of counterfeit or untrusted components. Due to the high cost of developing FPGAs, and the controls in place at the FPGA foundries, the chance of getting a functioning FPGA manufactured with an unauthorised mask set is fairly small. Also, since FPGA and cSoC ICs are unique in that a very large part of the IP is programmed into the device by the OEM, the risk of untrusted IP is largely moved to the configuration step rather than the foundry step.

But a very real risk is the entry into the supply chain of used parts, or of authentic but less expensive parts that have been re-marked as more expensive devices, i.e., devices designed or screened to faster speed grades, higher temperature ranges or higher reliability levels. These parts may even work part of the time. Either way, the customer is not receiving the value that was paid for and is potentially risking system failures.

Data security

Data security moves the focus from the OEM’s IP to the data processed by the device. This is typically data owned by the OEM’s customer, or the customer’s customer, rather than IP owned by the OEM. Here we enter the realm of information assurance, i.e., protecting the data stored, processed or communicated by the device during run-time. Often, cryptographic methods are used to protect the confidentiality of this data, or to ensure its integrity and authenticity.
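To make the integrity and authenticity goals concrete, here is a minimal sketch in Python using a keyed hash (HMAC-SHA-256) from the standard library. It is an illustration only, not how any particular FPGA or cSoC implements data security: the function names `protect` and `verify`, the sample message and the key handling are all assumptions made for this example. It also does not address confidentiality, which would additionally require encryption.

```python
import hashlib
import hmac
import secrets

# Length in bytes of an HMAC-SHA-256 tag.
TAG_LEN = hashlib.sha256().digest_size


def protect(key: bytes, message: bytes) -> bytes:
    """Append an authentication tag to the message (hypothetical helper)."""
    tag = hmac.new(key, message, hashlib.sha256).digest()
    return message + tag


def verify(key: bytes, packet: bytes) -> bytes:
    """Return the message if its tag is valid, otherwise raise ValueError."""
    if len(packet) < TAG_LEN:
        raise ValueError("packet too short")
    message, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
    expected = hmac.new(key, message, hashlib.sha256).digest()
    # Constant-time comparison, so timing does not leak how many bytes matched.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    return message


# Assumption: sender and receiver already share this secret key.
key = secrets.token_bytes(32)
packet = protect(key, b"meter reading: 1234 kWh")
```

A receiver holding the same key calls `verify(key, packet)` and gets the original message back. If even one bit of the packet has been altered in transit, or the sender did not know the key, the call raises `ValueError` instead.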

Design security concepts: Protection against overbuilding (Courtesy: www.actel.com)

An FPGA or cSoC without solid design security features is not a good candidate for data security applications. In many projects data security is only a minor concern, but in some applications, such as financial payment and military communication systems, data security is paramount. Data security requirements are becoming more prevalent with the explosion of the ‘Internet of Things,’ i.e., ‘things’ other than conventional personal computers and servers attached to the Internet. These include industrial control sensors and systems, medical devices, smart electric meters and appliances, and a myriad of wireless devices.

Tampering

Tampering can occur at the device level or the system level. This is where an attacker tries to gain some advantage by finding out information to which he shouldn’t have access. Attacks range from eavesdropping on signals that were intended to be confidential to more active attacks that change signals or the state of a system by various means. Often the goal of attackers is to reverse-engineer the system so that they can find other security weaknesses, or to accelerate their own development of similar systems.

Network attacks rightly get a lot of attention since poorly designed protocols or network protections such as firewalls may allow an adversary to steal information from the comfort of an Internet café half-way around the world. We read almost every day about thousands of poorly protected credit card numbers or account login credentials being stolen from Web servers. However, threats may be closer to home, such as a crooked contract manufacturer or a company insider stealing the company’s IP assets.