While electronics R&D labs undeniably innovate, path-breaking findings from the pure sciences (new materials, chemical or physical properties, methods learnt from biological systems, and so on) often open up game-changing alternatives with the potential to disrupt the smooth flow of technological progress.

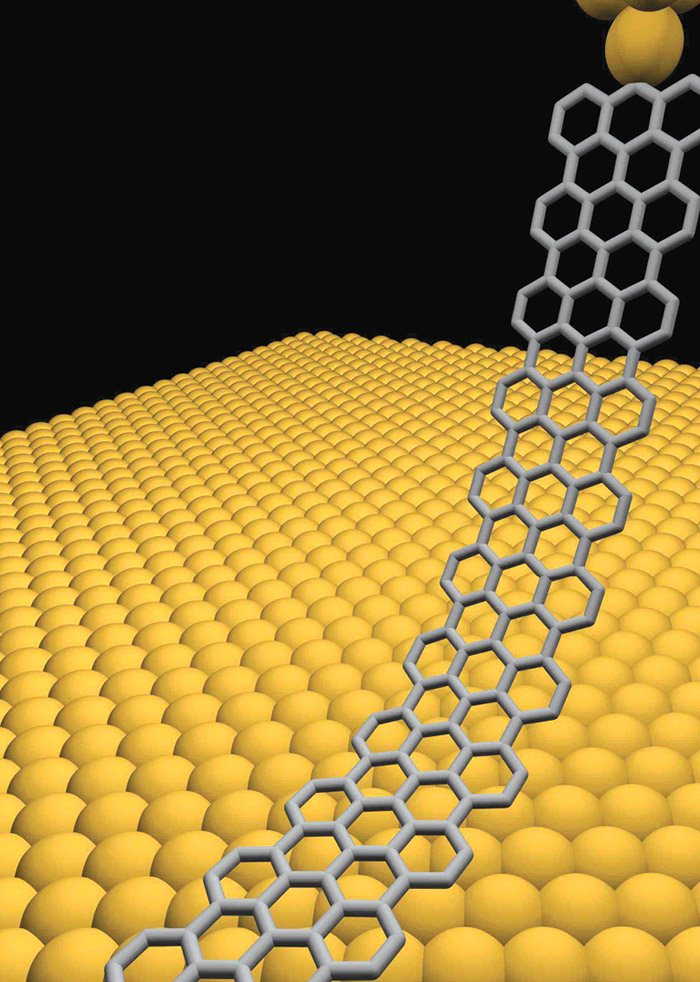

Graphene nanoribbons (Image courtesy: naukoved.ru/news/view/280/)

Dr Suresh Borkar, a telecommunications expert and faculty member at the Illinois Institute of Technology, says, “The primary focus in electronics and communications is miniaturisation, performance, universal access and power savings. The applications encompass almost all of human endeavour including communications, transportation, health management and entertainment. For continued progress, cross-disciplinary advances are needed in many areas including materials sciences, physical sciences, chemical sciences, biological sciences and mathematics.”

In the wake of the New Year, we decided to celebrate the contributions of science to the fields of electronics, computing and telecommunications, by discussing how pure science research could transform technology in the future. Here are some recent examples from a wide, unending pool.

[stextbox id=”info” caption=”1. Intelligent self-learning systems”]

Research areas: Neuroscience, mathematics

Applications: Neural networks; self-learning systems for use in image recognition, speech systems, natural language modelling

Intelligence is typically defined and benchmarked against man’s sixth sense. Soft computing based on fuzzy logic and neural networks, uncertainty analysis and computational theory are all the result of extensive scientific research.

Neuroscientists across the world are trying to better understand how the human brain works, and to quantify and model it, so that the knowledge can be used to build smarter computing systems. Developments in this space will have major ramifications for image recognition, speech systems and natural language modelling.

There are several examples of futuristic research in this space, Google’s self-taught neural network being a recent one.

In June 2012, Google announced the results of one of its more abstract research projects. Several years earlier, a group of Google scientists had started working on a simulation of the human brain. They connected over 16,000 processors to make one of the world’s largest neural networks, with over a billion connections. They then fed it thumbnail images extracted from over ten million randomly selected YouTube videos. The software-based neural network appeared to closely mirror theories developed by biologists, which suggest that individual neurons inside the brain are trained to detect significant objects. No instructions were given to the system to identify images as cats, dogs or humans. The data and the system were simply left to interact.

Over time, the scientists were surprised to find a neuron in their system that responded strongly to images of cats. The system seemed to have learned to identify cats all by itself, without any instruction or labels.
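To give a feel for how a unit can become selective for a recurring pattern without ever being told what it is, here is a toy sketch in Python. It is not Google's method: it swaps in a far simpler technique (winner-take-all competitive learning) on made-up four-number "images". The prototype patterns `CAT` and `OTHER`, the starting weights, the learning rate and the function names are all assumptions chosen for illustration.

```python
import random

# Hypothetical 4-pixel "images": two recurring patterns plus noise.
CAT = [1.0, 1.0, 0.0, 0.0]
OTHER = [0.0, 0.0, 1.0, 1.0]


def make_samples(n, seed=0):
    """Return n noisy, unlabelled samples drawn from the two patterns."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        proto = rng.choice([CAT, OTHER])
        samples.append([v + rng.uniform(-0.1, 0.1) for v in proto])
    return samples


def closest_unit(x, units):
    """Index of the unit whose weights are nearest to input x."""
    dists = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in units]
    return dists.index(min(dists))


def train_competitive(samples, units, rate=0.1, epochs=5):
    """Winner-take-all learning: only the winning unit moves towards
    each input. No labels are used anywhere."""
    for _ in range(epochs):
        for x in samples:
            w = units[closest_unit(x, units)]
            for i in range(len(w)):
                w[i] += rate * (x[i] - w[i])
    return units


# Two units with nearly uniform starting weights (an assumed initialisation).
units = [[0.6, 0.5, 0.5, 0.4], [0.4, 0.5, 0.5, 0.6]]
train_competitive(make_samples(200), units)

cat_unit = closest_unit(CAT, units)
other_unit = closest_unit(OTHER, units)
print("unit that responds to the cat-like pattern:", cat_unit)
print("unit that responds to the other pattern:", other_unit)
```

After training, each unit has drifted towards one of the two patterns, so one of them ends up acting as a crude "cat detector" even though the word "cat" never reached the learning code. The real system was vastly larger and layered, but the absence of labels is the point the two share.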

According to a comment by a Google scientist, the Google brain assembled a dreamlike digital image of a cat by employing a hierarchy of memory locations to successively cull out general features after being exposed to millions of images. This is similar to what neuroscientists informally call ‘grandmother neurons’: specialised cells in the brain that fire when repeatedly exposed to, or trained to recognise, the face of an individual.

While a synthetic network with a billion connections is tiny compared with the hundred trillion connections in the human brain (about 100,000 times as many), this science-inspired development is indeed notable.

[/stextbox]