Thousands of cell phone models have hit the streets, each with its own specialties and features. Today, mobile phones are the most widely used, must-have electronic gadgets. In recent years, we have also seen considerable progress in how users interact with them, evolving all the way from QWERTY keyboards to touch screens. What could be next?

The evolution of user interfaces

We’ve come a long way, both in the evolution of mobile phones themselves and in the methods used to operate and control them, which have always posed something of a challenge.

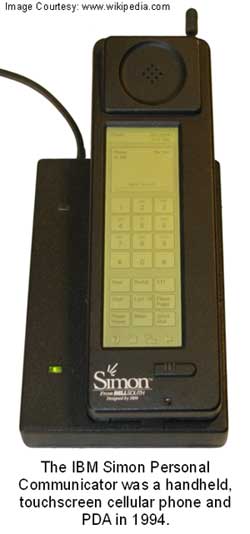

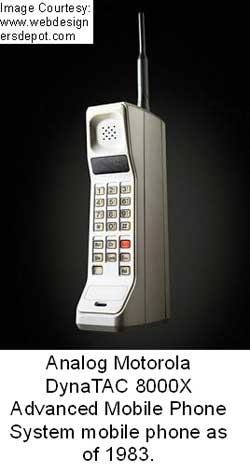

It started with bulky phones featuring long antennas, physical switches and buttons, such as the analogue Motorola DynaTAC 8000X. The next round of innovation brought touch-sensitive input, as on the IBM Simon, and today the touch screen is the most dominant and familiar interface for cellular phone users around the world. Meanwhile, work was also under way on voice interaction with these devices; it too has come a long way, but it is still not robust enough to cover all of our needs.

Jumping from touch-screens to Gesture Control Technology

Over the past two years, smartphones have exploded in use and capability. From entertainment to work to family duties, we now rely on these devices in almost every aspect of our lives. There is, however, still room to improve our experience with them. Touch was the last great user-interface advance, and hand gesture recognition for mobile phones will be the next.

Gesture recognition is seen today as the natural evolution of intuitive user interfaces. Since the arrival of touch screens, gestures have ushered in an entirely new way of interacting with our devices, letting users perform specific tasks more efficiently and dynamically.

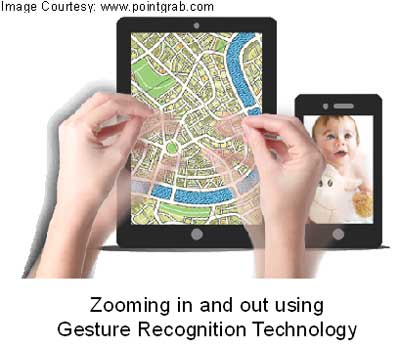

Some of the most widely used gestures are swipe to unlock, pinch to zoom and pull to refresh. Although these are fairly basic, gestures have evolved greatly. Third-party developers have begun to truly utilise the potential of multi-touch displays within their apps, and a number of companies are now pushing forward with touch-free gesture controls. Such gestures offer an intuitive way to interact with a mobile phone, similar in concept to Microsoft’s Xbox Kinect but on a much smaller scale.

Adding a whole new dimension to multimedia

Adding touch-free functionality to smartphones brings a whole new dimension to mobile games and applications. Developers can now take advantage of the Z-axis (movement towards and away from the screen), using simple hand shapes to create reality-like experiences. Examples of supported in-game actions include bowling, throwing darts and playing rock-paper-scissors.

Hand gesture recognition also makes other scenarios easier: controlling the phone with dirty or wet hands, managing calls while driving or working, and using a smartphone while wearing gloves. Mobile operating systems such as Android are also finding their way onto big screens such as TVs, which will bring this experience to a broader audience on a wider range of devices. Controlling your TV with your hands, with no need for a remote control or an external 3D camera, is the kind of freedom consumers are looking for.

“Interacting with consumer electronics is about to take another giant leap forward as hand gesture recognition is increasingly incorporated into our smartphones, tablets, laptops, all-in-ones and more,” said Assaf Gad, vice president of marketing for PointGrab. “It’s an exciting time for both OEMs and end-users, as our devices are becoming even more intuitive and entertaining.”

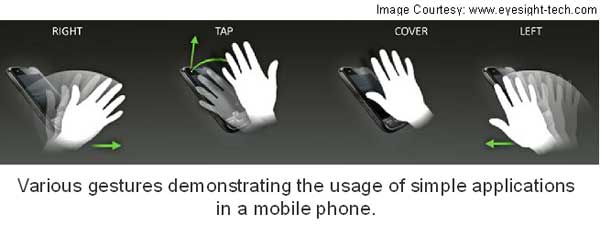

Korean phone maker Pantech, best known in the U.S. for low-end Android and messaging phones, has added Kinect-like gesture recognition to its Vega LTE Android handsets, allowing users to control the handset with gestures. The recognition software comes from Israel’s eyeSight Mobile Technologies.

According to eyeSight, gestures are useful when touch input is impractical, such as when driving or wearing gloves. The software uses the phone’s front-facing camera to let owners control their phones by waving a hand. Functions that can be performed by gesture include answering calls and playing music.
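The article does not describe how eyeSight’s software works internally, so the following is only a minimal sketch of one textbook way to spot a horizontal hand wave in front-camera frames: frame differencing. It assumes grayscale frames given as lists of pixel rows; the function names and the thresholds `pixel_thresh` and `min_shift` are hypothetical choices for illustration, not eyeSight’s method or API.

```python
from typing import List, Optional, Sequence

# Hypothetical frame format: rows of grayscale pixel intensities (0-255).
Frame = List[List[int]]


def motion_centroid_x(prev: Frame, curr: Frame, pixel_thresh: int = 30) -> Optional[float]:
    """Return the mean column index of pixels that changed between two frames, or None."""
    total, count = 0, 0
    for row_prev, row_curr in zip(prev, curr):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            if abs(a - b) > pixel_thresh:  # pixel changed enough to count as motion
                total += x
                count += 1
    return total / count if count else None


def classify_swipe(frames: Sequence[Frame], min_shift: float = 0.3) -> Optional[str]:
    """Classify a sequence of frames as a 'left' or 'right' wave, or None.

    min_shift is the fraction of the frame width the motion centroid must travel.
    """
    if len(frames) < 3:
        return None
    width = len(frames[0][0])
    # Track where motion occurs between each pair of consecutive frames.
    xs = []
    for prev, curr in zip(frames, frames[1:]):
        cx = motion_centroid_x(prev, curr)
        if cx is not None:
            xs.append(cx)
    if len(xs) < 2:
        return None
    # Net horizontal travel of the moving region, normalised by frame width.
    shift = (xs[-1] - xs[0]) / width
    if shift >= min_shift:
        return "right"
    if shift <= -min_shift:
        return "left"
    return None
```

Frame differencing is computationally cheap, but it responds to any movement in view, not just a hand, so changing backgrounds and lighting can easily confuse it.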

“Natural hand gesture recognition is an upcoming user interface capability. Along with natural language recognition such as Siri or Google Now, we are seeing a major user interface evolution similar to the one which occurred when touch screens were introduced in mobile devices,” said Amnon Shenfeld, eyeSight’s VP R&D.

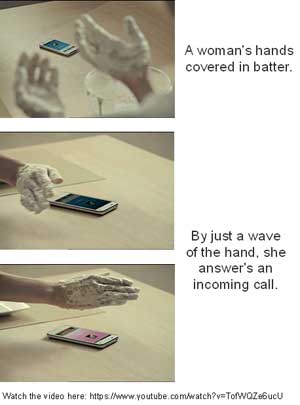

A YouTube video shows the technology in action: a woman whose hands are covered in batter answers an incoming call with the help of gesture recognition. Snapshots from the video are shown in the accompanying image.

Applications of Gesture Recognition Technology in Today’s Mobile Phones

A few examples of use cases that enhance the user experience compared with what is available today:

1. Call control: answer an incoming call (with the speaker on) with a wave of the hand while driving.

2. Media control: skip tracks or adjust the volume on your media player with simple hand motions. Lean back and control what you watch or listen to without reaching for the device.

3. Scrolling: scroll web pages or an eBook with simple left and right hand gestures. This is ideal when touching the device is impractical, such as when your hands are wet or dirty or you are wearing gloves (we are all familiar with the annoying smudges that touching leaves on the screen).

4. Media hub: a user can dock the smartphone to a TV, watch content from the device, and control it touch-free from afar.
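The use cases above reuse a small set of gestures (a wave, left and right motions) whose meaning depends on what the phone is doing at the time. A minimal sketch of that idea, assuming a recogniser that already reports gestures, is a lookup table keyed on (context, gesture). The `Gesture` and `Context` enums, the up/down volume gestures and all action names are hypothetical placeholders, not part of any real phone platform API.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Gesture(Enum):
    WAVE = "wave"
    SWIPE_LEFT = "swipe_left"
    SWIPE_RIGHT = "swipe_right"
    SWIPE_UP = "swipe_up"      # hypothetical volume gesture
    SWIPE_DOWN = "swipe_down"  # hypothetical volume gesture


class Context(Enum):
    INCOMING_CALL = "incoming_call"
    MEDIA_PLAYING = "media_playing"  # also covers a phone docked to a TV
    READING = "reading"              # web page or eBook


# Hypothetical action names; a real handset would call its own telephony/media APIs.
ACTIONS: Dict[Tuple[Context, Gesture], str] = {
    (Context.INCOMING_CALL, Gesture.WAVE): "answer_call_speaker_on",
    (Context.MEDIA_PLAYING, Gesture.SWIPE_RIGHT): "next_track",
    (Context.MEDIA_PLAYING, Gesture.SWIPE_LEFT): "previous_track",
    (Context.MEDIA_PLAYING, Gesture.SWIPE_UP): "volume_up",
    (Context.MEDIA_PLAYING, Gesture.SWIPE_DOWN): "volume_down",
    (Context.READING, Gesture.SWIPE_LEFT): "scroll_forward",
    (Context.READING, Gesture.SWIPE_RIGHT): "scroll_back",
}


def dispatch(context: Context, gesture: Gesture) -> Optional[str]:
    """Return the action bound to this gesture in the current context, or None to ignore it."""
    return ACTIONS.get((context, gesture))
```

Returning `None` for unmapped pairs means a stray wave while reading is simply ignored, which helps limit accidental triggers.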

To Sum It All Up

Touch remains a key user interface, but with all the sensors in today’s smartphones, we can expect to see more of this technology as devices begin to adopt what might be called the “invisible interface”. Gesture recognition may seem a mismatch for phones, since people tend to keep them close at hand, but proponents argue there are plenty of reasons why people would want to control their handsets with motions.

For example, once a phone is mounted on a car dashboard, a driver could use gestures to answer or ignore an incoming call, activate voice recognition features, zoom in or out on a map and adjust the device’s volume. The exact gestures would vary by phone model or personal preference, but would likely include a series of waves, swoops, pats and flicks.

However, all is not simple…

Many people question the need for touchless interaction on a device that is designed to be in your hand at all times! The use cases for touchless gestures on a mobile device are few and far between, and the only reason to use them on a smartphone or tablet falls into just one category: touch-free means smudge-free.

If your hands are dirty or you hate smudges, touch-free controls are a benefit, but beyond that there is little need for touchless gesture controls; hands are designed to touch and manipulate things. Moreover, a key limitation of this technology is that it only recognises motions, such as a hand flick or circular movement, within a six-inch range. Power consumption is another key issue for battery-powered devices, since the camera and recognition software must keep running to watch for gestures.

Several challenges remain for gesture recognition on mobile devices, including reliability in poor lighting, variations in the background and high power consumption. However, these problems may well be overcome with different tracking solutions and new technologies.

The author is a tech correspondent at EFY Bengaluru.