The mobility landscape in India has become increasingly dynamic. But while most players are focusing on electric mobility, a young engineering student is pioneering an effort to build the country’s first fully autonomous vehicle. Rahul Chopra and Siddha Dhar spoke to Gagandeep Reehal, co-founder of Minus Zero, who, along with co-founder Gursimran Kalra, is striving to develop self-driving technology for India’s unruly roads. Here’s what we learnt.

Q. Why the name ‘Minus Zero’? What does it signify?

A. Philosophically, minus zero implies that nothing is left behind, signifying our aim for perfection: 100% minus 0 is still 100%. Mathematically, negative zero doesn’t exist, and neither does negative time. But if you go deeper, there is a chance that negative zero holds a lot of significance in computer science itself, significance that we as humans are yet to comprehend.

Ultimately, it is also a catchy name that is good for brand building: it is peculiar enough to make people wonder, so it stays in their minds.
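As an aside on the computer-science point: the IEEE 754 floating-point standard, which nearly all modern hardware follows, does define a signed zero. The short Python sketch below uses only the standard library; the helper `has_sign_bit` is a hypothetical name chosen for illustration. It shows that `-0.0` compares equal to `0.0` yet keeps its sign bit, and that the sign can change the result of a function such as `math.atan2`.

```python
import math


def has_sign_bit(x: float) -> bool:
    """True if the IEEE 754 sign bit of x is set (works for zeros too)."""
    return math.copysign(1.0, x) < 0


neg_zero = -0.0
pos_zero = 0.0

print(neg_zero == pos_zero)        # True: the two zeros compare equal
print(has_sign_bit(neg_zero))      # True: yet -0.0 keeps its sign bit
print(repr(neg_zero))              # -0.0
# The sign of zero picks the side of atan2's branch cut on the negative x-axis.
print(math.atan2(pos_zero, -1.0))  # 3.141592653589793
print(math.atan2(neg_zero, -1.0))  # -3.141592653589793
```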

Q. What are you and your team trying to achieve with Minus Zero?

A. Autonomous vehicles like self-driving cars have been around for some time. For the last six to seven years, they have been gaining a lot of traction in the automotive industry and have also been a hot topic in the tech community. But contrary to the promises made, full self-driving still seems a far-fetched goal, because current approaches face capital-intensive and time-consuming technological bottlenecks.

Traffic in the US, the UK, or any other developed country is very structured. Compare that to India, where traffic is haywire and anything can happen at any time. So the highly data-dependent AI that works elsewhere won’t be a viable or safe solution for the traffic found in emerging markets, and that is a major challenge.

Our vision is to build a more affordable and safer future of mobility for emerging markets with driverless taxis that can handle the tricky ODDs (operational design domains) found here.

Q. What is the definition of Level 5 autonomy?

A. There are basically six levels of autonomy, numbered 0 to 5. Level 0 is where the driver is completely responsible for driving the car. Levels 1, 2, and 3 are driver-assistance systems: if the driver loses focus for any reason and risks losing control of the vehicle, the vehicle can drive itself for a couple of seconds. This is where carmakers like Tesla are.

Level 4 autonomy is achieved when an autonomous vehicle can operate without any driver within geo-fenced areas or a defined ODD, say, urban areas with proper roads.

A vehicle with Level 5 autonomy can drive itself anywhere around the world, on-road or off-road, without the need for human intervention to supervise or take over in case of any anomaly. A car with Level 5 autonomy can handle any kind of traffic, whether it is a mountainous area or a jam-packed urban space like New Delhi’s Sadar Bazaar. In fact, it can drive better than a human.
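The levels described above can be summarised in a few lines of Python. This is a simplified sketch: the level names follow the SAE J3016 taxonomy, while the helper `can_operate_driverless` and its `inside_odd` flag are hypothetical, introduced only to capture the key distinction that Level 4 runs without a driver only inside its ODD, whereas Level 5 has no such limit.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """SAE J3016 driving-automation levels (names abbreviated)."""
    NO_AUTOMATION = 0      # the driver does everything
    DRIVER_ASSISTANCE = 1  # steering OR speed support
    PARTIAL = 2            # steering AND speed support, driver supervises
    CONDITIONAL = 3        # system drives, a human must be ready to take over
    HIGH = 4               # no driver needed, but only inside the ODD
    FULL = 5               # no driver needed anywhere


def can_operate_driverless(level: AutonomyLevel, inside_odd: bool) -> bool:
    """Return True if no human needs to be in the driver's seat."""
    if level == AutonomyLevel.FULL:
        return True        # Level 5: unrestricted operation
    if level == AutonomyLevel.HIGH:
        return inside_odd  # Level 4: only within its geo-fenced ODD
    return False           # Levels 0-3: a human must be available


for level in AutonomyLevel:
    print(level.value, level.name, can_operate_driverless(level, inside_odd=True))
```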

Q. But a Tesla car can drive itself from one location to another and even follows traffic signals on US roads. Wouldn’t that be at least Level 4?

A. No, because Tesla says that a driver must be in the seat with their eyes on the road. At Level 4, there is no driver, but operation is limited to certain geographical areas. So Tesla may be able to operate at Level 4, but it is not safe at that level. Even Tesla calls its system Level 3 because the driver has to be there; they are still developing their technology.

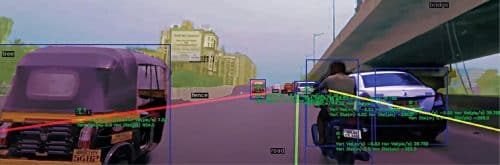

Q. In your video demonstrating your tech on a three-wheeler, nobody is sitting in the driver’s seat, but someone is sitting at the back with a laptop wired to the vehicle. So, was it really autonomous?

A. The person was there for safety and backup, as we are still developing our tech. The biggest challenge when you’re driving on a public road is ensuring the safety of everyone around you. A camera or another component could fail, so the person at the back had to be there as a precaution. The wires were connected to the braking mechanism so that we could disengage the autonomy system and brake immediately in case of any anomaly. Fortunately, the person at the back did not have to intervene at any point, and the prototype handled itself properly.

Q. How many trial runs have been done so far on public roads?

A. We have tested it numerous times in private testing areas like open grounds and private campuses. On public roads, however, we have so far been able to test it only in a limited capacity, because there are no self-driving regulations in India right now, so we are not legally allowed to do more.

Q. What does the government need to do for startups like yours to provide you access to a testing environment?

A. It’s a bit of a catch-22 right now. Since there are currently no self-driving cars in the country, there are no regulations governing them, and without regulations it is challenging to develop and test autonomous vehicles on the road. It’s a chicken-and-egg problem.

There are three aspects to the laws that need to be formulated, some of which have already been passed in the US, in states like Arizona (notably in Phoenix) and California, as well as in Singapore and Germany.

The first aspect is how safety standards are defined. For conventional vehicles, safety standards include requirements such as airbags. Similar safety standards need to be set for self-driving cars as well.

Second comes liability. For instance, if a car is involved in an accident, who is liable? In India, the owner of the vehicle is usually liable. But for a self-driving car, who will be liable: the passenger, the manufacturer, or the service provider?

And finally, we need laws on privacy, because self-driving cars often collect data while they are being driven. We can collect any amount of data during testing, but a privacy law for autonomous cars would determine what data we can collect. Some companies even record full video while the car is being driven. Does that not infringe on the privacy of others? In a nutshell, the government needs to frame regulations or guidelines for safety, liability, and privacy.

India is far behind in passing such rules. Among developed and developing countries, India ranks last or second-to-last on autonomous-vehicle regulation, whereas countries with very few established self-driving companies, like Singapore, have already passed rules for autonomous vehicles.

Q. How do you see the landscape of autonomous vehicles? What’s being done by other major OEMs?

A. Leading brands with vehicles in India, like Mercedes, are focusing on ADAS (advanced driver-assistance systems) features such as rear parking sensors, which fall under Level 1 autonomy. As per public sources, nobody is targeting Level 4+ autonomy in India right now, although a couple of startups and other corporates want to explore this field. But the major challenge, one that even companies like Tesla face, is that Indian traffic is so dynamic and random that their systems will not be able to handle it. These technological hurdles posed by Indian traffic are exactly where we have a USP, because our tech is particularly suited to Indian traffic.

Q. What is the difference between your tech and that of your counterparts?

A. Our biggest USP is that our AI is less dependent on data. As a self-driving firm, you usually need more and more data for your system to increase its accuracy, so data is both a boon and a bane for the AI industry. All other self-driving companies need to collect a huge amount of data for each city to make their system robust for that city.

This is where we have the advantage, since we don’t need as much data. Our system is a nature-inspired AI. Humans do not need to see innumerable images of a bird from various angles to understand what it is; one or two pictures are usually enough for our brain to learn what a bird looks like and identify one when it is in front of us.

Similarly, our system can extract 3D information from 2D data alone. We have zero dependence on lidar: the 3D information that lidars generate, we can get from our camera feed alone, without compromising on accuracy.

Q. In other words, it’s primarily an edge-based AI?

A. Yes, it is entirely on the edge. In fact, for the e-rickshaw prototype in our video, all the processing was done on a gaming laptop, a clear indicator that our system doesn’t require huge processing power to make decisions. The rickshaw prototype had three goals. First, to show that it can handle Indian traffic. Second, to show that we do not need very high-end hardware to process that kind of data: with an intuitive algorithm, the same thing can run on normal hardware. Third, to show that this technology is not as expensive as the industry claims it to be.

Q. You’re still pursuing your engineering degree. So, where did you get the background to develop such revolutionary tech?

A. While I am still studying engineering at the Thapar Institute of Engineering and Technology, I have been in the AI industry for a very long time. I started coding in grade 4, and by the time I graduated from high school, I had already tried my hand at different types of technologies, AI in particular. You can often find me as a judge, mentor, or speaker on AI and autonomous robotics at technical events, conferences, and colleges across the world.

The idea of Minus Zero started as a research paper on ‘AI that is less dependent on data,’ but research limited to pen and paper holds little value in real-life applications. We wanted to use this research for the benefit of humanity. That is when Gursimran (co-founder of Minus Zero) and I started exploring where this technology would be most feasible, and we realised that self-driving cars would benefit the most from this kind of AI.

Q. Are your patents for software only, or do you have one for electronics hardware too?

A. There are some for hardware configurations as well. People think that self-driving is only a software matter, but it is not. It is a software plus hardware problem. If you want to achieve Level 5 autonomy, hardware also plays a major role.

Q. How does your tech reduce dependence on data?

A. Certain companies take an end-to-end approach, which uses one very big neural network with billions of parameters: on one side you input all the sensor data, and on the other side you get the output. The bigger the network, the more data it needs, so these companies have to drive the same lanes over and over to capture as many possible scenarios as they can. That is why an end-to-end approach needs a lot of training data. You do not know what is going on inside the neural network or how its parameters are being optimised; you just know that more data increases accuracy, so you keep feeding it more and more. This end-to-end approach is followed by companies that have reached Level 4 autonomy in certain countries.

Then comes the approach taken by companies like Tesla and us. Instead of treating driving as one big task, we divide it into multiple subtasks, such as object detection, semantic segmentation, motion planning, and other smaller tasks. Rather than perfecting one big task, our goal is to perfect each small task individually, so that we know exactly where we need to improve, what exactly needs data, and what has already been perfected.

It is easier to perfect an object detection algorithm with less data than an entire driving task. In a driving task, many kinds of situations can arise and every situation is different, so you need more and more data. But perfecting, for instance, the object detection algorithm can be done with a smaller amount of data. Tesla has done the same, and that is why it is not limited to a few cities. Their Level 3 car can drive autonomously almost everywhere in the world where it is currently deployed, whereas other companies are still limited to a few cities, because to expand to other cities they have to collect more and more data.
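To make the modular idea concrete, here is a minimal Python sketch, assuming three hypothetical stages (`detect_objects`, `segment_road`, `plan_motion`) whose placeholder logic stands in for trained models. It is not Minus Zero’s implementation; it only shows why splitting driving into subtasks lets each stage be tested, and supplied with data, on its own.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Detection:
    label: str
    x: float  # metres ahead of the ego vehicle
    y: float  # metres to the left (negative = right)


@dataclass
class Frame:
    detections: List[Detection] = field(default_factory=list)
    drivable: bool = True  # stand-in for a semantic-segmentation result


def detect_objects(raw: dict) -> List[Detection]:
    """Stage 1 placeholder: a trained detector would run here."""
    return [Detection(**obj) for obj in raw["objects"]]


def segment_road(raw: dict) -> bool:
    """Stage 2 placeholder: a segmentation model would label road pixels."""
    return raw["road_clear"]


def plan_motion(frame: Frame, cruise_speed: float = 5.0,
                lane_half_width: float = 1.5) -> float:
    """Stage 3: choose a target speed (m/s) from the earlier stages' outputs."""
    if not frame.drivable:
        return 0.0
    gaps = [d.x for d in frame.detections
            if d.x > 0 and abs(d.y) < lane_half_width]  # objects in our lane
    if not gaps:
        return cruise_speed
    nearest = min(gaps)
    if nearest < 5.0:
        return 0.0                             # too close: stop
    return min(cruise_speed, nearest / 2.0)    # slow down as the gap shrinks


def drive_step(raw: dict) -> float:
    """Chain the stages; each one can be tested and improved separately."""
    frame = Frame(detections=detect_objects(raw), drivable=segment_road(raw))
    return plan_motion(frame)


print(drive_step({"objects": [{"label": "car", "x": 8.0, "y": 0.5}],
                  "road_clear": True}))  # 4.0
```

Because `plan_motion` only consumes a `Frame`, it can be unit-tested with hand-made detections and no camera data at all; likewise, the detector can be evaluated in isolation against its own labelled data.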

Second, since we do not use lidar, we don’t need lidar data, which reduces the data dependency further. This is how a major chunk of the data dependency is removed.

Next, other companies normally need continuous data when driving from one point to another. In our case, we do not need continuous data; we need specialised data, for example, a couple of minutes of typical Delhi traffic or discontinuous data from different places. Even with a multi-task approach, other companies collect all kinds of data: camera data plus data from ultrasonic sensors, radar, IMUs, GPS, and so on.

This is where our AI, which is less dependent on data, comes in. We only need camera data, and only for particular tasks like object detection and segmentation. Other quantities, instead of being predicted by a neural network, are calculated from the outputs of these first few subtasks. So we need data for only five tasks instead of all of them, because the tech can deduce things like the distance and size of a car from 2D data with the help of certain proprietary algorithms. Using such heuristics to calculate certain things, instead of predicting everything, is also computationally efficient.
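Minus Zero’s algorithms are proprietary and are not described in the interview. As a generic illustration of how distance and size can be calculated, rather than predicted, from a single 2D image, the sketch below uses the textbook flat-ground pinhole-camera model. It assumes a level road, a camera with zero pitch, and a known focal length, horizon row, and mounting height; the values used here are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Camera:
    focal_px: float     # focal length expressed in pixels
    horizon_row: float  # image row (pixels from the top) of the horizon
    height_m: float     # height of the camera above the road, in metres


def ground_distance(cam: Camera, bottom_row: float) -> float:
    """Distance (m) to where an object's bounding box meets the road.

    Flat-ground pinhole model: Z = f * h_cam / (v_bottom - v_horizon).
    """
    dv = bottom_row - cam.horizon_row
    if dv <= 0:
        raise ValueError("the contact point must lie below the horizon")
    return cam.focal_px * cam.height_m / dv


def object_size(cam: Camera, distance_m: float,
                width_px: float, height_px: float) -> Tuple[float, float]:
    """Back-project box size to metres: real size = Z * pixel size / f."""
    return (width_px * distance_m / cam.focal_px,
            height_px * distance_m / cam.focal_px)


cam = Camera(focal_px=1000.0, horizon_row=360.0, height_m=1.2)  # hypothetical
z = ground_distance(cam, bottom_row=460.0)
w, h = object_size(cam, z, width_px=150.0, height_px=125.0)
print(f"distance {z:.1f} m, width {w:.1f} m, height {h:.1f} m")
# distance 12.0 m, width 1.8 m, height 1.5 m
```

The flat-ground assumption is the weak point of this heuristic: on slopes or with camera pitch from braking, the horizon row shifts and distances drift, which is one reason practical systems calibrate continuously or combine several cues.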

Our system decreases data usage in three ways: we don’t need lidar data; we need only selective data, thanks to multi-task learning; and our proprietary AI, being less dependent on data, extracts the maximum information from a limited amount of data. We are in the process of filing patents for the same.

Q. You used a gaming laptop for the demo. Will you be developing any specialised electronics hardware in the near future?

A. We are a technology company. We will be sourcing electronics hardware with custom configurations through supply chain partnerships.

Q. Can you shed some light on how you reduce the number of cameras and radars?

A. We use eight cameras to get a 360-degree view of the surroundings and prepare a bird’s-eye view. From 2D data alone, we get the size and acceleration of every object the cameras detect, as well as its vertical and horizontal speed. We then reconstruct a map from an aerial view with the different objects plotted on it, and plan trajectories to move our vehicle forward. All of this is done by our software.
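Plotting detections on a bird’s-eye map can be sketched as a change of coordinates. This Python example assumes a hypothetical layout of eight cameras spaced 45 degrees apart (so each needs a horizontal field of view of at least 360 / 8 = 45 degrees, plus some overlap), assumes each detection already comes with a bearing and range estimate, and ignores each camera’s offset from the vehicle centre.

```python
import math
from typing import Iterable, List, Tuple

NUM_CAMERAS = 8
# Hypothetical layout: camera 0 faces forward and the rest are spaced evenly
# counter-clockwise, so camera 2 faces left and camera 4 faces backwards.
CAMERA_YAWS_DEG = [i * 360.0 / NUM_CAMERAS for i in range(NUM_CAMERAS)]
MIN_FOV_DEG = 360.0 / NUM_CAMERAS  # 45 degrees per camera, before overlap


def to_birds_eye(cam_index: int, bearing_deg: float,
                 range_m: float) -> Tuple[float, float]:
    """Return (x forward, y left) in metres in the ego-vehicle frame.

    bearing_deg is the object's angle from the camera's optical axis,
    positive towards the camera's left.
    """
    yaw = math.radians(CAMERA_YAWS_DEG[cam_index] + bearing_deg)
    return range_m * math.cos(yaw), range_m * math.sin(yaw)


def build_map(detections: Iterable[Tuple[str, int, float, float]]
              ) -> List[Tuple[str, float, float]]:
    """Turn (label, cam_index, bearing_deg, range_m) tuples into map points."""
    return [(label, *to_birds_eye(cam, bearing, rng))
            for label, cam, bearing, rng in detections]


for label, x, y in build_map([("car", 0, 0.0, 10.0), ("rickshaw", 2, 10.0, 5.0)]):
    print(f"{label}: x={x:.1f} m, y={y:.1f} m")
```

Once every detection sits in the same ego frame, a planner can reason about all of them together, regardless of which camera saw them.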

Q. Basically, your system works on a reconstruction where you capture the image of the objects from different cameras to get the 3D data. Is that correct?

A. Yes, there are eight cameras at different locations. Each camera has a certain view angle, so combining the feeds from all the cameras gives you a 360-degree video. From this 360-degree horizontal video, we can extract information like the speed and distance of non-ego vehicles. But for each vehicle, this information is captured using a single camera only. This is our first patent: getting the dynamics of all the entities around you. From a single camera, you can detect an entity’s vertical or horizontal speed, acceleration, size, and trajectory, which in turn are used to plan our own vehicle’s trajectory.
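The interview does not say how speed and acceleration are derived, so the following is only a generic sketch: once an entity’s position has been estimated in each frame (for example, on a bird’s-eye map), velocity and acceleration can be approximated by finite differences over the frame interval `dt`, and a constant-acceleration model then extrapolates its trajectory. The track values are hypothetical, and real systems typically smooth these noisy estimates, for instance with a Kalman filter.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres on the bird's-eye map


def finite_differences(positions: List[Point], dt: float
                       ) -> Tuple[List[Point], List[Point]]:
    """Estimate velocities and accelerations from evenly spaced positions.

    Returns len(positions) - 1 velocities and len(positions) - 2 accelerations.
    """
    if len(positions) < 3:
        raise ValueError("need at least three positions to estimate acceleration")
    vel = [((x1 - x0) / dt, (y1 - y0) / dt)
           for (x0, y0), (x1, y1) in zip(positions, positions[1:])]
    acc = [((vx1 - vx0) / dt, (vy1 - vy0) / dt)
           for (vx0, vy0), (vx1, vy1) in zip(vel, vel[1:])]
    return vel, acc


def current_velocity(vel: List[Point], acc: List[Point], dt: float) -> Point:
    """vel[-1] is the average over the last interval (centred half a frame
    back), so advance it by half a frame using the latest acceleration."""
    (vx, vy), (ax, ay) = vel[-1], acc[-1]
    return vx + 0.5 * dt * ax, vy + 0.5 * dt * ay


def predict(position: Point, velocity: Point, accel: Point, t: float) -> Point:
    """Constant-acceleration extrapolation t seconds ahead."""
    (px, py), (vx, vy), (ax, ay) = position, velocity, accel
    return (px + vx * t + 0.5 * ax * t * t,
            py + vy * t + 0.5 * ay * t * t)


# A vehicle speeding up along x while holding its lane, sampled every 0.5 s.
DT = 0.5
track = [(0.0, 2.0), (1.0, 2.0), (3.0, 2.0), (6.0, 2.0)]
vel, acc = finite_differences(track, DT)
v_now = current_velocity(vel, acc, DT)
print(vel)    # [(2.0, 0.0), (4.0, 0.0), (6.0, 0.0)]
print(acc)    # [(4.0, 0.0), (4.0, 0.0)]
print(v_now)  # (7.0, 0.0)
print(predict(track[-1], v_now, acc[-1], t=1.0))  # (15.0, 2.0)
```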

Q. Are you using any other sensors, such as radar?

A. Yes, we also use radar and ultrasonic sensors as backups. They are not primary sensors but secondary ones that help optimise the output from the cameras. For our own localisation, we use GPS and an IMU, which are necessities because you also need to know the velocity of your own vehicle, and they are comparatively cheap. The only sensor we have removed is lidar, to reduce the expense. Overall, we keep some radars and other sensors as backups to ensure that our lidar-free tech is safer than lidar-based tech.
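One common, lightweight way to combine GPS and an IMU for the ego vehicle’s speed is a complementary filter: integrate the IMU’s acceleration for smooth short-term updates, and pull the estimate towards the GPS speed whenever a fix arrives, to cancel drift. This one-dimensional Python sketch is a generic illustration, not Minus Zero’s localisation stack; the blend weight `alpha` and the sample values are hypothetical.

```python
from typing import List, Optional, Sequence


def fuse_speed(accels: Sequence[float], gps_speeds: Sequence[Optional[float]],
               dt: float, alpha: float = 0.98, v0: float = 0.0) -> List[float]:
    """Complementary filter for the ego vehicle's speed in m/s.

    accels:     IMU longitudinal acceleration samples (m/s^2)
    gps_speeds: GPS speed at the same instants, or None when there is no fix
    alpha:      weight on the IMU-integrated prediction (0..1)
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    v = v0
    history = []
    for a, gps in zip(accels, gps_speeds):
        v += a * dt                   # predict: integrate the IMU reading
        if gps is not None:           # correct: blend in the GPS fix
            v = alpha * v + (1.0 - alpha) * gps
        history.append(v)
    return history


# IMU reports 1 m/s^2 while GPS fixes arrive only every other sample.
print(fuse_speed([1.0, 1.0, 1.0, 1.0], [None, 1.1, None, 2.0], dt=0.5, alpha=0.5))
```

A higher `alpha` trusts the IMU more between fixes. Production systems more often use a Kalman filter, which derives this weighting from the sensors’ noise statistics instead of a hand-picked constant.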

I must add that while the tech itself is cool, safety is our primary goal. We don’t need self-driving cars because they are cool but because there are too many accidents due to human negligence. We need to replace humans with something safer.

Q. What is your proposed business model?

A. We are starting with driverless fleets for micro-mobility in private institutions like educational campuses, industrial parks, theme parks, airports, etc. Post that, we will be entering into public-road driverless shared mobility solutions in partnership with OEMs. Singapore will serve as our first target market due to favourable regulatory infrastructure.

Usually, a ride-hailing company takes twenty percent of the fare as commission, while the remaining eighty percent goes to the driver. In our case there are no drivers, so even after accounting for maintenance and charging costs, we have a better return on investment than conventional ride-hailing services.
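The per-ride arithmetic behind this claim can be sketched as follows. The 20/80 split comes from the interview; the fare and the maintenance and charging costs are hypothetical placeholders, and the sketch ignores the capital cost of the vehicles and sensors, which a full return-on-investment comparison would have to include.

```python
def ride_hailing_operator_take(fare: float, commission: float = 0.20) -> float:
    """The operator keeps the commission; the rest goes to the driver."""
    return fare * commission


def driverless_operator_take(fare: float, maintenance: float,
                             charging: float) -> float:
    """With no driver, the operator keeps the fare minus running costs."""
    return fare - maintenance - charging


fare = 200.0  # hypothetical fare per ride
print(ride_hailing_operator_take(fare))            # 40.0
print(driverless_operator_take(fare, 30.0, 20.0))  # 150.0 (hypothetical costs)
```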

Q. Are we correct to understand that you might be forced to launch a driverless fleet in Singapore rather than India?

A. We’ll be ready for India by 2023, but the lack of regulations is what is stopping us from entering right away. These regulations are not a one-person show. Whenever you are making a disruptive change, it can’t be done by one person or company. It takes a collaborative effort from automotive companies, major OEMs, regulatory bodies, and particularly the media, which is why I take every opportunity to get that message out.

But things don’t end here. We’ll be starting with a smaller fleet for private campuses like educational institutions, industrial parks, and theme parks, and we’ll begin beta testing on these campuses within a year. After that, we will enter Singapore for public-road testing and deployment.

Q. Why do you want to shift to Bengaluru when you are studying in Punjab?

A. Most of the community and the people working for us are from that area, and Bengaluru also provides better industry connections. Since we will be partnering a lot, Punjab is not the ideal place to set up a company, as the technical resources available there are limited.

There are also other factors that add to costs and eat away most of your capital. The Karnataka government is very supportive of startups. It has been very supportive of electric vehicles, and we really hope it will be the same for autonomous vehicles. Moreover, there are large aerospace parks in Bengaluru where even space-tech companies test their engines; we need that kind of space to test our vehicles as well.

Q. Are you inviting investments?

A. Yeah, we are open to it.