Car companies have developed a design for a universal data format that allows standardised vehicle data exchange, opening up the crowd-sourcing paradigm to self-driving automobiles.

Sharing data such as real-time traffic, weather and parking-space information will become much faster and easier. The design, called the sensor ingestion interface specification (SENSORIS), has been taken up by ERTICO – ITS Europe, a Brussels-based body overseeing the development of globally adopted standards related to future automotive and transportation technologies.

The aim is to define a standardised interface for exchanging information between in-vehicle sensors and a dedicated cloud, as well as between clouds. Such an interface would enable broad access to vehicle sensor data and its delivery and processing, make it easy for all players to exchange that data, and support the enriched location-based services that are key to mobility services and automated driving.

Currently, 11 major automotive companies and suppliers have signed up for the SENSORIS innovation platform: AISIN AW, Robert Bosch, Continental, Daimler, Elektrobit, HARMAN, HERE, LG Electronics, NavInfo, PIONEER and TomTom. Pooling analogous data from millions of vehicles around the world is expected to help make fully automated driving possible. Each vehicle would have access to near-real-time information on road conditions, traffic and hazards, helping self-driving vehicles make better decisions on the road.

Many of today's futuristic projects, from self-driving cars and advanced robots to Internet of Things (IoT) applications for smart cities, smart homes and smart health, rely on data from sensors of various kinds. To train machine-learning systems and meet other complex technical goals, enormous quantities of data must be gathered, synthesised, analysed and turned into action in real time.

A self-driving car uses complex image recognition together with a radar/lidar system to detect objects on the road. These objects may be fixed (curbs, traffic signals, lane markings) or moving (other vehicles, bicycles, pedestrians). One validation effort of this kind involved checking the data from more than 1000 hours of car camera and radar/lidar recordings. At three frames per second, that amounts to almost 11 million images to analyse and correct, far too many to handle with off-the-shelf tools.
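The "almost 11 million" figure follows from simple arithmetic: hours × 3,600 seconds × frames per second. The helper name below is illustrative only.

```python
SECONDS_PER_HOUR = 3600


def frames_to_validate(hours: float, frames_per_second: float) -> int:
    """Total number of frames in `hours` of recordings sampled at `frames_per_second`."""
    return round(hours * SECONDS_PER_HOUR * frames_per_second)


total = frames_to_validate(hours=1000, frames_per_second=3)
print(f"{total:,} frames")  # 10,800,000 frames
```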

The validation tool's architecture is designed to check large amounts of sensor-derived information against real, direct observations, also known as ground-truth data. The tool thus provides a way to validate the accuracy of sensor-derived data and to make sure that the car would respond appropriately to actual objects and events, not to sensor artefacts.
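The article does not describe how the tool compares sensor output with ground truth. One common approach, shown here only as a sketch, matches detected bounding boxes to ground-truth boxes by intersection over union (IoU) and reports precision and recall per frame. The `Box` type, the 0.5 threshold and the greedy matching are all assumptions, not the tool's actual method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in image pixels: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union; 0.0 when the boxes do not overlap."""
    overlap = Box(max(a.x1, b.x1), max(a.y1, b.y1), min(a.x2, b.x2), min(a.y2, b.y2))
    inter = overlap.area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def validate_frame(detections, ground_truth, threshold=0.5):
    """Greedily match detections to ground-truth boxes and return (precision, recall).

    Unmatched detections are candidate sensor artefacts; unmatched ground truth are misses.
    """
    unmatched = list(ground_truth)
    true_positives = 0
    for det in detections:
        best = max(unmatched, key=lambda gt: iou(det, gt), default=None)
        if best is not None and iou(det, best) >= threshold:
            unmatched.remove(best)  # each real object may be matched only once
            true_positives += 1
    precision = true_positives / len(detections) if detections else 1.0
    recall = true_positives / len(ground_truth) if ground_truth else 1.0
    return precision, recall


truth = [Box(0, 0, 10, 10), Box(20, 20, 30, 30)]
detections = [Box(1, 1, 10, 10), Box(50, 50, 60, 60)]  # second one matches nothing real
print(validate_frame(detections, truth))  # (0.5, 0.5)
```

A low precision points at artefacts (the car "sees" things that are not there), while a low recall points at missed real objects.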

The future self-driving vehicle value chain will be driven by software feature sets, low system costs and high-performance hardware. Future autonomous vehicle concepts will be tested with simulation software based on agent-based modelling, which recreates real-world driving scenarios so that complex driving situations can be tested and validated virtually. This method places agents (vehicles, people, infrastructure) with specific driving characteristics (such as selfish, aggressive or defensive), together with their connections, in a defined environment (cities, test tracks, military installations) in order to understand the complex interactions that emerge during simulation testing.
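As a toy illustration of the agent-based idea, the sketch below queues driver agents with different characteristics at a junction and lets each take the first gap in oncoming traffic that its style accepts. The gap-acceptance thresholds, the "conflict" threshold and all names are hypothetical; real simulation suites model far richer behaviour.

```python
from dataclasses import dataclass

# Hypothetical gap-acceptance thresholds (seconds of oncoming-traffic gap) per driving style.
GAP_ACCEPTANCE_S = {"defensive": 5.0, "selfish": 3.0, "aggressive": 2.0}
SAFE_GAP_S = 3.5  # hypothetical: accepted gaps shorter than this count as conflicts


@dataclass
class DriverAgent:
    name: str
    style: str  # one of the keys of GAP_ACCEPTANCE_S

    def accepts(self, gap_s: float) -> bool:
        return gap_s >= GAP_ACCEPTANCE_S[self.style]


def simulate_junction(agents, gaps_s):
    """Agents queue at a junction and each takes the first gap it accepts.

    The gap stream is shared, so one agent's choice changes what the agents
    behind it see: the kind of interaction agent-based testing is meant to expose.
    """
    stream = iter(gaps_s)
    results = {}
    for agent in agents:
        rejected = 0
        for gap in stream:
            if agent.accepts(gap):
                results[agent.name] = {"rejected": rejected, "accepted_gap_s": gap,
                                       "conflict": gap < SAFE_GAP_S}
                break
            rejected += 1
        else:  # gap stream exhausted without an acceptable gap
            results[agent.name] = {"rejected": rejected, "accepted_gap_s": None,
                                   "conflict": False}
    return results


agents = [DriverAgent("A", "aggressive"), DriverAgent("B", "defensive"),
          DriverAgent("C", "selfish")]
results = simulate_junction(agents, [2.5, 4.0, 6.0, 3.2, 1.5])
for name, outcome in results.items():
    print(name, outcome)
```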

The benefits this approach aims to deliver to car manufacturers and their suppliers are faster product development cycles, lower costs from test-vehicle damage and a lower risk of harming vehicle occupants under test conditions.

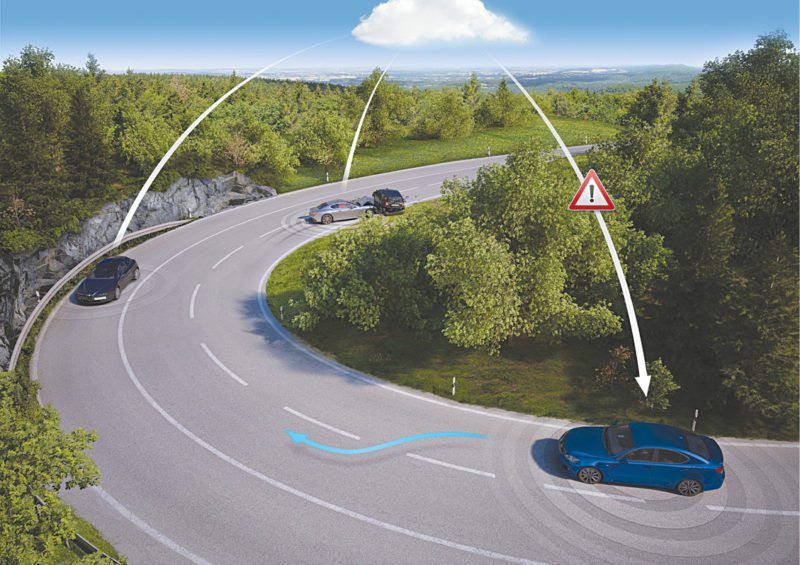

The goal has always been to find a home for this specification that is open, global and accessible to all. This is a vital step on the path to creating a shared information network for safer roads. If a car around the next corner hits the brakes because there is an obstruction, that information could be used to signal to the drivers behind to slow down ahead of time, resulting in smoother, more efficient journeys and lower risk of accidents. But that can only work if all cars can speak and understand the same language.

SENSORIS

Vehicles on the road are equipped with a multitude of sensors. This sensor data may be transferred from the vehicle to an analytic processing backend over any kind of transmission technology. An OEM or system vendor backend may sit between the individual vehicles and the analytic processing backend, acting as a proxy.

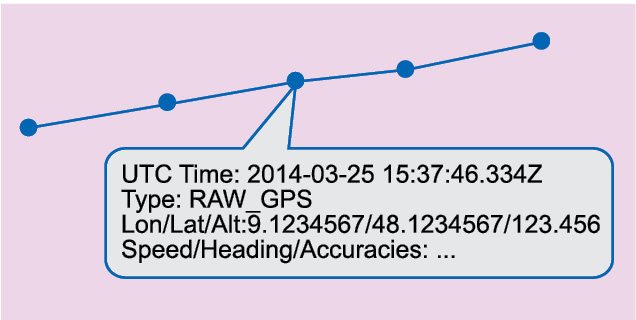

The sensor data interface specification defines the content of sensor data messages, and their encoding format(s), as they are submitted to the analytic processing backend. However, the specification may be used between other components as well. Sensor data is submitted as messages with various types of content; what all submitted messages have in common is that they relate to one or more locations.
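The sketch below shows the general shape of a location-referenced message. All class and field names are hypothetical and do not reproduce the actual SENSORIS schema; JSON is used purely for readability.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class PositionEstimate:
    timestamp_ms: int      # hypothetical: milliseconds since the Unix epoch
    latitude_deg: float    # WGS84 latitude of the vehicle centre
    longitude_deg: float   # WGS84 longitude of the vehicle centre


@dataclass
class SensorDataMessage:
    message_type: str                     # hypothetical content type, e.g. "hazard"
    locations: List[PositionEstimate]     # every message relates to one or more locations
    payload: dict = field(default_factory=dict)

    def __post_init__(self):
        if not self.locations:
            raise ValueError("a sensor data message must reference at least one location")

    def encode(self) -> str:
        # JSON is used here only for readability; the specification defines its own encoding(s).
        return json.dumps(asdict(self), sort_keys=True)


msg = SensorDataMessage(
    message_type="hazard",
    locations=[PositionEstimate(1_700_000_000_000, 48.1374, 11.5755)],
    payload={"kind": "obstruction"},
)
print(msg.encode())
```

Rejecting messages without a location in `__post_init__` mirrors the one property the text says all messages share.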

Sensor data messages may be time-critical and submitted in near-real-time, or they may be of informational value and submitted with an acceptable delay, accumulated together with other data. Neither priority nor latency requirements are part of the specification: the content and format of sensor data messages are independent of submission latency (near-real-time or delayed).

Vehicle metadata provides information about the vehicle that is valid for the entire path, including vehicle type information and the vehicle reference point. All absolute positions (longitude/latitude) reported to the sensor data ingestion interface are expected to refer to the centre of the vehicle, and all reported offsets are expected to be offsets from this centre point.

The altitude reported to the interface is expected to be the altitude at ground level (not the altitude of the GPS antenna). Alternatively, a different altitude may be reported as long as it differs from ground level by a constant offset; that offset must then be provided through the vehicle metadata.
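The two reference conventions above can be sketched in a few lines: removing a constant altitude offset declared in the metadata, and turning an offset from the vehicle centre into an absolute position. The field names, the clockwise-from-north heading convention and the flat-earth approximation are assumptions for illustration only.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; fine for offsets of a few hundred metres


@dataclass
class VehicleMetadata:
    vehicle_type: str
    altitude_offset_m: float = 0.0  # constant offset of reported altitude above ground level


def ground_altitude(reported_altitude_m: float, meta: VehicleMetadata) -> float:
    """Recover the altitude on ground by removing the offset declared in the metadata."""
    return reported_altitude_m - meta.altitude_offset_m


def offset_to_position(centre_lat, centre_lon, heading_deg, forward_m, left_m):
    """Turn an offset from the vehicle centre into latitude/longitude.

    Heading is measured clockwise from north; a flat-earth (equirectangular)
    approximation is used around the vehicle centre.
    """
    h = math.radians(heading_deg)
    # Rotate the vehicle-frame offset into north/east components.
    north = forward_m * math.cos(h) + left_m * math.sin(h)
    east = forward_m * math.sin(h) - left_m * math.cos(h)
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(centre_lat))))
    return centre_lat + dlat, centre_lon + dlon


meta = VehicleMetadata("passenger_car", altitude_offset_m=1.5)  # e.g. altitude at antenna height
print(ground_altitude(502.0, meta))                               # 500.5
# A sign detected 20 m ahead and 3 m to the left of a north-bound vehicle's centre.
print(offset_to_position(48.1374, 11.5755, heading_deg=0.0, forward_m=20.0, left_m=3.0))
```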

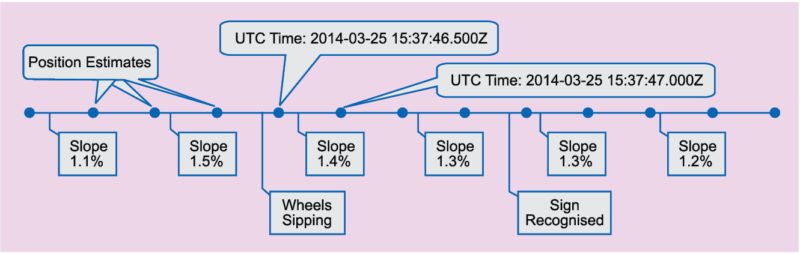

Vehicle dynamics are measurements beyond the position of a vehicle. Vehicle dynamics information is typically measured by onboard sensors at a high frequency compared with positions (for example, 5 Hz or 10 Hz). Depending on the set of sensors in the vehicle, different values could be provided. To keep complexity manageable, these raw measurements must be converted into meaningful values, so the reported values are the result of calculations performed either in the vehicle or in the OEM or system vendor backend.
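As a hypothetical example of turning raw measurements into meaningful values, the sketch below collapses four raw wheel speeds into one vehicle speed and derives longitudinal acceleration by finite differences at an assumed 10 Hz. Production systems use more careful estimators (slip, filtering); this only shows the kind of conversion meant.

```python
from typing import List, Sequence


def vehicle_speed(wheel_speeds_mps: Sequence[float]) -> float:
    """Collapse the individual wheel speeds into one vehicle speed (simple mean)."""
    return sum(wheel_speeds_mps) / len(wheel_speeds_mps)


def longitudinal_acceleration(speeds_mps: Sequence[float], rate_hz: float = 10.0) -> List[float]:
    """Finite-difference acceleration (m/s^2) between consecutive samples taken at rate_hz."""
    dt = 1.0 / rate_hz
    return [(later - earlier) / dt for earlier, later in zip(speeds_mps, speeds_mps[1:])]


# Three 10 Hz samples of four raw wheel speeds (m/s).
raw = [[10.0, 10.0, 10.25, 9.75], [10.5, 10.5, 10.5, 10.5], [11.0, 11.0, 11.0, 11.0]]
speeds = [vehicle_speed(sample) for sample in raw]
print(speeds, longitudinal_acceleration(speeds))
```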

The fundamental data element for rich sensor data submission is a path: a list of position estimates ordered from oldest to newest. A path can be very short, for example for near-real-time events that are transmitted immediately after they occur. It can also be very long, for example an entire drive over many hours that records the vehicle trace and events for later submission.
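A minimal sketch of a path that enforces the oldest-first ordering is shown below. The class and field names are hypothetical, not taken from the specification.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class PositionEstimate:
    timestamp_ms: int
    latitude_deg: float
    longitude_deg: float


@dataclass
class Path:
    """Position estimates ordered from oldest to newest."""
    positions: List[PositionEstimate] = field(default_factory=list)

    def append(self, estimate: PositionEstimate) -> None:
        # Enforce the oldest-first ordering required for a path.
        if self.positions and estimate.timestamp_ms < self.positions[-1].timestamp_ms:
            raise ValueError("position estimates must be appended oldest first")
        self.positions.append(estimate)

    def duration_s(self) -> float:
        if len(self.positions) < 2:
            return 0.0
        return (self.positions[-1].timestamp_ms - self.positions[0].timestamp_ms) / 1000.0


# A very short path, e.g. for an event submitted immediately after it occurs.
event_path = Path()
event_path.append(PositionEstimate(1_000, 52.52000, 13.40500))
event_path.append(PositionEstimate(2_000, 52.52010, 13.40500))
print(event_path.duration_s())  # 1.0
```

The same type covers both extremes in the text: a two-point event path and an hours-long trace differ only in length.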

Vehicles may have different collection policies for different types of sensor data. Depending on the priority of the sensor data, a message could be compiled and sent the moment a specific sensor reading is detected, or events could be accumulated into one message and submitted after a given amount of time.

Accumulation may be chosen, for example, to reduce the computational load at critical moments while driving, to reduce the volume of data transmitted over the mobile phone connection, or to reference all detected path events to one single path. The latter may be needed when multiple path events must be linked to one single vehicle and the use of a transient vehicle ID is not supported or not wanted.
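Because priority and latency sit outside the specification, the collection policy lives in the vehicle or backend. The sketch below is one hypothetical policy: event types marked urgent are sent immediately, everything else is batched and flushed once a time interval has elapsed. The event types, interval and `send` hook are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class EventBatcher:
    """Hypothetical in-vehicle collection policy: urgent events go out at once, others are batched."""
    send: Callable[[List[dict]], None]              # transport hook, e.g. the mobile uplink
    flush_interval_s: float = 60.0
    urgent_types: frozenset = frozenset({"hazard", "hard_braking"})
    _pending: List[dict] = field(default_factory=list, init=False)
    _last_flush_s: float = field(default=0.0, init=False)

    def record(self, event: dict, now_s: float) -> None:
        if event["type"] in self.urgent_types:
            self.send([event])                      # near-real-time: one event per message
            return
        self._pending.append(event)                 # informational: accumulate
        if now_s - self._last_flush_s >= self.flush_interval_s:
            self.flush(now_s)

    def flush(self, now_s: float) -> None:
        if self._pending:
            self.send(self._pending)                # many events in one message
            self._pending = []                      # new list, so the sent batch is not mutated
        self._last_flush_s = now_s


sent = []
batcher = EventBatcher(send=sent.append)
batcher.record({"type": "parking_space"}, now_s=10.0)  # held back
batcher.record({"type": "hazard"}, now_s=12.0)         # sent immediately
batcher.record({"type": "road_sign"}, now_s=70.0)      # interval elapsed, batch flushed
print(sent)
```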

Self-driving cars

A self-driving car is capable of sensing its environment and navigating without human input. To accomplish this task, each vehicle is outfitted with a GPS unit, an inertial navigation system and a range of sensors including laser range-finders, radar and video. The vehicle uses positional information from the GPS and inertial navigation system to localise itself and sensor data to refine its position estimate as well as to build a 3D image of its environment.

Data from each sensor is filtered to remove noise and is often fused with other data sources to augment the original image. The vehicle subsequently uses this data to make navigation decisions, determined by its control system.
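One of the simplest fusion techniques, used here only as an illustration, is inverse-variance weighting of independent measurements of the same quantity: noisier sensors get less weight. The sensor variances below are hypothetical.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent estimates of the same quantity.

    estimates: list of (value, variance) pairs. Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Range to the same object from radar (noisier here) and lidar (hypothetical variances, m^2).
radar = (25.0, 1.0)
lidar = (24.0, 0.25)
print(fuse([radar, lidar]))  # (24.2, 0.2)
```

The fused variance (0.2 m²) is lower than either input's, which is why fusing sensors improves the estimate rather than merely averaging it.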

The majority of self-driving vehicle control systems implement a deliberative architecture, meaning that they make intelligent decisions by maintaining an internal map of their world and using that map to select, from a set of possible paths, an optimal path to their destination that avoids obstacles (for example, road structures, pedestrians and other vehicles). Once the vehicle has determined the best path, the decision is broken down into commands, which are fed to the vehicle's actuators.

These actuators control the vehicle’s steering, braking and throttle. This process of localisation, mapping, obstacle avoidance and path planning is repeated multiple times each second on powerful onboard processors until the vehicle reaches its destination.

Mapping and localisation

Prior to making any navigation decisions, the vehicle must first build a map of its environment and precisely localise itself within that map. The most frequently used sensors for map building are laser range-finders and cameras. Laser range-finders scan the environment using swaths of laser beams and calculate the distance to nearby objects by measuring the time it takes for each laser beam to travel to the object and back.
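The time-of-flight principle reduces to one formula: distance = speed of light × round-trip time / 2, since the pulse covers the distance twice. The sketch below shows it together with its inverse.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Range from a laser pulse's round-trip time; the pulse covers the distance twice."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Inverse: how long the echo from a target at distance_m takes to return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_MPS


print(f"{round_trip_s(100.0) * 1e9:.0f} ns")   # 667 ns
print(f"{tof_distance_m(667e-9):.2f} m")       # 99.98 m
```

The nanosecond scale shows why range-finders need very precise timing electronics: a 1 ns timing error corresponds to about 15 cm of range.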

Whereas camera video is ideal for extracting scene colour, laser range-finders make depth information readily available to the vehicle for building a 3D map. Because laser beams diverge as they travel through space, it is difficult to obtain accurate distance readings from more than 100 metres away, even with state-of-the-art laser range-finders, which limits the amount of reliable data that can be captured on the map. The vehicle filters and discretises the data collected from each sensor and often aggregates the information to create a comprehensive map, which can then be used for path planning.
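A common way to discretise range data, shown here as a sketch, is a 2D occupancy grid. The cell size, the 100 m cut-off (taken from the reliability limit above) and the minimum-hits noise filter are illustrative parameters, not values from any particular vehicle.

```python
import math
from collections import Counter


def occupancy_grid(points_xy, cell_size_m=0.5, max_range_m=100.0, min_hits=2):
    """Discretise 2D laser returns (x, y in metres, vehicle frame) into occupied grid cells.

    Returns beyond max_range_m are dropped as unreliable, and a cell counts as occupied
    only if at least min_hits returns fall into it, a crude filter against noise.
    """
    hits = Counter()
    for x, y in points_xy:
        if math.hypot(x, y) > max_range_m:
            continue
        hits[(math.floor(x / cell_size_m), math.floor(y / cell_size_m))] += 1
    return {cell for cell, count in hits.items() if count >= min_hits}


points = [(10.1, 2.2), (10.3, 2.4), (40.0, -3.0), (150.0, 0.0)]
print(occupancy_grid(points))  # {(20, 4)}
```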

For the vehicle to know where it is in relation to other objects on the map, it must use its GPS, inertial navigation unit and sensors to precisely localise itself. GPS estimates can be off by many metres due to signal delays caused by changes in the atmosphere and reflections off nearby buildings and surrounding terrain, and inertial navigation units accumulate position errors over time. Therefore, localisation algorithms often incorporate maps or sensor data previously collected from the same location to reduce uncertainty. As the vehicle moves, new positional information and sensor data are used to update the vehicle's internal map.
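A Kalman filter is one widely used way to combine a drifting inertial estimate with noisy GPS fixes. The one-dimensional sketch below (position along the road) is a simplification under assumed noise values, and it omits the map-matching step mentioned above.

```python
def kalman_step(x, p, velocity_mps, dt_s, process_var, gps_pos_m, gps_var):
    """One predict/update cycle of a 1D Kalman filter for position along the road (metres)."""
    # Predict: dead-reckon with the inertial velocity; uncertainty grows.
    x_pred = x + velocity_mps * dt_s
    p_pred = p + process_var
    # Update: blend in the GPS fix in proportion to the relative uncertainties.
    gain = p_pred / (p_pred + gps_var)
    x_new = x_pred + gain * (gps_pos_m - x_pred)
    p_new = (1.0 - gain) * p_pred
    return x_new, p_new


x, p = kalman_step(x=0.0, p=1.0, velocity_mps=10.0, dt_s=1.0, process_var=1.0,
                   gps_pos_m=12.0, gps_var=4.0)
print(f"position {x:.2f} m, variance {p:.2f} m^2")  # position 10.67 m, variance 1.33 m^2
```

Because the GPS variance here is larger than the predicted variance, the filter moves only a third of the way towards the GPS fix, and the resulting uncertainty is smaller than that of either source alone.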

Obstacle avoidance

A vehicle’s internal map includes the current and predicted location of all static (buildings, traffic lights and stop signs) and moving (other vehicles and pedestrians) obstacles in its vicinity. Obstacles are categorised depending on how well these match with a library of pre-determined shape and motion descriptors.
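A nearest-neighbour match against a small descriptor library is one simple stand-in for the categorisation described above; it is not necessarily what any vehicle uses. The library entries and the speed scaling are hypothetical, chosen so that the two-wheeler example in the next paragraph (60 km/h versus 15 km/h) resolves as described.

```python
import math

# Hypothetical descriptor library: (length_m, width_m, typical_speed_kmh) per class.
DESCRIPTORS = {
    "pedestrian": (0.5, 0.5, 5.0),
    "bicycle": (1.8, 0.6, 15.0),
    "motorcycle": (2.1, 0.8, 60.0),
    "car": (4.5, 1.8, 50.0),
}
SPEED_SCALE_KMH = 10.0  # hypothetical: 10 km/h of speed difference weighs like 1 m of size


def classify(length_m, width_m, speed_kmh):
    """Return the library class whose shape and motion descriptor is nearest the observation."""
    def distance(descriptor):
        ref_length, ref_width, ref_speed = descriptor
        return math.sqrt((length_m - ref_length) ** 2 + (width_m - ref_width) ** 2
                         + ((speed_kmh - ref_speed) / SPEED_SCALE_KMH) ** 2)
    return min(DESCRIPTORS, key=lambda name: distance(DESCRIPTORS[name]))


print(classify(2.0, 0.7, 60.0))  # motorcycle
print(classify(2.0, 0.7, 15.0))  # bicycle
print(classify(4.4, 1.8, 45.0))  # car
```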

The vehicle uses a probabilistic model to track moving objects and predict their future paths based on their shape and prior trajectory. For example, if a two-wheeled object is travelling at 60 kilometres per hour rather than 15 kilometres per hour, it is most likely a motorcycle and not a bicycle, and the vehicle will categorise it as such. This process allows the vehicle to make more intelligent decisions when approaching crosswalks or busy intersections. The previous, current and predicted future locations of all obstacles in the vehicle's vicinity are incorporated into its internal map, which the vehicle then uses to plan its path.
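The simplest probabilistic predictor, sketched below, extrapolates an object's current velocity and lets the position uncertainty grow with the look-ahead time. The constant-velocity model and the acceleration-noise term are assumptions chosen for clarity; real trackers typically use richer motion models per object class.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    x_m: float
    y_m: float
    vx_mps: float           # velocity estimated from the prior trajectory
    vy_mps: float
    pos_std_m: float        # current 1-sigma position uncertainty
    accel_std_mps2: float   # hypothetical: how hard the object may accelerate unexpectedly


def predict(track: Track, horizons_s):
    """Constant-velocity prediction whose uncertainty grows with look-ahead time.

    Returns (t, x, y, std) tuples. The std combines the current uncertainty with an
    unmodelled-acceleration term 0.5 * a * t^2, treating the two as independent.
    """
    predictions = []
    for t in horizons_s:
        x = track.x_m + track.vx_mps * t
        y = track.y_m + track.vy_mps * t
        std = math.hypot(track.pos_std_m, 0.5 * track.accel_std_mps2 * t * t)
        predictions.append((t, x, y, std))
    return predictions


cyclist = Track(x_m=0.0, y_m=0.0, vx_mps=4.0, vy_mps=0.0, pos_std_m=0.3, accel_std_mps2=1.0)
for t, x, y, std in predict(cyclist, [0.0, 1.0, 2.0]):
    print(f"t={t:.0f}s  x={x:.1f} m  y={y:.1f} m  +/-{std:.2f} m")
```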

Path planning

The goal of path planning is to use the information captured in the vehicle’s map to safely direct the vehicle to its destination while avoiding obstacles and following the rules of the road. Although vehicle manufacturers’ planning algorithms will be different based on their navigation objectives and sensors used, the following describes a general path-planning algorithm that has been used on military ground vehicles.

The algorithm determines a rough long-range plan for the vehicle to follow while continuously refining a short-range plan (for example, change lanes, drive forward ten metres and turn right). It starts from a set of short-range paths that the vehicle would be dynamically capable of completing, given its speed, direction and angular position, and removes all those that would either cross an obstacle or come too close to the predicted path of a moving vehicle. For example, a vehicle travelling at 80 kilometres per hour would not be able to safely complete a right turn five metres ahead, so that path would be eliminated from the feasible set.
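The pruning step can be sketched with two checks: the centripetal acceleration a = v²/r a candidate would demand, and its clearance from obstacles. Modelling the right turn as a 5 m radius curve, the 4 m/s² limit, the clearance value and the candidate format are all assumptions for illustration.

```python
import math

MAX_LATERAL_ACCEL_MPS2 = 4.0   # hypothetical limit, roughly 0.4 g
CLEARANCE_M = 1.5              # hypothetical minimum distance to any obstacle


def required_lateral_accel(speed_kmh: float, radius_m: float) -> float:
    """Centripetal acceleration a = v^2 / r; 0.0 for a straight path (radius = inf)."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * v / radius_m


def prune(candidates, speed_kmh, obstacles):
    """Drop candidates the vehicle cannot drive at this speed or that pass too close to obstacles."""
    feasible = []
    for c in candidates:
        if required_lateral_accel(speed_kmh, c["radius_m"]) > MAX_LATERAL_ACCEL_MPS2:
            continue  # dynamically infeasible
        if any(math.dist(wp, ob) < CLEARANCE_M for wp in c["waypoints"] for ob in obstacles):
            continue  # crosses an obstacle or a predicted vehicle position
        feasible.append(c["name"])
    return feasible


# Vehicle frame (hypothetical): x to the right, y forward, in metres.
candidates = [
    {"name": "straight", "radius_m": math.inf, "waypoints": [(0.0, 5.0), (0.0, 10.0)]},
    {"name": "right_turn_5m", "radius_m": 5.0, "waypoints": [(2.0, 4.0), (5.0, 5.0)]},
    {"name": "lane_change_left", "radius_m": 150.0, "waypoints": [(-1.75, 5.0), (-3.5, 10.0)]},
]
obstacles = [(0.0, 10.5)]  # e.g. a stopped vehicle ahead
print(round(required_lateral_accel(80, 5.0), 1))  # 98.8 (m/s^2, about 10 g)
print(prune(candidates, speed_kmh=80, obstacles=obstacles))  # ['lane_change_left']
```

At 80 km/h the 5 m turn would demand roughly ten times the acceleration of gravity, which is why it never reaches the evaluation stage.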

The remaining paths are evaluated based on safety, speed and time requirements. Once the best path has been identified, a set of throttle, brake and steering commands is passed on to the vehicle's onboard processors and actuators. Altogether, this process takes 50 milliseconds on average. It can be longer or shorter depending on the amount of collected data, available processing power and complexity of the path-planning algorithm.
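Evaluating the surviving paths is often framed as minimising a weighted cost. The sketch below combines a safety term (clearance), a speed term (deviation from a target speed) and a time term; the weights, target speed and path attributes are hypothetical tuning choices, not the algorithm described above.

```python
def path_cost(path, target_speed_kmh=80.0, w_safety=10.0, w_speed=0.1, w_time=1.0):
    """Lower is better. All weights are hypothetical tuning values."""
    safety = w_safety / path["min_clearance_m"]          # grows sharply as clearance shrinks
    speed = w_speed * abs(path["avg_speed_kmh"] - target_speed_kmh)
    travel = w_time * path["duration_s"]
    return safety + speed + travel


def choose_path(paths):
    return min(paths, key=path_cost)


remaining = [
    {"name": "lane_change_left", "min_clearance_m": 3.5, "avg_speed_kmh": 78.0, "duration_s": 6.0},
    {"name": "brake_in_lane", "min_clearance_m": 8.0, "avg_speed_kmh": 30.0, "duration_s": 9.0},
]
print(choose_path(remaining)["name"])  # lane_change_left
```

Shifting the weights changes the vehicle's "personality": a larger `w_safety` would favour braking in lane over the lane change.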

The process of localisation, mapping, obstacle detection and path planning is repeated until the vehicle reaches its destination.

Currently, vehicle sensor data exists in many different formats across automakers. Pooling analogous vehicle data from millions of vehicles will be a key enabler for highly and fully automated driving, ensuring that each vehicle has a near-real-time view of road conditions and hazards, which can lead to better driving decisions.

Development is under way on the location cloud technology needed to detect and process changes in the real world as they happen, on roads in dozens of countries, at industrial scale and in high quality.


Standardised vehicle data exchange will enable the crowd-sourcing paradigm to spread across the fragmented automotive ecosystem, leveraging the synergies between connectivity and sensor data to provide smart mobility services such as real-time traffic, weather and parking information in the short term, while holding the promise of powering self-driving cars with critical high-accuracy, real-time mapping capabilities in the future.

ERTICO – ITS Europe also hosts the Advanced Driver Assistance Systems Interface Specifications (ADASIS) forum, which defines how maps connect and interact with a car's advanced driver assistance systems.

Part 2 of this article, next month, will cover lidar, GPS modules, MEMS devices and a distributed solution used for self-driving cars.