One of the recent applications of neural networks has been in the field of art and music. Can creativity be accomplished through artificial neural networks (ANN)? ANNs are capable of some level of creativity, although not on par with the human faculty. What are the challenges of achieving music and art through algorithms? What are the various approaches? These are some of the questions we shall talk about in this article.

Over decades, ANNs have come a long way from single layer perceptrons that solved simple classification problems to highly complex networks for deep learning. Today, neural networks are used for more than just classification or optimisation problems in various fields of application. One of the most recent applications of ANNs is in the field of art and music. The goal of such an application is to simulate human creativity and to serve as an assisting tool for artists in their creations.

Early works began as early as 1988

One of the first works of using ANNs for music composition was carried out as early as 1988 by Lewis and Todd. Lewis used a multi-layer perceptron, while Todd experimented with a Jordon auto-regressive neural network (RNN) to generate music sequentially.

The music composed with RNNs algorithmically had global coherence and structure issues. To circumvent that, Eck and Scmidhuber used long short-term memory (LSTM) neural networks to capture the temporal dependencies in a composition.

In 2009, when deep learning networks became more interesting for research, Lee and Andrew Ng started using deep convoluted neural networks (CNN) for music genre classification. This formed a basis for advanced models that used high-level (semantic) concepts from music spectrograms.

Most recently, wavenet models and generative adversarial networks (GAN) have been used for generating music. Waveform based models outperformed spectrogram based ones, provided enough training material was available.

A multitude of network models

In the following section, we shall discuss the various ANN models that are used to create music and art.

LSTM networks are a variant of RNNs that are capable of learning long-term dependencies. The key to LSTMs is the cell state which stores information. The ability to add or remove information to the cell state is regulated by gates that are a sigmoid neural-network layer coupled with a point-wise multiplication operation. Since music sequences are time-series with long-term dependencies, it is appropriate to use LSTM.

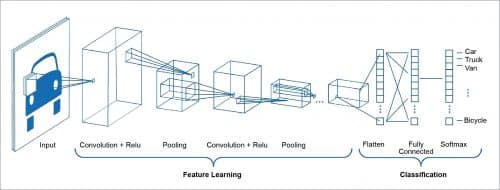

CNNs are designed to process input images. Their architecture is composed of two main blocks. The first block functions as a feature-extractor that performs template-matching by applying convolution filtering operations. It returns feature maps that are normalised and/or resized. The second block constitutes the classification layer.

The network constitutes an input layer, hidden layers, and an output layer. In CNNs, the hidden layers perform convolutions. That includes typically a layer that does multiplication or dot product with rectified linear activation function (ReLU), followed by other convolution layers like the pooling layers and normalisation layers. A typical CNN architecture is shown in Fig. 1.

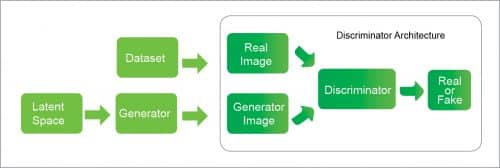

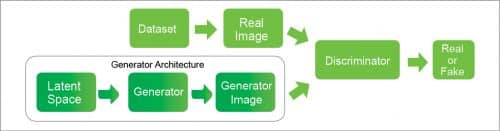

Generative adversarial networks (GANs) have components of generator, discriminator, and loss function. The generator role is to maximise the likelihood that the discriminator misclassifies its output as real. The discriminator’s role is to optimise towards 50 per cent where the discriminator cannot identify between real and generated images. The generator will start training alongside the discriminator. The discriminator trains a few epochs prior to starting the adversarial training as the discriminator will be required to actually classify images. The loss function provides the stopping criteria for the generator and discriminator training processes.

Fig. 2 through Fig. 4 show pictorial block diagrams of the GAN architecture, the GAN generator, and the GAN discriminator. GANs are better than LSTMs in producing music because these are able to capture the large structural patterns in music.

Magenta: A Google Brain project

Magenta was an open source project developed by Google Brain in 2016 for the purpose of creating a new tool for artists when they work on developing new songs or art-work. Magenta is powered by Google’s TensorFlow machine learning platform.

Magenta can work with music and images. In the music domain, an agent automatically composes background music in real time using the emotional state of the environment in which it is embedded. Beneath, it chooses an appropriate composition algorithm dynamically from a database of previously chosen algorithms mapped to a given emotional state.

Doug Eck and team worked with an LSTM model tuned with reinforcement learning. Reinforcement learning was used to teach the model to use certain rules while still allowing it to retain information learnt from data.

The LSTM model works on a couple of metrics—the metrics we want to be low and the metrics we want to be high. The metrics associated with penalties are notes-not-in-key, mean-autocorrelation (since the goal is to encourage variety, the model is penalised if the composition is highly correlated with itself), and excessively-related-notes (LSTM is prone to repeating patterns). Reinforcement learning is brought in for creativity.

The metrics associated with rewards are compositions starting with a tonic note, leaps resolved—in order to avoid awkward intervals, leaps are taken in opposite directions and leaping two times in the same direction is negatively rewarded, and composition with unique max-note and min-note—notes in motif which are succession of notes representing a short musical idea. These metrics form a music theory rule. The degree of improvement of these metrics is determined by the reward given to a particular behaviour.

The choice of metrics and weights determines the shape of the music created. The most recent model of magenta has used GAN and transformers to generate music with improved long-term structure.

Challenges in music generation

The greatest challenge in generation of music is to be able to encode various musical features. Once that is accomplished, the generative music is supposed to follow the broader structure, dynamics, and rules of music. Musical dimensions such as timing and pitch have relative rather than absolute significance when it comes to how notes are placed in these dimensions.

Features such as dynamics (that tells the volume of the sound from the instrument) and timbre (that differentiates between notes having the same pitch and loudness) are difficult to encode. There are other features such as duration, rest, timing and pitch that also are challenging to represent as extracted features.

What the future could hold

Various GAN models such as MidiNet, SSMGAN, C-RNN-GAN, JazzGAN, MuseGAN, Conditional LSTM GAN, and others have been attempted for composing melody. Other generative models like the VAE, flow based models, autoregressive models, transformers, RBMs, HMMs, and many others have been used in research in this area.

The future direction of melody generation is moving towards bringing in more musical diversity and structure-handling capacity. Better interpretability and human control are aimed for. Standardised test datasets and evaluation metrics, cross modal generation, composition style transfer, lyric-free singing, and interactive music generation are some future research directions in this area.

Anita Nair is a software and technology enthusiast who works at EY, India. Her areas of interests include cloud computing, cyber security, software design patterns, DevOps, computer networking and infrastructure, emerging technologies and research. She is a senior member of IEEE and a life member of CSI.