New and advanced technologies have recreated most human sense organs, including eyes, ears, skin and nose, as sensors in robots. Just as sense organs play an important role in the human body, electronic sensors play a vital role in robotics and artificial intelligence (AI).

Robotics and AI serve different purposes. Robotics involves building robots, whereas AI deals with programming intelligence or making computers behave like humans.

Accordingly, the role of sensors in robotics and AI may be slightly different. Robots are programmable machines that interact with the physical world via sensors and actuators, and can be made to work autonomously or semi-autonomously. However, many robots have limited functionality and are not artificially intelligent. Some industrial robots can only be programmed to perform a repetitive task, which does not require AI. AI algorithms are usually required only when robots must perform more complex tasks.

Human-like capabilities that AI gives machines include speech recognition, planning, problem solving and learning, among others. Not all AI algorithms are used to control robots. For example, AI algorithms are used in virtual assistants like Google Assistant, Amazon Alexa and Apple Siri.

When robotics and AI are combined, we get artificially intelligent robots. However, without sensors, AI robots are blind, deaf and mute. Machine perception, learning, speech recognition and the like rely on inputs from sensors such as cameras, microphones, wireless receivers, active lidar, sonar and radar to deduce different aspects of the world (including facial, object and gesture recognition).

Modern technology for AI robots can be both a blessing and a disaster for human beings. For example, a killer robot based on AI could be very dangerous. AI experts all over the world have run a huge campaign to boycott killer robots reported to be under development at Korea Advanced Institute of Science and Technology (KAIST). However, KAIST’s president, Sung-Chul Shin, has denied any such development.

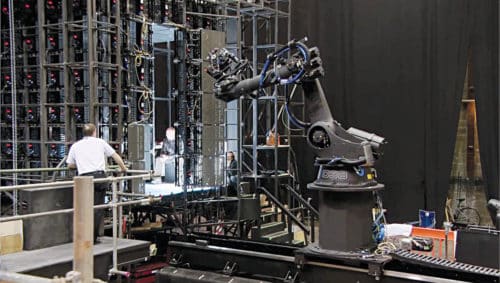

Robots are used not only for educational, industrial, military and civil applications, but also in the movie and entertainment industry. Gravity, a science fiction thriller, used robots that were originally designed for assembly, welding and painting applications. A sensor-driven robotic arm was made to carry cameras, lights, props and even actors. By using the robotic arm behind the scenes, the director was able to achieve remarkable shots for the movie.

Then, there are robots such as QRIO and RoboSapien that are capable of advanced features like voice recognition or walking.

Sensors and robotics

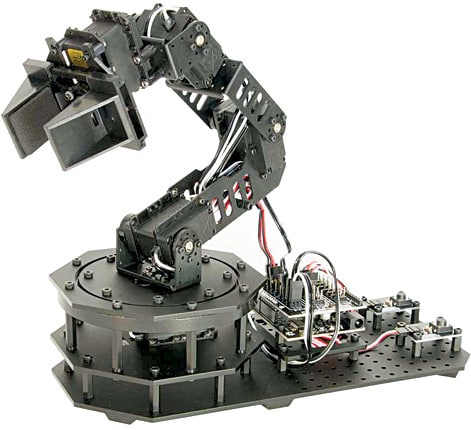

A typical robot has a movable physical structure driven by motors, sensor circuitry, a power supply and a computer that controls these elements.

Some popularly known robots are:

- Educational robots are mostly robotic arms that lift small objects, or wheeled platforms that move forwards, backwards, left or right, along with some other features.

- Honda’s ASIMO robot can walk on two legs like a person.

- Industrial robots are automated machines that work on assembly lines.

- BattleBots are remote-controlled fighters.

- DRDO’s Daksh is a battery-operated, remote-controlled robot on wheels. Its primary role is to recover bombs.

- Robomow is a lawn-mowing robot.

- MindStorms are programmable robots based on Lego building blocks.

Some robots only have motorised wheels, while others have dozens of movable parts, including spin wheels and pivot-jointed segments driven by some sort of actuator. Some robots use electric motors and solenoids as actuators, while others use a hydraulic or a pneumatic system. Most autonomous robots rely on sensors to move and perform a specific task without human intervention or control.

One interesting technology under development in the field of robotics is the micro-robot for biological environments. A micro-robot may integrate sensors, signal processing, a memory unit and a feedback system, all working at micro-scale. This development can have important implications for integrated micro-bio-robotic systems used in biological engineering and research.

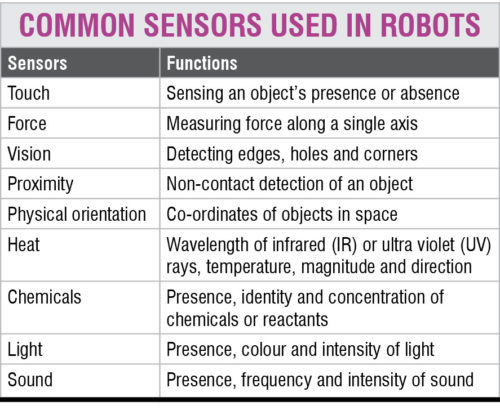

Some common robotic sensors are given in the table.

Biosensors

Biosensors use a living organism or biological molecules, especially enzymes or antibodies, to detect the presence of chemicals. Examples include blood glucose monitors, electronic noses and DNA biosensors.

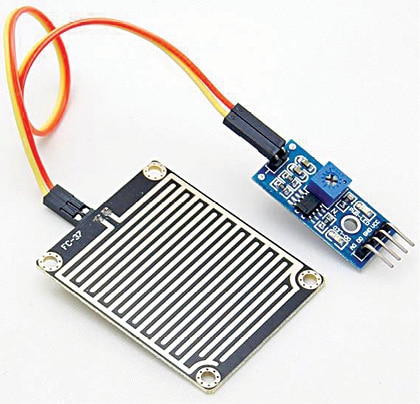

Raindrop sensors

These sensors are used to detect rain and weather conditions, and convert these into a number of reference signals and an analogue output.
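As a rough illustration of how a raw reading might become both an analogue level and a reference (yes/no) signal, here is a minimal Python sketch. It assumes a 10-bit ADC and a resistive rain board whose reading falls as the board gets wetter; the function name, the ADC range and the threshold are all hypothetical and not taken from any particular module.

```python
def rain_outputs(adc_value, adc_max=1023, wet_threshold=0.5):
    """Return (wetness, is_raining) for one raw ADC sample.

    Assumption: the reading falls as the board gets wetter, so
    adc_max means dry and 0 means fully soaked.
    """
    if not 0 <= adc_value <= adc_max:
        raise ValueError("ADC value out of range")
    wetness = 1.0 - adc_value / adc_max   # analogue level, 0.0 (dry) to 1.0 (soaked)
    is_raining = wetness >= wet_threshold  # reference signal from a fixed threshold
    return wetness, is_raining
```

On real hardware the threshold is often set with an on-board potentiometer and comparator rather than in software.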

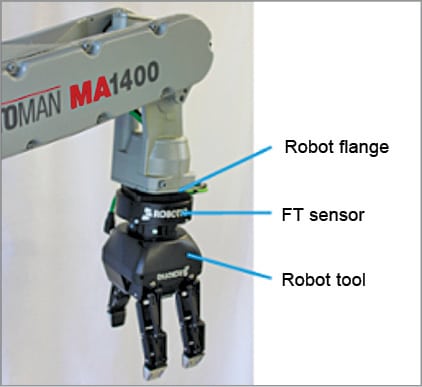

Multi-axis force torque sensors

Such sensors are fitted onto the wrist of robots to detect forces and torques that are applied to the tool.
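To show what a wrist-mounted sensor would register, the sketch below computes the torque produced at the sensor by a force applied at the tool tip, using the standard relation torque = r × F. The function names, the tool offset and the force limit are illustrative assumptions, not values from any specific sensor.

```python
import math

def cross(a, b):
    """Cross product of two 3-element vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def torque_at_wrist(tool_offset_m, force_n):
    """Torque (N·m) seen at the wrist for a force applied at the tool tip.

    tool_offset_m: vector from the sensor to the tool tip, in metres.
    force_n: force applied at the tool tip, in newtons.
    """
    return cross(tool_offset_m, force_n)

def exceeds_force_limit(force_n, limit_n):
    """True if the magnitude of the measured force is above limit_n."""
    return math.sqrt(sum(c * c for c in force_n)) > limit_n
```

For example, a 10N downward push on a tool tip 0.1m out along the x-axis gives a torque of about 1N·m around the y-axis.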

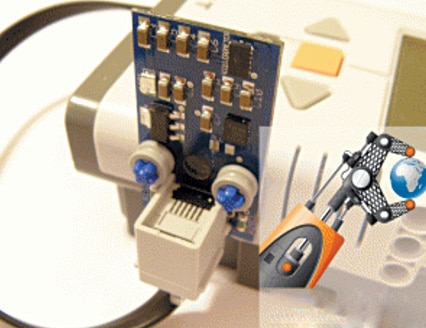

Optoelectronic torque sensors

These sensors are used for collaborative robot applications, ensuring safer and more effective human-machine collaboration. They have less than one microvolt of noise even in the low-torque range, can self-calibrate and measure to an accuracy of 0.01 per cent. A contactless measurement principle ensures that the sensors are insensitive to vibration and are wear resistant.

Inertial sensors

Motion sensing using MEMS inertial sensors is applied to a wide range of consumer products including computers, cell phones, digital cameras, gaming devices and robots. There are various types of inertial sensors, depending on the application. LORD MicroStrain manufactures industrial-grade inertial sensors that provide a wide range of tri-axial inertial measurements, and computed attitude and navigation solutions.
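A simple way to see how attitude can be computed from tri-axial measurements is to estimate pitch and roll from a single accelerometer sample. The sketch below uses the common gravity-vector formulas; it is a textbook approximation that only holds while the sensor is not otherwise accelerating, and it is not the algorithm used by any particular vendor.

```python
import math

def pitch_roll_from_accel(ax, ay, az):
    """Estimate (pitch, roll) in degrees from one accelerometer sample.

    Assumes the only acceleration present is gravity. The inputs can be
    in g or m/s^2, since only the ratios between the axes matter.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(pitch), math.degrees(roll)
```

Practical systems usually fuse this estimate with gyroscope data, because accelerometer-only attitude is noisy and fails during motion.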

Sound sensors

Sound sensors are used in robots to receive voice commands. High-sensitivity microphones or sound sensors are essential in voice assistants like Google Assistant, Alexa and Siri.

Emotion sensors

Robots can react to human facial expressions using emotion sensors. The B5T HVC face-detection sensor module from Omron Electronics is a fully-integrated human vision component (HVC) plug-in module that can identify faces with speed and accuracy. The module can estimate a person's mood by matching the face to one of five programmed expressions.

Grip sensors

RoboTouch Twendy-One gripper features embedded digital output, which is ideal for OEM integration into a gripper. These sensors comprise multiple sensing pads, each having multiple sensing elements. Data from sensors is transferred to grippers via I2C or SPI digital interfaces, enabling easy integration of the sensors into grippers.
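To give a feel for what handling such digital sensor data might look like on the gripper side, here is a sketch that decodes one frame of bytes into a pad number and its element readings. The frame layout (one pad ID byte followed by four 16-bit big-endian readings) is purely hypothetical and is not the actual RoboTouch format; the bytes are assumed to have already been read over I2C or SPI by some other code.

```python
import struct

ELEMENTS_PER_PAD = 4  # hypothetical number of sensing elements per pad

def decode_pad_frame(frame):
    """Decode one frame into (pad_id, [element readings]).

    Hypothetical layout: 1 byte pad ID, then ELEMENTS_PER_PAD unsigned
    16-bit big-endian readings.
    """
    expected = 1 + 2 * ELEMENTS_PER_PAD
    if len(frame) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(frame)}")
    pad_id, *readings = struct.unpack(f">B{ELEMENTS_PER_PAD}H", frame)
    return pad_id, readings
```

A real driver would take the frame layout, byte order and scaling from the sensor's datasheet.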

Artificial intelligence

Sensors used in AI robots are the same as, or similar to, those used in other robots. Fully-functional humanoid robots with AI algorithms require numerous sensors to simulate a variety of human and beyond-human capabilities. Sensors provide the ability to see, hear, touch and move like humans. They also provide environmental feedback regarding surroundings and terrain.

Distance, object detection, vision and proximity sensors are required for self-driving vehicles. These include camera, IR, sonar, ultrasound, radar and lidar.
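Several of these sensors (sonar and ultrasound in particular) measure distance by timing an echo. The sketch below shows the basic time-of-flight calculation; the function name is illustrative and the default speed of sound of 343m/s assumes air at about 20°C.

```python
def echo_distance_m(round_trip_s, speed_m_per_s=343.0):
    """Distance to an obstacle from the round-trip time of an echo.

    The pulse travels to the obstacle and back, so the one-way
    distance is half of speed multiplied by time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return speed_m_per_s * round_trip_s / 2.0
```

Radar and lidar follow the same principle, with the speed of light in place of the speed of sound.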

A combination of various sensors allows an AI robot to determine size, identify an object and determine its distance.
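One simple way such a combination could work is to take the distance from a range sensor and the object's width in pixels from a camera, then apply the pinhole camera model to estimate the real width. The sketch below assumes an idealised, undistorted camera whose focal length is known in pixels; all names and numbers are illustrative.

```python
def object_width_m(pixel_width, distance_m, focal_length_px):
    """Estimate real-world object width with the pinhole camera model.

    pixel_width: width of the object in the image, in pixels (camera).
    distance_m: distance to the object, in metres (e.g. from lidar or sonar).
    focal_length_px: camera focal length expressed in pixels.
    """
    if focal_length_px <= 0:
        raise ValueError("focal length must be positive")
    return pixel_width * distance_m / focal_length_px
```

For instance, an object 200 pixels wide seen at 2m by a camera with an 800-pixel focal length would be about 0.5m wide.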

Radio-frequency identification (RFID) tags are wireless devices that provide identification codes and other information.

Force sensors provide the ability to pick up objects.

Torque sensors can measure and control rotational forces.

Temperature sensors are used to measure temperature and avoid potentially harmful heat sources in the surroundings.

Microphones are acoustical sensors that help the robot receive voice commands and detect sound from the environment. Some AI algorithms can even allow the robot to interpret the emotions of the speaker.

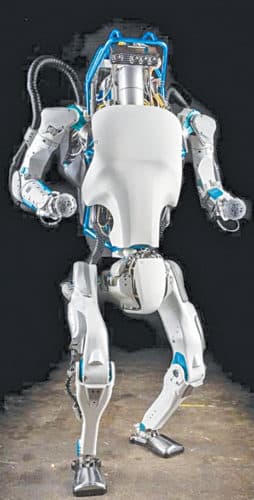

Humanoid robots with AI algorithms can be useful for future distant space exploration missions. Atlas is a 183cm (6ft) tall, bipedal humanoid robot, designed for a variety of search-and-rescue tasks on rough outdoor terrain. It has an articulated sensor head that includes stereo cameras and a laser range finder.

To sum up

The right use of sensors can improve the performance of artificially-intelligent robots. Getting raw sensor data alone is not enough. AI tools can be useful when applied to sensor systems. Many new sensors are being developed, and the use of hybrid tools can further improve the strengths of AI robots.

New advances in machine intelligence are creating seamless interactions between people and digital sensor systems. AI offers powerful tools for use with sensor systems to automatically solve problems that would normally require human intelligence. These tools include knowledge-based systems, fuzzy logic, learning, neural networks, reasoning and ambient intelligence.
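As a small illustration of one of these tools, the sketch below applies fuzzy logic to a distance reading: the obstacle is treated as partly "near" and partly "far", and the robot's speed is blended accordingly instead of switching abruptly at a single threshold. The membership limits (0.5m and 2m) and the two speeds are arbitrary assumptions chosen for the example.

```python
SLOW_M_PER_S = 0.1  # speed used when the obstacle is fully "near" (assumed)
FAST_M_PER_S = 1.0  # speed used when the obstacle is fully "far" (assumed)

def near_membership(distance_m, full_near=0.5, not_near=2.0):
    """Degree (0.0 to 1.0) to which a distance counts as "near"."""
    if distance_m <= full_near:
        return 1.0
    if distance_m >= not_near:
        return 0.0
    return (not_near - distance_m) / (not_near - full_near)

def safe_speed(distance_m):
    """Blend the slow and fast speeds using the fuzzy memberships."""
    near = near_membership(distance_m)
    far = 1.0 - near
    # Weighted average of the two rule outputs (near + far is always 1).
    return near * SLOW_M_PER_S + far * FAST_M_PER_S
```

With these assumed values, an obstacle at 1.25m is half near and half far, so the robot would move at roughly 0.55m/s.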