The terms ‘self-driving cars’ and ‘autonomous vehicles’ have been making the rounds in the industry for quite some time now. We have even seen a few of them in action on the roads. It is almost a consensus that self-driving cars are the future of the automobile industry. So why is it taking so long for this future to arrive? In this article, we discuss the need for self-driving cars, the predicaments they currently face, and what the future holds for this amazing AI-powered technology.

The one thing that I have missed this past year, thanks to the pandemic, is driving a car. I know it’s a bit of an unpopular opinion, but you have to admit, driving with some music on is soothing. I would go as far as to say it is borderline therapeutic. Not that I haven’t driven at all. I take my car out every now and then to run errands, but my time behind the wheel has reduced significantly. Now you might say that’s a good thing. And you wouldn’t be wrong. Time spent not driving is time that could be used more productively on other things. Driving less also helps the planet by cutting CO2 emissions. And if you are in India, you get to avoid the harmful effects of the incessant pollution on the streets.

But all the reasons mentioned above pale in comparison to the primary reason why driving a car is not good for you: the risk of an accident. This is the major motivating factor behind the development of autonomous vehicle technology. Across the globe, roughly 1.35 million people die each year as a direct result of traffic accidents. And that’s besides the injuries, amputations, and mental and physical trauma that survivors have to go through. The consequences spill into other areas as well, such as huge medical bills, high insurance rates, and damage to or loss of property.

Needless to say, we need autonomous vehicles. But apart from the risk to life, there are other interesting reasons as well. Let us briefly look through some of them.

Why we need autonomous vehicles

Besides saving human lives, autonomous vehicles offer other major benefits, such as:

- Self-driving cars can help people with disabilities. Imagine being blind and still being able to own a car and go anywhere.

- Self-driving cars mean fewer accidents, which in turn means no need to pay a high insurance premium.

- More than half of all traffic jams are caused by disruptions to traffic flow, primarily fender-benders and other accidents. Since autonomous vehicles should cause fewer accidents, the time spent on the road could decrease massively.

- You can save money on fuel each year as fewer traffic disruptions help you commute faster, thus using less fuel than you do now. This also helps the environment.

- Self-driving vehicles can reduce the cost of logistics and make delivery of products to your doorstep much faster and cheaper.

- In the pandemic that we are in right now, delivery of essentials has been a challenge as it leads to inevitable contact between you and the delivery executive. Additionally, it also puts the life of the delivery executive at risk. By using autonomous vehicles, social distancing during epidemics/pandemics can be maintained better, thus saving more lives.

- Emergencies such as curfews, disease outbreaks or even war often leave people stranded. Delivering essentials such as medicine and food to such places is an ongoing challenge. With the help of self-driving vehicles, much-needed assistance can be provided in times of emergency.

How does a self-driving car work?

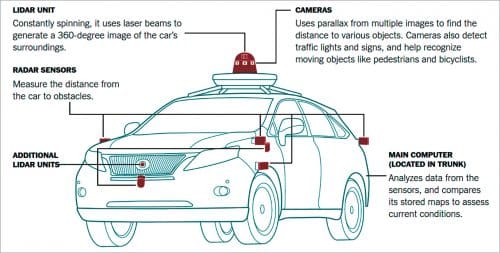

There are two aspects to self-driving cars that we should consider in order to understand, as simply as possible, how a self-driving car works. The first aspect to consider is the hardware that goes into an autonomous vehicle. The second is the software that runs in the background making the car functional.

The hardware required for an autonomous vehicle includes the following:

- Lidar (light detection and ranging)

- Radar

- Sophisticated cameras

- Integrated photonics circuit

- Mach-Zehnder modulator

- Main computer

The software, languages, frameworks, and tools used to build an autonomous vehicle include:

- MATLAB

- Simulink

- Version control system (Git)

- Vehicle design—CAE/CAD/PLM/FEA

- LS-DYNA, MSC ADAMS, CarSim, CarMaker, Dymola, OptimumG, SusProg3D, Oktal SKANeR, etc.

- Docker, CMake, Shell, Bash, Perl, JavaScript, Node.js, React, Go, Rust, Java, Redux, Scala, R, Ruby, REST API, gRPC, protobuf, Julia, HTML5, PHP

- Verilog, VHDL, DSP, Cadence, Synopsys, Xilinx Platform Studio (ISE and XPS)

Apart from the software and tools listed above, the data is stored in the cloud, and the control algorithms rely on sophisticated deep learning and machine learning models trained on that data. So how does it all come together?

The car has a main computer, generally located in the trunk, that runs all the necessary software. It collects data from all the sensors, processes it, and makes decisions using deep learning models. The data gathered on every drive can be used to retrain those models, so the system gets better and better the more the cars are driven. The computer also requires a high-quality, low-latency connection to the Internet, ideally using 5G technology.

The radar sensors, placed strategically around the car as shown in Fig. 1, help detect objects and obstacles on the road. They also measure the distance between the car and those obstacles. This is essential for avoiding collisions with stationary objects, pedestrians and animals. It also helps the car change lanes, take turns, and adjust its speed.
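To make the distance measurement concrete, here is a minimal Python sketch of the time-of-flight idea, assuming a simple pulsed radar: the signal travels to the obstacle and back, so the range is half of the speed of light multiplied by the round-trip delay. Many automotive radars actually use frequency-modulated continuous waves (FMCW) and derive range from a frequency shift instead, so treat this only as an illustration of the principle. The function names `range_from_echo` and `time_to_collision` are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_echo(delay_s: float) -> float:
    """Distance to a reflector from the round-trip delay of a pulse.

    The signal goes out and comes back, so the one-way distance is
    half of (speed of light x delay).
    """
    return SPEED_OF_LIGHT * delay_s / 2


def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """Seconds until impact if neither party changes speed (inf if not closing)."""
    if closing_speed_mps <= 0:
        return float("inf")
    return range_m / closing_speed_mps


distance = range_from_echo(1e-6)  # an echo arriving 1 microsecond after the pulse
print(f"{distance:.1f} m away, {time_to_collision(distance, 25.0):.1f} s to impact at 25 m/s")
```

A time-to-collision figure like this is the kind of quantity the car’s software could use when deciding whether to slow down or change lanes.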

But detecting the existence of an object isn’t enough for the car to drive safely. You require a technology that can not only detect an object and its distance from you, but also its shape and density in order to identify what the object is. Why is this important?

Because a pedestrian does not move on the road the same way a car does. Nor does a deer move the same way a cow does. Only by knowing what kind of obstacle you are dealing with can you respond to it properly. A deer can be fidgety and make sudden moves. A cow may not care much about an approaching car. A person could be jaywalking, and a car might suddenly shift lanes without any signal or warning.

This is where lidar technology comes in. Unlike radar, which relies on radio waves, lidar fires a train of extremely short laser pulses to resolve the depth of an object. For these laser pulses to be fired accurately at regular intervals, an integrated photonics circuit is used to periodically block the light.

Internet data travelling over optical fibre is carried in a similar way, by precisely timed pulses of light shot every 100 picoseconds (0.0000000001 second). This is done with the help of what is called a ‘Mach-Zehnder modulator’, which uses wave interference to chop the light into pulses. A light pulse lasting 100 picoseconds leads to a depth resolution of a few centimetres.
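As a back-of-the-envelope check of that figure (using the standard time-of-flight relation, not a formula given in the article): because each pulse travels to the target and back, a pulse of duration τ gives a range resolution of roughly cτ/2.

```latex
\Delta R \approx \frac{c\,\tau}{2}
  = \frac{(3 \times 10^{8}\,\mathrm{m/s}) \times (100 \times 10^{-12}\,\mathrm{s})}{2}
  = 1.5\,\mathrm{cm}
```

That is consistent with a depth resolution of a few centimetres.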

Apart from radars and lidars, high-tech cameras are also used to identify and understand traffic lights and measure distance between different objects on the road. They are also used to cover potential blind spots.

Algorithms used in the vehicles

The algorithms used in self-driving vehicles belong to the family of what we refer to as ‘machine learning’ algorithms. But that is a broad term. Machine learning can mean anything from forecasting the weather based on historical weather data to recognising patterns in transactions made by customers at a bank. In order to bring more specificity, we need to briefly discuss what machine learning is.

Machine learning can be loosely defined as the ability of a machine (primarily a computer) to accomplish a task effectively by learning through repetition. This is similar to how humans learn. We act based on some preliminary knowledge, see the results, reflect on whether those results were what we were looking for, and then reassess our actions accordingly. Over time, we keep getting better at performing our tasks because we are learning from our mistakes.

Similarly, machines can also be trained to perform certain tasks more efficiently by implementing what we call ‘machine learning algorithms’. Machine learning algorithms can be broadly divided into three types: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning algorithms work with labelled (‘tagged’) datasets. Basically, a computer is provided with (ideally) large sets of data, and supervised learning algorithms are designed to train the computer based on that data. The better the training algorithm, the lower the probability of error by the machine. Linear regression is a good example of supervised learning; it is often used for tasks such as predicting changes in the weather from historical data.

Unsupervised learning algorithms work with ‘untagged’ datasets. Not every dataset gives you a clear picture, and you may not always know what you are looking for. In such scenarios, unsupervised learning algorithms are used to find patterns in the data. These algorithms also learn and reduce their error with each iteration. Clustering algorithms such as k-means are a good example of unsupervised learning algorithms that help find patterns in large datasets.

Reinforcement learning algorithms are the closest to how humans learn. They consist of three major elements: a goal, a utility function, and a reward system. A machine is placed in an environment with a predefined goal or set of goals. Rewards are assigned to actions based on the goal, and the machine is given the utility function, which helps it take the actions with the highest probability of reward and the lowest probability of risk. The aim is to reach the goal while collecting the highest rewards possible.
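The article does not name a specific reinforcement learning algorithm, so here is a minimal sketch of tabular Q-learning, one standard example, on a made-up toy problem: a vehicle in a one-dimensional corridor of five cells must learn to reach the goal cell on the right. The reward (+1 for reaching the goal) plays the role of the reward system, and the learned Q-table acts as the machine’s estimate of how useful each action is in each state. The environment, function name and hyperparameters are all assumptions chosen for illustration.

```python
import random


def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                        epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy 1-D corridor (hypothetical environment).

    States are cells 0..n_states-1 and the goal is the rightmost cell.
    Actions: 0 = move left, 1 = move right. Reward: +1 on reaching the goal.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore at random sometimes (or on ties), else exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Move the estimate toward reward + discounted value of the best next action.
            future = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


q_table = q_learning_corridor()
policy = ["right" if q_table[s][1] > q_table[s][0] else "left"
          for s in range(len(q_table) - 1)]
print(policy)
```

Early episodes are essentially random wandering; as the reward propagates back through the table, the greedy policy settles on moving right from every cell.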

So, what type of machine learning algorithm do autonomous vehicles use? The answer: all of the above. It is tempting to put autonomous vehicles into the reinforcement learning box, but given the complexity of the tasks a self-driving car has to handle, some form of each type is used, each suited to different kinds of problems.

Following are some ML algorithms commonly used by autonomous vehicles, grouped by environment or task:

Decision matrix algorithms

These algorithms identify and analyse the relationships between different types of data in a given dataset. Based on these relationships, decision matrix algorithms assign a score to each possible decision that could be made given the data. For instance, should the car brake if an obstacle is 200 metres ahead? And if so, how hard? Decision matrix algorithms help autonomous vehicles make such crucial decisions, with a confidence level based on the estimated probability of error for each decision.
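The article does not say how these scores are computed, so the following is a minimal Python sketch of a classic weighted decision matrix, one simple way to realise the idea: each braking option is rated against a few criteria, the ratings are multiplied by criterion weights, and the option with the highest total wins. The criteria, weights and 0-10 ratings are invented for illustration; in a real vehicle such ratings would come from sensor data and learned models.

```python
# Hypothetical criterion weights (summing to 1): how much each criterion matters.
WEIGHTS = {"avoid_collision": 0.6, "passenger_comfort": 0.15, "rear_end_safety": 0.25}

# Hypothetical 0-10 ratings (higher is better) of each braking option against
# each criterion, for an obstacle 200 metres ahead.
OPTIONS = {
    "no_brake":    {"avoid_collision": 2,  "passenger_comfort": 10, "rear_end_safety": 10},
    "light_brake": {"avoid_collision": 8,  "passenger_comfort": 8,  "rear_end_safety": 8},
    "hard_brake":  {"avoid_collision": 10, "passenger_comfort": 2,  "rear_end_safety": 3},
}


def weighted_score(ratings: dict) -> float:
    """Sum of rating x weight over all criteria."""
    return sum(WEIGHTS[criterion] * rating for criterion, rating in ratings.items())


def decide(options: dict) -> tuple:
    """Score every option and return (best_option, all_scores)."""
    scores = {name: weighted_score(ratings) for name, ratings in options.items()}
    best = max(scores, key=scores.get)
    return best, scores


best, scores = decide(OPTIONS)
print(best, scores)
```

With these made-up numbers, light braking wins because it balances collision avoidance against comfort and the risk of being rear-ended; changing the weights can change the decision, which is why they need careful tuning.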

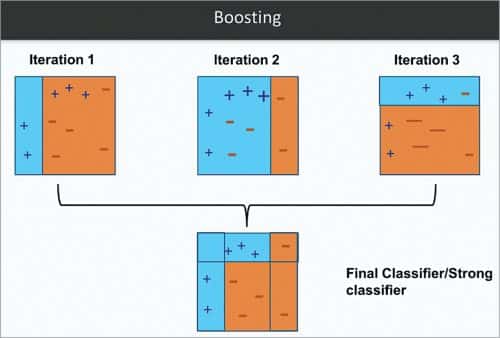

The AdaBoost algorithm is a good example of a decision matrix implementation. As shown in Fig. 2, Adaptive Boosting, or AdaBoost, is a pattern recognition algorithm that learns to differentiate between patterns by combining many simple ‘weak’ classifiers. After each round, it gives more ‘weight’ or priority to the training examples that were misclassified, that is, where ambiguity is the highest, so that the next classifier concentrates on them. This continues until it has eliminated as many errors as possible from its decision making.
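Here is a minimal pure-Python sketch of AdaBoost, assuming one-feature ‘decision stumps’ (a single threshold test) as the weak classifiers, which is the textbook choice. The toy data, whose label flips twice along one axis so that no single threshold can separate it, is made up; a production system would use a library implementation on real sensor features.

```python
import math


def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump.

    A stump predicts `polarity` when x >= threshold, otherwise -polarity.
    """
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if (polarity if x >= threshold else -polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=10):
    """Train AdaBoost with decision stumps; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, polarity)
    for _ in range(rounds):
        error, threshold, polarity = train_stump(xs, ys, weights)
        if error >= 0.5:
            break  # no weak classifier better than chance
        error = max(error, 1e-10)  # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote strength
        ensemble.append((alpha, threshold, polarity))
        # Raise the weights of misclassified points, lower the rest, renormalise.
        for i, (x, y) in enumerate(zip(xs, ys)):
            pred = polarity if x >= threshold else -polarity
            weights[i] *= math.exp(-alpha * y * pred)
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * (p if x >= t else -p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

The best single stump gets two of the six points wrong, but after three rounds of reweighting, the weighted vote of three stumps classifies all six correctly.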

Clustering algorithms

As discussed previously, clustering is a form of unsupervised learning that helps recognise difficult patterns among data points in highly ambiguous datasets. Even though self-driving vehicles use high-resolution cameras, the environment, weather, lighting and many other variables can reduce the clarity of the images, making object detection a difficult task. Clustering algorithms can help recognise objects in such scenarios by grouping related data points together. This can potentially save lives and keep the vehicle efficient even in unfavourable conditions.

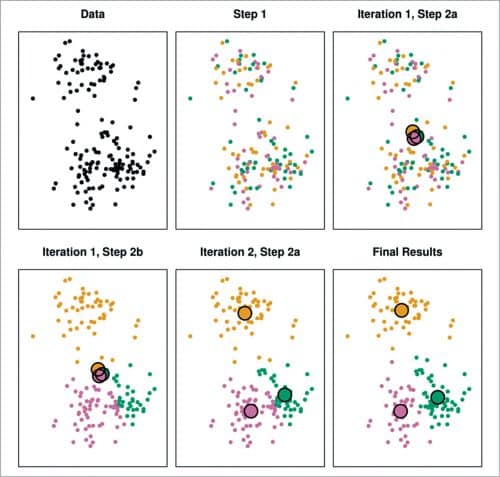

A common clustering algorithm used for this purpose is k-means. K-means divides the data points into k clusters, each defined by a central point called a centroid. A data point belongs to the cluster whose centroid is closest to it. The algorithm alternates between two steps: assigning every data point to its nearest centroid, and moving each centroid to the mean of the points assigned to it. It repeats these steps until the assignments stop changing, at which point each cluster can correspond to a particular object in the image. Fig. 3 shows how k-means clusters the data with each iteration.
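The two alternating steps of k-means can be sketched in a few lines of pure Python. This is plain k-means (Lloyd’s algorithm) on made-up 2-D points, which could stand in for, say, the image positions of detected features. For reproducibility it simply uses the first k points as initial centroids, whereas practical implementations usually use a smarter initialisation such as k-means++.

```python
import math


def kmeans(points, k, max_iters=100):
    """Plain k-means (Lloyd's algorithm) on 2-D points.

    Returns (centroids, labels), where labels[i] is the cluster of points[i].
    """
    centroids = [tuple(p) for p in points[:k]]  # naive init: first k points
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = (sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members))
    return centroids, labels


points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (8.5, 9), (9, 8)]
centroids, labels = kmeans(points, k=2)
print(labels, centroids)
```

Even though both initial centroids start inside the same group here, the update steps pull one of them across to the other group within two iterations.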

Data reduction algorithms

The images that are obtained from different sensors in the autonomous vehicle contain a lot of noise. By noise we mean data that is irrelevant to the task at hand. For instance, a self-driving car may detect a cat crossing the street. But in order to detect that, a whole bunch of other data that the sensor catches first needs to be disregarded and only the data related specifically to the cat on the road needs to be considered. In such scenarios, we use what are called data reduction algorithms that help in removing noise from the data and recognising relevant patterns.

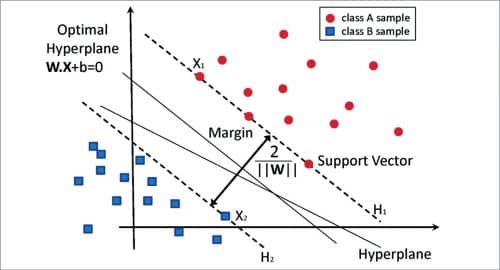

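Principal Component Analysis (PCA) and Support Vector Machines (SVMs) are good examples of algorithms used for this purpose. PCA reduces the data to the few directions (principal components) that capture most of its variation, discarding the rest as noise. An SVM, on the other hand, is a classifier: it draws a hyperplane between two distinct classes of data, thus differentiating between different patterns. During training, the boundary is adjusted until the optimal one, the hyperplane with the widest possible margin between the two classes, is found.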

The hyperplane is defined by the data points closest to the boundary, which are referred to as support vectors, as shown in Fig. 4.
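Of the two techniques named above, PCA is the classic data reduction method, so here is a minimal pure-Python sketch of it for two-dimensional points: it centres the data, computes the 2×2 covariance matrix, finds the direction of greatest variance in closed form, and projects each point onto that direction, turning two numbers per point into one. The function names are hypothetical; for real, higher-dimensional sensor data you would use a linear algebra library.

```python
import math


def principal_direction(points):
    """Return (mean, unit vector of greatest variance) for a list of 2-D points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Entries of the 2x2 sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    # For a symmetric 2x2 matrix, the eigenvector with the largest eigenvalue
    # lies at the angle theta where tan(2 * theta) = 2 * sxy / (sxx - syy).
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (mx, my), (math.cos(theta), math.sin(theta))


def reduce_to_1d(points, mean, direction):
    """Project each centred point onto the principal direction (2 numbers -> 1)."""
    return [(x - mean[0]) * direction[0] + (y - mean[1]) * direction[1]
            for x, y in points]


points = [(0, 0), (1, 2), (2, 4), (3, 6)]  # made-up points on the line y = 2x
mean, direction = principal_direction(points)
print(direction, reduce_to_1d(points, mean, direction))
```

Because these made-up points lie exactly on a line, the single projected coordinate loses no information; with noisy data, the discarded second component would be mostly noise.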

Regression based algorithms

As discussed previously, regression algorithms are a form of supervised learning algorithm trained on ‘tagged’ datasets with predefined independent and dependent variables. By learning the relationship between these variables over many iterations, regression algorithms become good at making predictions. In the case of autonomous vehicles, the distance to a particular object can be estimated from how its size and position change from one image to the next.

To make this simpler, consider a traffic light. If the self-driving car takes an image of an upcoming traffic light from 500 metres away, the light will have a certain size and position in that image. As the car gets closer, say 200 metres away, the light will appear significantly larger in the latest images, and its position in the frame will have shifted. This data can be collected into a dataset on which regression can be applied to predict the distance of the traffic light from the car. With this information, the car can decelerate accordingly.

Simple linear regression is a common form of regression analysis in which the value of a dependent variable is predicted from its relationship with an independent variable. If two variables in a dataset show a strong positive or negative linear relationship, a line can be fitted to the data and used to predict the dependent variable for a given value of the independent variable. With each iteration, the line is adjusted until the error is significantly reduced and the fit is optimised, as shown in Fig. 5.
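Here is a minimal pure-Python sketch combining the traffic-light example with simple linear regression. It assumes a pinhole-camera model, under which an object’s distance is roughly proportional to 1 ÷ (its apparent height in pixels), so regressing distance on the inverse of the apparent height gives a straight line. The measurements are invented, and rather than iterating, the sketch uses the closed-form least-squares solution for the slope and intercept, which is the best-fit line an iterative method would converge to.

```python
def fit_line(xs, ys):
    """Closed-form ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


# Hypothetical training data: apparent height of a traffic light (pixels)
# measured at known distances (metres). Values are made up and slightly noisy.
heights_px = [20.2, 49.5, 101.0, 198.0]
distances_m = [500.0, 200.0, 100.0, 50.0]

# Pinhole-camera assumption: distance is roughly linear in 1 / apparent height.
inverse_heights = [1.0 / h for h in heights_px]
slope, intercept = fit_line(inverse_heights, distances_m)


def predict_distance(height_px):
    """Estimate distance (m) to a traffic light that appears height_px tall."""
    return slope * (1.0 / height_px) + intercept


print(round(predict_distance(40.0), 1))  # a light that appears 40 px tall
```

Transforming the input (here, taking 1 ÷ height) is what lets a straight-line model capture a relationship that is not linear in the raw pixel size.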

The big players

The autonomous vehicle industry has an interesting mix of tech giants and startups racing against time to be first. While all of them are working to build self-driving vehicles, they don’t all share the same goals.

- Waymo. A subsidiary of Alphabet, Google’s parent company, Waymo is leading the race in developing self-driving cars. Having driven over 32 million kilometres (20 million miles) on public roads, Waymo has spent the past few years testing its technology in 25 different cities across the USA. This is far more than competitors such as Baidu, whose vehicles have so far driven over 1.6 million kilometres (1 million miles). Waymo intends to use its driverless technology for a ride-hailing service similar to Uber: you download an app and use it to hail a Waymo ride. The service is currently in its beta phase and available only in the Phoenix metro area in Arizona, USA.

- General Motors (GM). General Motors plans to launch its own taxi service using autonomous vehicles. With its long-standing reputation and outrageous amounts of financial backing, GM requires no formal introduction in the automobile industry. Unlike Waymo, GM engineers its cars entirely in-house. Even the sensors used in the car are manufactured either by GM or by subsidiaries it owns.

- Baidu. Baidu, the Chinese equivalent of Google, is heavily invested in building its own autonomous vehicle technology. The major difference is that while Waymo is focused on autonomous cars, Baidu is focused on building a commercial autonomous bus service that will run across China.

- Yandex. The Russian equivalent of Google, Yandex has built its own cab-hailing service using autonomous vehicle technology. Its vehicles have driven over 8 million kilometres (5 million miles), and the service is predicted to become a household name in Russia within the next five years.

Why is it taking so long?

With all the big players mentioned in the previous section pouring money, manpower and insane amounts of computational power into the technology, one can’t help but wonder why it is taking so long for self-driving cars to become an everyday phenomenon on the streets.

We have the tech, we have the big data, and we have the deep learning models in place. So, what is the problem? Short answer: The technology is not ready, nor are we.

It is a misconception to assume that the technology is ready. Even with millions of kilometres driven with the help of amazing gadgets, there are certain pitfalls that we are yet to overcome. Additionally, we don’t just require the technology, but also the infrastructure, legislation, and a change of mindset to handle this massive transformation.

Make no mistake, in the automobile industry, the impact of a self-driving car is no less than the invention of the wheel. It completely transforms not just how transportation is used, but many different aspects of our lives.

Technical limitations

Listed below are some of the major reasons that are causing the delay in making this technology available to everyone.

1. Range, speed, and resolution of ultrasonic sensors. While we do have faster and more agile sensors like radar and lidar, a large part of the data collected by autonomous vehicles still comes from ultrasonic sensors. A major technical snag with ultrasonic sensors is that their effectiveness drops sharply once the vehicle exceeds a certain speed. So, the faster the vehicle goes, the less reliable the ultrasonic sensor becomes.

Additionally, ultrasonic sensors have a range of only two metres, which leaves barely enough time for data collection, computation, and critical decision making. Another drawback of ultrasonic sensors is their resolution, which is far lower than that of other sensors such as radar. This creates ambiguity in the data and leads to errors in decision making.

2. Range, resolution, and detection issues with radar. Radars are certainly an improvement on ultrasonic sensors but come with their own set of shortcomings. One of the major issues with radars is false alarms triggered by surrounding metal objects. The sensor can often mistake a metal object for another vehicle when, in reality, the object could be anything. To overcome this, a lot of computational power has to be spent correlating the radar data with data from other sensors to weed out the false alarms.

The range of a radar varies from 5 metres to 200 metres, depending on its quality. But even with the most expensive radar sensors, resolution remains a major issue.

3. Lidar pricing, range, and issues with bad weather. The sensors that autonomous vehicles depend on most heavily are lidars. With their higher resolution and accuracy, lidars are, in a sense, what made self-driving vehicles a reality. But it is this very accuracy that has become their own enemy.

Lidars are not good at handling rain. As we have already discussed, lidars overcome the pitfalls of radars by firing short laser pulses that are reflected back to the sensor when they hit an obstacle. This tells the lidar the object’s shape, size, and distance from the vehicle. It turns out that lidars are sensitive enough for their laser pulses to bounce off the droplets of water falling in the rain. When these reflections return to the lidar, it mistakes the droplets for an object. Correcting this critical error is still an open problem, and the workarounds are expensive in terms of both computational power and resources.

Additionally, lidars have the same range as radars, that is, 200 metres. Though more accurate than radars, lidars can see no further. Another complication is pricing. Lidars can be extremely expensive, which makes companies reluctant to install them, as they push up the overall price of the product.

4. Range, resolution, and complexity of cameras. High-resolution cameras are still more economical than lidars, but they come with their own unique set of challenges. For starters, the trade-off between range and resolution differs hugely between ultrasonic sensors, radars, lidars, and high-definition cameras. At longer distances, cameras give a higher resolution than any other sensor on the car. Because each sensor reports the same object at its own limited resolution, and the camera adds a large volume of high-resolution data on top, combining all of it to define a single object is an extremely computationally expensive task.

Add to this the tight time limit for decision making (which can be a matter of milliseconds) and the lack of infrastructure, especially 5G Internet, and the problem is compounded further. Another major problem with camera images is that processing them requires very advanced machine learning models trained on huge datasets. This creates a two-fold problem: first, the allocation of computational resources, and second, the allocation of memory and storage.
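To put the sensor ranges discussed above into perspective, here is a small Python sketch of how much time each range leaves for computation and decision making. It is a simplification that assumes a stationary obstacle, a constant speed, and detection exactly at the edge of the sensor’s range. The 2-metre and 200-metre ranges come from the text; the 50 km/h speed and the function name are arbitrary examples.

```python
def reaction_window(range_m: float, speed_kmh: float) -> float:
    """Seconds between an obstacle entering sensor range and the car reaching it.

    Simplifying assumptions: the obstacle is stationary, the car's speed is
    constant, and the obstacle is detected as soon as it is within range.
    """
    speed_mps = speed_kmh / 3.6  # convert km/h to m/s
    return range_m / speed_mps


# Ranges quoted in the article: ultrasonic ~2 m, radar/lidar up to ~200 m.
for sensor, range_m in [("ultrasonic", 2.0), ("radar/lidar", 200.0)]:
    print(f"{sensor}: {reaction_window(range_m, 50.0):.2f} s at 50 km/h")
```

At 50 km/h, a two-metre range leaves less than a fifth of a second, while a 200-metre range leaves over 14 seconds, which is why the short range of ultrasonic sensors is such a limitation at speed.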

Human/societal limitations

As mentioned earlier, we are not ready for autonomous cars yet. Following are some of the hurdles we face:

1. We don’t have the mindset for self-driving cars. For over a hundred years, cars have been built to be driven. So if you know how to drive, buying an autonomous car requires a complete shift in mindset. You know how you hit an imaginary brake whenever you are a passenger in someone else’s car? No matter how much you trust the driver, or how familiar you are with his/her driving, you cannot help feeling a sense of panic whenever they race to make it through the green light before it changes. Now imagine the same situation, only this time there is no driver and you are sitting on the passenger side. No matter how excited you might be about self-driving cars, it is going to be a hard adjustment to make.

2. We are not educated about autonomous driving. There is a reason why most of the tech giants want to offer self-driving cars as a cab-hailing service: that way, you don’t have to spend any time learning how to handle the car. You simply order a cab using an app, get in, and get out when you reach your destination. That’s it. Owning a self-driving car, however, is a whole different scenario. There are a whole bunch of safety features that you will have to learn to use. You will also have to be educated and trained to handle emergencies that could occur with the car. It is a technology, so it will fail you from time to time, and in the early days there will be even more snags. Owning a self-driving car is not going to be a picnic.

3. We don’t have the money. Self-driving cars cost … A LOT. The lidar, radar, cameras, and a whole bunch of other sensors and technology that have to go into them will massively increase the cost of owning a self-driving car. This is yet another hurdle standing between these cars and everyday use on the streets. For self-driving cars to become common, they will have to be built with an eye on what the common man can afford, not just in terms of purchasing the vehicle but also maintaining it. Self-driving cars cannot be just for the elite, like owning a Tesla.

4. We don’t trust technology. If you are following the news right now, then you are aware of the fiasco that is the Covid vaccine rollout in many countries that have the vaccines. A huge part of it is due to mismanagement by governments. But a significant problem is also the lack of trust that people have in medical technology when it comes to their own health. What is the correlation between the vaccine rollout and self-driving vehicles? A major one. People don’t trust technology, especially when it is made by the industry giants. If a substantial part of the population is not willing to get a vaccine to save their lives, will they be willing to put their lives in the hands of a self-driving car built by big tech? Not everyone is thrilled about self-driving cars, and these concerns are genuine. A huge media campaign will be required to build public trust in this technology, and this can take a long time.

5. We don’t have the infrastructure. Most countries in the world do not have the right infrastructure to support self-driving cars. The technology is good, but it is still far from being able to drive on unmapped dirt roads in the mountains or deserts. Network connectivity is also an ongoing issue across the world; even developed countries such as the USA have many connectivity gaps. For self-driving cars to be fully functional, we require solid, uninterrupted 5G connectivity throughout the country and wherever else we may choose to go by car. We are still far from achieving this goal.

6. We don’t have the rules. A complete overhaul of transportation legislation is required in every country. And this is not a one-and-done thing. Overhauling the legislation will be an ongoing process for at least twenty years after self-driving cars arrive. Why? Because we are going to learn from our mistakes. The first version of the legislation will inevitably have many issues and will have to be altered many times before we get it right. Moreover, self-driving cars cannot arrive on the scene before this is done. A complete change of driving rules is a necessary, albeit tough, prerequisite for autonomous vehicle technology.

What is the future?

I am not a ‘tech swami.’ I cannot give you a definitive answer as to when the self-driving car will ‘arrive on the scene.’ But I can give an answer that is rooted in probability and research. I understand that there is a shared sense of lethargy and pessimism in public opinion about self-driving cars right now. What was a ‘big deal’ in 2010 doesn’t really seem like one any more.

But a self-driving car cannot and should not be equated with a piece of technology like ‘Google Glass.’ Self-driving cars are here to stay. But the transition isn’t going to be as fast as it was with smartphones. Instead, it is going to be a slow and painful transition in most countries. There are going to be a lot of technological and social snags to deal with before this technology completely takes over.

However, as far as the technology behind autonomous vehicles is concerned, the self-driving car is most definitely the next step in the evolution of the automobile industry. Experts currently estimate that by 2050 we could see a massive reduction, of up to 90%, in road accidents. Is the estimate too optimistic? I don’t think so.

From the perspective of an AI researcher, I feel safe enough to say that the one thing we don’t lack is data. We have a lot, and I mean A LOT, of data to improve our deep learning models. We are in the golden period of AI, and although AI is already being used everywhere, we are only seeing the beginning of its potential. Given the speed at which AI research is moving, many of the challenges I mentioned earlier can be tackled using AI itself. For instance, we won’t need a complete overhaul of our infrastructure if AI learns to drive in current conditions. AI can also help reduce the cost of the technology by getting the job done with fewer resources.

The sheer power and potential of AI makes me highly positive and optimistic about autonomous vehicle technology. And while we might go through many phases of transformation before we are able to call this technology ‘mature,’ we certainly can and will get there.

Mir H.S. Quadri is a research analyst specialising in artificial intelligence and machine learning. He has a deep love for analysing technological trends and understanding their implications. A FOSS enthusiast, he has contributed to several open source projects.