Here’s a primer on generative AI, thoughts on how you can empower yourself with this tech, and an insight into how Stable Diffusion has achieved success as an open source effort

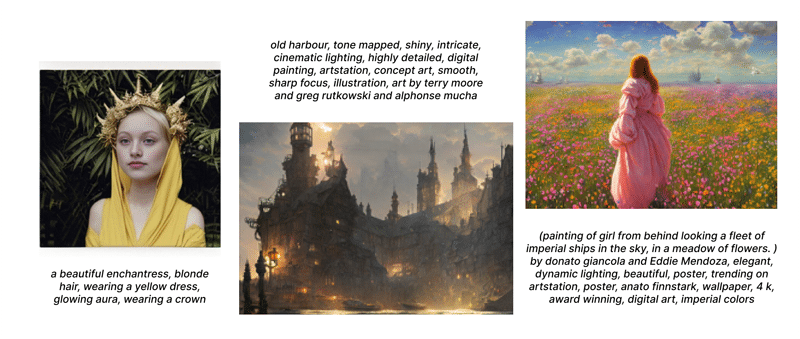

Take a look at Fig. 1. Can you guess what these three images have in common? From a quick look, you may think that it’s just the fantastical art style. What if I told you that none of them were drawn by a human artist but were generated on-the-fly within seconds by an AI model?

This is the power of generative AI—models capable of generating novel content based on a broad and abstract understanding of the world, driven by training over immense data sets. The ‘artist’ of the above images is a model named ‘Stable Diffusion’ released as open source software by the organisation Stability AI. All you have to give it is a text prompt describing the image you want in detail, and it will do the rest for you. If you’re curious, I’ve dropped example prompts that can generate the images in Fig. 2.

Stable Diffusion is not the first of its kind. OpenAI’s ‘DALL-E’ beat it to the chase as it was released a few months prior. However, looking at the state of the art, it is the first generative AI model capable of image synthesis and manipulation that was released to the public as FOSS software while also being practical to adopt.

These models are not limited to images. The first generation (and possibly the most popular use case) of these models targets text generation, and sometimes more specifically code generation. Recent advances even include speech and music creation. Zooming out into a timeline with some of the major generative AI milestones (Fig. 3), we see that this technology has been out for a while, but only gained monumental traction recently.